Amy R. Reibman

Shadow Augmentation for Handwashing Action Recognition: from Synthetic to Real Datasets

Oct 04, 2024

Abstract: Video analytics systems designed for deployment in outdoor conditions can be vulnerable to many environmental changes, particularly changes in shadow. Existing works have shown that shadow and the distribution shift it introduces can cause system performance to degrade sharply. In this paper, we explore mitigation strategies for shadow-induced breakdown points of an action recognition system, using the specific application of handwashing action recognition for improving food safety. Using synthetic data, we explore the optimal shadow attributes to be included when training an action recognition system in order to improve performance under different shadow conditions. Experimental results indicate that heavier and larger shadows are more effective at mitigating the breakdown points. Building upon this observation, we propose a shadow augmentation method to be applied to real-world data. Results demonstrate the effectiveness of the shadow augmentation method for model training and the consistency of its effectiveness across different neural network architectures and datasets.
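As a rough illustration of what a shadow augmentation step can look like, the sketch below darkens a random rectangular region of a grayscale frame. It is not the method from the paper: the rectangular shadow shape, the function names `add_shadow` and `random_shadow`, and the parameters `min_darkness` and `min_fraction` are all assumptions made for this example. The defaults are biased toward heavy, large shadows only because the abstract reports those as more effective.

```python
import random


def add_shadow(image, top, left, height, width, darkness):
    """Return a copy of a grayscale image with a rectangle darkened.

    image is a list of rows of pixel values in 0..255 (hypothetical format).
    darkness is in [0, 1]: 0 leaves pixels unchanged, 1 makes them black.
    """
    if not 0.0 <= darkness <= 1.0:
        raise ValueError("darkness must be in [0, 1]")
    out = [row[:] for row in image]  # copy so the input frame is untouched
    for r in range(max(top, 0), min(top + height, len(out))):
        for c in range(max(left, 0), min(left + width, len(out[r]))):
            out[r][c] = int(round(out[r][c] * (1.0 - darkness)))
    return out


def random_shadow(image, rng, min_darkness=0.5, min_fraction=0.5):
    """Apply one random rectangular shadow (assumed sampling scheme).

    The shadow covers at least min_fraction of each image dimension and
    has darkness of at least min_darkness.
    """
    rows, cols = len(image), len(image[0])
    height = rng.randint(max(1, int(rows * min_fraction)), rows)
    width = rng.randint(max(1, int(cols * min_fraction)), cols)
    top = rng.randint(0, rows - height)
    left = rng.randint(0, cols - width)
    darkness = rng.uniform(min_darkness, 1.0)
    return add_shadow(image, top, left, height, width, darkness)


if __name__ == "__main__":
    frame = [[200] * 4 for _ in range(4)]
    shadowed = random_shadow(frame, random.Random(0))
    for row in shadowed:
        print(row)
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible, which is convenient when comparing training runs.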

End-to-end Evaluation of Practical Video Analytics Systems for Face Detection and Recognition

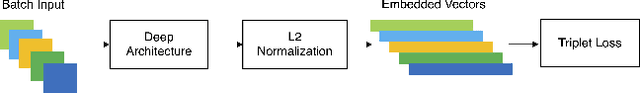

Oct 10, 2023

Abstract: Practical video analytics systems that are deployed in bandwidth-constrained environments like autonomous vehicles perform computer vision tasks such as face detection and recognition. In an end-to-end face analytics system, inputs are first compressed using popular video codecs like HEVC and then passed on to modules that perform face detection, alignment, and recognition sequentially. Typically, the modules of these systems are evaluated independently using task-specific imbalanced datasets that can misconstrue performance estimates. In this paper, we perform a thorough end-to-end evaluation of a face analytics system using a driving-specific dataset, which enables meaningful interpretations. We demonstrate how independent task evaluations, dataset imbalances, and inconsistent annotations can lead to incorrect system performance estimates. We propose strategies to create balanced evaluation subsets of our dataset and to make its annotations consistent across multiple analytics tasks and scenarios. We then evaluate the end-to-end system performance sequentially to account for task interdependencies. Our experiments show that our approach provides consistent, accurate, and interpretable estimates of the system's performance, which is critical for real-world applications.

* Accepted to Autonomous Vehicles and Machines 2023 Conference, IS&T Electronic Imaging (EI) Symposium

Illumination Variation Correction Using Image Synthesis For Unsupervised Domain Adaptive Person Re-Identification

Jan 23, 2023

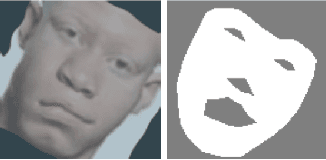

Abstract: Unsupervised domain adaptive (UDA) person re-identification (re-ID) aims to learn identity information from labeled images in source domains and apply it to unlabeled images in a target domain. One major issue with many unsupervised re-identification methods is that they do not perform well under large domain variations such as illumination, viewpoint, and occlusions. In this paper, we propose a Synthesis Model Bank (SMB) to deal with illumination variation in unsupervised person re-ID. The proposed SMB consists of several convolutional neural networks (CNN) for feature extraction and Mahalanobis matrices for distance metrics. They are trained using synthetic data with different illumination conditions such that their synergistic effect makes the SMB robust against illumination variation. To better quantify the illumination intensity and improve the quality of synthetic images, we introduce a new 3D virtual-human dataset for GAN-based image synthesis. From our experiments, the proposed SMB outperforms other synthesis methods on several re-ID benchmarks.
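For readers unfamiliar with Mahalanobis matrices as distance metrics, the sketch below computes the generic distance sqrt((x - y)^T M (x - y)) between two feature vectors. It only illustrates the standard formula. How the SMB learns or combines its matrices is not described in the abstract, and the function name `mahalanobis_distance` is an assumption for this example.

```python
import math


def mahalanobis_distance(x, y, m):
    """Distance sqrt((x - y)^T M (x - y)) for feature vectors x and y.

    m is a square matrix given as a list of rows. It is assumed to be
    positive semi-definite, so the value under the square root is >= 0.
    """
    if len(x) != len(y) or len(m) != len(x):
        raise ValueError("x, y, and m must have matching dimensions")
    diff = [a - b for a, b in zip(x, y)]
    # Matrix-vector product M @ diff.
    m_diff = [sum(m_ij * d_j for m_ij, d_j in zip(row, diff)) for row in m]
    squared = sum(d_i * v_i for d_i, v_i in zip(diff, m_diff))
    return math.sqrt(squared)


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    # With the identity matrix this reduces to the Euclidean distance.
    print(mahalanobis_distance([0.0, 0.0], [3.0, 4.0], identity))
```

With a learned matrix in place of the identity, feature dimensions are reweighted and correlated before distances are compared.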

Turkey Behavior Identification System with a GUI Using Deep Learning and Video Analytics

Feb 09, 2021

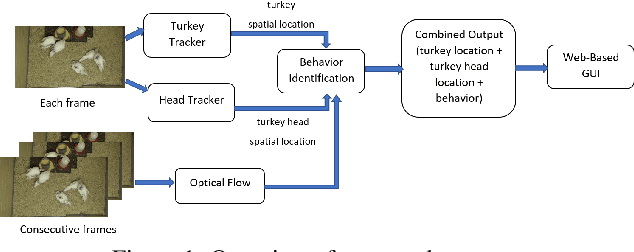

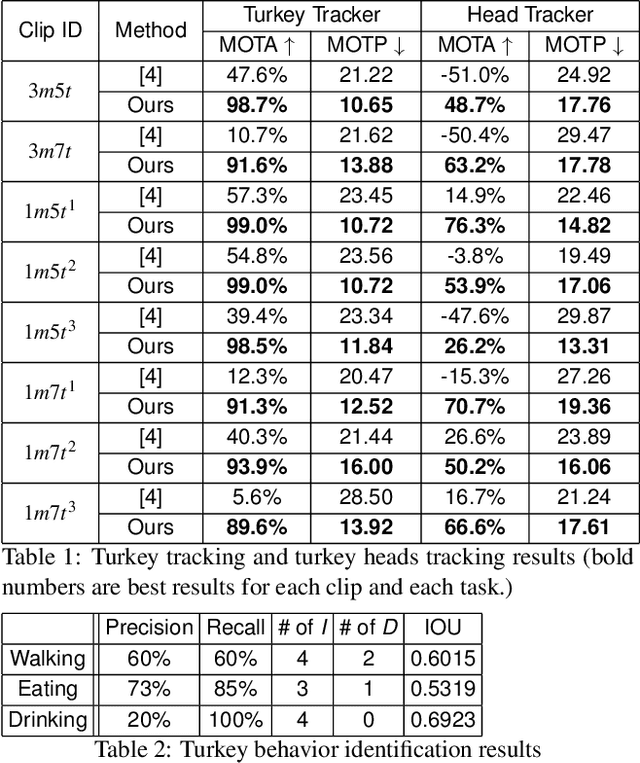

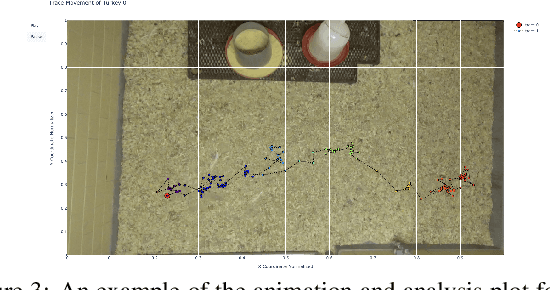

Abstract: In this paper, we propose a video analytics system to identify the behavior of turkeys. Turkey behavior provides evidence to assess turkey welfare, which can be negatively impacted by uncomfortable ambient temperature and various diseases. In particular, healthy and sick turkeys behave differently in terms of the duration and frequency of activities such as eating, drinking, preening, and aggressive interactions. Our system incorporates recent advances in object detection and tracking to automate the process of identifying and analyzing turkey behavior captured by commercial-grade cameras. We combine deep-learning and traditional image processing methods to address challenges in this practical agricultural problem. Our system also includes a web-based user interface to create visualizations of automated analysis results. Together, we provide an improved tool for turkey researchers to assess turkey welfare without the time-consuming and labor-intensive manual inspection.

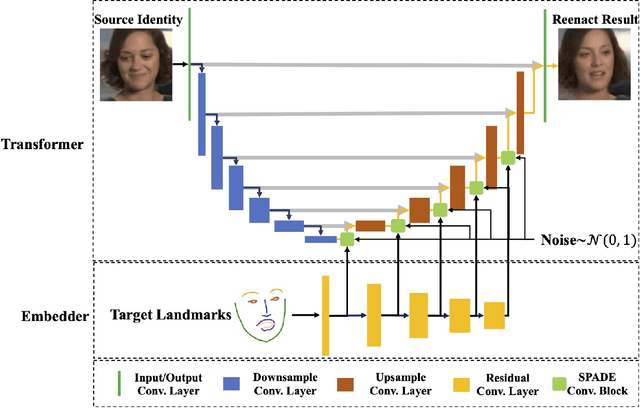

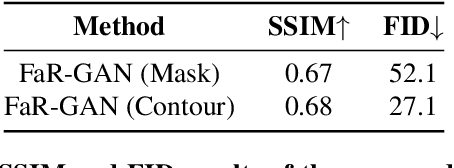

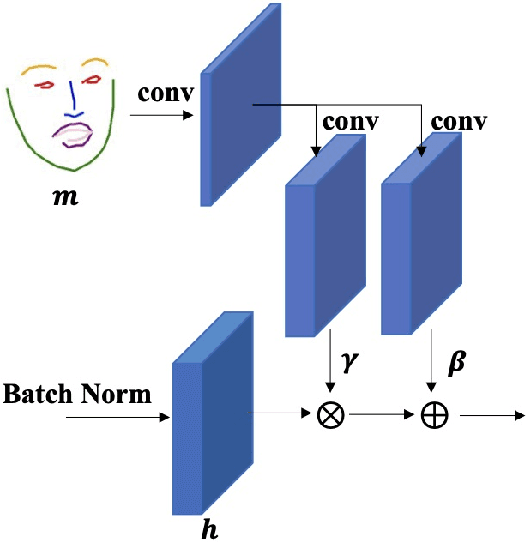

FaR-GAN for One-Shot Face Reenactment

May 13, 2020

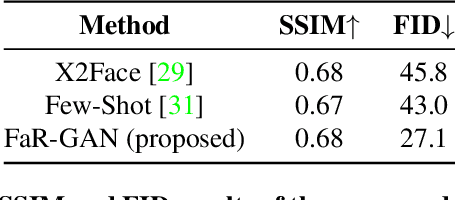

Abstract: Animating a static face image with target facial expressions and movements is important in the area of image editing and movie production. This face reenactment process is challenging due to the complex geometry and movement of human faces. Previous work usually requires a large set of images from the same person to model the appearance. In this paper, we present a one-shot face reenactment model, FaR-GAN, that takes only one face image of any given source identity and a target expression as input, and then produces a face image of the same source identity but with the target expression. The proposed method makes no assumptions about the source identity, facial expression, head pose, or even image background. We evaluate our method on the VoxCeleb1 dataset and show that our method is able to generate a higher quality face image than the compared methods.

A Utility-Preserving GAN for Face Obscuration

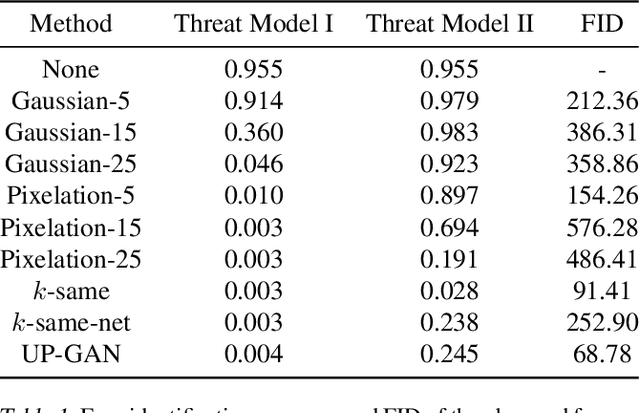

Jun 27, 2019

Abstract: From TV news to Google StreetView, face obscuration has been used for privacy protection. Due to recent advances in the field of deep learning, obscuration methods such as Gaussian blurring and pixelation are not guaranteed to conceal identity. In this paper, we propose a utility-preserving generative model, UP-GAN, that is able to provide an effective face obscuration, while preserving facial utility. By utility-preserving we mean preserving facial features that do not reveal identity, such as age, gender, skin tone, pose, and expression. We show that the proposed method achieves the best performance in terms of obscuration and utility preservation.

Robustness Analysis of Face Obscuration

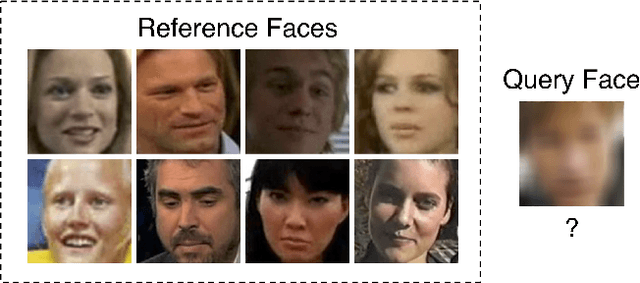

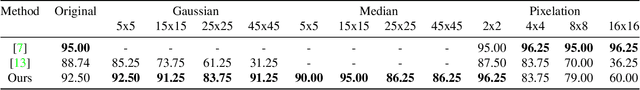

May 13, 2019

Abstract: Face obscuration is often needed by law enforcement or mass media outlets to provide privacy protection. Sharing sensitive content where the obscuration or redaction technique may have failed to completely remove all identifiable traces can lead to life-threatening consequences. Hence, it is critical to be able to systematically measure the face obscuration performance of a given technique. In this paper, we propose to measure the effectiveness of three obscuration techniques: Gaussian blurring, median blurring, and pixelation. We do so by identifying the redacted faces under two scenarios: classifying an obscured face into a group of identities and comparing the similarity of an obscured face with a clear face. Threat modeling is also considered to provide a vulnerability analysis for each studied obscuration technique. Based on our evaluation, we show that pixelation-based face obscuration approaches are the most effective.
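To make one of the three techniques concrete, here is a minimal sketch of pixelation on a grayscale image: each block of pixels is replaced by its mean value. This is a generic illustration, not the evaluation code from the paper. The function name `pixelate`, the nested-list image format, and the use of an integer mean are assumptions for this example.

```python
def pixelate(image, block):
    """Return a pixelated copy of a grayscale image.

    image is a list of rows of pixel values in 0..255 (hypothetical format).
    Each block x block tile is replaced by the integer mean of its pixels.
    Tiles at the right and bottom edges may be smaller than block.
    """
    if block < 1:
        raise ValueError("block must be at least 1")
    rows, cols = len(image), len(image[0])
    out = [row[:] for row in image]  # copy so the input is untouched
    for r0 in range(0, rows, block):
        for c0 in range(0, cols, block):
            cells = [
                (r, c)
                for r in range(r0, min(r0 + block, rows))
                for c in range(c0, min(c0 + block, cols))
            ]
            mean = sum(image[r][c] for r, c in cells) // len(cells)
            for r, c in cells:
                out[r][c] = mean
    return out


if __name__ == "__main__":
    face = [[0, 100, 50, 50], [100, 200, 50, 50]]
    for row in pixelate(face, 2):
        print(row)
```

Larger values of `block` discard more detail, which is the knob that trades identity concealment against how much of the original image remains usable.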
