Amin Haeri

Generative AI Enhanced Financial Risk Management Information Retrieval

Apr 10, 2025

Abstract: Risk management in finance involves recognizing, evaluating, and addressing financial risks to maintain stability and ensure regulatory compliance. Extracting relevant insights from extensive regulatory documents is a complex challenge requiring advanced retrieval and language models. This paper introduces RiskData, a dataset specifically curated for finetuning embedding models in risk management, and RiskEmbed, a finetuned embedding model designed to improve retrieval accuracy in financial question-answering systems. The dataset is derived from 94 regulatory guidelines published by the Office of the Superintendent of Financial Institutions (OSFI) from 1991 to 2024. We finetune a state-of-the-art Sentence-BERT embedding model to enhance domain-specific retrieval performance, typically for Retrieval-Augmented Generation (RAG) systems. Experimental results demonstrate that RiskEmbed significantly outperforms general-purpose and financial embedding models, achieving substantial improvements in ranking metrics. By open-sourcing both the dataset and the model, we provide a valuable resource for financial institutions and researchers aiming to develop more accurate and efficient risk management AI solutions.
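To make the retrieval setting concrete, the following is a minimal sketch of how an embedding model is typically used to rank passages for a question and how one ranking metric is computed. It is not the paper's code: the vectors are hand-written stand-ins for embeddings (no RiskEmbed model is loaded), the function names `cosine_rank` and `reciprocal_rank` are hypothetical, and reciprocal rank is chosen only as one common example of a ranking metric, since the abstract does not name the metrics used.

```python
import numpy as np

def cosine_rank(query_vec, doc_vecs):
    """Return document indices sorted from most to least similar to the query."""
    q = query_vec / np.linalg.norm(query_vec)
    d = doc_vecs / np.linalg.norm(doc_vecs, axis=1, keepdims=True)
    scores = d @ q  # cosine similarity of each document with the query
    return np.argsort(-scores, kind="stable")

def reciprocal_rank(ranking, relevant_idx):
    """1 / (1-based position of the relevant document in the ranking)."""
    position = int(np.where(ranking == relevant_idx)[0][0]) + 1
    return 1.0 / position

# Toy 3-dimensional "embeddings" (assumed values, for illustration only).
doc_vecs = np.array([
    [1.0, 0.0, 0.0],   # passage 0
    [0.0, 1.0, 0.0],   # passage 1
    [0.7, 0.7, 0.0],   # passage 2
])
query_vec = np.array([0.9, 0.1, 0.0])

ranking = cosine_rank(query_vec, doc_vecs)
rr = reciprocal_rank(ranking, relevant_idx=0)
print(ranking, rr)
```

In a RAG pipeline the top-ranked passages would be passed to a language model as context, so a finetuned embedding model that places the relevant passage higher directly improves what the generator sees.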

Subspace Graph Physics: Real-Time Rigid Body-Driven Granular Flow Simulation

Nov 18, 2021

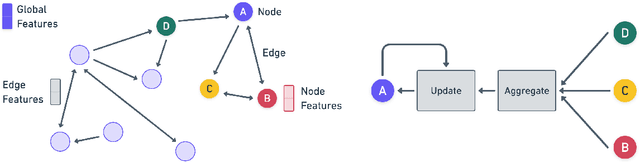

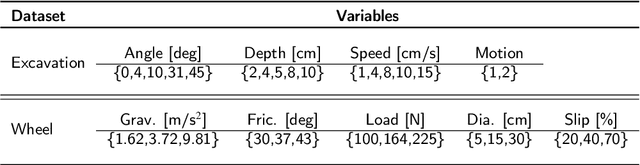

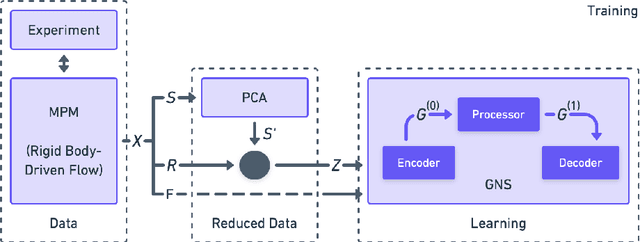

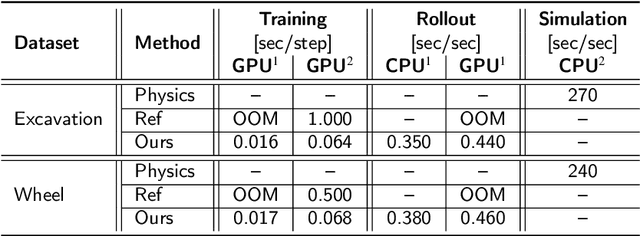

Abstract: An important challenge in robotics is understanding the interactions between robots and deformable terrains that consist of granular material. Granular flows and their interactions with rigid bodies still pose several open questions. A promising direction for accurate, yet efficient, modeling is the use of continuum methods. A newer direction for real-time physics modeling is the use of deep learning. This research advances machine learning methods for modeling rigid body-driven granular flows, for application to terrestrial industrial machines as well as space robotics (where the effect of gravity is an important factor). In particular, this research considers the development of a subspace machine learning simulation approach. To generate training datasets, we utilize our high-fidelity continuum method, the material point method (MPM). Principal component analysis (PCA) is used to reduce the dimensionality of the data. We show that the first few principal components of our high-dimensional data retain almost all of the variance in the data. A graph network simulator (GNS) is trained to learn the underlying subspace dynamics. The learned GNS is then able to predict particle positions and interaction forces with good accuracy. More importantly, PCA significantly enhances the time and memory efficiency of GNS in both training and rollout. This enables GNS to be trained using a single desktop GPU with moderate VRAM. This also makes the GNS real-time on large-scale 3D physics configurations (700x faster than our continuum method).
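The PCA step described in the abstract can be illustrated with a small sketch. It assumes that each row of the data matrix is one flattened simulation snapshot; the abstract does not specify the data layout, so this is an assumption. The data here is synthetic (a low-rank signal plus small noise), not MPM output, and the helper names `pca_fit`, `to_subspace`, and `from_subspace` are hypothetical. The GNS itself is not shown; the sketch only covers projecting into the subspace and mapping back.

```python
import numpy as np

def pca_fit(X, n_components):
    """Fit PCA via SVD; return the mean, the top components, and variance ratios."""
    mean = X.mean(axis=0)
    Xc = X - mean
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = S**2 / np.sum(S**2)  # fraction of variance per component
    return mean, Vt[:n_components], explained

def to_subspace(X, mean, components):
    """Project full-dimensional snapshots onto the principal subspace."""
    return (X - mean) @ components.T

def from_subspace(Z, mean, components):
    """Map subspace coordinates back to the full-dimensional space."""
    return Z @ components + mean

# Synthetic stand-in data: 200 snapshots of 60 features with rank-3 structure.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 3))
mixing = rng.normal(size=(3, 60))
X = latent @ mixing + 1e-3 * rng.normal(size=(200, 60))

mean, components, explained = pca_fit(X, n_components=3)
Z = to_subspace(X, mean, components)        # what a subspace model would learn on
X_rec = from_subspace(Z, mean, components)  # recovered full-space states
retained = explained[:3].sum()
print(Z.shape, retained)
```

A learned simulator operating on `Z` rather than `X` handles far fewer values per step, which is the source of the time and memory savings the abstract reports; predictions are mapped back to full space only when particle positions are needed.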
