Alfredo De la Fuente

Discretized Approximate Ancestral Sampling

May 09, 2025

Abstract:The Fourier Basis Density Model (FBM) was recently introduced as a flexible probability model for band-limited distributions, i.e. ones which are smooth in the sense of having a characteristic function with limited support around the origin. Its density and cumulative distribution functions can be efficiently evaluated and trained with stochastic optimization methods, which makes the model suitable for deep learning applications. However, the model lacked support for sampling. Here, we introduce a method inspired by discretization-interpolation methods common in Digital Signal Processing, which directly take advantage of the band-limited property. We review mathematical properties of the FBM, and prove quality bounds of the sampled distribution in terms of the total variation (TV) and Wasserstein-1 divergences from the model. These bounds can be used to inform the choice of hyperparameters to reach any desired sample quality. We discuss these results in comparison to a variety of other sampling techniques, highlighting tradeoffs between computational complexity and sampling quality.

Fourier Basis Density Model

Feb 23, 2024

Abstract:We introduce a lightweight, flexible and end-to-end trainable probability density model parameterized by a constrained Fourier basis. We assess its performance at approximating a range of multi-modal 1D densities, which are generally difficult to fit. In comparison to the deep factorized model introduced in [1], our model achieves a lower cross entropy at a similar computational budget. We also evaluate our method on a toy compression task, demonstrating its utility in learned compression.

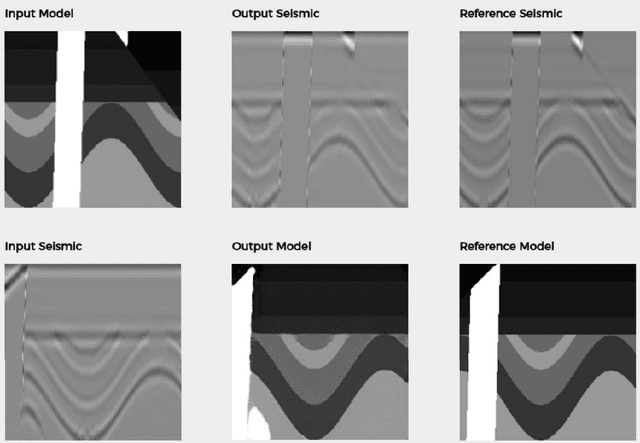

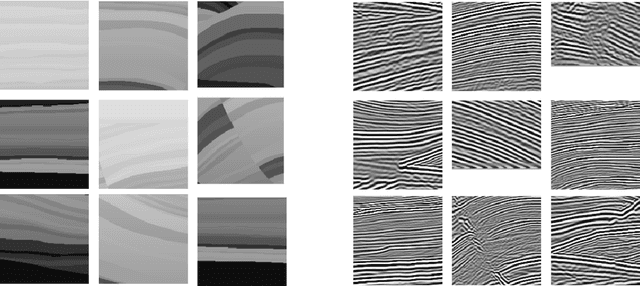

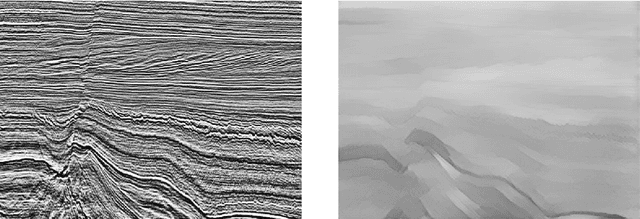

Rapid seismic domain transfer: Seismic velocity inversion and modeling using deep generative neural networks

May 22, 2018

Abstract:Traditional physics-based approaches to infer sub-surface properties such as full-waveform inversion or reflectivity inversion are time-consuming and computationally expensive. We present a deep-learning technique that eliminates the need for these computationally complex methods by posing the problem as one of domain transfer. Our solution is based on a deep convolutional generative adversarial network and dramatically reduces computation time. Training based on two different types of synthetic data produced a neural network that generates realistic velocity models when applied to a real dataset. The system's ability to generalize means it is robust against the inherent occurrence of velocity errors and artifacts in both training and test datasets.
