Alexander Neuwirth

Lymphocyte Classification in Hyperspectral Images of Ovarian Cancer Tissue Biopsy Samples

Mar 23, 2022

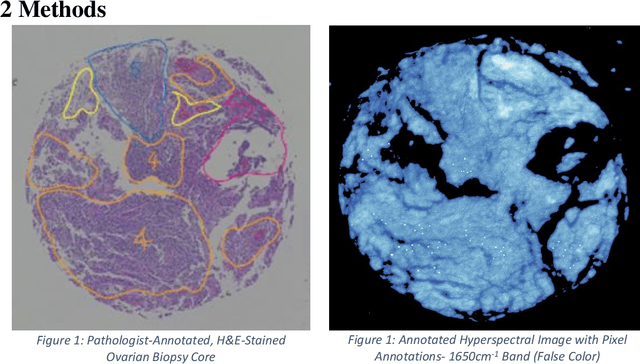

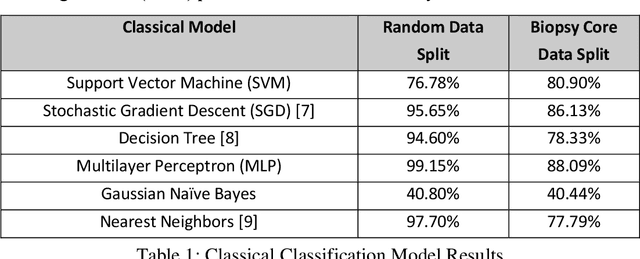

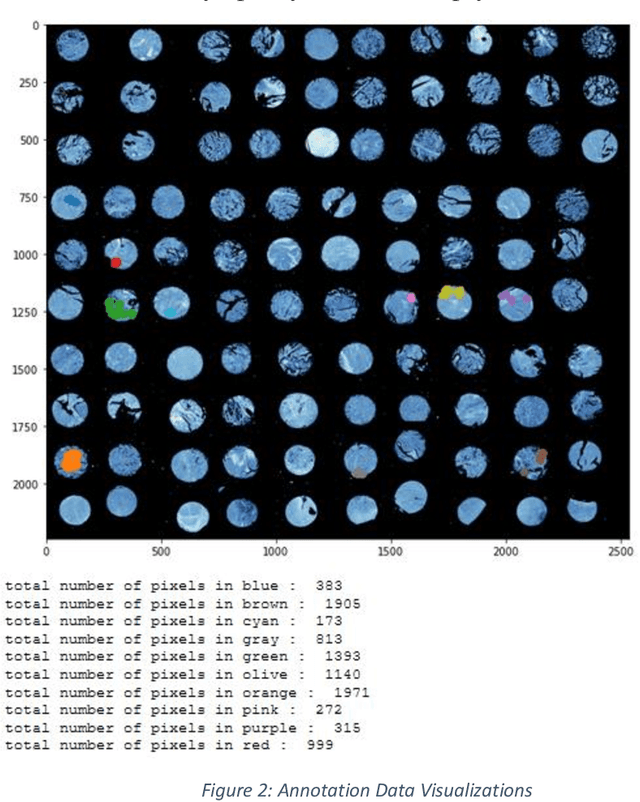

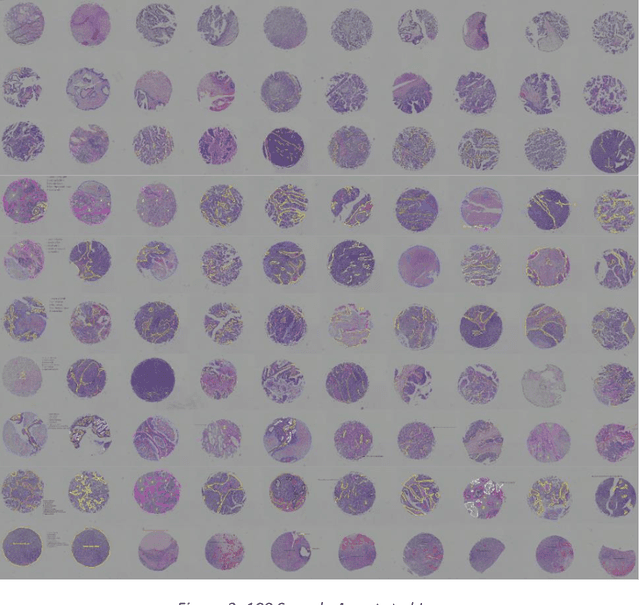

Abstract: Current methods for diagnosing the progression of multiple types of cancer rely on interpreting stained needle biopsies. This process is time-consuming and susceptible to error throughout the paraffinization, Hematoxylin and Eosin (H&E) staining, deparaffinization, and annotation stages. Fourier Transform Infrared (FTIR) imaging, combined with machine learning to interpret the dense spectral information, has been shown to be a promising alternative to staining for annotating biopsy cores without the need for deparaffinization or H&E staining. We present a machine learning pipeline to segment white blood cell (lymphocyte) pixels in hyperspectral images of biopsy cores. These cells are clinically important for diagnosis, but some prior work has struggled to incorporate them due to difficulty obtaining precise pixel labels. Evaluated methods include Support Vector Machine (SVM), Gaussian Naive Bayes, and Multilayer Perceptron (MLP) classifiers, as well as the comparatively modern convolutional neural network (CNN).

Muscle Vision: Real Time Keypoint Based Pose Classification of Physical Exercises

Mar 23, 2022

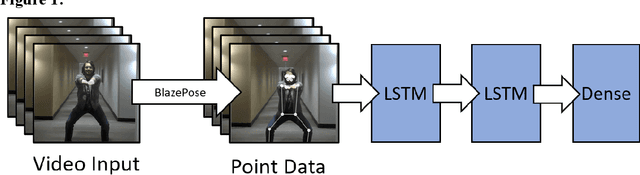

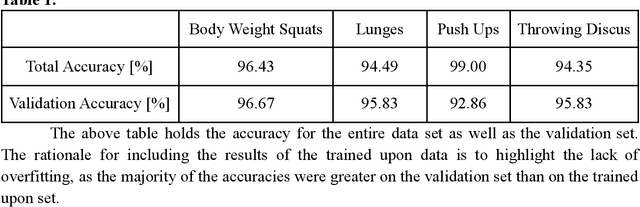

Abstract: Recent advances in machine learning technology have enabled highly portable and performant models for many common tasks, especially in image recognition. One emerging field, 3D human pose recognition extrapolated from video, has now advanced to the point of enabling real-time software applications with output robust enough to support downstream machine learning tasks. In this work we propose a new machine learning pipeline and web interface that performs human pose recognition on a live video feed to detect when common exercises are performed and classify them accordingly. We present a model interface capable of webcam input with live display of classification results. Our main contributions include a lightweight keypoint- and time-series-based approach for classifying a selected set of fitness exercises and a web-based software application for obtaining and visualizing the results in real time.
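As a rough illustration of what a keypoint- and time-series-based feature can look like, the sketch below turns per-frame keypoints into a sequence of joint angles that a downstream classifier could consume. It is not the paper's pipeline: the keypoint names (`shoulder`, `elbow`, `wrist`), the use of 2D coordinates instead of 3D, and both function names are assumptions made for this example.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at keypoint b, between segments b->a and b->c.

    Keypoints are (x, y) tuples; 2D is an assumption made for brevity.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        # Degenerate case: two keypoints coincide, so no angle is defined.
        return 0.0
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cosine))


def angle_series(frames, joints=("shoulder", "elbow", "wrist")):
    """Map a list of per-frame keypoint dicts to one joint angle per frame.

    The joint names are hypothetical; a real pose model defines its own.
    """
    return [joint_angle(*(frame[name] for name in joints)) for frame in frames]
```

A repetition of an exercise such as a bicep curl would then show up as a periodic rise and fall in the returned series, which is the kind of compact signal a lightweight classifier can work with.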

Music Generation Using an LSTM

Mar 23, 2022

Abstract: Over the past several years, deep learning for sequence modeling has grown in popularity. LSTM network structures have proven to be very useful for predicting the next output in a series; for instance, a smartphone predicting the next word of a text message could use an LSTM. We sought to demonstrate an approach to music generation using Recurrent Neural Networks (RNNs), specifically a Long Short-Term Memory (LSTM) neural network. Generating music is a notoriously complicated task, whether done by hand or by machine, as there are myriad components involved. Taking this into account, we provide a brief synopsis of the intuition, theory, and application of LSTMs in music generation, develop and present the network we found to best achieve this goal, identify and address issues and challenges faced, and include potential future improvements for our network.

Architecting and Visualizing Deep Reinforcement Learning Models

Dec 02, 2021

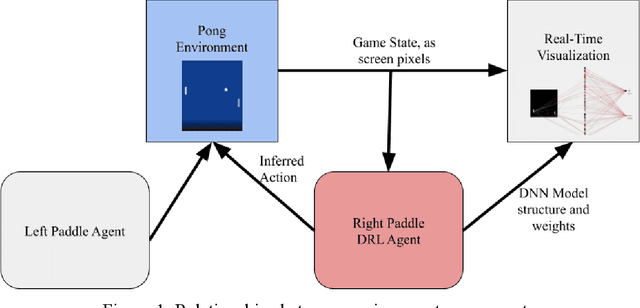

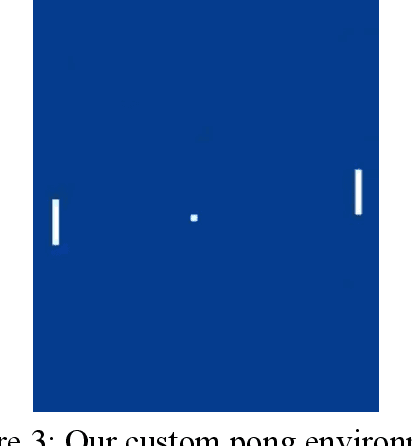

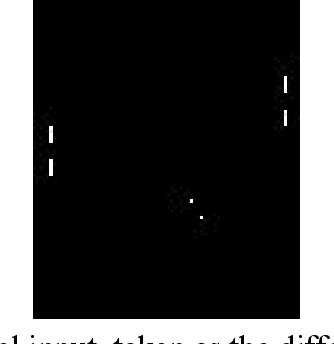

Abstract: To meet the growing interest in Deep Reinforcement Learning (DRL), we sought to construct a DRL-driven Atari Pong agent and accompanying visualization tool. Existing approaches do not support the flexibility required to create an interactive exhibit with easily configurable physics and a human-controlled player. Therefore, we constructed a new Pong game environment, discovered and addressed a number of unique data deficiencies that arise when applying DRL to a new environment, architected and tuned a policy-gradient-based DRL model, developed a real-time network visualization, and combined these elements into an interactive display to help build intuition and awareness of the mechanics of DRL inference.
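For readers unfamiliar with policy gradient methods, one common ingredient is the discounted return, which weights each action by the rewards that follow it. The sketch below shows that computation only; it is a generic illustration, not the authors' model, and the function name and the default `gamma` are assumptions.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for each step.

    `gamma` is an assumed discount factor; the abstract does not state one.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return already computed for t + 1.
    for t in range(len(rewards) - 1, -1, -1):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns
```

In a Pong-like game where a point is scored only at the end of a rally, this spreads credit for the final reward back over the earlier paddle movements, with more recent moves receiving more of it.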
