Alessandro Enrico Cesare Redondi

Intelligent Detection of Non-Essential IoT Traffic on the Home Gateway

Apr 22, 2025

Abstract: The rapid expansion of Internet of Things (IoT) devices, particularly in smart home environments, has introduced considerable security and privacy concerns due to their persistent connectivity and interaction with cloud services. Despite advancements in IoT security, effective privacy measures remain largely unaddressed: existing solutions often rely either on cloud-based threat detection, which exposes sensitive data, or on outdated allow-lists that inadequately restrict non-essential network traffic. This work presents ML-IoTrim, a system that detects and mitigates non-essential IoT traffic (i.e., traffic that does not affect device operation) by analyzing network behavior at the edge and using machine learning to classify network destinations. Our approach includes building a labeled dataset based on IoT device behavior and a feature-extraction pipeline that enables binary classification of network destinations as essential or non-essential. We test our framework in a consumer smart home setup with IoT devices from five categories, demonstrating that the model can accurately identify and block non-essential traffic, including traffic to previously unseen destinations, without relying on traditional allow-lists. We implement our solution on a home access point, showing that the framework has strong potential for scalable deployment and supports near-real-time traffic classification in large-scale IoT environments with hundreds of devices. This research advances privacy-aware traffic control in smart homes, paving the way for future developments in IoT device privacy.
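The abstract does not specify which features or which model ML-IoTrim uses. As a rough illustration of what an essential vs. non-essential destination classifier running at the gateway could look like, the Python sketch below aggregates per-flow records into per-destination feature vectors and trains a plain logistic regression with batch gradient descent. Everything here is an assumption for illustration, not the paper's pipeline: the hypothetical `Flow` record, the four features (flow count, traffic volume, upload ratio, share of flows during a user interaction), the example domain names, and the choice of logistic regression.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Flow:
    """Hypothetical flow record observed at the gateway for one IoT connection."""
    device: str
    destination: str          # e.g. the domain name resolved via DNS
    bytes_up: int
    bytes_down: int
    during_interaction: bool  # True if the flow overlapped a user command


def extract_features(flows):
    """Aggregate flows per (device, destination) into a 4-element feature vector."""
    agg = defaultdict(lambda: [0, 0, 0, 0])  # n_flows, bytes_up, bytes_down, n_interactive
    for f in flows:
        a = agg[(f.device, f.destination)]
        a[0] += 1
        a[1] += f.bytes_up
        a[2] += f.bytes_down
        a[3] += int(f.during_interaction)
    feats = {}
    for key, (n, up, down, inter) in agg.items():
        total = up + down
        feats[key] = [
            math.log1p(n),                 # how often the destination is contacted
            math.log1p(total),             # traffic volume in bytes (log scale)
            up / total if total else 0.0,  # upload ratio
            inter / n,                     # share of flows tied to user interaction
        ]
    return feats


def fit_scaler(X):
    """Per-column mean and population std; near-constant columns get std 1."""
    cols = list(zip(*X))
    means = [sum(c) / len(c) for c in cols]
    stds = []
    for c, m in zip(cols, means):
        s = math.sqrt(sum((v - m) ** 2 for v in c) / len(c))
        stds.append(s if s > 1e-12 else 1.0)
    return means, stds


def scale(x, means, stds):
    return [(v - m) / s for v, m, s in zip(x, means, stds)]


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(X, y, lr=0.5, epochs=200):
    """Batch gradient descent on the logistic (cross-entropy) loss."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            gw = [g + err * xj for g, xj in zip(gw, xi)]
            gb += err
        w = [wj - lr * g / len(X) for wj, g in zip(w, gw)]
        b -= lr * gb / len(X)
    return w, b


def predict(w, b, x, threshold=0.5):
    """True = essential destination, False = candidate for blocking."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold


# Toy labeled data (hypothetical): 1 = essential, 0 = non-essential.
labels = {"cloud.vendor-a.example": 1, "cloud.vendor-b.example": 1,
          "metrics.tracker.example": 0, "ads.tracker.example": 0}
flows = []
for dest, lab in labels.items():
    up, down = (200, 800) if lab else (900, 100)  # tracker-like flows upload more
    flows += [Flow("plug-1", dest, up, down, during_interaction=bool(lab))] * 3

feats = extract_features(flows)
X = list(feats.values())
y = [labels[dest] for (_, dest) in feats]
means, stds = fit_scaler(X)
w, b = train_logreg([scale(x, means, stds) for x in X], y)

# Classify a destination that never appeared in the training data.
unseen_key = ("plug-1", "beacon.unknown.example")
unseen = extract_features([Flow(*unseen_key, 950, 50, False)])[unseen_key]
print(predict(w, b, scale(unseen, means, stds)))
```

Fitting the scaler on training destinations and reusing it for new ones is what lets the same model score a destination it has never seen, which loosely mirrors the abstract's point about handling unseen destinations without an allow-list. A real deployment would need a much richer labeled dataset and proper validation.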

Unsatisfied Today, Satisfied Tomorrow: a simulation framework for performance evaluation of crowdsourcing-based network monitoring

Oct 30, 2020

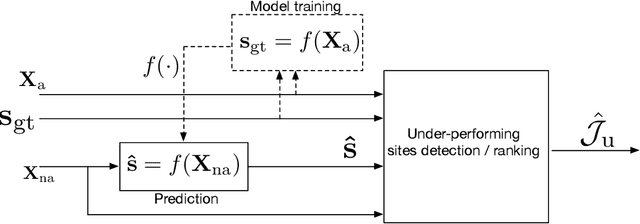

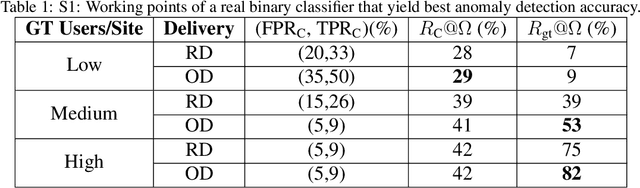

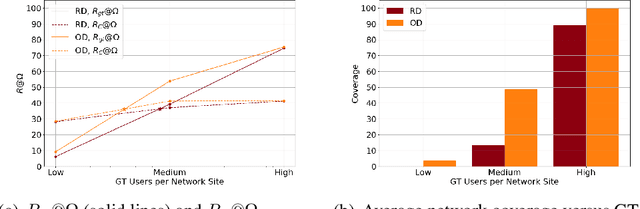

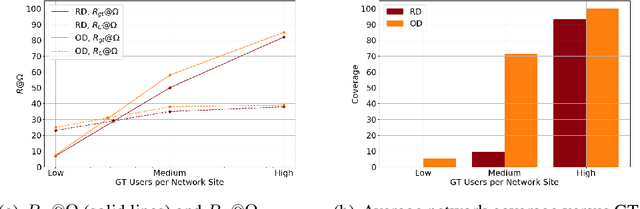

Abstract: Network operators need to continuously upgrade their infrastructure to keep customer satisfaction levels high. Crowdsourcing-based approaches are generally adopted, in which customers are directly asked to answer surveys about their user experience. Since the number of collaborating users is generally low, network operators rely on machine learning models to predict users' satisfaction levels/QoE rather than measuring them directly through surveys. By combining the true or predicted user satisfaction levels with information on each user's mobility (e.g., which network sites each user has visited and for how long), an operator can reveal critical areas in the network and drive or prioritize investments accordingly. In this work, we propose an empirical framework tailored to assess the quality of the detection of under-performing cells starting from subjective user experience grades. The framework makes it possible to simulate diverse networking scenarios, in which a network characterized by a small set of under-performing cells is visited by heterogeneous users moving through it according to realistic mobility models. The framework simulates both the delivery of satisfaction surveys and the prediction of user satisfaction, considering different delivery strategies and evaluating prediction algorithms with different prediction performance. We use the simulation framework to empirically test the detection of under-performing sites in general scenarios characterized by different user densities and mobility models, obtaining generalizable insights that provide useful guidelines for network operators.
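To make the evaluation loop in this abstract more concrete, here is a toy Monte-Carlo sketch in Python. It is not the authors' framework; every modeling choice is a simplifying assumption: random-waypoint-like mobility (independent random cells with random dwell times), a user counted as unsatisfied when more than a `tolerance` share of their time is spent in under-performing cells, surveyed users (with probability `survey_rate`) reporting the truth while the rest receive a prediction that is correct with probability `accuracy`, a dwell-time-weighted per-cell dissatisfaction score, and flagging of the top-k cells, with k equal to the true number of bad cells.

```python
import random
from collections import defaultdict


def simulate_detection(n_cells=50, n_bad=5, n_users=2000, visits_per_user=5,
                       survey_rate=0.1, accuracy=0.8, tolerance=0.2, seed=0):
    """Return (recall, flagged_cells, bad_cells) for one simulated scenario."""
    rng = random.Random(seed)
    bad = set(rng.sample(range(n_cells), n_bad))

    dwell = defaultdict(float)  # (user, cell) -> total time spent there
    observed_unsat = []         # surveyed or predicted grade, one per user
    for u in range(n_users):
        total = bad_time = 0.0
        for _ in range(visits_per_user):
            c = rng.randrange(n_cells)
            t = rng.uniform(1.0, 5.0)
            dwell[(u, c)] += t
            total += t
            if c in bad:
                bad_time += t
        truly_unsat = bad_time / total > tolerance
        if rng.random() < survey_rate:
            grade = truly_unsat  # answered the survey: ground truth
        else:
            # Imperfect predictor: correct with probability `accuracy`.
            grade = truly_unsat if rng.random() < accuracy else not truly_unsat
        observed_unsat.append(grade)

    # Dwell-time-weighted share of unsatisfied users in each cell.
    num, den = defaultdict(float), defaultdict(float)
    for (u, c), t in dwell.items():
        den[c] += t
        num[c] += t if observed_unsat[u] else 0.0
    score = {c: (num[c] / den[c] if den[c] else 0.0) for c in range(n_cells)}

    flagged = sorted(range(n_cells), key=lambda c: score[c], reverse=True)[:n_bad]
    recall = len(bad.intersection(flagged)) / n_bad
    return recall, flagged, bad


for acc in (0.6, 0.8, 1.0):
    recalls = [simulate_detection(accuracy=acc, seed=s)[0] for s in range(5)]
    print(f"accuracy={acc}: mean recall={sum(recalls) / len(recalls):.2f}")
```

Sweeping `accuracy`, `survey_rate`, `n_users`, or the mobility model and averaging recall over several seeds gives, in miniature, the kind of sensitivity analysis the abstract describes; a realistic study would replace each of these toy components with the scenario-specific models.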
