Akshay Subramaniam

ClimSim: An open large-scale dataset for training high-resolution physics emulators in hybrid multi-scale climate simulators

Jun 16, 2023

Abstract: Modern climate projections lack adequate spatial and temporal resolution due to computational constraints. A consequence is inaccurate and imprecise prediction of critical processes such as storms. Hybrid methods that combine physics with machine learning (ML) have introduced a new generation of higher fidelity climate simulators that can sidestep Moore's Law by outsourcing compute-hungry, short, high-resolution simulations to ML emulators. However, this hybrid ML-physics simulation approach requires domain-specific treatment and has been inaccessible to ML experts because of lack of training data and relevant, easy-to-use workflows. We present ClimSim, the largest-ever dataset designed for hybrid ML-physics research. It comprises multi-scale climate simulations, developed by a consortium of climate scientists and ML researchers. It consists of 5.7 billion pairs of multivariate input and output vectors that isolate the influence of locally-nested, high-resolution, high-fidelity physics on a host climate simulator's macro-scale physical state. The dataset is global in coverage, spans multiple years at high sampling frequency, and is designed such that resulting emulators are compatible with downstream coupling into operational climate simulators. We implement a range of deterministic and stochastic regression baselines to highlight the ML challenges and their scoring. The data (https://huggingface.co/datasets/LEAP/ClimSim_high-res) and code (https://leap-stc.github.io/ClimSim) are released openly to support the development of hybrid ML-physics and high-fidelity climate simulations for the benefit of science and society.

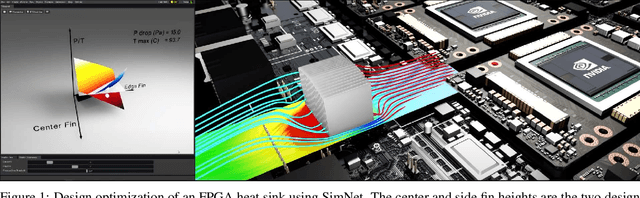

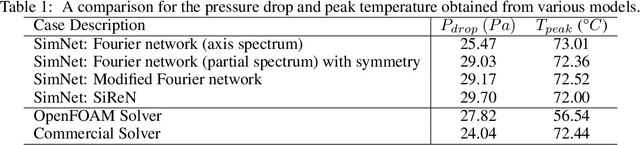

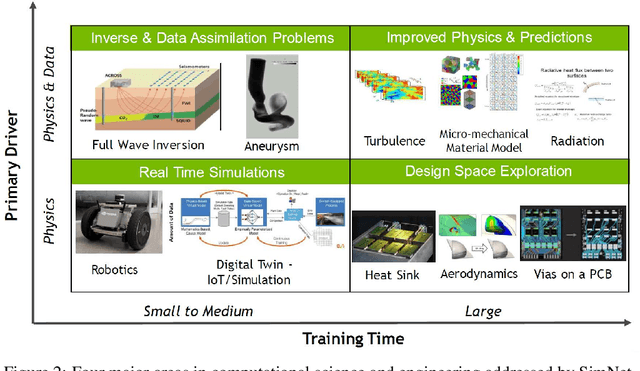

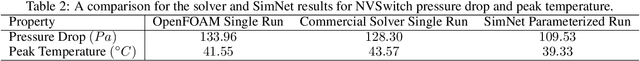

NVIDIA SimNet™: an AI-accelerated multi-physics simulation framework

Dec 14, 2020

Abstract: We present SimNet, an AI-driven multi-physics simulation framework, to accelerate simulations across a wide range of disciplines in science and engineering. Compared to traditional numerical solvers, SimNet addresses a wide range of use cases: coupled forward simulations without any training data, as well as inverse and data assimilation problems. SimNet offers fast turnaround time by enabling parameterized system representation that solves for multiple configurations simultaneously, as opposed to traditional solvers that solve for one configuration at a time. SimNet is integrated with parameterized constructive solid geometry as well as STL modules to generate point clouds. Furthermore, it is customizable with APIs that enable user extensions to geometry, physics and network architecture. It has advanced network architectures that are optimized for high-performance GPU computing, and offers scalable performance for multi-GPU and multi-node implementation with accelerated linear algebra as well as FP32, FP64 and TF32 computations. In this paper we review the neural network solver methodology, the SimNet architecture, and the various features that are needed for effective solution of the PDEs. We present real-world use cases that range from challenging forward multi-physics simulations with turbulence and complex 3D geometries, to industrial design optimization and inverse problems that are not addressed efficiently by traditional solvers. Extensive comparisons of SimNet results with open source and commercial solvers show good correlation.

ContainerStress: Autonomous Cloud-Node Scoping Framework for Big-Data ML Use Cases

Mar 18, 2020

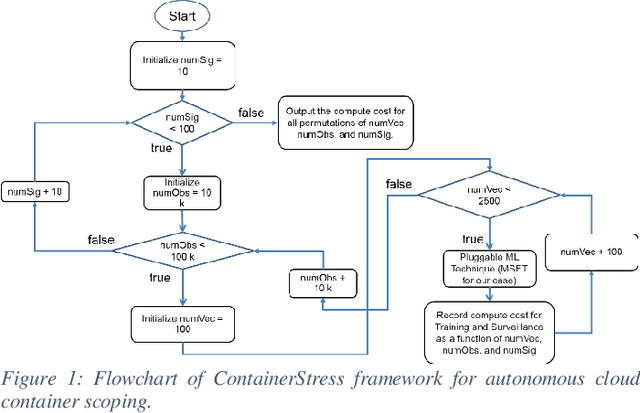

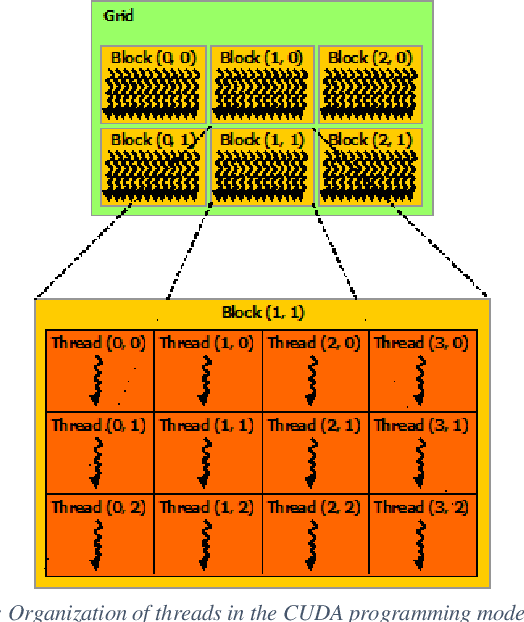

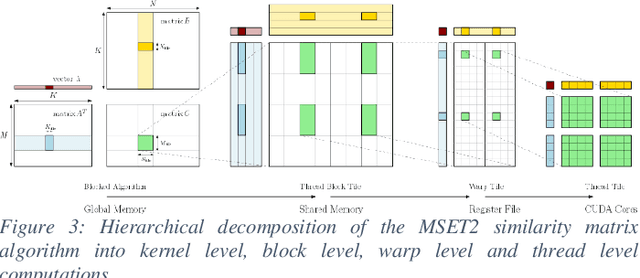

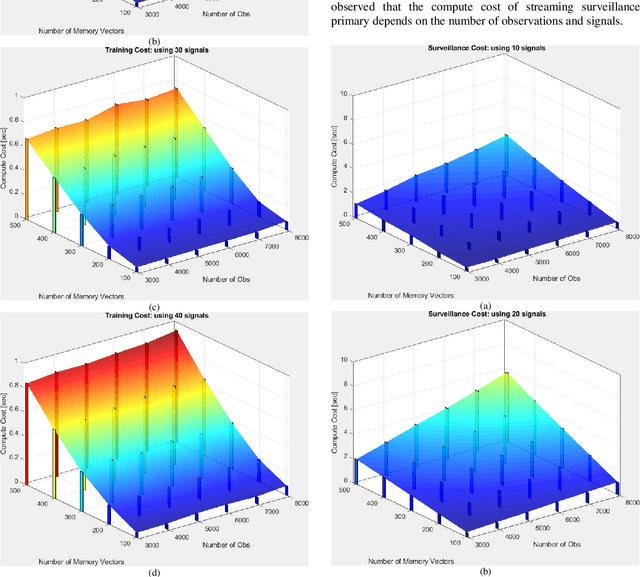

Abstract: Deploying big-data Machine Learning (ML) services in a cloud environment presents a challenge to the cloud vendor with respect to the cloud container configuration sizing for any given customer use case. OracleLabs has developed an automated framework that uses nested-loop Monte Carlo simulation to autonomously scale customer ML use cases of any size across the range of cloud CPU-GPU "Shapes" (configurations of CPUs and/or GPUs in Cloud containers available to end customers). Moreover, the OracleLabs and NVIDIA authors have collaborated on an ML benchmark study which analyzes the compute cost and GPU acceleration of any ML prognostic algorithm and assesses the reduction of compute cost in a cloud container comprising conventional CPUs and NVIDIA GPUs.

Turbulence Enrichment using Physics-informed Generative Adversarial Networks

Mar 06, 2020

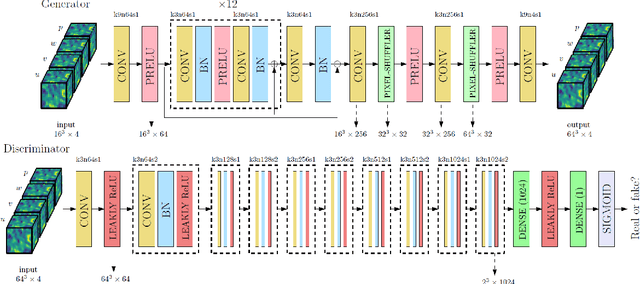

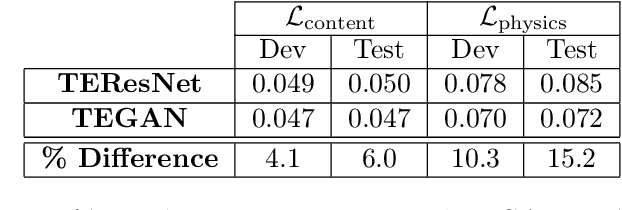

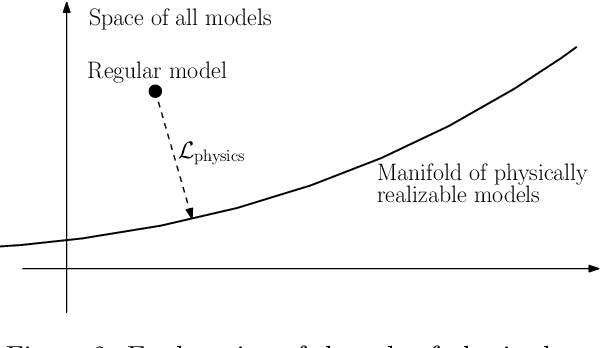

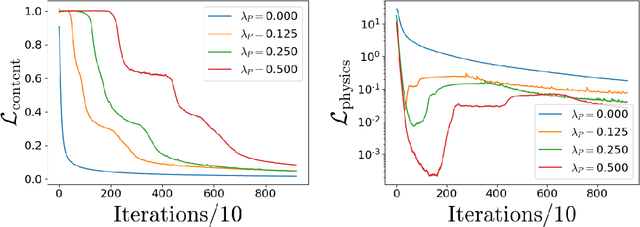

Abstract: Generative Adversarial Networks (GANs) have been widely used for generating photo-realistic images. A variant of GANs called super-resolution GAN (SRGAN) has already been used successfully for image super-resolution where low resolution images can be upsampled to a $4\times$ larger image that is perceptually more realistic. However, when such generative models are used for data describing physical processes, there are additional known constraints that models must satisfy, including governing equations and boundary conditions. In general, these constraints may not be obeyed by the generated data. In this work, we develop physics-based methods for generative enrichment of turbulence. We incorporate a physics-informed learning approach by modifying the loss function to minimize the residuals of the governing equations for the generated data. We have analyzed two trained physics-informed models, a supervised model based on convolutional neural networks (CNN) and a generative model based on SRGAN called Turbulence Enrichment GAN (TEGAN), and show that they both outperform simple bicubic interpolation in turbulence enrichment. We have also shown that physics-informed learning can significantly improve the model's ability to generate data that satisfies the physical governing equations. Finally, we compare the enriched data from TEGAN to show that it is able to recover statistical metrics of the flow field including energy metrics as well as inter-scale energy dynamics and flow morphology.
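
The loss modification described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes a 2D periodic grid, uses the incompressible continuity equation (zero velocity divergence) as the only governing-equation residual, and the function names and the weight `lam` are hypothetical choices made for illustration.

```python
import numpy as np


def divergence(u, v, dx, dy):
    """Central-difference divergence of (u, v) on a periodic 2D grid.

    Axis 0 is taken as x and axis 1 as y (an assumption of this sketch).
    """
    du_dx = (np.roll(u, -1, axis=0) - np.roll(u, 1, axis=0)) / (2.0 * dx)
    dv_dy = (np.roll(v, -1, axis=1) - np.roll(v, 1, axis=1)) / (2.0 * dy)
    return du_dx + dv_dy


def physics_informed_loss(u_gen, v_gen, u_ref, v_ref, dx, dy, lam=0.1):
    """Return (total, content, residual) for a generated velocity field.

    content  : mean squared error against the reference field
    residual : mean squared continuity residual of the generated field
    total    : content + lam * residual (lam is a hypothetical weight)
    """
    content = np.mean((u_gen - u_ref) ** 2 + (v_gen - v_ref) ** 2)
    residual = np.mean(divergence(u_gen, v_gen, dx, dy) ** 2)
    return content + lam * residual, content, residual


# Example: a divergence-free Taylor-Green field and a perturbed copy of it.
n = 32
x = np.linspace(0.0, 2.0 * np.pi, n, endpoint=False)
X, Y = np.meshgrid(x, x, indexing="ij")
h = x[1] - x[0]
u_true = np.sin(X) * np.cos(Y)
v_true = -np.cos(X) * np.sin(Y)
u_bad = u_true + 0.1 * np.sin(X)  # perturbation that violates continuity

total, content, residual = physics_informed_loss(u_bad, v_true, u_true, v_true, h, h)
print(f"content={content:.4e} residual={residual:.4e} total={total:.4e}")
```

In a training setting the same residual term would be written in an automatic-differentiation framework and added to the generator's objective, so that generated fields violating the governing equations are penalized even when they are close to the reference data.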
