Aditya Nigam

Semantic Features Aided Multi-Scale Reconstruction of Inter-Modality Magnetic Resonance Images

Jun 22, 2020

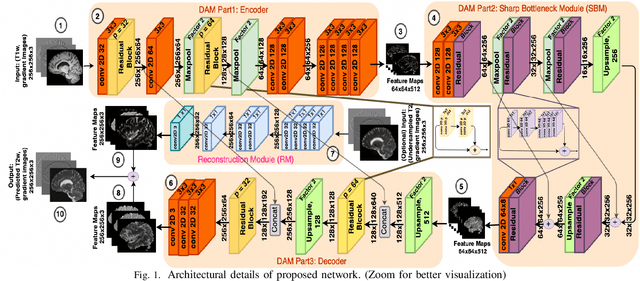

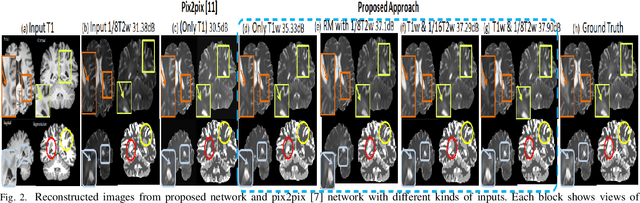

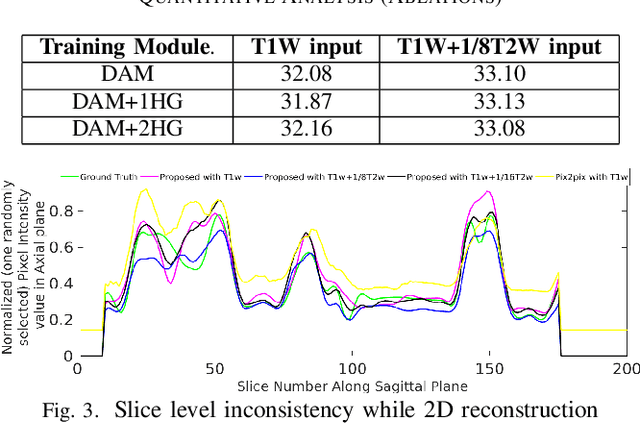

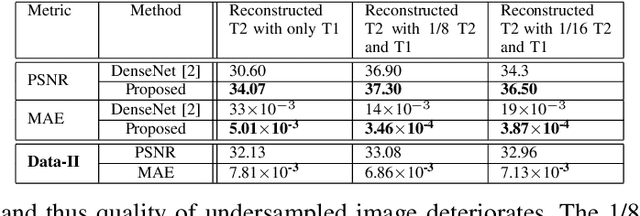

Abstract: Acquiring multi-modality MR images in series, though beneficial for disease diagnosis, leads to a long acquisition time (AQT), which is practically undesirable; T2-weighted images (T2WI) in particular have a longer AQT. We propose a novel deep-network-based solution that reconstructs T2W images from T1W images (T1WI) using an encoder-decoder architecture. The learning is aided by semantic features: the network takes a multi-channel input comprising the intensity values and the image gradients in two orthogonal directions. A reconstruction module (RM) augments the network along with a domain adaptation module (DAM), which is an encoder-decoder model with a built-in sharp bottleneck module (SBM), and the network is trained via modular training. The proposed network significantly reduces the total AQT with negligible qualitative artifacts and quantitative loss (it reconstructs one volume in approximately 1 second). Testing is done on a publicly available dataset of real MR images, and the proposed network shows an increase of approximately 1 dB in PSNR over the state of the art (SOTA).
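The multi-channel input described in this abstract can be illustrated with a minimal sketch. It assumes central differences with clamped borders as the gradient operator; the abstract does not say which operator the authors use, and the function name `gradient_channels` is hypothetical.

```python
def gradient_channels(image):
    """Build a 3-channel input (intensity, grad_x, grad_y) from a 2-D image.

    `image` is a list of rows of numbers. The gradient operator (central
    differences, indices clamped at the border) is an assumption made for
    illustration, not the operator stated in the paper.
    """
    h, w = len(image), len(image[0])

    def px(r, c):
        # Clamp indices so that border pixels reuse their nearest neighbour.
        return image[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    # Horizontal and vertical derivatives: two orthogonal directions.
    grad_x = [[(px(r, c + 1) - px(r, c - 1)) / 2.0 for c in range(w)]
              for r in range(h)]
    grad_y = [[(px(r + 1, c) - px(r - 1, c)) / 2.0 for c in range(w)]
              for r in range(h)]
    return image, grad_x, grad_y


# A horizontal ramp: intensity changes along x only.
ramp = [[0, 1, 2], [0, 1, 2], [0, 1, 2]]
intensity, gx, gy = gradient_channels(ramp)
```

For the ramp image, the vertical gradient channel is zero everywhere and the horizontal channel carries the edge information, which is the kind of structural cue the extra channels are meant to expose.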

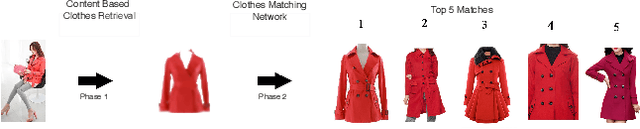

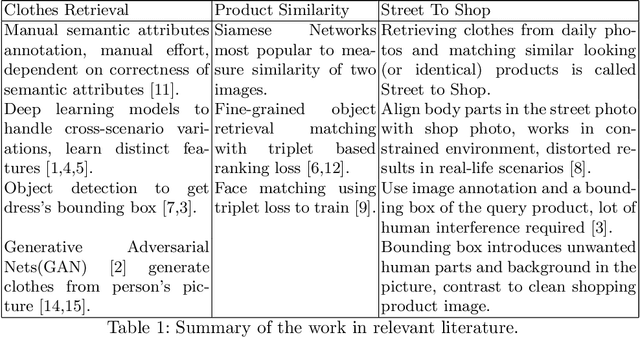

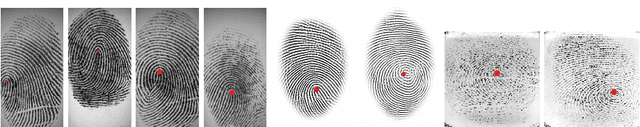

PoshakNet: Framework for matching dresses from real-life photos using GAN and Siamese Network

Nov 11, 2019

Abstract: Online garment shopping has gained many customers in recent years. Describing a dress using keywords does not always yield the proper results, which in turn leads to customer dissatisfaction. A visual-search-based system would be enormously beneficial to the industry. Hence, we propose a framework that retrieves clothes similar to those found in an image. The first task is to extract the garment from the input image (a street photo). This poses various challenges, including pose, illumination, and background clutter. We use a Generative Adversarial Network (GAN) to retrieve the garment that the person in the image is wearing. It has been shown that the GAN can retrieve the garment very efficiently despite the challenges of street photos. Finally, a Siamese matching system takes the retrieved garment image and matches it against the clothes in the dataset, giving the top k matches. We take a pre-trained Inception-ResNet v1 module as the Siamese network (trained using triplet loss for face detection) and fine-tune it on the shopping dataset using center loss. The dataset was collected in-house. For training the GAN, we use the LookBook dataset, which is publicly available.
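The final matching step, returning the top k matches for a retrieved garment, amounts to nearest-neighbour ranking in embedding space. The sketch below assumes Euclidean distance between embeddings and uses made-up two-dimensional vectors; the function name `top_k_matches` and the catalogue entries are hypothetical, and the real embeddings would come from the fine-tuned Siamese network.

```python
import math


def top_k_matches(query, gallery, k):
    """Return the names of the k gallery items closest to the query embedding.

    `gallery` maps an item name to its embedding (a list of floats).
    Euclidean distance is an assumption made for this illustration.
    """
    ranked = sorted(gallery.items(),
                    key=lambda item: math.dist(query, item[1]))
    return [name for name, _ in ranked[:k]]


# Toy embeddings standing in for network outputs.
catalogue = {"dress_a": [0.0, 0.0], "dress_b": [1.0, 1.0], "dress_c": [5.0, 5.0]}
matches = top_k_matches([0.9, 0.9], catalogue, k=2)
```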

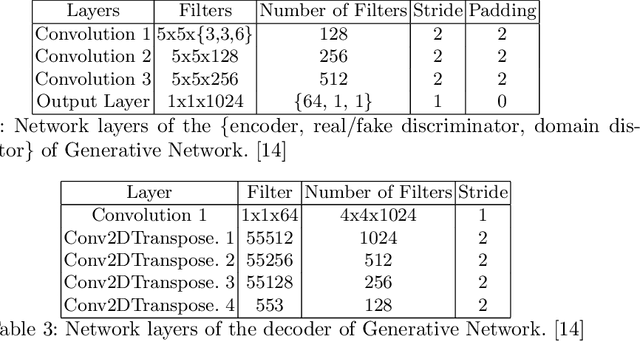

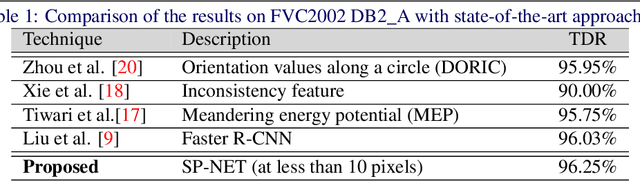

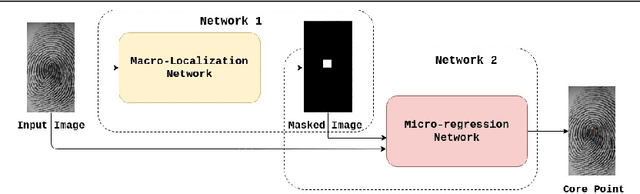

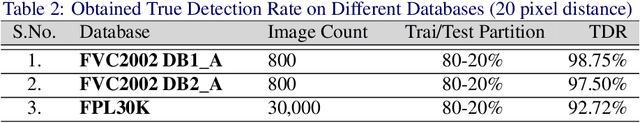

SP-NET: One Shot Fingerprint Singular-Point Detector

Aug 13, 2019

Abstract: Singular points of a fingerprint image are special locations with high curvature. They can play a pivotal role in fingerprint normalization and reliable feature extraction. Accurate and efficient extraction of singular points plays a major role in successful fingerprint recognition and indexing. In this paper, a novel deep-learning-based architecture is proposed for one-shot (end-to-end) singular point detection from an input fingerprint image. The model consists of a Macro-Localization Network and a Micro-Regression Network, along with three stacked hourglasses as a bottleneck. The proposed model has been tested on three databases, viz. FVC2002 DB1_A, FVC2002 DB2_A and FPL30K, and achieves true detection rates of 98.75%, 97.5% and 92.72% respectively, which is better than any other state-of-the-art technique.

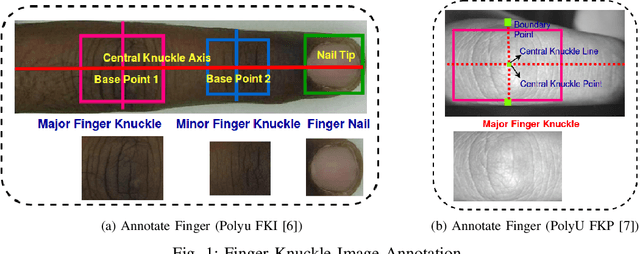

FKIMNet: A Finger Dorsal Image Matching Network Comparing Component (Major, Minor and Nail) Matching with Holistic (Finger Dorsal) Matching

Apr 02, 2019

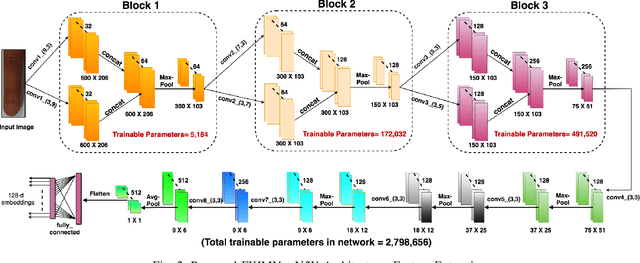

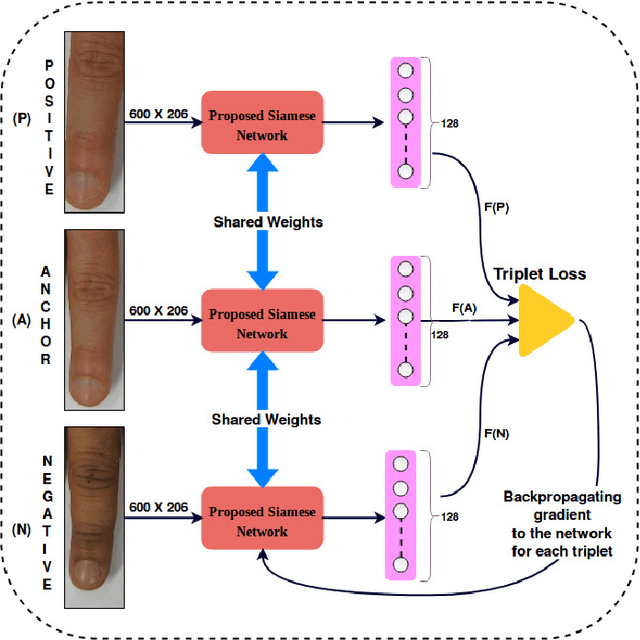

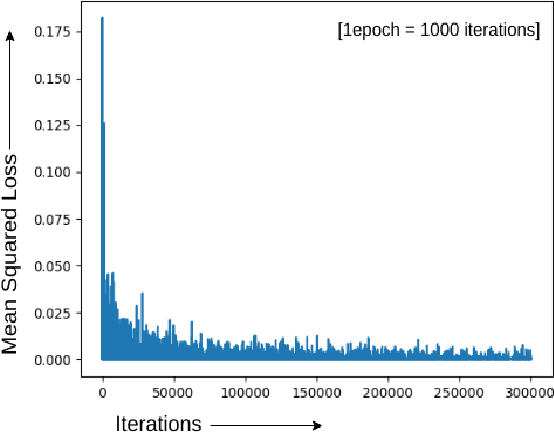

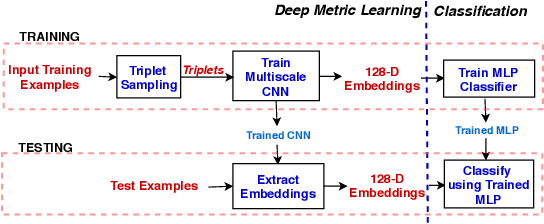

Abstract: Current finger knuckle image recognition systems often require users to place their fingers' major or minor joints flat against the capturing sensor. To extend these systems to non-intrusive application scenarios, such as consumer electronics, forensics and defence, we suggest matching the full dorsal finger rather than the major/minor region of interest (ROI) alone. In particular, this paper makes a comprehensive comparison between the full finger and the fusion of finger ROIs for finger knuckle image recognition. These experiments suggest that using the full finger provides a more elegant solution. To address the finger matching problem, we propose a convolutional neural network (CNN) that creates a 128-D feature embedding of an image. It is trained via a triplet loss function, which drives the L2 distance between embeddings of the same subject towards zero, while keeping the distance between any two embeddings of different subjects at least a margin apart. For precise training of the network, we use a dynamic adaptive margin, data augmentation, and hard negative mining. In separate experiments, the individual performance of the finger, as well as the weighted-sum score-level fusion of the major knuckle, minor knuckle, and nail modalities, has been computed, justifying our assumption that the full finger should be considered as the biometric instead of its components. The proposed method is evaluated on two publicly available finger knuckle image datasets, i.e., the PolyU FKP dataset and the PolyU Contactless FKI dataset.
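The triplet objective described in this abstract can be sketched with the standard hinge form of the triplet loss. This is an illustration under stated assumptions: it uses plain (not squared) L2 distance and a fixed margin, whereas the paper uses a dynamic adaptive margin whose schedule is not given here. The function names are hypothetical.

```python
import math


def l2_distance(u, v):
    """Euclidean (L2) distance between two embeddings."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Standard hinge-form triplet loss with a fixed margin.

    The loss is zero once the negative is farther from the anchor than the
    positive by at least `margin`. The fixed margin is a simplification.
    """
    return max(0.0,
               l2_distance(anchor, positive)
               - l2_distance(anchor, negative)
               + margin)


# Well-separated triplet: the negative is far away, so there is no penalty.
easy = triplet_loss([0.0, 0.0], [0.0, 0.0], [3.0, 4.0], margin=0.2)
# Violating triplet: the negative coincides with the anchor.
hard = triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 0.0], margin=1.0)
```

Only triplets with a non-zero loss contribute gradient, which is why mining hard negatives matters during training.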

Multiscale CNN based Deep Metric Learning for Bioacoustic Classification: Overcoming Training Data Scarcity Using Dynamic Triplet Loss

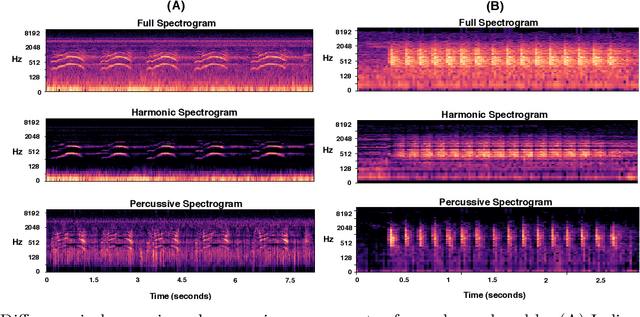

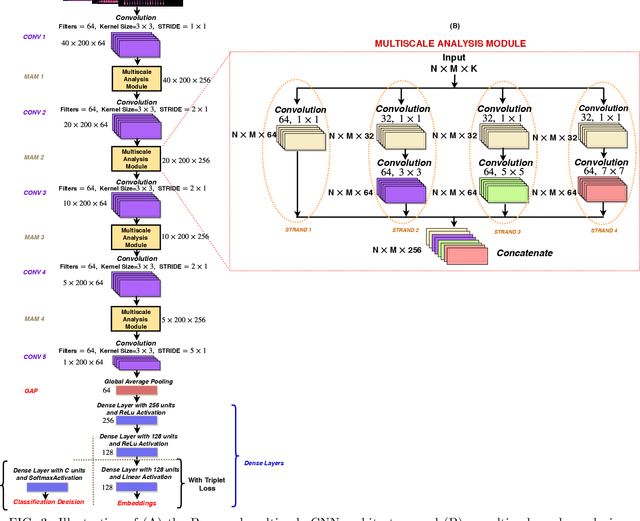

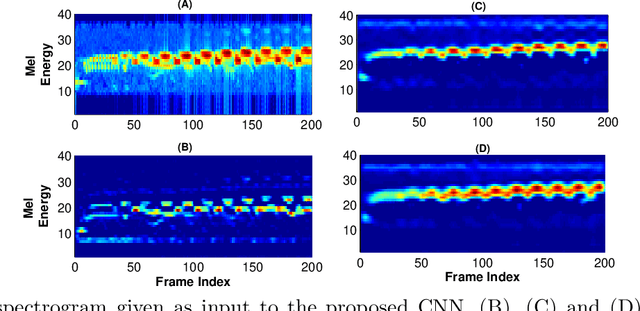

Mar 27, 2019

Abstract: This paper proposes multiscale convolutional neural network (CNN)-based deep metric learning for bioacoustic classification under low training data conditions. The proposed CNN is characterized by the use of four different filter sizes at each level to analyze input feature maps. This multiscale nature helps in describing different bioacoustic events effectively: smaller filters help in learning the finer details of bioacoustic events, whereas larger filters help in analyzing a larger context, leading to global details. A dynamic triplet loss is employed in the proposed CNN architecture to learn a transformation from the input space to the embedding space, where classification is performed. The triplet loss helps in learning this transformation by analyzing three examples, referred to as triplets, at a time, where the intra-class distance is minimized while the inter-class separation is maximized by a dynamically increasing margin. The number of possible triplets increases cubically with the dataset size, making triplet loss more suitable than the softmax cross-entropy loss in low training data conditions. Experiments on three different publicly available datasets show that the proposed framework performs better than existing bioacoustic classification frameworks. Experimental results also confirm the superiority of the triplet loss over the cross-entropy loss in low training data conditions.
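The cubic growth in the number of triplets can be checked by direct counting. The sketch below counts ordered (anchor, positive, negative) triplets, where anchor and positive are distinct samples of one class and the negative comes from any other class; the function name `count_triplets` is hypothetical.

```python
def count_triplets(class_sizes):
    """Count valid (anchor, positive, negative) triplets.

    For a class with n samples out of N total, there are n * (n - 1)
    ordered anchor/positive pairs and N - n possible negatives.
    """
    total = sum(class_sizes)
    return sum(n * (n - 1) * (total - n) for n in class_sizes)


small = count_triplets([10, 10])   # 20 samples in two balanced classes
large = count_triplets([20, 20])   # 40 samples in two balanced classes
```

Here 20 labelled samples already yield 1800 triplets, and doubling the dataset to 40 samples yields 15200, roughly an eightfold increase, consistent with cubic growth.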

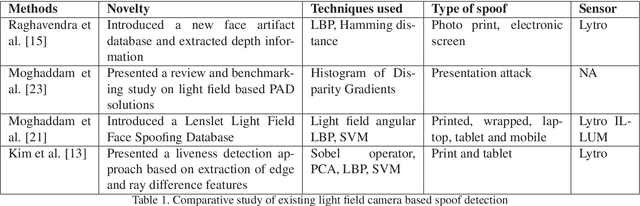

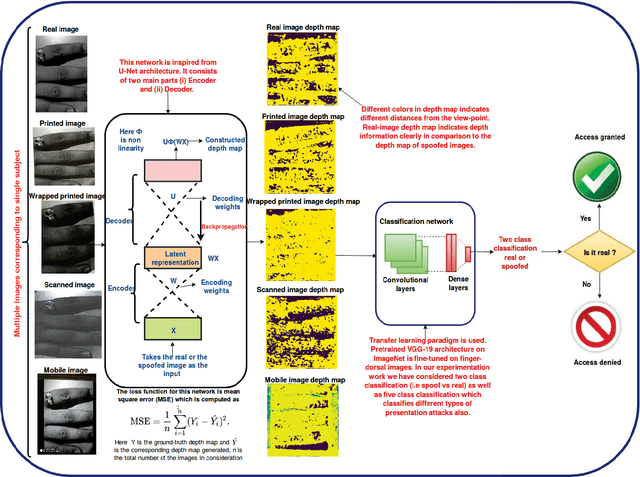

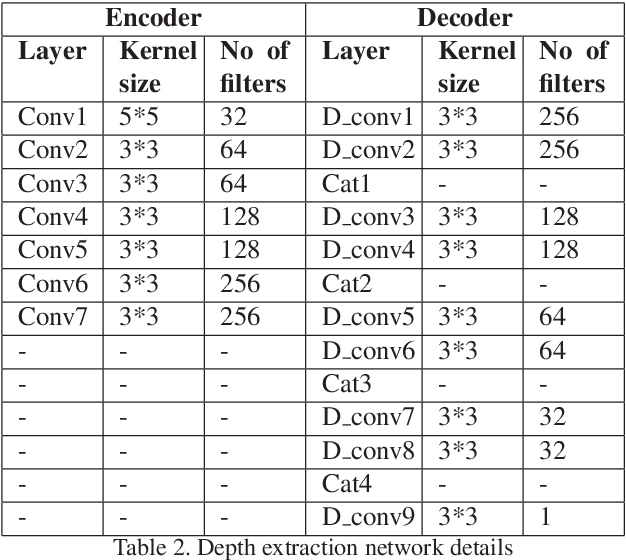

FDSNet: Finger dorsal image spoof detection network using light field camera

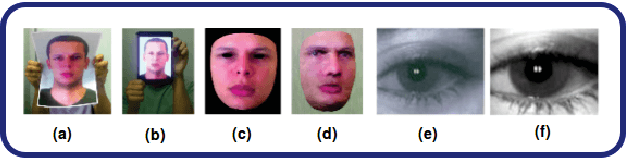

Dec 18, 2018

Abstract: At present, spoofing attacks, in which a biometric system is presented with a fake biometric characteristic, pose a great challenge to recognition performance. Despite the availability of a broad range of presentation attack detection (PAD) or liveness detection algorithms, fingerprint sensors are vulnerable to spoofing via fake fingers. In such situations, finger dorsal images can be considered as an alternative, since they can be captured without much user cooperation and are more appropriate for outdoor security applications. In this paper, we present a first feasibility study of spoofing attack scenarios on a finger dorsal authentication system, covering four types of presentation attacks: printed paper, wrapped printed paper, scan and mobile. This study also presents a CNN-based spoofing attack detection method that employs state-of-the-art deep learning techniques along with a transfer learning mechanism. We have collected 196 real finger dorsal images from 33 subjects, captured with a Lytro camera, and have also created a set of 784 finger dorsal spoofing images. Extensive experiments demonstrate the superiority of the proposed approach for various spoofing attacks.

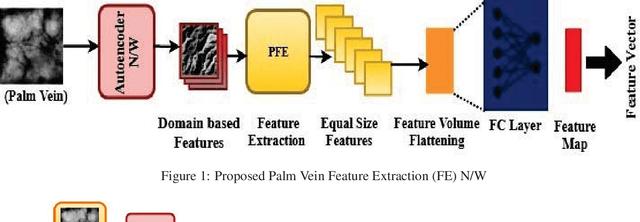

PVSNet: Palm Vein Authentication Siamese Network Trained using Triplet Loss and Adaptive Hard Mining by Learning Enforced Domain Specific Features

Dec 15, 2018

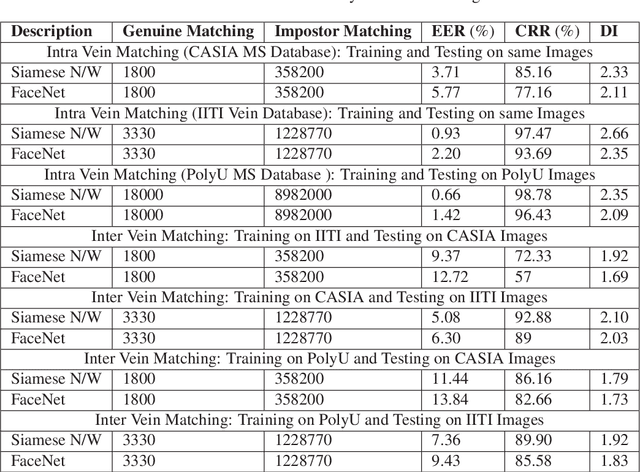

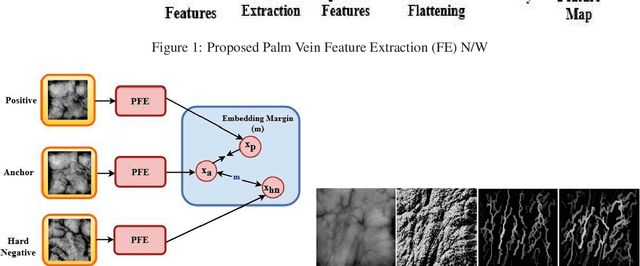

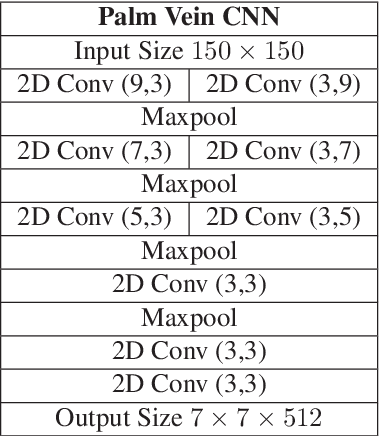

Abstract: Designing an end-to-end deep learning network to match biometric features with limited training samples is an extremely challenging task. To address this problem, we propose a new way to design an end-to-end deep CNN framework, PVSNet, that works in two major steps: first, an encoder-decoder network is used to learn generative domain-specific features; this is followed by a Siamese network whose convolutional layers are pre-trained in an unsupervised fashion as an autoencoder. The proposed model is trained via a triplet loss function adjusted to learn feature embeddings in a way that minimizes the distance between embedding pairs from the same subject and maximizes the distance to those from different subjects, with a margin. In particular, a triplet Siamese matching network using adaptive-margin-based hard negative mining is proposed. The hyper-parameters associated with the training strategy, such as the adaptive margin, have been tuned to make learning more effective on biometric datasets. In extensive experiments, the proposed network outperforms most existing deep learning solutions on three types of typical vein datasets, which clearly demonstrates the effectiveness of the proposed method.
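Hard negative mining can be illustrated by selecting, for a given anchor and positive, the negatives that still violate the margin. This is a generic sketch: the margin is passed in as a plain number, and how PVSNet adapts it during training is not stated in the abstract. The function name `mine_hard_negatives` is hypothetical.

```python
import math


def mine_hard_negatives(anchor, positive, negatives, margin):
    """Return indices of margin-violating negatives, hardest first.

    A negative violates the margin when it is closer to the anchor than
    the positive distance plus `margin`. The caller supplies `margin`;
    any adaptive schedule for it is outside this sketch.
    """
    threshold = math.dist(anchor, positive) + margin
    violating = [(math.dist(anchor, n), i)
                 for i, n in enumerate(negatives)
                 if math.dist(anchor, n) < threshold]
    # Smallest anchor-negative distance first: those are the hardest cases.
    return [i for _, i in sorted(violating)]


candidates = [[5.0, 5.0], [1.2, 0.0], [0.5, 0.0]]
hard_indices = mine_hard_negatives([0.0, 0.0], [1.0, 0.0], candidates, margin=0.5)
```

In this toy case the far-away negative is skipped because it already satisfies the margin, so training effort goes to the two negatives that are still confusable with the positive.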

FDFNet : A Secure Cancelable Deep Finger Dorsal Template Generation Network Secured via. Bio-Hashing

Dec 13, 2018

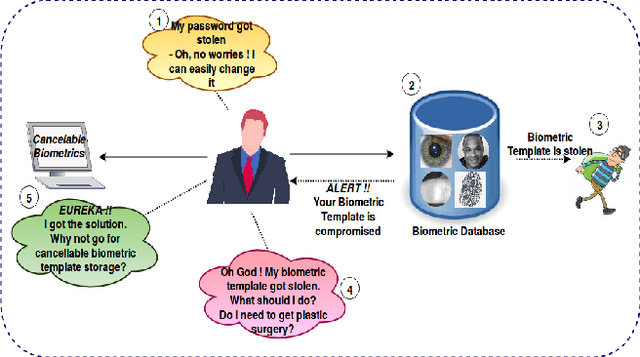

Abstract: The present world is consistently exploring the fine edges of the online and digital world, which poses multiple challenging problems and scenarios. As in the physical world, personal identity management is crucial for providing any secure online system. The last decade has seen a lot of work in this area using biometrics such as face, fingerprint and iris. Several vulnerabilities still exist, and the problem of compromised biometrics must be addressed much more seriously, since biometrics cannot be modified easily once compromised. In this work, we propose a secure cancelable finger dorsal template generation network (learning domain-specific features) secured via Bio-Hashing. The proposed system effectively protects the original finger dorsal images by withdrawing a compromised template and assigning a new one. A novel Finger-Dorsal Feature Extraction Net (FDFNet) is proposed for extracting discriminative features. This network is trained exclusively on trait-specific features without using any pre-trained architecture. Bio-Hashing, a technique based on assigning a tokenized random number to each user, is then used to hash the features extracted by FDFNet. We have tested the proposed architecture on two benchmark public finger knuckle datasets: PolyU FKP and PolyU Contactless FKI. The experimental results show the effectiveness of the proposed system in terms of security and accuracy.
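The Bio-Hashing step can be sketched in its commonly described form: the user's token seeds a random generator, the random vectors are orthonormalized, and the feature vector is projected onto them and thresholded into bits. This is an illustration, not FDFNet's implementation; the threshold of zero, the bit length, and the function name `biohash` are assumptions.

```python
import random


def biohash(features, token, n_bits):
    """Hash a feature vector into `n_bits` bits using a user-specific token.

    The token seeds a pseudo-random generator whose Gaussian vectors are
    orthonormalized with Gram-Schmidt. Each bit is the sign of the
    projection of `features` onto one basis vector (threshold 0 assumed).
    """
    dim = len(features)
    if n_bits > dim:
        raise ValueError("n_bits must not exceed the feature dimension")
    rng = random.Random(token)
    basis = []
    while len(basis) < n_bits:
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        # Remove the components along the vectors already in the basis.
        for b in basis:
            dot = sum(x * y for x, y in zip(v, b))
            v = [x - dot * y for x, y in zip(v, b)]
        norm = sum(x * x for x in v) ** 0.5
        if norm > 1e-9:  # skip a degenerate draw
            basis.append([x / norm for x in v])
    return [1 if sum(x * y for x, y in zip(features, b)) > 0.0 else 0
            for b in basis]


embedding = [0.3, -1.2, 0.8, 0.1, -0.5, 2.0, 0.7, -0.9]
template = biohash(embedding, token=1234, n_bits=4)
```

If a template is compromised, issuing the user a new token produces a different projection basis and therefore a new template from the same finger, which is what makes the scheme cancelable.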

Learning to Decode 7T-like MR Image Reconstruction from 3T MR Images

Jun 18, 2018

Abstract: The increasing demand for high-field magnetic resonance (MR) scanners indicates the need for high-quality MR images for accurate medical diagnosis. Cost constraints, however, motivate a need for algorithms that enhance images from low-field scanners. We propose an approach that processes given low-field (3T) MR image slices to reconstruct the corresponding high-field (7T-like) slices. Our framework involves a novel architecture of a merged convolutional autoencoder with a single encoder and multiple decoders. Specifically, we employ three decoders with random initializations, and the proposed training approach selects a particular decoder in each weight-update iteration for backpropagation. We demonstrate that the proposed algorithm outperforms some related contemporary methods in terms of performance and reconstruction time.

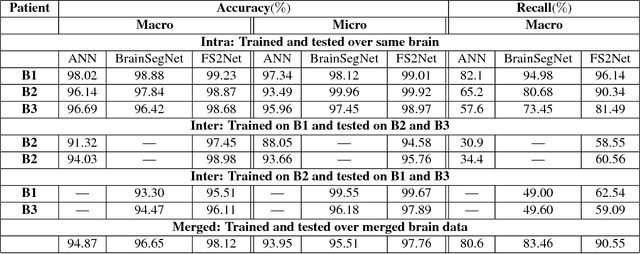

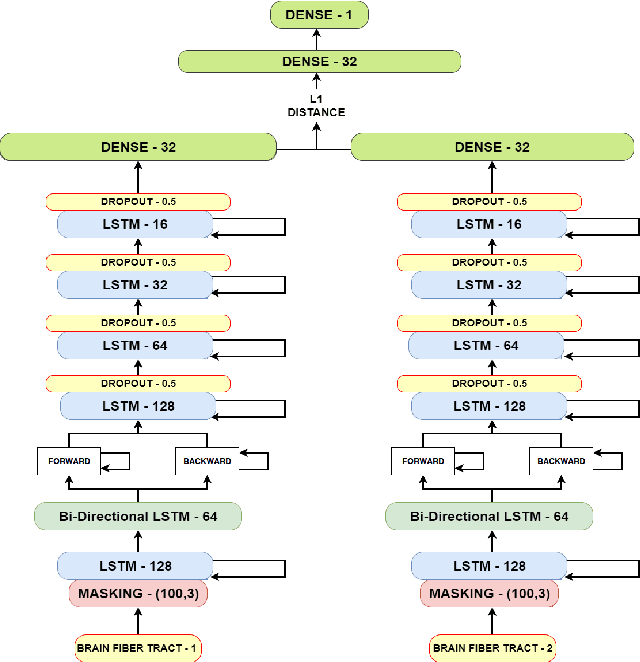

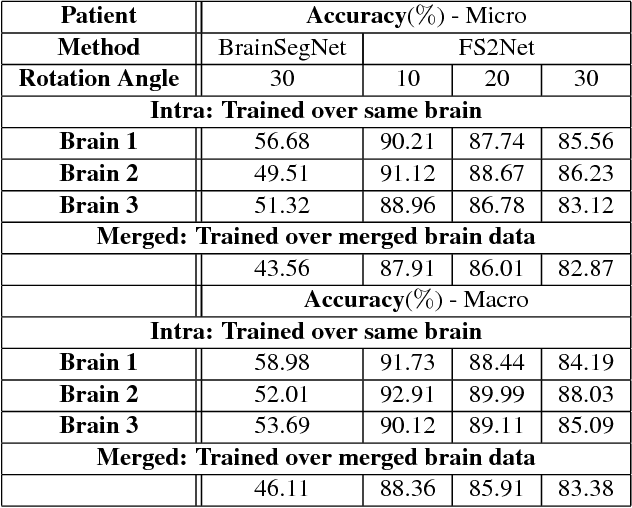

Siamese LSTM based Fiber Structural Similarity Network (FS2Net) for Rotation Invariant Brain Tractography Segmentation

Dec 28, 2017

Abstract: In this paper, we propose a novel deep learning architecture that combines stacked bidirectional LSTMs and LSTMs with the Siamese network architecture for segmenting brain fibers, obtained from tractography data, into anatomically meaningful clusters. The proposed network learns the structural differences between fibers of different classes, which enables it to classify fibers with high accuracy. Importantly, capturing such deep inter-class and intra-class structural relationships also ensures that the segmentation is robust to relative rotation between test and training data, and hence can be used with unregistered data. Our extensive experiments on the order of hundreds of thousands of fibers show that the proposed model achieves state-of-the-art results, even in cases of large relative rotations between test and training data.
