Öznur Özkasap

FedBWO: Enhancing Communication Efficiency in Federated Learning

May 07, 2025

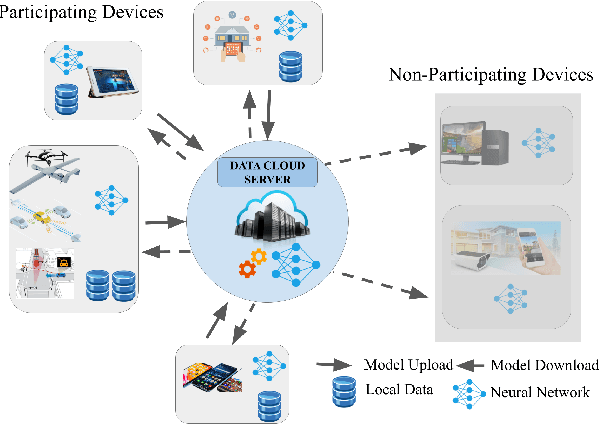

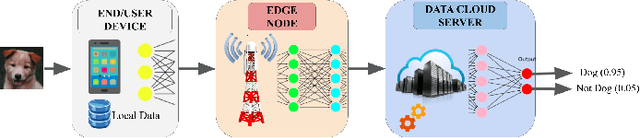

Abstract: Federated Learning (FL) is a distributed Machine Learning (ML) setup in which a shared model is collaboratively trained by various clients using their local datasets while keeping the data private. FL clients are often resource-constrained devices with restricted transmission capacity, so improving system performance requires reducing the communication between clients and the server. Current FL strategies transmit a large amount of data (model weights) during the FL process, which requires high communication bandwidth. Under these resource constraints, increasing the number of clients, and consequently the amount of transmitted data (model weights), can lead to a bottleneck. In this paper, we introduce the Federated Black Widow Optimization (FedBWO) technique to decrease the amount of transmitted data by transmitting only a performance score rather than the local model weights from clients. FedBWO employs the BWO algorithm to improve local model updates. The conducted experiments show that FedBWO improves both the performance of the global model and the communication efficiency of the overall system. According to the experimental outcomes, FedBWO enhances the global model accuracy by an average of 21% over FedAvg and 12% over FedGWO. Furthermore, FedBWO substantially decreases the communication cost compared to other methods.
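To give a rough sense of why sending a score instead of weights reduces traffic, the sketch below compares per-round upload volume under two schemes. It is a back-of-the-envelope illustration, not the paper's protocol: the function names are hypothetical, values are assumed to be 4-byte floats, and the score-based scheme assumes that every client uploads one scalar score and that the server then requests the full weights from only the single best-scoring client. The abstract does not state how the selected model reaches the server, so that last step is an assumption.

```python
def fedavg_upload_bytes(num_clients: int, num_params: int, bytes_per_value: int = 4) -> int:
    """Per-round upload when every client sends its full weight vector."""
    return num_clients * num_params * bytes_per_value


def score_based_upload_bytes(num_clients: int, num_params: int, bytes_per_value: int = 4) -> int:
    """Per-round upload when every client sends one scalar score.

    Assumption (not from the abstract): the server then pulls the full
    weight vector from only the best-scoring client.
    """
    score_traffic = num_clients * bytes_per_value   # one scalar per client
    best_model_traffic = num_params * bytes_per_value  # one full model
    return score_traffic + best_model_traffic


if __name__ == "__main__":
    clients, params = 10, 1_000_000
    print("weights from all clients:", fedavg_upload_bytes(clients, params), "bytes")
    print("scores + one model:      ", score_based_upload_bytes(clients, params), "bytes")
```

Under these assumptions, the weight-sharing cost grows with the product of client count and model size, while the score-based cost grows with their sum, which is why adding clients is far cheaper in the second scheme.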

DiabML: AI-assisted diabetes diagnosis method with meta-heuristic-based feature selection

Oct 30, 2024

Abstract: Diabetes is a chronic disorder characterized by a high blood sugar level that can cause various other disorders such as kidney failure, heart attack, blindness, and stroke. Developments in the healthcare domain that facilitate the early detection of diabetes risk can help not only caregivers but also patients. AIoMT is a recent technology that integrates IoT and machine learning methods to provide services for medical purposes, which makes it a powerful technology for the early detection of diabetes. In this paper, we take advantage of AIoMT and propose a hybrid diabetes risk detection method, DiabML, which uses the BWO algorithm and ML methods. In the pre-processing procedure, BWO is utilized for feature selection and SMOTE for imbalance handling. The simulation results show the superiority of the proposed DiabML method compared to the existing works. DiabML achieves 86.1% classification accuracy with the AdaBoost classifier, outperforming the relevant existing methods.

Blockchain-based Federated Learning for Decentralized Energy Management Systems

Jun 23, 2023

Abstract: The Internet of Energy (IoE) is a distributed paradigm that leverages smart networks and distributed system technologies to enable decentralized energy systems. In contrast to the traditional centralized energy systems, distributed Energy Internet systems comprise multiple components and communication requirements that demand innovative technologies for decentralization, reliability, efficiency, and security. Recent advances in blockchain architectures, smart contracts, and distributed federated learning technologies have opened up new opportunities for realizing decentralized Energy Internet services. In this paper, we present a comprehensive analysis and classification of state-of-the-art solutions that employ blockchain, smart contracts, and federated learning for the IoE domains. Specifically, we identify four representative system models and discuss their key aspects. These models demonstrate the diverse ways in which blockchain, smart contracts, and federated learning can be integrated to support the main domains of IoE, namely distributed energy trading and sharing, smart microgrid energy networks, and electric and connected vehicle management. Furthermore, we provide a detailed comparison of the different levels of decentralization, the advantages of federated learning, and the benefits of using blockchain for the IoE systems. Additionally, we identify open issues and areas for future research for integrating federated learning and blockchain in the Internet of Energy domains.

FLAGS Framework for Comparative Analysis of Federated Learning Algorithms

Dec 14, 2022

Abstract: Federated Learning (FL) has become a key choice for distributed machine learning. Initially focused on centralized aggregation, recent works in FL have emphasized greater decentralization to adapt to the highly heterogeneous network edge. Among these, Hierarchical, Device-to-Device and Gossip Federated Learning (HFL, D2DFL and GFL respectively) can be considered as foundational FL algorithms employing fundamental aggregation strategies. A number of FL algorithms were subsequently proposed employing multiple fundamental aggregation schemes jointly. Existing research, however, subjects the FL algorithms to varied conditions and gauges the performance of these algorithms mainly against Federated Averaging (FedAvg) only. This work consolidates the FL landscape and offers an objective analysis of the major FL algorithms through a comprehensive cross-evaluation for a wide range of operating conditions. In addition to the three foundational FL algorithms, this work also analyzes six derived algorithms. To enable a uniform assessment, a multi-FL framework named FLAGS: Federated Learning AlGorithms Simulation has been developed for rapid configuration of multiple FL algorithms. Our experiments indicate that fully decentralized FL algorithms achieve comparable accuracy under multiple operating conditions, including asynchronous aggregation and the presence of stragglers. Furthermore, decentralized FL can also operate in noisy environments and with a comparably higher local update rate. However, the impact of extremely skewed data distributions on decentralized FL is much more adverse than on centralized variants. The results indicate that it may not be necessary to restrict the devices to a single FL algorithm; rather, multi-FL nodes may operate with greater efficiency.

* 39 pages, 10 figures. Accepted for publication in Elsevier 'Internet of Things'

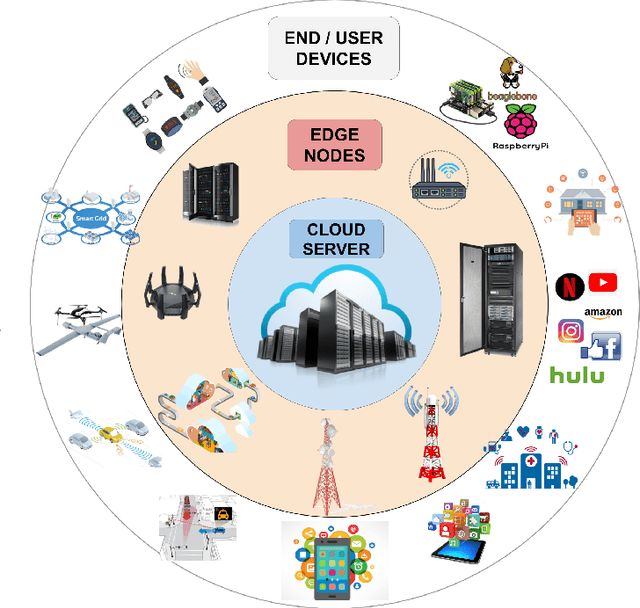

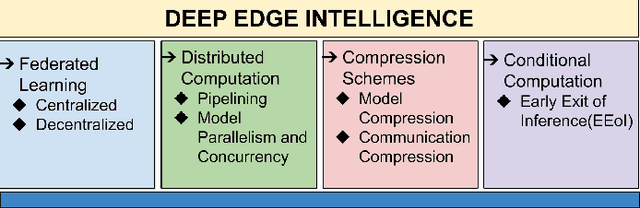

State-of-the-art Techniques in Deep Edge Intelligence

Aug 04, 2020

Abstract: The potential held by the gargantuan volumes of data being generated across networks worldwide has been truly unlocked by machine learning techniques and more recently Deep Learning. The advantages offered by the latter have seen it rapidly becoming a framework of choice for various applications. However, the centralization of computational resources and the need for data aggregation have long been limiting factors in the democratization of Deep Learning applications. Edge Computing is an emerging paradigm that aims to utilize the hitherto untapped processing resources available at the network periphery. Edge Intelligence (EI) has quickly emerged as a powerful alternative to enable learning using the concepts of Edge Computing. Deep Learning-based Edge Intelligence or Deep Edge Intelligence (DEI) lies in this rapidly evolving domain. In this article, we provide an overview of the major constraints in operationalizing DEI. The major research avenues in DEI have been consolidated under Federated Learning, Distributed Computation, Compression Schemes and Conditional Computation. We also present some of the prevalent challenges and highlight prospective research avenues.
