Simultaneous Meta-Learning with Diverse Task Spaces

Paper and Code

May 28, 2021

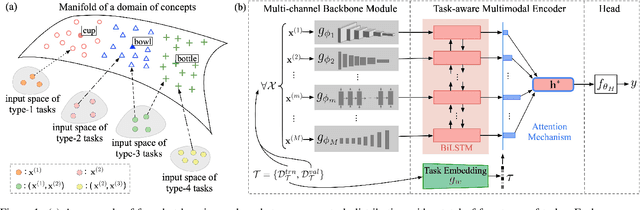

In this paper, we study how to learn a model-agnostic meta-learner that learns simultaneously from different feature spaces. Most model-agnostic meta-learning methods cannot handle multiple task spaces because the task instances share less common knowledge. This shared knowledge is reduced because tasks with different example-level manifolds cannot entirely share the same model architecture; instead, such tasks share only part of the meta-parameters. For example, consider two multi-feature tasks whose example-level manifolds contain the same subspace but whose remaining subspaces differ. The common knowledge can be the feature extractor for the common subspace, while the feature extractors for the other subspaces cannot be shared between the two tasks.
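The partial-sharing idea in the example above can be illustrated with a minimal sketch. This is not the paper's method; it only shows two tasks assembling their models from per-subspace feature extractors, so that the extractor for the common subspace is shared and the others are not. The subspace names (`image`, `text`, `audio`), the dimensions, the task names, and every helper function are hypothetical and chosen purely for illustration.

```python
import random

def init_extractor(in_dim, out_dim, rng):
    """Linear feature extractor stored as an out_dim x in_dim weight matrix."""
    return [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]

def apply_extractor(weights, x):
    """Multiply the weight matrix by the input vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

# Hypothetical feature subspaces and their input dimensions (assumption).
SUBSPACE_DIMS = {"image": 4, "text": 3, "audio": 2}

# Hypothetical tasks: both contain "image", but their other subspaces differ.
TASK_SUBSPACES = {
    "task_a": ["image", "text"],
    "task_b": ["image", "audio"],
}

rng = random.Random(0)
# One set of meta-parameters per subspace, not one per task.
meta_params = {
    name: init_extractor(dim, 2, rng) for name, dim in SUBSPACE_DIMS.items()
}

def task_params(task):
    """Select only the meta-parameters that the given task can use."""
    return {name: meta_params[name] for name in TASK_SUBSPACES[task]}

def shared_subspaces(task_1, task_2):
    """Subspaces whose extractors are common knowledge for both tasks."""
    return sorted(set(TASK_SUBSPACES[task_1]) & set(TASK_SUBSPACES[task_2]))

def embed(task, example):
    """Concatenate the features from each subspace the task uses."""
    features = []
    for name in TASK_SUBSPACES[task]:
        features.extend(apply_extractor(meta_params[name], example[name]))
    return features

example_a = {"image": [1.0, 0.0, 0.5, 0.2], "text": [0.3, 0.1, 0.9]}
example_b = {"image": [1.0, 0.0, 0.5, 0.2], "audio": [0.7, 0.4]}
emb_a = embed("task_a", example_a)
emb_b = embed("task_b", example_b)
```

Here `shared_subspaces("task_a", "task_b")` contains only the common subspace, and both tasks read the same extractor object for it, so any meta-update to that extractor would affect both tasks while the `text` and `audio` extractors stay task-specific. How such parameters would actually be meta-trained is outside the scope of this sketch.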
