Reinforcement Learning for Feedback-Enabled Cyber Resilience

Jul 02, 2021

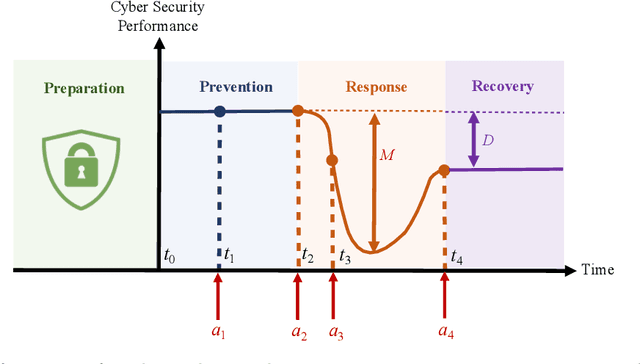

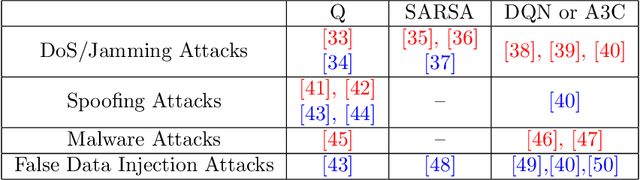

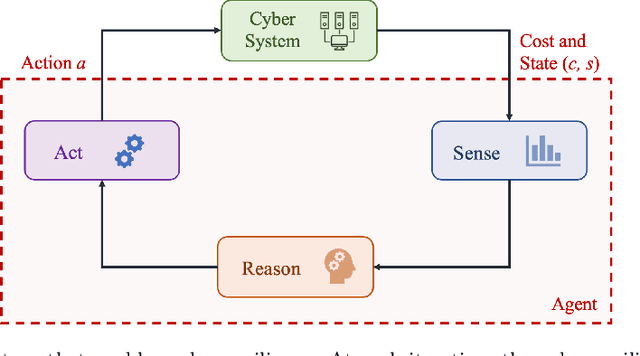

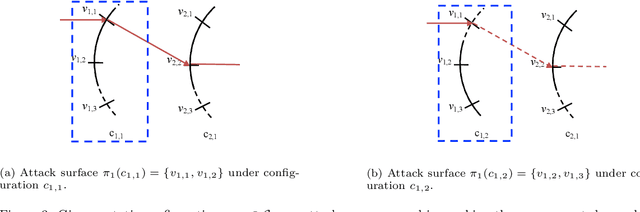

The rapid growth in the number of devices and their connectivity has enlarged the attack surface and weakened cyber systems. As attackers become increasingly sophisticated and resourceful, mere reliance on traditional cyber protection, such as intrusion detection, firewalls, and encryption, is insufficient to secure cyber systems. Cyber resilience provides a new security paradigm that complements inadequate protection with resilience mechanisms. A Cyber-Resilient Mechanism (CRM) adapts to known or zero-day threats and uncertainties in real time and strategically responds to them to maintain the critical functions of the cyber systems. Feedback architectures play a pivotal role in enabling the online sensing, reasoning, and actuation of the CRM. Reinforcement Learning (RL) is an important class of algorithms that epitomize the feedback architectures for cyber resiliency, allowing the CRM to provide dynamic and sequential responses to attacks with limited prior knowledge of the attacker. In this work, we review the literature on RL for cyber resiliency and discuss the cyber-resilient defenses against three major types of vulnerabilities, i.e., posture-related, information-related, and human-related vulnerabilities. We introduce moving target defense, defensive cyber deception, and assistive human security technologies as three application domains of CRMs to elaborate on their designs. RL itself also has vulnerabilities. We explain the major vulnerabilities of RL and present several attack models in which the attacks target the rewards, the measurements, and the actuators. We show that the attacker can trick the RL agent into learning a nefarious policy with minimal attack effort, which raises serious security concerns for RL-enabled systems. Finally, we discuss the future challenges of RL for cyber security and resiliency and emerging applications of RL-based CRMs.
