Optimal Uniform OPE and Model-based Offline Reinforcement Learning in Time-Homogeneous, Reward-Free and Task-Agnostic Settings


May 21, 2021

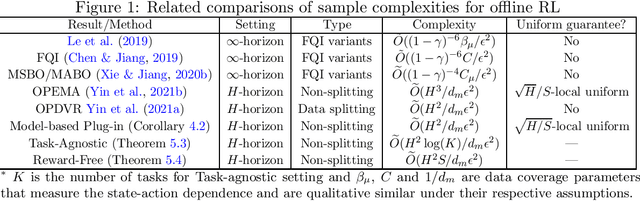

This work studies the statistical limits of uniform convergence for offline policy evaluation (OPE) with model-based methods in finite-horizon MDPs, and provides a unified view of optimal learning for several well-motivated offline tasks. Uniform OPE, $\sup_\Pi|Q^\pi-\hat{Q}^\pi|<\epsilon$ (initiated by \citet{yin2021near}), is a stronger guarantee than point-wise (fixed-policy) OPE and ensures offline policy learning when $\Pi$ contains all policies (the global policy class). We first establish an $\Omega(H^2 S/d_m\epsilon^2)$ lower bound (over the model-based family) for global uniform OPE, where $d_m$ is the minimal state-action probability induced by the behavior policy. Our main result then establishes an episode complexity of $\tilde{O}(H^2/d_m\epsilon^2)$ for \emph{local} uniform convergence, which applies to all \emph{near-empirically optimal} policies for MDPs with \emph{stationary} transitions. This result implies the optimal sample complexity for offline learning and separates local uniform OPE from the global case by the extra factor of $S$. Importantly, the model-based method, combined with our new analysis technique (the singleton absorbing MDP), can be adapted to two new settings, offline task-agnostic and offline reward-free learning, with optimal complexities $\tilde{O}(H^2\log(K)/d_m\epsilon^2)$ (where $K$ is the number of tasks) and $\tilde{O}(H^2S/d_m\epsilon^2)$ respectively, providing a unified framework for simultaneously solving different offline RL problems.
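To make the model-based setting concrete, the following is a minimal sketch of a plug-in estimator for a tabular, finite-horizon MDP with stationary transitions: transition counts are pooled across all time steps, and $\hat{Q}^\pi$ is then computed by backward induction in the estimated model. It is an illustration under stated assumptions, not the paper's implementation. The function names `estimate_model` and `evaluate_policy`, the `(s, a, s_next)` episode format, the known reward table `r`, and the zero-row convention for unvisited state-action pairs are all hypothetical choices made here.

```python
import numpy as np


def estimate_model(episodes, S, A):
    """Empirical transition kernel from offline episodes.

    Each episode is a list of (s, a, s_next) tuples. Counts are pooled
    across time steps, which is only valid for stationary transitions.
    """
    counts = np.zeros((S, A, S))
    for episode in episodes:
        for s, a, s_next in episode:
            counts[s, a, s_next] += 1
    n_sa = counts.sum(axis=2, keepdims=True)
    # Assumed convention: unvisited (s, a) pairs keep an all-zero row.
    return np.divide(counts, n_sa, out=np.zeros_like(counts), where=n_sa > 0)


def evaluate_policy(P, r, pi, H):
    """Q-values of a deterministic policy by backward induction.

    P:  (S, A, S) transition kernel (true or estimated).
    r:  (S, A) reward table, assumed known and time-independent.
    pi: (H, S) integer array, pi[h, s] is the action taken at step h.
    Returns Q with shape (H, S, A).
    """
    S, A = r.shape
    Q = np.zeros((H, S, A))
    V_next = np.zeros(S)  # value after the final step is zero
    for h in reversed(range(H)):
        Q[h] = r + P @ V_next                 # (S, A, S) @ (S,) -> (S, A)
        V_next = Q[h][np.arange(S), pi[h]]    # V_h(s) = Q_h(s, pi_h(s))
    return Q
```

Given a true kernel `P`, the point-wise OPE error for one policy would be `np.abs(evaluate_policy(P, r, pi, H) - evaluate_policy(P_hat, r, pi, H)).max()`; the uniform OPE quantity $\sup_\Pi|Q^\pi-\hat{Q}^\pi|$ takes the supremum of this error over every policy in $\Pi$.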
