Leveraging inductive bias of neural networks for learning without explicit human annotations


Oct 31, 2019

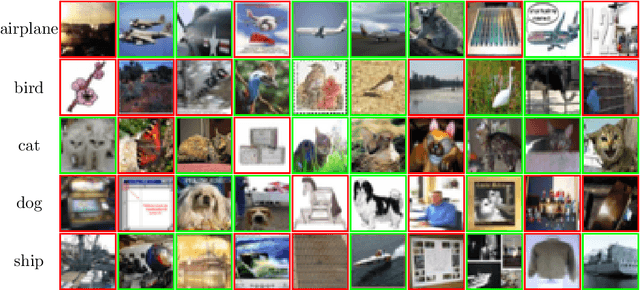

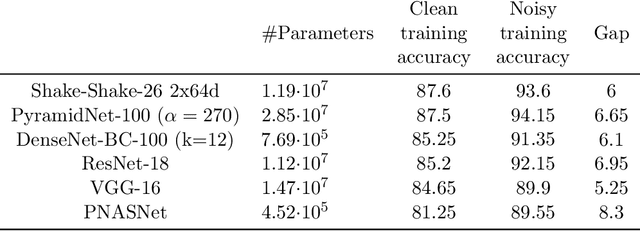

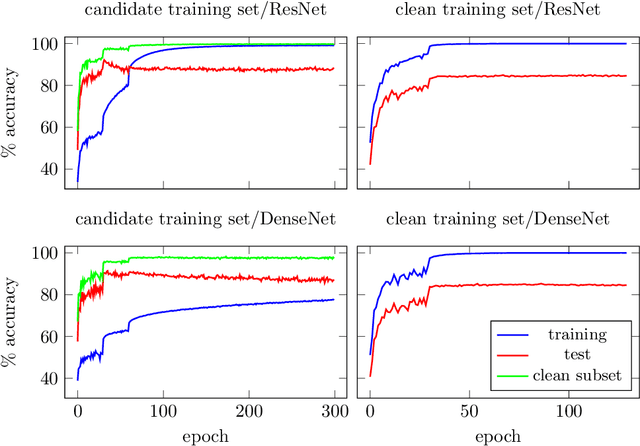

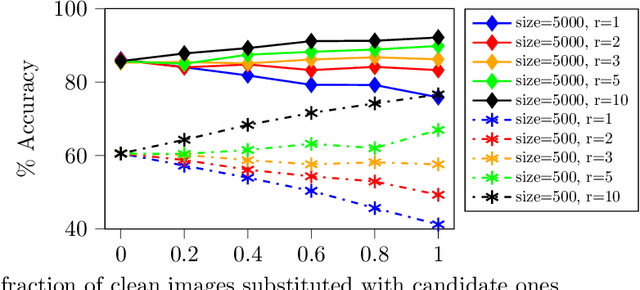

Classification problems today are often solved in three steps: first, collecting examples along with candidate labels; second, obtaining clean labels from human workers; and third, training a large, overparameterized deep neural network on the clean examples. The second step, manual labeling, is often the most expensive because it requires going through every example by hand. In this paper we propose to i) skip the manual labeling step entirely, ii) train the deep neural network directly on the noisy candidate labels, and iii) stop the training early to avoid overfitting. This procedure exploits an intriguing property of large overparameterized neural networks: while they are capable of perfectly fitting the noisy data, gradient descent fits clean labels faster than noisy ones. Thus, training on noisy labels with early stopping resembles training on the clean labels only. Our results show that early stopping the training of standard deep networks (such as ResNet-18) on a subset of the Tiny Images dataset (which is obtained without any explicit human labels, and in which only about half of the labels are correct) gives significantly higher test performance than training on the clean CIFAR-10 training dataset (which is obtained by labeling a subset of the Tiny Images dataset). In addition, our results show that the noise generated through the label collection process is not nearly as adversarial for learning as the noise generated by randomly flipping labels, which is the noise most prevalent in works demonstrating the noise robustness of neural networks.
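The three-step procedure (skip manual labeling, train on noisy labels, stop early) can be illustrated with a minimal sketch. This is not the paper's code: it swaps ResNet-18 for a toy logistic regression trained by full-batch gradient descent in NumPy, and it assumes the stopping point is chosen by the loss on a held-out split that carries the same noisy labels, which the abstract does not specify. The helper names `flip_labels` and `train_early_stopped`, and all hyperparameter defaults, are hypothetical. Note also that `flip_labels` produces exactly the random-flip noise that the abstract describes as harsher than the noise arising from real label collection.

```python
import numpy as np


def sigmoid(z):
    """Elementwise logistic function."""
    return 1.0 / (1.0 + np.exp(-z))


def log_loss(w, X, y):
    """Mean binary cross-entropy of a linear model with weights w."""
    p = np.clip(sigmoid(X @ w), 1e-12, 1.0 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


def flip_labels(y, flip_prob, rng):
    """Simulate noisy candidate labels by flipping each 0/1 label
    independently with probability flip_prob (an assumed noise model)."""
    flips = rng.random(y.shape) < flip_prob
    return np.where(flips, 1.0 - y, y)


def train_early_stopped(X_tr, y_tr, X_val, y_val,
                        lr=0.5, max_epochs=500, patience=20):
    """Gradient descent on noisy labels with early stopping.

    Returns the weights from the epoch with the lowest validation loss
    and the index of that epoch (0 means the initial weights).
    """
    w = np.zeros(X_tr.shape[1])
    best_w, best_epoch = w.copy(), 0
    best_loss = log_loss(w, X_val, y_val)
    for epoch in range(1, max_epochs + 1):
        # Gradient of the mean cross-entropy with respect to w.
        grad = X_tr.T @ (sigmoid(X_tr @ w) - y_tr) / len(y_tr)
        w = w - lr * grad
        val_loss = log_loss(w, X_val, y_val)
        if val_loss < best_loss:
            best_w, best_loss, best_epoch = w.copy(), val_loss, epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs: stop training.
            break
    return best_w, best_epoch
```

A typical use would be to generate or load features `X`, derive noisy labels (for instance with `flip_labels`), split them into training and validation parts, and call `train_early_stopped` on the two parts. Keeping the best weights rather than the last ones is a design choice that makes the result insensitive to the exact `patience` value.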
