Analysis of hidden feedback loops in continuous machine learning systems


Jan 17, 2021

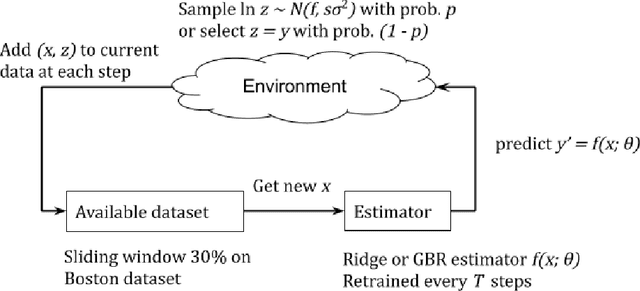

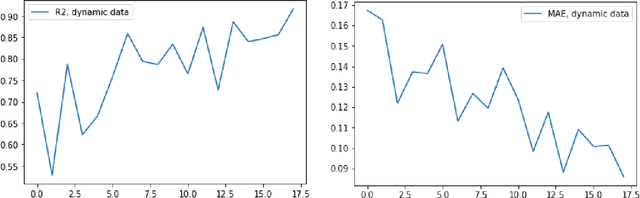

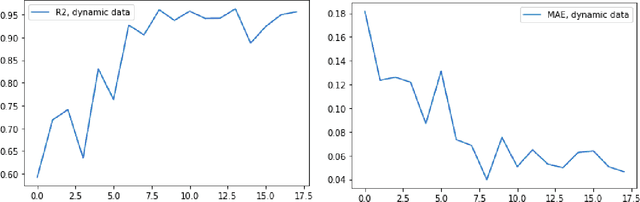

In this concept paper, we discuss the intricacies of specifying and verifying the quality of continuous and lifelong learning artificial intelligence systems, which interact with and influence their environment and thereby cause so-called concept drift. We highlight the problem of implicit feedback loops and demonstrate how they interfere with user behavior in an exemplary housing price prediction system. Based on a preliminary model, we identify the conditions under which such feedback loops arise and discuss possible solution approaches.
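To make the idea of an implicit feedback loop concrete, the following is a minimal toy sketch and not the model used in the paper. It assumes a one-feature linear price model, a hypothetical `adherence` parameter (the probability that a seller simply lists at the predicted price), and retraining on the latest batch of observed prices only. All names and numeric constants are illustrative assumptions.

```python
import random
import statistics


def fit_slope(xs, ys):
    """Least-squares slope for a line through the origin."""
    return sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)


def simulate(adherence, rounds=20, batch=200, seed=0):
    """Return the spread of observed residuals after each retraining round.

    adherence: assumed probability that a seller adopts the model's
    predicted price instead of setting their own.
    """
    rng = random.Random(seed)
    # Initial training data from the assumed "true" market:
    # price = 2 * size + Gaussian noise.
    xs = [rng.uniform(1.0, 5.0) for _ in range(batch)]
    ys = [2.0 * x + rng.gauss(0.0, 1.0) for x in xs]
    spreads = []
    for _ in range(rounds):
        slope = fit_slope(xs, ys)
        new_xs = [rng.uniform(1.0, 5.0) for _ in range(batch)]
        new_ys = []
        for x in new_xs:
            own_price = 2.0 * x + rng.gauss(0.0, 1.0)
            predicted = slope * x
            # The feedback loop: some observed prices are the model's
            # own predictions, fed back as future training labels.
            new_ys.append(predicted if rng.random() < adherence else own_price)
        residuals = [y - slope * x for x, y in zip(new_xs, new_ys)]
        spreads.append(statistics.pstdev(residuals))
        # Retrain on the most recent observations only.
        xs, ys = new_xs, new_ys
    return spreads


if __name__ == "__main__":
    for a in (0.0, 0.5, 0.8):
        print(a, round(simulate(a)[-1], 3))
```

In this toy setup, the measured residual spread shrinks as `adherence` grows, even though the model has not become any better at predicting prices that sellers would set on their own. This kind of effect makes such loops hard to detect from standard error metrics alone.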

* Soft. Qual.: Fut. Persp. on Soft. Eng. Q. SWQD 2021. LNBIP, V. 404
* 7 pages, 9 figures; added more experiments, minor stylistic fixes and typos
