Adaptive Estimation of Multivariate Piecewise Polynomials and Bounded Variation Functions by Optimal Decision Trees

Paper and Code

Dec 14, 2019

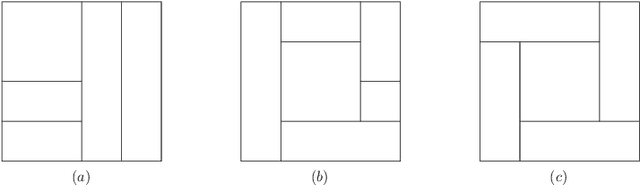

Proposed by \cite{donoho1997cart}, Dyadic CART is a nonparametric regression method which computes a globally optimal dyadic decision tree and fits piecewise constant functions in two dimensions. In this article we define and study Dyadic CART and a closely related estimator, namely Optimal Regression Tree (ORT), in the context of estimating piecewise smooth functions in general dimensions. More precisely, these optimal decision tree estimators fit piecewise polynomials of any given degree. We argue that, like Dyadic CART in two dimensions, these estimators can also be computed in time polynomial in the sample size via dynamic programming. We prove oracle inequalities for the finite sample risk of Dyadic CART and ORT which imply tight risk bounds for several function classes of interest. First, they imply that the finite sample risk of ORT of order $r \geq 0$ is always bounded by $C k \frac{\log N}{N}$ (where $N$ is the sample size) whenever the regression function is piecewise polynomial of degree $r$ on some reasonably regular axis-aligned rectangular partition of the domain with at most $k$ rectangles. Beyond the univariate case, such guarantees are scarcely available in the literature for computationally efficient estimators. Second, our oracle inequalities uncover minimax rate optimality and adaptivity of the Dyadic CART estimator for function spaces with bounded variation. We consider two function spaces of recent interest where multivariate total variation denoising and univariate trend filtering are the state-of-the-art methods. We show that Dyadic CART enjoys certain advantages over these estimators while still maintaining all their known guarantees.
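
To make the dynamic programming idea concrete, here is a minimal sketch in the simplest setting: one dimension, piecewise constant fits, and a sample size that is a power of two. It assumes the estimator minimizes the residual sum of squares plus a penalty `lam` per piece over all recursive dyadic partitions; the function name `dyadic_cart_1d`, the parameter `lam`, and the tie-breaking rule are illustrative choices, not taken from the paper. In one dimension each dyadic interval is reached exactly once, so plain recursion suffices; in higher dimensions the same rectangle can be reached through different split sequences, and the sub-problem costs would need to be memoized.

```python
import numpy as np

def dyadic_cart_1d(y, lam):
    """Piecewise-constant fit minimizing RSS + lam * (number of pieces)
    over recursive dyadic partitions of the index range [0, n)."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    if n == 0 or n & (n - 1):
        raise ValueError("len(y) must be a power of two")

    # Prefix sums let us compute the RSS of any interval in O(1).
    s1 = np.concatenate(([0.0], np.cumsum(y)))
    s2 = np.concatenate(([0.0], np.cumsum(y * y)))

    def solve(lo, hi):
        # Returns (optimal penalized cost, list of pieces) for [lo, hi).
        m = hi - lo
        total = s1[hi] - s1[lo]
        rss = max(s2[hi] - s2[lo] - total * total / m, 0.0)
        leaf_cost = rss + lam          # cost of not splitting this interval
        if m == 1:
            return leaf_cost, [(lo, hi)]
        mid = (lo + hi) // 2           # the only admissible (dyadic) split
        cost_l, pieces_l = solve(lo, mid)
        cost_r, pieces_r = solve(mid, hi)
        if cost_l + cost_r < leaf_cost:
            return cost_l + cost_r, pieces_l + pieces_r
        return leaf_cost, [(lo, hi)]   # ties favor the coarser partition

    _, pieces = solve(0, n)
    fit = np.empty(n)
    for lo, hi in pieces:
        fit[lo:hi] = (s1[hi] - s1[lo]) / (hi - lo)  # mean on each piece
    return fit, pieces
```

For example, `dyadic_cart_1d([0, 0, 0, 0, 5, 5, 5, 5], lam=1.0)` selects the two pieces `(0, 4)` and `(4, 8)`, while a very large `lam` collapses the fit to a single constant. The recursion visits $2n - 1$ intervals, so this sketch runs in time linear in the sample size.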
