Zeyun Yu

Department of Computer Science, University of Wisconsin-Milwaukee, Milwaukee, WI, USA

Automatic tracing of mandibular canal pathways using deep learning

Nov 30, 2021

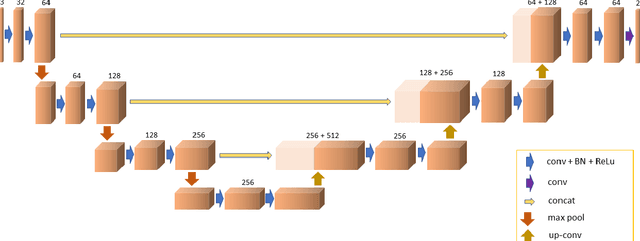

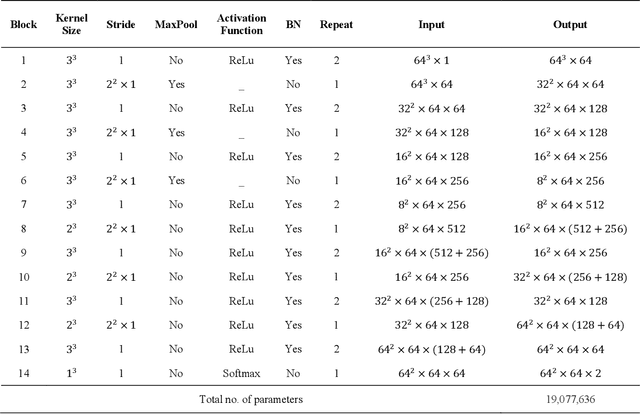

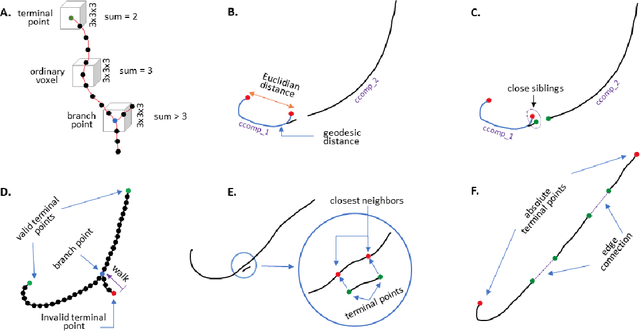

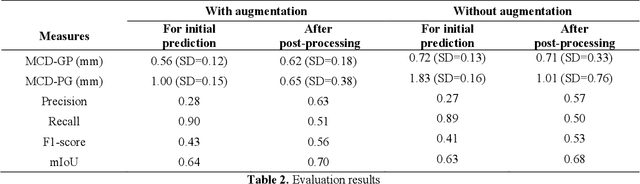

Abstract: There is an increasing demand in the medical industry for automated detection and localization systems, since performing these tasks manually is inefficient. In dentistry, there is great interest in tracing the pathway of the mandibular canals accurately. Proper localization of the mandibular canal, which surrounds the inferior alveolar nerve (IAN), reduces the risk of damaging the nerve during dental implantology. Manual detection of canal paths is inefficient in terms of time and labor. Here, we propose a deep learning-based framework to detect mandibular canals from CBCT data. It is a fully automatic, end-to-end, three-stage process. Ground truths are generated in the preprocessing stage. Instead of the commonly used fixed-diameter tubular ground truth, we generate centerlines of the mandibular canals and use them as ground truths in the training process. A 3D U-Net architecture is used for model training. An efficient post-processing stage is developed to rectify the initial prediction. Precision, recall, F1-score, and IoU are measured to analyze the voxel-level segmentation performance. For distance-based evaluation, the mean curve distance (MCD) is calculated both from ground truth to prediction and from prediction to ground truth. Extensive experiments are conducted to demonstrate the effectiveness of the model.
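The evaluation metrics named in this abstract can be illustrated with a minimal sketch. It assumes that segmentations are given as collections of foreground voxel coordinates and that the mean curve distance is the average nearest-point Euclidean distance from one curve to the other; the abstract does not give the exact definition, so the function names and that definition are assumptions rather than the authors' implementation.

```python
import math


def voxel_metrics(pred, truth):
    """Precision, recall, F1 and IoU for two collections of foreground voxels."""
    pred, truth = set(pred), set(truth)
    tp = len(pred & truth)   # voxels marked in both
    fp = len(pred - truth)   # predicted but not in the ground truth
    fn = len(truth - pred)   # in the ground truth but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


def mean_curve_distance(source, target):
    """Mean distance from each point of `source` to its nearest point in `target`.

    Assumed definition; the measure is directional, so it is evaluated in
    both directions (ground truth to prediction and prediction to ground truth).
    """
    if not source or not target:
        raise ValueError("both curves need at least one point")
    return sum(min(math.dist(p, q) for q in target) for p in source) / len(source)


# Toy centerlines (illustrative coordinates only, not data from the paper).
truth = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (3, 0, 0)]
pred = [(0, 0, 0), (1, 0, 0), (2, 1, 0)]

metrics = voxel_metrics(pred, truth)
mcd_gt_to_pred = mean_curve_distance(truth, pred)
mcd_pred_to_gt = mean_curve_distance(pred, truth)
```

Reporting both directions matters because a prediction that covers only part of the canal can score well from prediction to ground truth while scoring poorly in the opposite direction.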

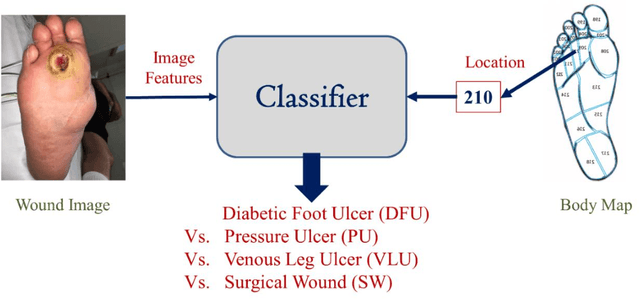

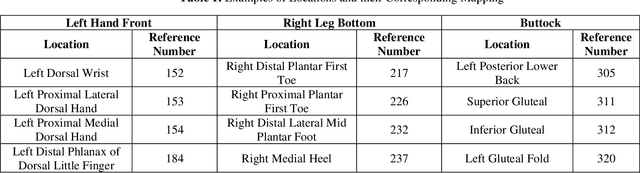

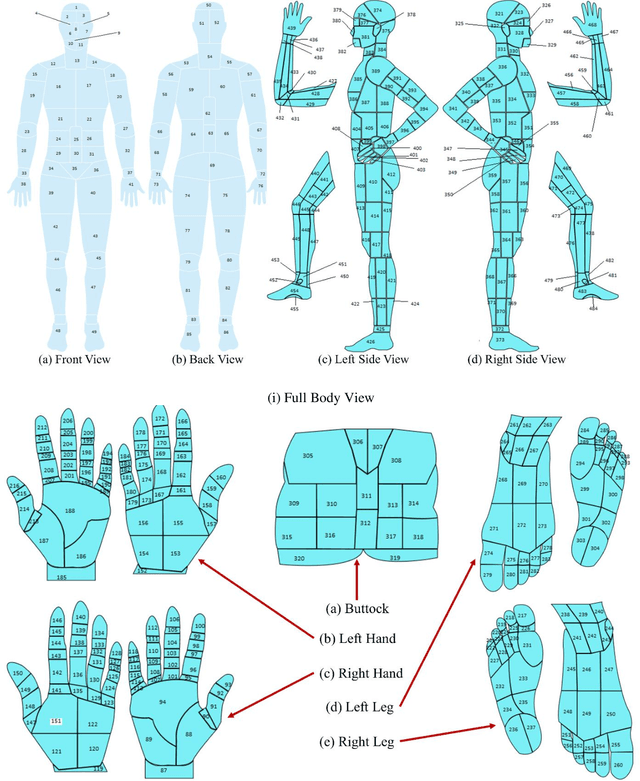

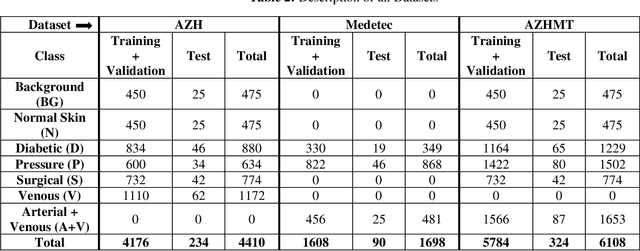

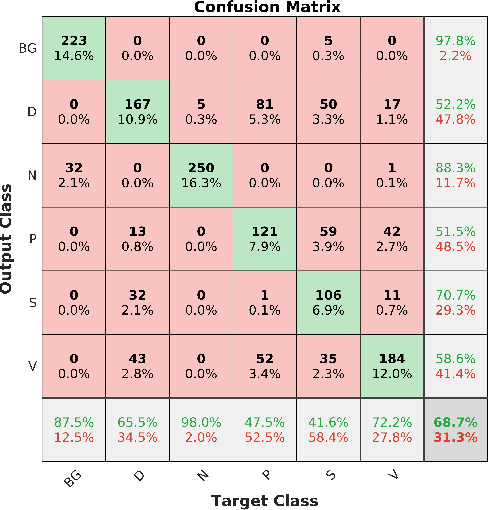

Multi-modal Wound Classification using Wound Image and Location by Deep Neural Network

Sep 14, 2021

Abstract: Wound classification is an essential step of wound diagnosis. An efficient classifier can assist wound specialists in classifying wound types with lower financial and time costs and help them decide on an optimal treatment procedure. This study developed a deep neural network-based multi-modal classifier using wound images and their corresponding locations to categorize wound images into multiple classes, including diabetic, pressure, surgical, and venous ulcers. A body map is also developed to prepare the location data, which can help wound specialists tag wound locations more efficiently. Three datasets containing images and their corresponding location information are designed with the help of wound specialists. The multi-modal network is developed by concatenating the image-based and location-based classifiers' outputs with some other modifications. The maximum accuracy on mixed-class classifications (containing background and normal skin) varies from 77.33% to 100% across different experiments. The maximum accuracy on wound-class classifications (containing only diabetic, pressure, surgical, and venous) varies from 72.95% to 98.08% across different experiments. The proposed multi-modal network also shows a significant improvement over previous work in the literature.
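The fusion step described in this abstract, concatenating the outputs of the image-based and location-based classifiers, can be sketched as follows. This is only an illustration: the final linear layer, its weights, and the function name `fuse_and_classify` are hypothetical, and the "other modifications" mentioned in the abstract are not modeled.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_classify(image_out, location_out, weights, bias):
    """Concatenate two classifier outputs and apply one linear layer plus softmax.

    `weights` has one row per class and one column per concatenated feature.
    In a real network these values would be learned; here they are placeholders.
    """
    features = list(image_out) + list(location_out)
    logits = [sum(w * x for w, x in zip(row, features)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# Placeholder outputs for a two-class toy problem (not results from the paper).
image_out = [0.7, 0.3]       # image-based classifier scores
location_out = [0.2, 0.8]    # location-based classifier scores
weights = [[1.0, 0.0, 1.0, 0.0],
           [0.0, 1.0, 0.0, 1.0]]
bias = [0.0, 0.0]

probabilities = fuse_and_classify(image_out, location_out, weights, bias)
predicted_class = probabilities.index(max(probabilities))
```

In this toy case the location scores outweigh the image scores, so the fused prediction differs from what the image classifier alone would choose.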

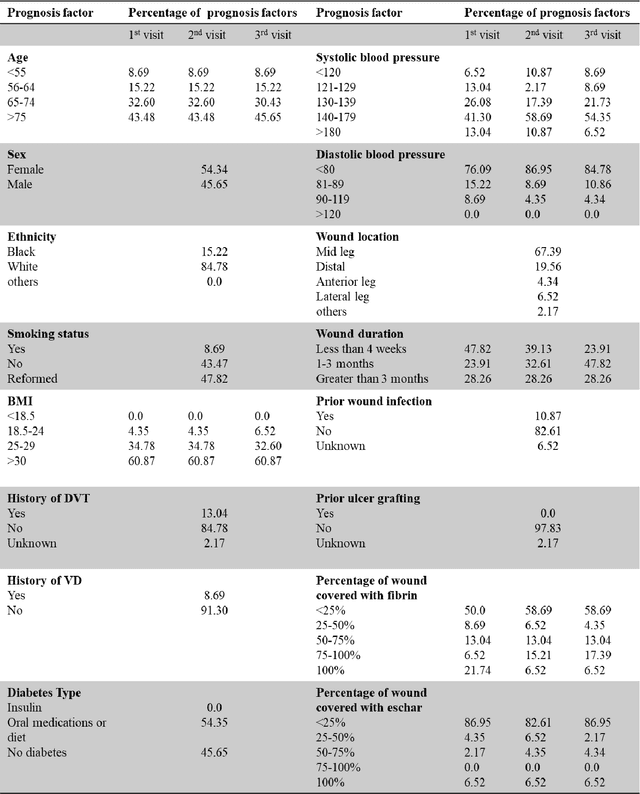

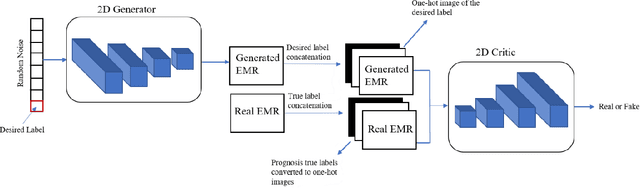

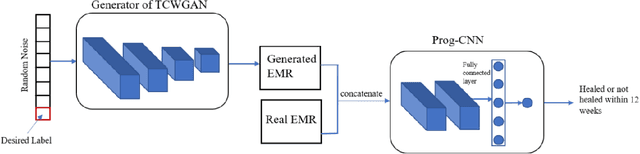

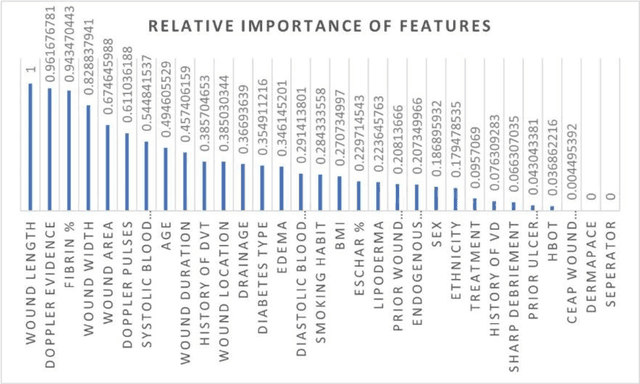

Synthesizing time-series wound prognosis factors from electronic medical records using generative adversarial networks

May 03, 2021

Abstract: Wound prognostic models not only provide an estimate of wound healing time to motivate patients to follow up on their treatments but can also help clinicians decide whether to use standard care or adjuvant therapies and assist them with designing clinical trials. However, collecting prognosis factors from Electronic Medical Records (EMR) of patients is challenging due to privacy, sensitivity, and confidentiality. In this study, we developed time series medical generative adversarial networks (GANs) to generate synthetic wound prognosis factors using very limited information collected during routine care in a specialized wound care facility. The generated prognosis variables are used in developing a predictive model for chronic wound healing trajectory. Our novel medical GAN can produce both continuous and categorical features from EMR. Moreover, we applied temporal information to our model by considering data collected from the weekly follow-ups of patients. Conditional training strategies were utilized to enhance training and generate classified data in terms of healing or non-healing. The ability of the proposed model to generate realistic EMR data was evaluated by TSTR (test on the synthetic, train on the real), discriminative accuracy, and visualization. We utilized samples generated by our proposed GAN in training a prognosis model to demonstrate its real-life application. Using the generated samples in training predictive models improved the classification accuracy by 6.66-10.01% compared to the previous EMR-GAN. Additionally, the suggested prognosis classifier has achieved an area under the curve (AUC) of 0.975, 0.968, and 0.849 when training the network using data from the first three visits, first two visits, and first visit, respectively. These results indicate a significant improvement in wound healing prediction compared to the previous prognosis models.

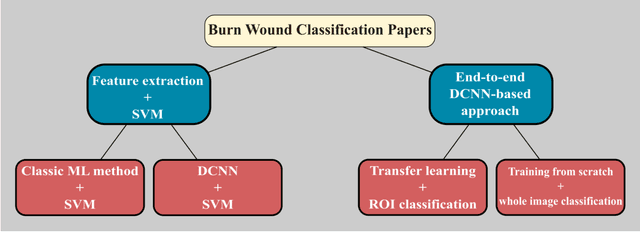

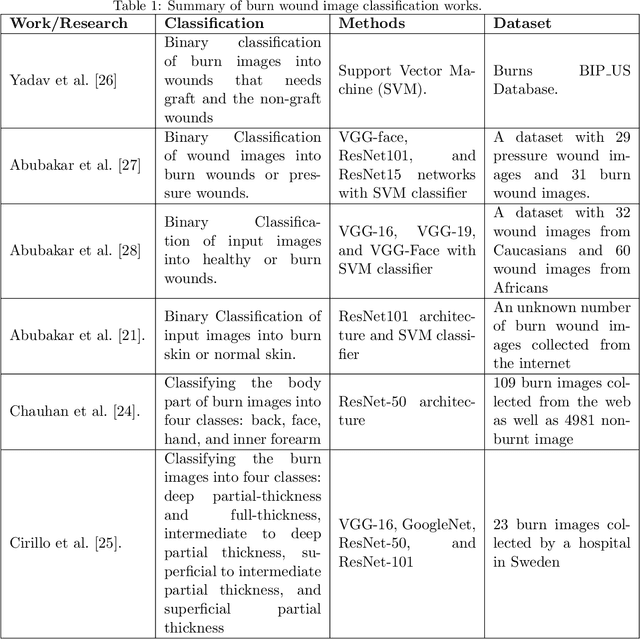

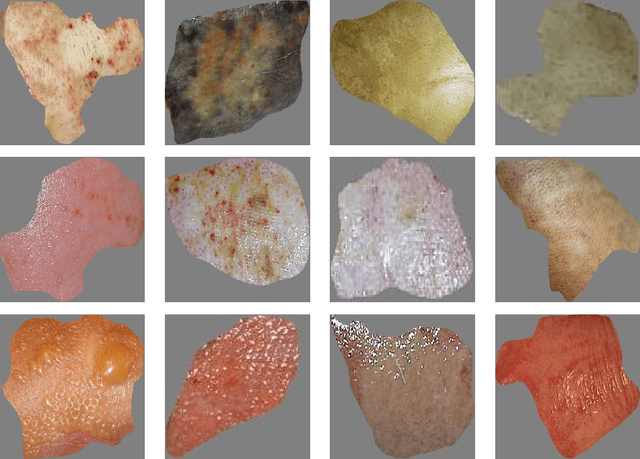

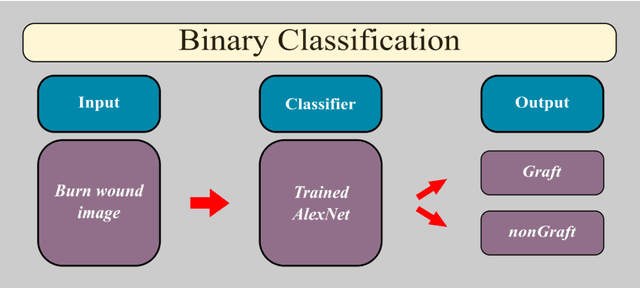

Multiclass Burn Wound Image Classification Using Deep Convolutional Neural Networks

Mar 01, 2021

Abstract: Millions of people are affected by acute and chronic wounds yearly across the world. Continuous wound monitoring is important for wound specialists to allow more accurate diagnosis and optimization of management protocols. Machine learning-based classification approaches provide optimal care strategies resulting in more reliable outcomes, cost savings, healing time reduction, and improved patient satisfaction. In this study, we use a deep learning-based method to classify burn wound images into two or three different categories based on the wound conditions. A pre-trained deep convolutional neural network, AlexNet, is fine-tuned using a burn wound image dataset and utilized as the classifier. The classifier's performance is evaluated using classification metrics such as accuracy, precision, and recall, as well as a confusion matrix. A comparison with previous works that used the same dataset showed that our designed classifier improved the classification accuracy by more than 8%.

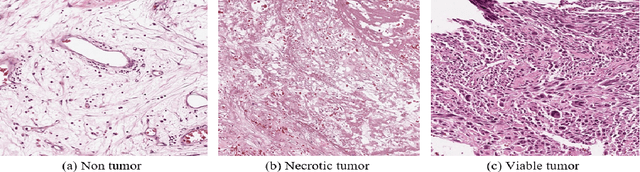

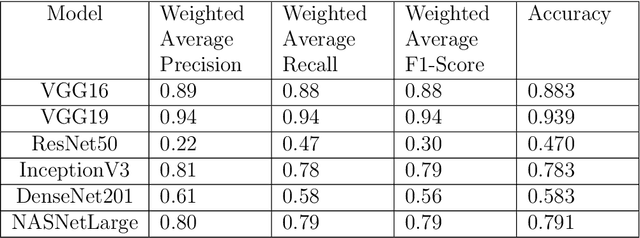

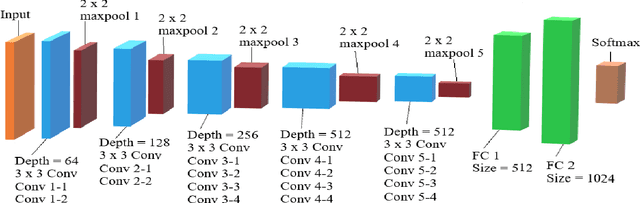

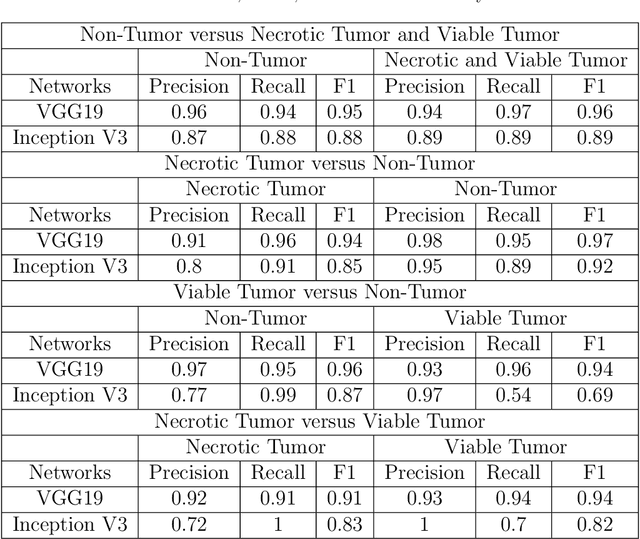

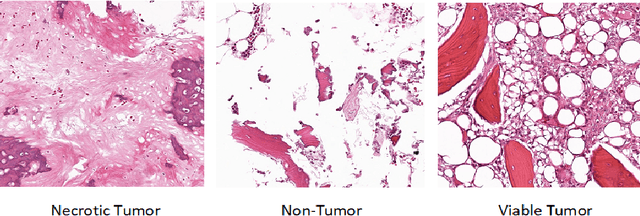

A Deep Learning Study on Osteosarcoma Detection from Histological Images

Nov 02, 2020

Abstract: In the U.S., 5-10% of new pediatric cases of cancer are primary bone tumors. The most common type of primary malignant bone tumor is osteosarcoma. The intention of the present work is to improve the detection and diagnosis of osteosarcoma using computer-aided detection (CAD) and diagnosis (CADx). Tools such as convolutional neural networks (CNNs) can significantly decrease the surgeon's workload and lead to a better prognosis of patient conditions. CNNs need to be trained on a large amount of data in order to achieve a more trustworthy performance. In this study, transfer learning techniques using pre-trained CNNs are adapted to a public dataset of osteosarcoma histological images to distinguish necrotic images from non-necrotic and healthy tissues. First, the dataset is preprocessed, and different classifications are applied. Then, transfer learning models including VGG19 and Inception V3 are used and trained on Whole Slide Images (WSI) with no patches, to improve the accuracy of the outputs. Finally, the models are applied to different classification problems, including binary and multi-class classifiers. Experimental results show that VGG19 achieves the highest accuracy, 96%, across all binary and multi-class classification tasks. Our fine-tuned model demonstrates state-of-the-art performance on detecting malignancy of osteosarcoma based on histologic images.

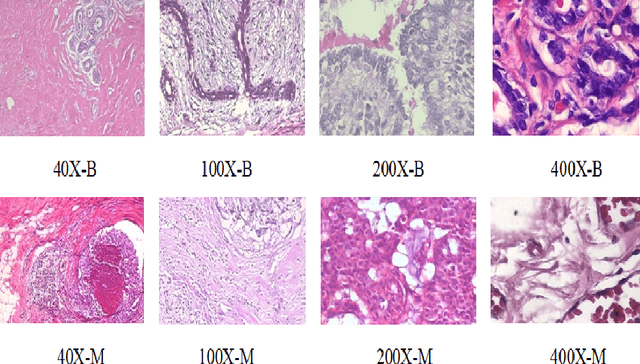

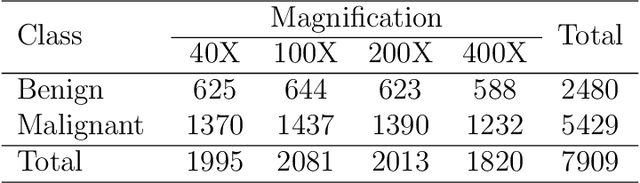

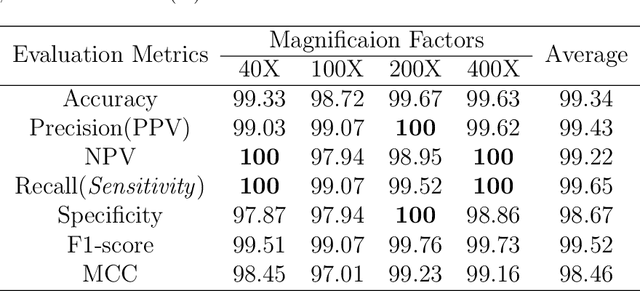

C-Net: A Reliable Convolutional Neural Network for Biomedical Image Classification

Oct 30, 2020

Abstract: Cancers are the leading cause of death in many developed countries. Early diagnosis plays a crucial role in having proper treatment for this debilitating disease. The automated classification of the type of cancer is a challenging task since pathologists must examine a huge number of histopathological images to detect infinitesimal abnormalities. In this study, we propose a novel convolutional neural network (CNN) architecture composed of a Concatenation of multiple Networks, called C-Net, to classify biomedical images. In contrast to conventional deep learning models in biomedical image classification, which utilize transfer learning to solve the problem, no prior knowledge is employed. The model incorporates multiple CNNs including Outer, Middle, and Inner. The first two parts of the architecture contain six networks that serve as feature extractors to feed into the Inner network to classify the images in terms of malignancy and benignancy. The C-Net is applied for histopathological image classification on two public datasets, including BreakHis and Osteosarcoma. To evaluate the performance, the model is tested using several evaluation metrics for its reliability. The C-Net model outperforms all other models on the individual metrics for both datasets and achieves zero misclassification.

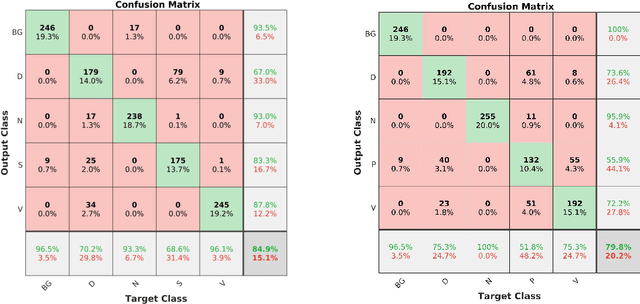

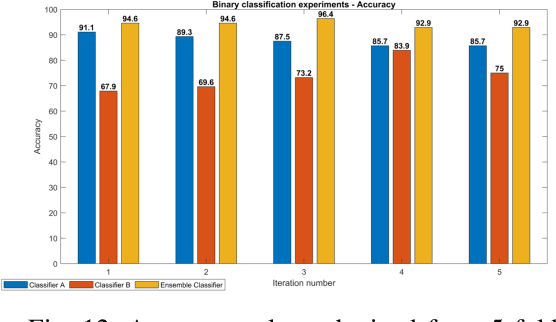

Multiclass Wound Image Classification using an Ensemble Deep CNN-based Classifier

Oct 19, 2020

Abstract: Acute and chronic wounds are a challenge to healthcare systems around the world and affect many people's lives annually. Wound classification is a key step in wound diagnosis that helps clinicians identify an optimal treatment procedure. Hence, a high-performance classifier assists specialists in the field in classifying wounds with lower financial and time costs. Different machine learning and deep learning-based wound classification methods have been proposed in the literature. In this study, we have developed an ensemble Deep Convolutional Neural Network-based classifier to classify wound images, including surgical, diabetic, and venous ulcers, into multiple classes. The output classification scores of two classifiers (patch-wise and image-wise) are fed into a Multi-Layer Perceptron to provide a superior classification performance. A 5-fold cross-validation approach is used to evaluate the proposed method. We obtained maximum and average classification accuracy values of 96.4% and 94.28% for binary and 91.9% and 87.7% for 3-class classification problems. The results show that our proposed method can be used effectively as a decision support system in classification of wound images or other related clinical applications.
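The 5-fold cross-validation protocol mentioned in this abstract can be sketched as an index split in which every sample is held out exactly once. The abstract does not say whether the authors shuffled or stratified their folds, so this sketch uses plain contiguous folds, and the helper name `k_fold_indices` is hypothetical.

```python
def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, test_indices) for k contiguous folds.

    Fold sizes differ by at most one sample. No shuffling or stratification
    is applied; both are assumptions left out of this sketch.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


# Split 12 sample indices into 5 folds.
folds = list(k_fold_indices(12, k=5))
test_sizes = [len(test) for _, test in folds]
```

A model would be trained on each `train` split and scored on the matching `test` split; the maximum and average of the five scores correspond to the two accuracy figures reported per task.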

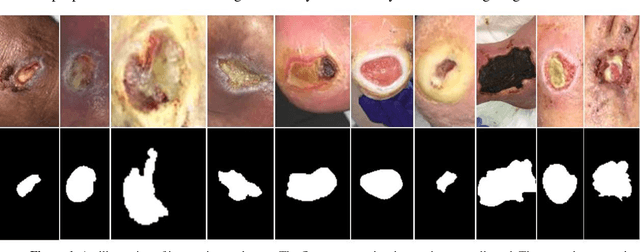

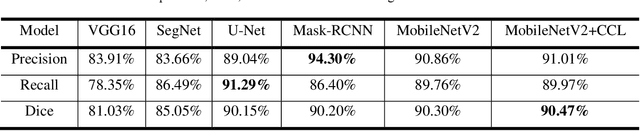

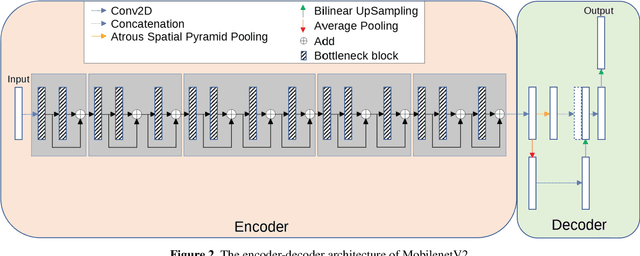

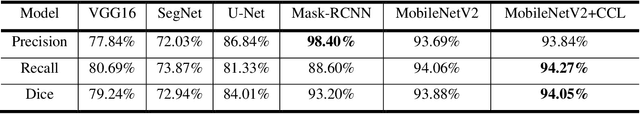

Fully Automatic Wound Segmentation with Deep Convolutional Neural Networks

Oct 12, 2020

Abstract: Acute and chronic wounds have varying etiologies and are an economic burden to healthcare systems around the world. The advanced wound care market is expected to exceed $22 billion by 2024. Wound care professionals rely heavily on images and image documentation for proper diagnosis and treatment. Unfortunately, lack of expertise can lead to improper diagnosis of wound etiology and inaccurate wound management and documentation. Fully automatic segmentation of wound areas in natural images is an important part of the diagnosis and care protocol since it is crucial to measure the area of the wound and provide quantitative parameters in the treatment. Various deep learning models have gained success in image analysis including semantic segmentation. Particularly, MobileNetV2 stands out among others due to its lightweight architecture and uncompromised performance. This manuscript proposes a novel convolutional framework based on MobileNetV2 and connected component labelling to segment wound regions from natural images. We build an annotated wound image dataset consisting of 1,109 foot ulcer images from 889 patients to train and test the deep learning models. We demonstrate the effectiveness and mobility of our method by conducting comprehensive experiments and analyses on various segmentation neural networks.
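Connected component labelling, which this abstract names as part of the framework, can be illustrated with a small sketch. The abstract does not state how the labelling is used, so the example assumes one common role: removing small spurious regions from a binary segmentation mask. The 4-connectivity choice, the size threshold, and the function names are assumptions.

```python
from collections import deque


def label_components(mask):
    """Label 4-connected foreground regions of a binary 2D mask.

    Returns a label image (0 = background) and a dict mapping label to size.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    labels = [[0] * cols for _ in range(rows)]
    sizes = {}
    next_label = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or labels[r][c]:
                continue
            # Start a breadth-first flood fill from an unlabelled pixel.
            next_label += 1
            labels[r][c] = next_label
            queue = deque([(r, c)])
            size = 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not labels[ny][nx]):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
            sizes[next_label] = size
    return labels, sizes


def remove_small_regions(mask, min_size):
    """Keep only connected regions with at least `min_size` pixels."""
    labels, sizes = label_components(mask)
    return [[1 if lab and sizes[lab] >= min_size else 0 for lab in row]
            for row in labels]


# Toy mask: one 4-pixel region and two isolated pixels.
mask = [
    [1, 1, 0, 0, 0],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
]
labels, sizes = label_components(mask)
cleaned = remove_small_regions(mask, min_size=3)
```

With `min_size=3`, only the 4-pixel region in the top-left corner survives, and the two isolated pixels are discarded as noise.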

Fully Automatic Intervertebral Disc Segmentation Using Multimodal 3D U-Net

Sep 28, 2020

Abstract: Intervertebral discs (IVDs), as small joints lying between adjacent vertebrae, play an important role in pressure buffering and tissue protection. The fully automatic localization and segmentation of IVDs have been discussed in the literature for many years since they are crucial to spine disease diagnosis and provide quantitative parameters in the treatment. Traditionally, hand-crafted features are derived based on image intensities and shape priors to localize and segment IVDs. With the advance of deep learning, various neural network models have gained great success in image analysis including the recognition of intervertebral discs. Particularly, U-Net stands out among other approaches due to its outstanding performance on biomedical images with a relatively small set of training data. This paper proposes a novel convolutional framework based on 3D U-Net to segment IVDs from multi-modality MRI images. We first localize the centers of intervertebral discs in each spine sample and then train the network based on the cropped small volumes centered at the localized intervertebral discs. A detailed comprehensive analysis of the results using various combinations of multi-modalities is presented. Furthermore, experiments conducted on 2D and 3D U-Nets with augmented and non-augmented datasets are demonstrated and compared in terms of Dice coefficient and Hausdorff distance. Our method has proved to be effective with a mean segmentation Dice coefficient of 89.0% and a standard deviation of 1.4%.
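The two comparison measures named in this abstract, the Dice coefficient and the Hausdorff distance, can be sketched under the assumption that each segmentation is represented as a collection of foreground voxel coordinates. This brute-force version is meant for illustration on small inputs; the function names are not taken from the paper.

```python
import math


def dice_coefficient(pred, truth):
    """Dice = 2 * |A ∩ B| / (|A| + |B|) for two collections of voxels."""
    pred, truth = set(pred), set(truth)
    if not pred and not truth:
        return 1.0  # two empty segmentations agree perfectly
    return 2 * len(pred & truth) / (len(pred) + len(truth))


def directed_hausdorff(a, b):
    """Largest distance from a point of `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)


def hausdorff_distance(a, b):
    """Symmetric Hausdorff distance between two non-empty point collections."""
    if not a or not b:
        raise ValueError("both point collections must be non-empty")
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))


# Toy segmentations (illustrative coordinates only, not data from the paper).
truth = [(0, 0, 0), (0, 1, 0), (1, 0, 0), (1, 1, 0)]
pred = [(0, 0, 0), (0, 1, 0), (1, 0, 0), (3, 1, 0)]

dice = dice_coefficient(pred, truth)
hausdorff = hausdorff_distance(pred, truth)
```

The two measures are complementary: Dice summarizes overall overlap, while the Hausdorff distance is driven by the single worst outlier, here the stray voxel at `(3, 1, 0)`.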

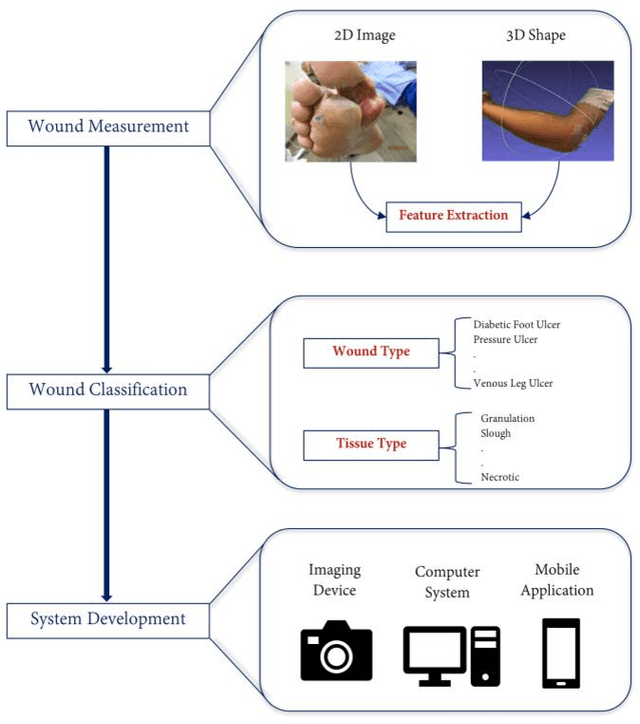

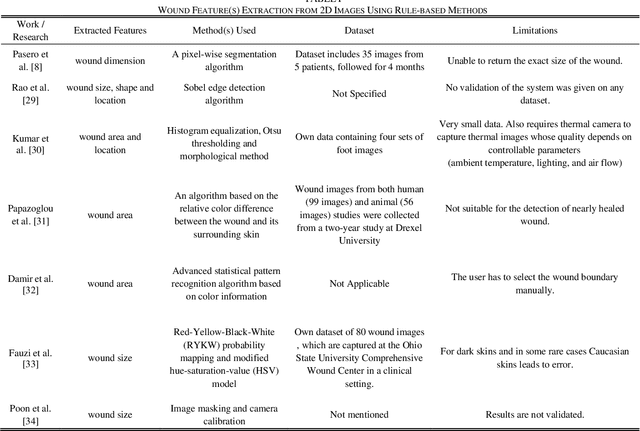

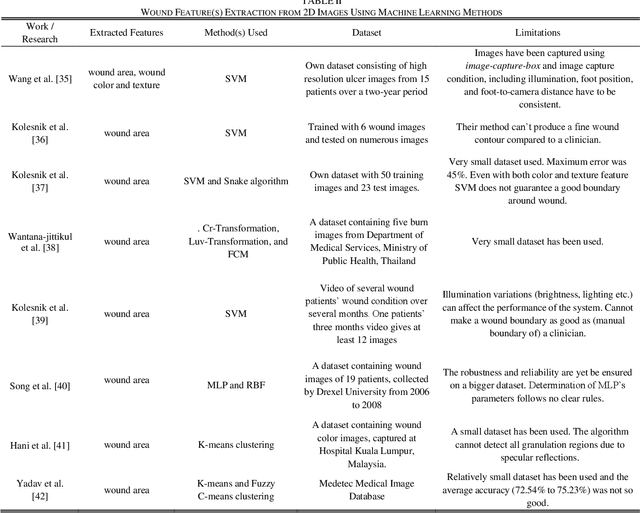

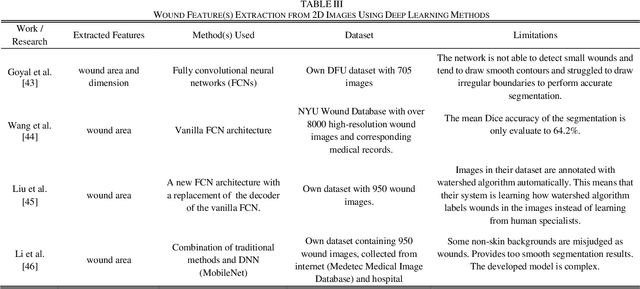

Image Based Artificial Intelligence in Wound Assessment: A Systematic Review

Sep 15, 2020

Abstract: Efficient and effective assessment of acute and chronic wounds can help wound care teams in clinical practice to greatly improve wound diagnosis, optimize treatment plans, ease the workload, and achieve health-related quality of life for the patient population. While artificial intelligence (AI) has found wide applications in health-related sciences and technology, AI-based systems remain to be developed clinically and computationally for high-quality wound care. To this end, we have carried out a systematic review of intelligent image-based data analysis and system developments for wound assessment. Specifically, we provide an extensive review of research methods on wound measurement (segmentation) and wound diagnosis (classification). We also reviewed recent work on wound assessment systems (including hardware, software, and mobile apps). More than 250 articles were retrieved from various publication databases and online resources, and 115 of them were carefully selected to cover the breadth and depth of the most recent and relevant work.
