Yukiyasu Kamitani

Visual Image Reconstruction from Brain Activity via Latent Representation

May 13, 2025

Abstract: Visual image reconstruction, the decoding of perceptual content from brain activity into images, has advanced significantly with the integration of deep neural networks (DNNs) and generative models. This review traces the field's evolution from early classification approaches to sophisticated reconstructions that capture detailed, subjective visual experiences, emphasizing the roles of hierarchical latent representations, compositional strategies, and modular architectures. Despite notable progress, challenges remain, such as achieving true zero-shot generalization for unseen images and accurately modeling the complex, subjective aspects of perception. We discuss the need for diverse datasets, refined evaluation metrics aligned with human perceptual judgments, and compositional representations that strengthen model robustness and generalizability. Ethical issues, including privacy, consent, and potential misuse, are underscored as critical considerations for responsible development. Visual image reconstruction offers promising insights into neural coding and enables new psychological measurements of visual experiences, with applications spanning clinical diagnostics and brain-machine interfaces.

Sound reconstruction from human brain activity via a generative model with brain-like auditory features

Jun 20, 2023

Abstract: The successful reconstruction of perceptual experiences from human brain activity has provided insights into the neural representations of sensory experiences. However, reconstructing arbitrary sounds has been avoided due to the complexity of temporal sequences in sounds and the limited resolution of neuroimaging modalities. To overcome these challenges, leveraging the hierarchical nature of brain auditory processing could provide a path toward reconstructing arbitrary sounds. Previous studies have indicated a hierarchical homology between the human auditory system and deep neural network (DNN) models. Furthermore, advances in audio-generative models make it possible to transform compressed representations back into high-resolution sounds. In this study, we introduce a novel sound reconstruction method that combines brain decoding of auditory features with an audio-generative model. Using fMRI responses to natural sounds, we found that the hierarchical sound features of a DNN model could be decoded better than spectrotemporal features. We then reconstructed the sound using an audio transformer that disentangled compressed temporal information in the decoded DNN features. Our method achieves unconstrained sound reconstruction that captures the perceptual content and quality of sounds, and it generalizes to sound categories not included in the training dataset. Reconstructions from different auditory regions remain similar to actual sounds, highlighting the distributed nature of auditory representations. To see whether the reconstructions mirrored actual subjective perceptual experiences, we performed an experiment involving selective auditory attention to one of the overlapping sounds. The reconstructions tended to resemble the attended sound more than the unattended one. These findings demonstrate that our proposed model provides a means to externalize experienced auditory contents from human brain activity.
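The feature-decoding step described in this abstract can be illustrated with a minimal sketch. This is not the authors' pipeline: it assumes a plain ridge regression as the decoder, uses synthetic data in place of fMRI responses and DNN sound features, and scores decoding by per-feature Pearson correlation. The names `fit_ridge` and `featurewise_correlation`, the array sizes, and the noise level are all hypothetical choices made for illustration.

```python
import numpy as np

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    n_voxels = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_voxels), X.T @ Y)

def featurewise_correlation(Y_true, Y_pred):
    """Pearson correlation between true and decoded values, one per feature."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    denom = np.linalg.norm(yt, axis=0) * np.linalg.norm(yp, axis=0)
    return (yt * yp).sum(axis=0) / denom

# Synthetic stand-ins (assumption): zero-mean "features" drive noisy "voxel" responses.
rng = np.random.default_rng(0)
n_train, n_test, n_voxels, n_features = 200, 50, 30, 8
mixing = rng.standard_normal((n_features, n_voxels))

features_train = rng.standard_normal((n_train, n_features))
features_test = rng.standard_normal((n_test, n_features))
voxels_train = features_train @ mixing + 0.5 * rng.standard_normal((n_train, n_voxels))
voxels_test = features_test @ mixing + 0.5 * rng.standard_normal((n_test, n_voxels))

# Train a decoder from voxel patterns to feature values, then test on held-out samples.
W = fit_ridge(voxels_train, features_train, alpha=1.0)
decoded_test = voxels_test @ W
accuracy = featurewise_correlation(features_test, decoded_test)
print("mean decoding correlation:", accuracy.mean())
```

Running the same evaluation on two different feature sets would give the kind of comparison the abstract reports between DNN features and spectrotemporal features, although the real study's decoder and metrics may differ from this sketch.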

Generic decoding of seen and imagined objects using hierarchical visual features

Sep 27, 2016

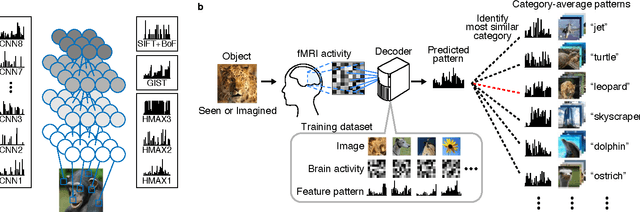

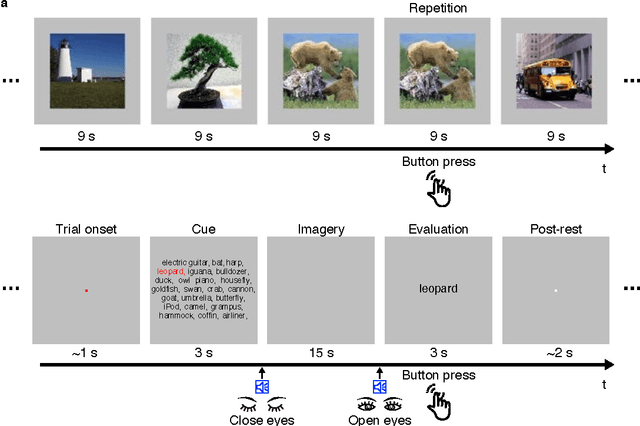

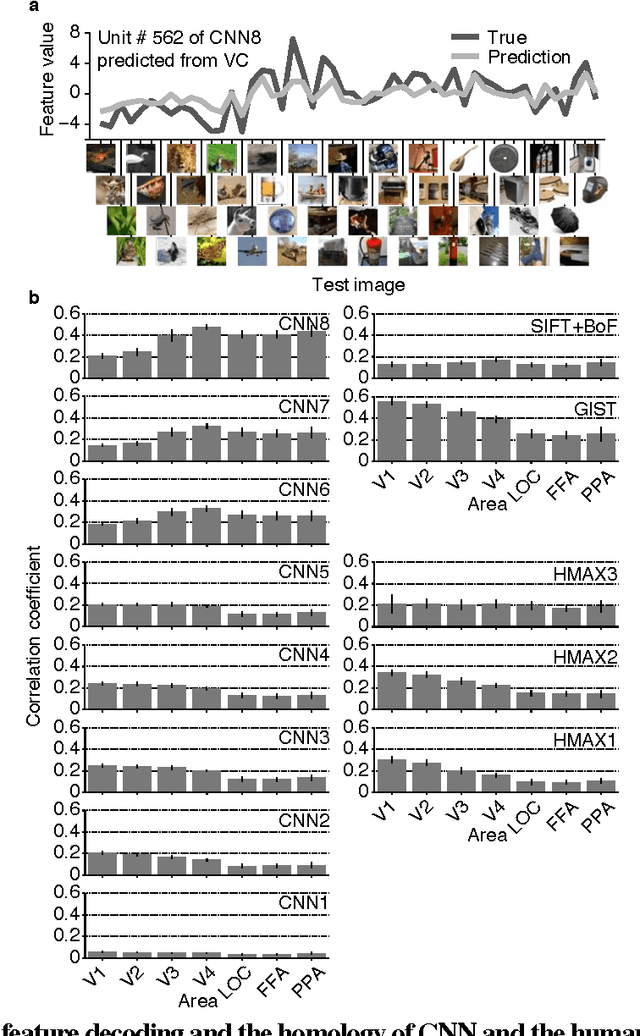

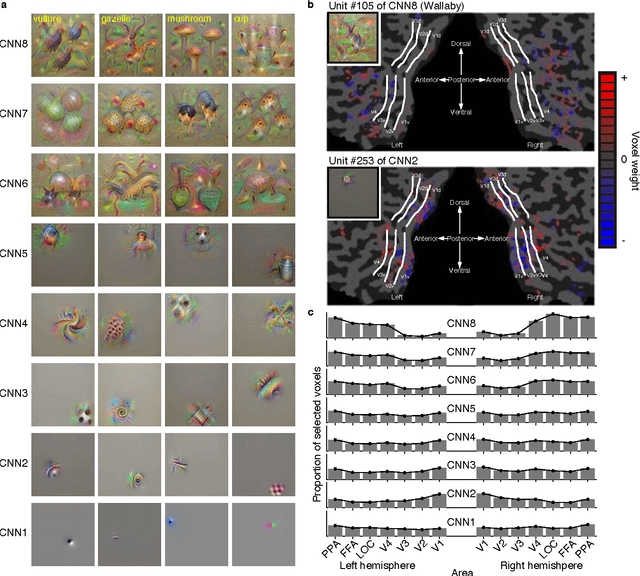

Abstract: Object recognition is a key function in both human and machine vision. While recent studies have achieved fMRI decoding of seen and imagined contents, the prediction is limited to training examples. We present a decoding approach for arbitrary objects, using the machine vision principle that an object category is represented by a set of features rendered invariant through hierarchical processing. We show that visual features including those from a convolutional neural network can be predicted from fMRI patterns and that greater accuracy is achieved for low/high-level features with lower/higher-level visual areas, respectively. Predicted features are used to identify seen/imagined object categories (extending beyond decoder training) from a set of computed features for numerous object images. Furthermore, the decoding of imagined objects reveals progressive recruitment of higher to lower visual representations. Our results demonstrate a homology between human and machine vision and its utility for brain-based information retrieval.
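The identification step mentioned in this abstract, where a predicted feature vector is matched against computed features for many candidate categories, might look roughly like the sketch below. It assumes Pearson correlation as the similarity measure and uses random vectors in place of real decoded and category features; `identify_category` and all sizes are hypothetical and are not taken from the paper.

```python
import numpy as np

def identify_category(predicted, candidates):
    """Return the index of the candidate most correlated with the predicted features.

    predicted:  1-D array of decoded feature values.
    candidates: 2-D array, one row of computed features per candidate category.
    """
    p = predicted - predicted.mean()
    c = candidates - candidates.mean(axis=1, keepdims=True)
    corr = (c @ p) / (np.linalg.norm(c, axis=1) * np.linalg.norm(p))
    return int(np.argmax(corr)), corr

# Synthetic stand-ins (assumption): 50 candidate categories, 100 feature units.
rng = np.random.default_rng(0)
n_categories, n_features = 50, 100
category_features = rng.standard_normal((n_categories, n_features))

# Pretend the decoder output is a noisy version of one category's features.
true_index = 7
decoded = category_features[true_index] + 0.5 * rng.standard_normal(n_features)

best, corr = identify_category(decoded, category_features)
print("identified category:", best, "true category:", true_index)
```

Because the candidate set is built from computed image features rather than from decoder training data, the candidates can include categories the decoder never saw, which is the sense in which the abstract describes identification as extending beyond decoder training.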
