You-Jin Jong

Review of feedback in Automated Essay Scoring

Jul 09, 2023

Abstract: The first automated essay scoring (AES) system was developed 50 years ago. Since then, AES systems have been evolving from simple scorers into systems with richer functionality. Their purpose is not only to score essays but also to serve as learning tools that improve users' writing skills. Feedback is the most important aspect of making an automated essay scoring system useful in real life, and its importance was already emphasized in the first AES system. This paper reviews research on feedback in automated essay scoring, including different feedback types and essay traits. We also review the latest case studies of automated essay scoring systems that provide feedback.

Improving Performance of Automated Essay Scoring by using back-translation essays and adjusted scores

Mar 04, 2022

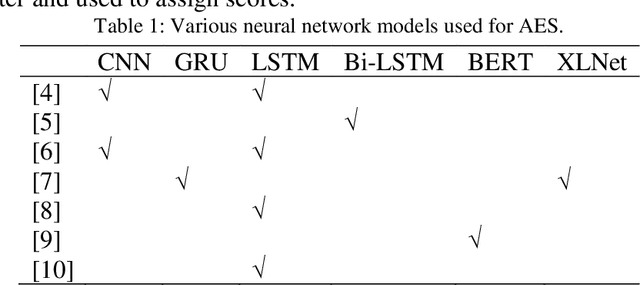

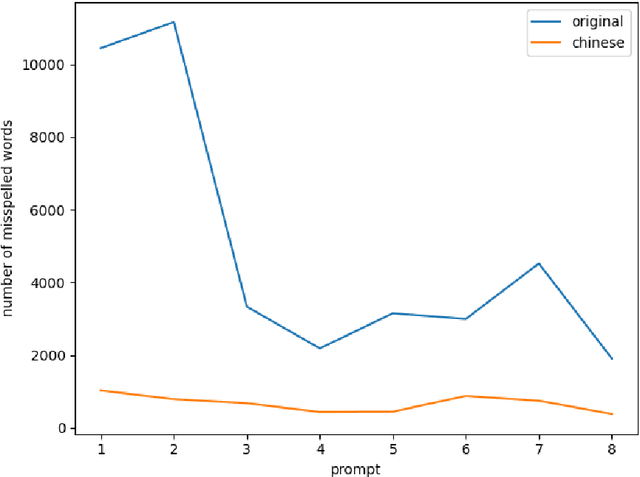

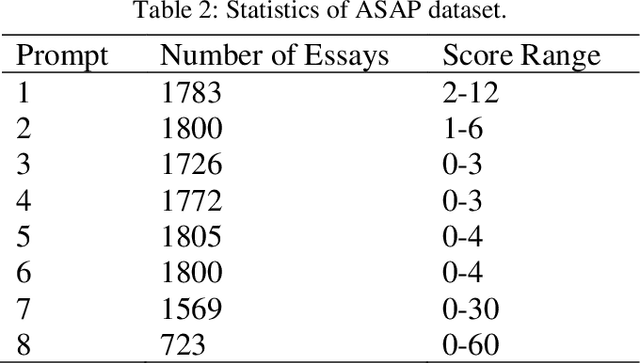

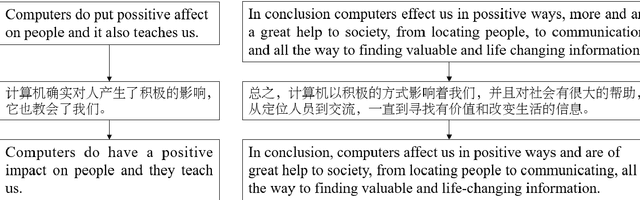

Abstract: Automated essay scoring plays an important role in judging students' language abilities in education. Traditional approaches rely on handcrafted features for scoring and are time-consuming and complicated. Recently, neural network approaches have improved performance without any feature engineering. Unlike other natural language processing tasks, only a small number of datasets are publicly available for automated essay scoring, and those datasets are not sufficiently large. Because the performance of a neural network is closely related to the size of its training dataset, this lack of data limits how far automated essay scoring models can improve. In this paper, we proposed a method to increase the number of essay-score pairs using back-translation and score adjustment, and applied it to augment the Automated Student Assessment Prize dataset. We evaluated the effectiveness of the augmented data using models from prior work. In addition, we evaluated performance with a model based on long short-term memory, which is widely used for automated essay scoring. Training the models on the augmented data improved their performance.
