Yony Bresler

Knowledge Distillation with Structured Chain-of-Thought for Text-to-SQL

Dec 18, 2025

Abstract: Deploying accurate Text-to-SQL systems at the enterprise level faces a difficult trilemma involving cost, security and performance. Current solutions force enterprises to choose between expensive, proprietary Large Language Models (LLMs) and low-performing Small Language Models (SLMs). Efforts to improve SLMs often rely on distilling reasoning from large LLMs using unstructured Chain-of-Thought (CoT) traces, a process that remains inherently ambiguous. Instead, we hypothesize that a formal, structured reasoning representation provides a clearer, more reliable teaching signal, as the Text-to-SQL task requires explicit and precise logical steps. To evaluate this hypothesis, we propose Struct-SQL, a novel Knowledge Distillation (KD) framework that trains an SLM to emulate a powerful large LLM. Consequently, we adopt a query execution plan as a formal blueprint to derive this structured reasoning. Our SLM, distilled with structured CoT, achieves an absolute improvement of 8.1% over an unstructured CoT distillation baseline. A detailed error analysis reveals that a key factor in this gain is a marked reduction in syntactic errors. This demonstrates that teaching a model to reason using a structured logical blueprint is beneficial for reliable SQL generation in SLMs.

Improved Batching Strategy For Irregular Time-Series ODE

Jul 12, 2022

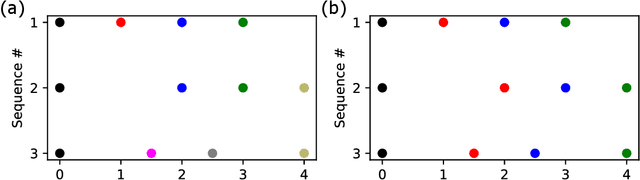

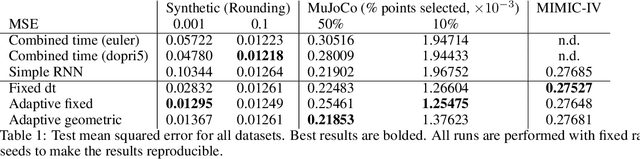

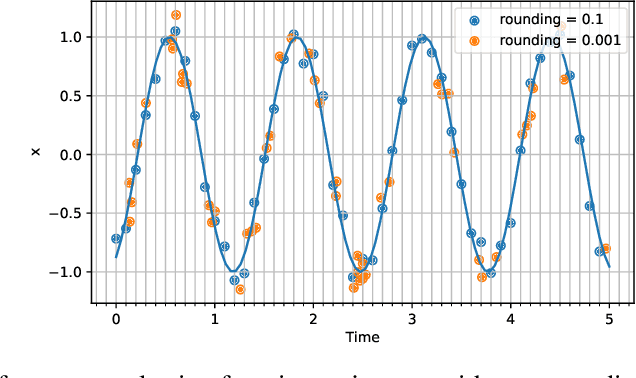

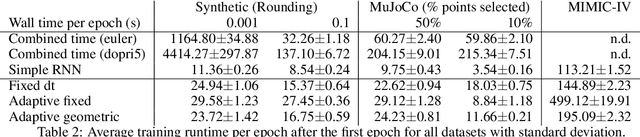

Abstract: Irregular time series data are prevalent in the real world and are challenging to model with a simple recurrent neural network (RNN). Hence, a model that combines ordinary differential equations (ODE) and RNN was proposed (ODE-RNN) to model irregular time series with higher accuracy, but it suffers from high computational costs. In this paper, we propose an improvement in the runtime of ODE-RNNs by using a different, more efficient batching strategy. Our experiments show that the new models reduce the runtime of ODE-RNN significantly, by a factor of 2 to 49 depending on the irregularity of the data, while maintaining comparable accuracy. Hence, our model can scale favorably for modeling larger irregular data sets.
