Yijun Zuo

Non-asymptotic analysis of the performance of the penalized least trimmed squares in sparse models

Jan 09, 2025

Abstract: The least trimmed squares (LTS) estimator is a renowned robust alternative to the classic least squares estimator and is popular in the location, regression, machine learning, and AI literature. Many studies exist on LTS, covering its robustness, computation algorithms, extension to non-linear cases, and asymptotics. LTS has been applied to penalized regression in high-dimensional, real-data, sparse-model settings where the dimension $p$ (in the thousands) is much larger than the sample size $n$ (in the tens or hundreds). In such a practical setting, $n$ is often the count of a sub-population that has a special attribute (e.g., the number of patients with Alzheimer's, Parkinson's, leukemia, or ALS) among a population of finite fixed size $N$. Asymptotic analysis assuming that $n$ tends to infinity is neither practically convincing nor legitimate in such a scenario; a non-asymptotic, finite-sample analysis is more desirable and feasible. This article establishes, for the first time, finite-sample (non-asymptotic) error bounds that hold with high probability for estimation and prediction based on LTS.
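For readers unfamiliar with LTS, the objective it minimizes can be sketched in a few lines: keep only the $h$ smallest squared residuals and sum them. The following is a minimal illustration, not the paper's method. The function names, the trimming count `h`, the weight `lam`, and the choice of an $\ell_1$ (lasso-type) penalty are all assumptions made here, since the abstract does not specify the penalty.

```python
def lts_objective(beta, X, y, h):
    """Sum of the h smallest squared residuals for coefficients beta."""
    squared_residuals = sorted(
        (yi - sum(b * xij for b, xij in zip(beta, xi))) ** 2
        for xi, yi in zip(X, y)
    )
    # Trimming: the n - h largest squared residuals are ignored.
    return sum(squared_residuals[:h])


def penalized_lts_objective(beta, X, y, h, lam):
    """LTS objective plus an l1 penalty (an assumed, lasso-type choice)."""
    return lts_objective(beta, X, y, h) + lam * sum(abs(b) for b in beta)


# One gross outlier in y: trimming it (h = 3) leaves a perfect fit.
X = [[1.0], [2.0], [3.0], [4.0]]
y = [1.0, 2.0, 3.0, 100.0]
print(lts_objective([1.0], X, y, h=3))  # outlier trimmed
print(lts_objective([1.0], X, y, h=4))  # no trimming: ordinary sum of squares
```

With `h` equal to the sample size the objective reduces to the ordinary least squares criterion, which is why a single outlier can dominate it.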

Robust penalized least squares of depth trimmed residuals regression for high-dimensional data

Sep 04, 2023

Abstract: Challenges with data in the big-data era include (i) the dimension $p$ is often larger than the sample size $n$, and (ii) outliers or contaminated points are frequently hidden and more difficult to detect. Challenge (i) renders most conventional methods inapplicable and has therefore attracted tremendous attention from the statistics, computer science, and bio-medical communities. Numerous penalized regression methods have been introduced as modern methods for analyzing high-dimensional data. Disproportionately little attention has been paid to challenge (ii), though. Penalized regression methods can do their job very well and are expected to handle challenge (ii) simultaneously. Most of them, however, can be broken down by a single outlier (or a single adversarially contaminated point), as revealed in this article, which systematically examines the robustness of leading penalized regression methods in the literature and provides a quantitative assessment. Consequently, a novel robust penalized regression method based on the least sum of squares of depth trimmed residuals is proposed and studied carefully. Experiments with simulated and real data reveal that the newly proposed method can outperform some leading competitors in estimation and prediction accuracy in the cases considered.

Non-asymptotic analysis and inference for an outlyingness induced winsorized mean

May 05, 2021

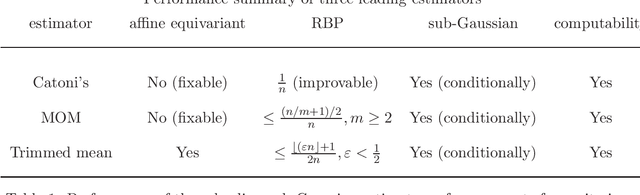

Abstract: Robust estimation of a mean vector, a topic regarded as obsolete in the traditional robust statistics community, has seen a resurgence in the machine learning literature over the last decade. The latest focus is on the sub-Gaussian performance and computability of the estimators in a non-asymptotic setting. Numerous traditional robust estimators are computationally intractable, which partly contributes to the renewed interest in robust mean estimation. Robust centrality estimators, however, include the trimmed mean and the sample median. The latter has the best robustness but suffers from low efficiency. The trimmed mean and the median of means, robust alternatives to the sample mean that achieve sub-Gaussian performance, have been proposed and studied in the literature. This article investigates the robustness of leading sub-Gaussian estimators of the mean and reveals that none of them can resist more than $25\%$ contamination in the data. It consequently introduces an outlyingness induced winsorized mean, which has the best possible robustness (it can resist up to $50\%$ contamination without breakdown) while achieving high efficiency. Furthermore, it has sub-Gaussian performance for uncontaminated samples and a bounded estimation error for contaminated samples at a given confidence level in a finite sample setting. It can be computed in linear time.
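The classical estimators named in this abstract are easy to state in code. The sketch below shows textbook versions of the trimmed mean, the median of means, and an ordinary rank-based winsorized mean for univariate data. It does not implement the paper's outlyingness induced winsorized mean, whose definition is not given in the abstract; the function names and the parameters `k` and `n_blocks` are illustrative assumptions.

```python
import statistics


def trimmed_mean(xs, k):
    """Drop the k smallest and k largest values, then average the rest."""
    s = sorted(xs)
    if 2 * k >= len(s):
        raise ValueError("k is too large for the sample size")
    return statistics.fmean(s[k:len(s) - k])


def winsorized_mean(xs, k):
    """Clamp the k smallest and k largest values to the nearest kept value."""
    s = sorted(xs)
    if 2 * k >= len(s):
        raise ValueError("k is too large for the sample size")
    lo, hi = s[k], s[len(s) - k - 1]
    return statistics.fmean(min(max(x, lo), hi) for x in xs)


def median_of_means(xs, n_blocks):
    """Average consecutive equal-size blocks, then take the median of the averages.

    Points left over after forming n_blocks equal blocks are discarded.
    """
    size = len(xs) // n_blocks
    if size == 0:
        raise ValueError("more blocks than data points")
    means = [statistics.fmean(xs[i * size:(i + 1) * size]) for i in range(n_blocks)]
    return statistics.median(means)


data = [1, 2, 3, 4, 100]  # one gross outlier
print(statistics.fmean(data), trimmed_mean(data, 1), winsorized_mean(data, 1))
```

In practice the data are usually shuffled before forming the blocks for the median of means; the sketch uses the given order to stay deterministic.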