Toshio Aoyagi

Neuronal correlations shape the scaling behavior of memory capacity and nonlinear computational capability of recurrent neural networks

Apr 28, 2025

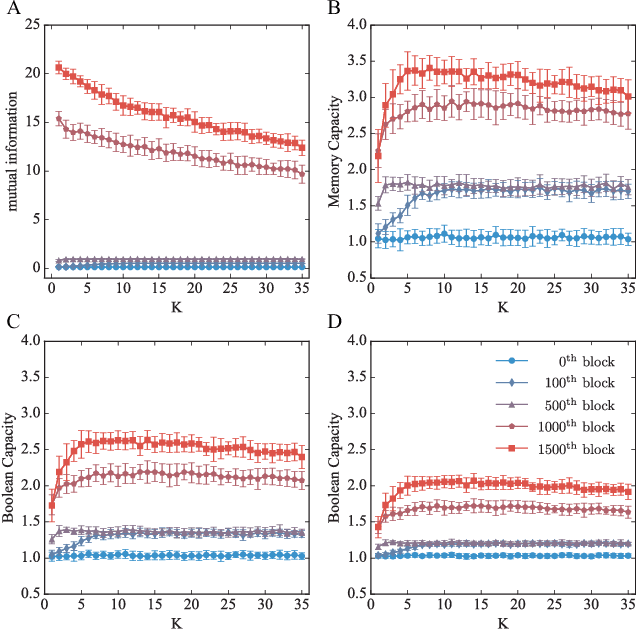

Abstract: Reservoir computing is a powerful framework for real-time information processing, characterized by high computational ability and fast learning, with applications ranging from machine learning to biological systems. In this paper, we demonstrate that the memory capacity of a reservoir recurrent neural network scales sublinearly with the number of readout neurons. To elucidate this phenomenon, we develop a theoretical framework for analytically deriving memory capacity, which attributes the diminishing growth of memory capacity to neuronal correlations. In addition, numerical simulations reveal that once memory capacity becomes sublinear, increasing the number of readout neurons successively enables nonlinear processing at progressively higher polynomial orders. Furthermore, our theoretical framework suggests that neuronal correlations govern not only memory capacity but also the sequential growth of nonlinear computational capabilities. Our findings establish a foundation for designing scalable and cost-effective reservoir computing, and provide new insights into the interplay among neuronal correlations, linear memory, and nonlinear processing.
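
The quantity at the center of this abstract is linear memory capacity, commonly defined as MC = sum over delays k of the squared correlation between the past input u(t-k) and a trained linear readout of the network state. The sketch below is only a toy illustration of how one might measure MC as a function of the number of readout neurons; it does not reproduce the paper's analytical framework or setup. Every parameter here is a hypothetical choice: a random tanh echo state network of 200 neurons, spectral radius 0.9, input scaling 0.1, i.i.d. uniform input, a readout that uses the first n_readout neurons, and a least-squares fit scored on held-out time steps.

```python
import numpy as np


def run_reservoir(u, n_neurons=200, spectral_radius=0.9, input_scale=0.1, seed=0):
    """Drive a random tanh echo state network (hypothetical setup) with input u(t)."""
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_neurons, n_neurons))
    # Rescale the recurrent weights to the chosen spectral radius.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    w_in = rng.uniform(-input_scale, input_scale, size=n_neurons)
    x = np.zeros(n_neurons)
    states = np.empty((len(u), n_neurons))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + w_in * u_t)
        states[t] = x
    return states


def memory_capacity(states, u, n_readout, max_delay=100, washout=200, n_train=2000):
    """MC = sum_k r^2(u(t-k), y_k(t)), where y_k is a linear readout of the first
    n_readout neurons fitted on training steps and scored on held-out steps."""
    X = np.hstack([states[:, :n_readout], np.ones((len(u), 1))])  # bias column
    train = np.arange(washout, washout + n_train)
    test = np.arange(washout + n_train, len(u))
    mc = 0.0
    for k in range(1, max_delay + 1):
        w, *_ = np.linalg.lstsq(X[train], u[train - k], rcond=None)
        y = X[test] @ w
        mc += np.corrcoef(y, u[test - k])[0, 1] ** 2
    return mc


rng = np.random.default_rng(1)
u = rng.uniform(-1.0, 1.0, size=4200)  # washout (200) + train (2000) + test (2000)
states = run_reservoir(u)
for m in (10, 25, 50, 100):
    print(m, round(memory_capacity(states, u, m), 2))
```

Reading out a growing subset of one fixed reservoir keeps the network dynamics identical across runs, so any change in MC comes from the readout alone. The printed numbers depend on these arbitrary choices and are not meant to match the paper's results.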

Use of recurrent infomax to improve the memory capability of input-driven recurrent neural networks

Feb 14, 2018

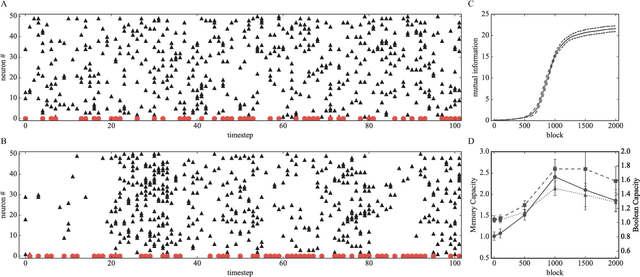

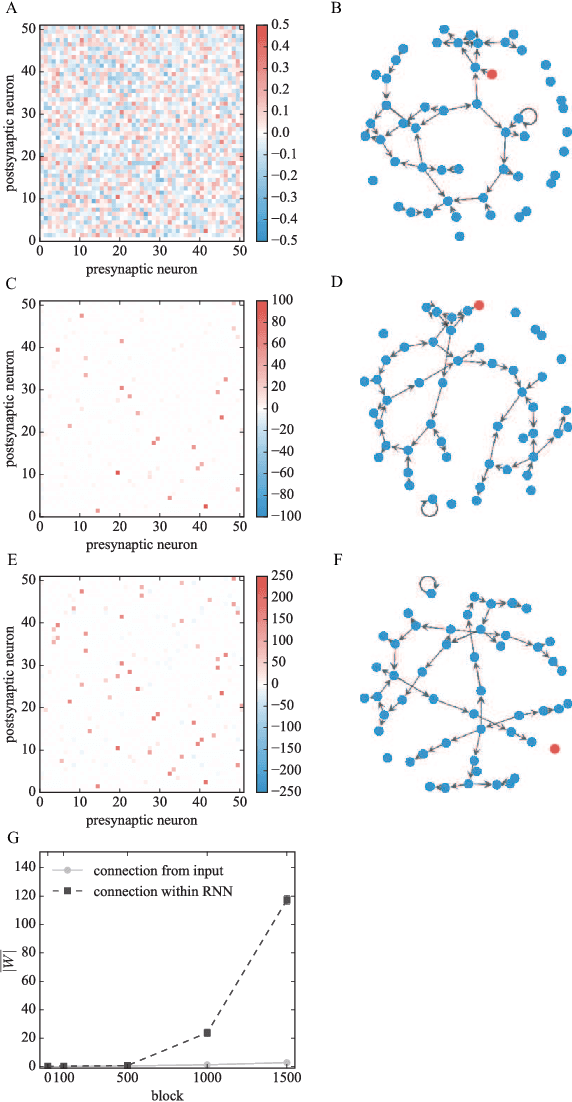

Abstract: The inherent transient dynamics of recurrent neural networks (RNNs) have been exploited as a computational resource in input-driven RNNs. However, the information processing capability varies from RNN to RNN, depending on their properties. Many authors have investigated the dynamics of RNNs and their relevance to information processing capability. In this study, we present a detailed analysis of the information processing capability of an RNN optimized by recurrent infomax (RI), an unsupervised learning scheme that maximizes the mutual information of RNNs by adjusting the connection strengths of the network. We observe that a delay-line structure emerges from RI and that the network optimized by RI possesses superior short-term memory, that is, the ability to store temporal information about the input stream in its transient dynamics.
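
To see why a delay-line structure is good for short-term memory, consider its idealized form: neuron 0 receives the input and each neuron i copies neuron i-1 from the previous step, so x_i(t) = u(t-i). The sketch below does not implement recurrent infomax; it hand-builds this idealized linear delay line (a hypothetical construction for illustration, with assumed network size and input statistics) and checks how well a least-squares readout recovers each past input u(t-k).

```python
import numpy as np


def delay_line_states(u, n_neurons):
    """Drive a linear network whose recurrent weights form an ideal delay line."""
    W = np.eye(n_neurons, k=-1)  # subdiagonal ones: x_i(t) = x_{i-1}(t-1)
    w_in = np.zeros(n_neurons)
    w_in[0] = 1.0                # only neuron 0 receives the input
    x = np.zeros(n_neurons)
    states = np.empty((len(u), n_neurons))
    for t, u_t in enumerate(u):
        x = W @ x + w_in * u_t
        states[t] = x
    return states


def delay_r2(states, u, k, start):
    """Squared correlation between u(t-k) and its least-squares linear readout."""
    X = np.hstack([states[start:], np.ones((len(u) - start, 1))])  # bias column
    target = u[start - k: len(u) - k]
    w, *_ = np.linalg.lstsq(X, target, rcond=None)
    return np.corrcoef(X @ w, target)[0, 1] ** 2


rng = np.random.default_rng(0)
u = rng.uniform(-1.0, 1.0, size=1000)  # i.i.d. input stream (assumed)
n = 20
states = delay_line_states(u, n)
r2 = [delay_r2(states, u, k, start=2 * n) for k in range(2 * n)]
print([round(v, 3) for v in r2])
```

For delays k < n the readout reconstructs u(t-k) essentially perfectly, and for k >= n the input has left the line and only a small spurious fit remains, so this ideal delay line stores exactly n past inputs. A network shaped by RI would only approximate such a structure.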
