Takuma Sumi

Graphon Signal Processing for Spiking and Biological Neural Networks

Aug 24, 2025

Abstract: Graph Signal Processing (GSP) extends classical signal processing to signals defined on graphs, enabling filtering, spectral analysis, and sampling of data generated by networks of various kinds. Graphon Signal Processing (GnSP) develops this framework further by employing the theory of graphons. Graphons are measurable functions on the unit square that represent graphs and limits of convergent graph sequences. The use of graphons provides stability of GSP methods to stochastic variability in network data and improves computational efficiency for very large networks. We use GnSP to address the stimulus identification problem (SIP) in computational and biological neural networks. The SIP is an inverse problem that aims to infer the unknown stimulus s from the observed network output f. We first validate the approach in spiking neural network simulations and then analyze calcium imaging recordings. Graphon-based spectral projections yield trial-invariant, low-dimensional embeddings that improve stimulus classification over Principal Component Analysis and discrete GSP baselines. The embeddings remain stable under variations in network stochasticity, providing robustness to different network sizes and noise levels. To the best of our knowledge, this is the first application of GnSP to biological neural networks, opening new avenues for graphon-based analysis in neuroscience.
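
The abstract does not spell out how the graphon spectral projection is computed, so the following is only a minimal sketch of the general idea, not the authors' method. It assumes a hypothetical smooth graphon W(x, y) = exp(-|x - y|), discretizes it on a uniform grid of n latent node positions (dividing by n so the matrix approximates the graphon integral operator), and projects a node signal onto the k leading eigenvectors, i.e. a graphon Fourier transform truncated to k coefficients. The function names `discretize_graphon`, `graphon_spectral_embedding`, `laplace_graphon` and `stimulus_signal` are illustrative inventions. Because the coefficients approximate inner products with the graphon's eigenfunctions, they should come out nearly identical for networks of 200 and 400 nodes, which is one way to read the claim that graphon embeddings are robust to network size.

```python
import numpy as np


def discretize_graphon(W, n):
    """Sample graphon W on an n-point midpoint grid of [0, 1].

    Dividing by n makes the matrix approximate the integral operator
    (T_W f)(x) = integral over [0, 1] of W(x, y) f(y) dy.
    """
    x = (np.arange(n) + 0.5) / n
    return W(x[:, None], x[None, :]) / n, x


def graphon_spectral_embedding(signal, W, k):
    """Project a node signal onto the k leading eigenvectors of the graphon.

    Eigenvectors are rescaled by sqrt(n) so they approximate L2-normalized
    eigenfunctions of W; the coefficients then approximate <f, phi_j>,
    which does not depend on the number of nodes n.
    """
    n = signal.shape[0]
    Wn, _ = discretize_graphon(W, n)
    eigvals, eigvecs = np.linalg.eigh(Wn)           # symmetric -> real spectrum
    order = np.argsort(np.abs(eigvals))[::-1][:k]   # largest |lambda| first
    phi = eigvecs[:, order] * np.sqrt(n)            # approximate eigenfunctions
    coeffs = phi.T @ signal / n                     # Riemann sum for <f, phi_j>
    return coeffs, eigvals[order]


# Hypothetical graphon (Laplace kernel); the paper's graphon may differ.
def laplace_graphon(x, y):
    return np.exp(-np.abs(x - y))


def stimulus_signal(n):
    """Hypothetical network output: a smooth function of latent position."""
    x = (np.arange(n) + 0.5) / n
    return np.sin(2 * np.pi * x) + 0.5


emb_small, lam_small = graphon_spectral_embedding(stimulus_signal(200), laplace_graphon, k=4)
emb_large, lam_large = graphon_spectral_embedding(stimulus_signal(400), laplace_graphon, k=4)
# Eigenvector signs are arbitrary, so compare coefficient magnitudes across sizes.
print(np.round(np.abs(emb_small), 3))
print(np.round(np.abs(emb_large), 3))
```

A deterministic grid is used here instead of random graphs sampled from the graphon so that the size comparison is not blurred by sampling noise; with sampled adjacency matrices the same projection would only agree up to stochastic fluctuations.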

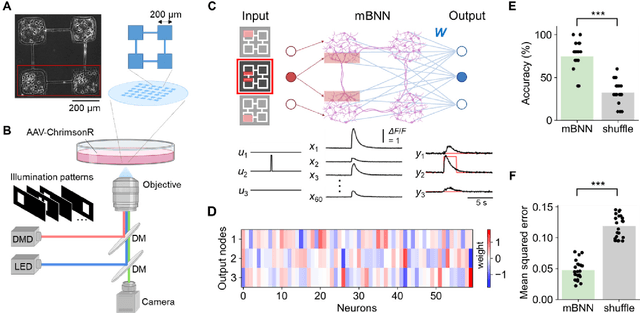

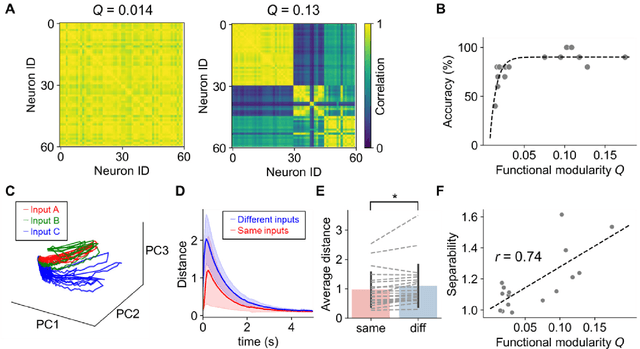

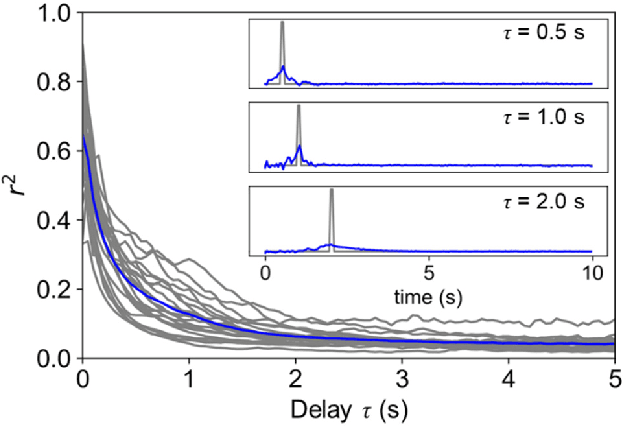

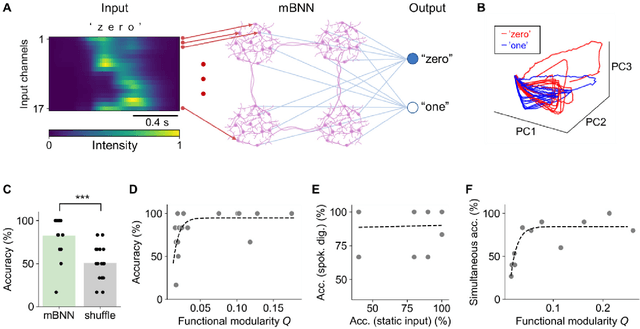

Biological neurons act as generalization filters in reservoir computing

Oct 06, 2022

Abstract: Reservoir computing is a machine learning paradigm that transforms the transient dynamics of high-dimensional nonlinear systems for processing time-series data. Although reservoir computing was initially proposed to model information processing in the mammalian cortex, it remains unclear how the non-random network architecture, such as the modular architecture, in the cortex integrates with the biophysics of living neurons to characterize the function of biological neuronal networks (BNNs). Here, we used optogenetics and fluorescent calcium imaging to record the multicellular responses of cultured BNNs and employed the reservoir computing framework to decode their computational capabilities. Micropatterned substrates were used to embed the modular architecture in the BNNs. We first show that modular BNNs can be used to classify static input patterns with a linear decoder and that the modularity of the BNNs positively correlates with the classification accuracy. We then used a timer task to verify that BNNs possess a short-term memory of ~1 s and finally show that this property can be exploited for spoken digit classification. Interestingly, BNN-based reservoirs allow transfer learning, wherein a network trained on one dataset can be used to classify separate datasets of the same category. Such classification was not possible when the input patterns were directly decoded by a linear decoder, suggesting that BNNs act as a generalization filter to improve reservoir computing performance. Our findings pave the way toward a mechanistic understanding of information processing within BNNs and, simultaneously, build future expectations toward the realization of physical reservoir computing systems based on BNNs.
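
The reservoir computing setup described above (a fixed, untrained network whose states are read out by a trained linear decoder) can be illustrated with a minimal sketch. It assumes a simulated echo state network as a stand-in for the cultured BNN and uses a simple delayed-recall task as a simplified stand-in for the timer task: a ridge-regression readout tries to recover the input from `delay` steps earlier using only the current reservoir state. All function names (`run_reservoir`, `fit_linear_decoder`, `decode`, `delayed_recall_r2`) and parameter values (50 nodes, spectral radius 0.8, ridge 1e-6) are hypothetical choices, not taken from the paper.

```python
import numpy as np


def run_reservoir(u, n_nodes=50, spectral_radius=0.8, seed=0):
    """Drive a random echo state network with scalar input u; return its states.

    Hypothetical stand-in for a biological reservoir: a fixed random
    recurrent network whose weights are never trained.
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(n_nodes, n_nodes))
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))  # rescale spectral radius
    w_in = rng.uniform(-0.5, 0.5, size=n_nodes)
    x = np.zeros(n_nodes)
    states = np.empty((len(u), n_nodes))
    for t, u_t in enumerate(u):
        x = np.tanh(W @ x + w_in * u_t)
        states[t] = x
    return states


def fit_linear_decoder(X, y, ridge=1e-6):
    """Ridge-regression readout: the only trained part of the system."""
    Xb = np.hstack([X, np.ones((len(X), 1))])  # append a bias column
    return np.linalg.solve(Xb.T @ Xb + ridge * np.eye(Xb.shape[1]), Xb.T @ y)


def decode(X, w):
    """Apply the linear readout (with bias) to reservoir states."""
    return np.hstack([X, np.ones((len(X), 1))]) @ w


def delayed_recall_r2(delay, T=3000, washout=100, seed=1):
    """Held-out R^2 of recovering u(t - delay) from the state at time t."""
    u = np.random.default_rng(seed).uniform(-0.5, 0.5, size=T)
    X = run_reservoir(u)[washout:]             # discard the initial transient
    y = u[washout - delay: T - delay]          # input from `delay` steps earlier
    split = len(X) // 2                        # first half train, second half test
    w = fit_linear_decoder(X[:split], y[:split])
    err = y[split:] - decode(X[split:], w)
    return 1 - np.mean(err ** 2) / np.var(y[split:])


for d in (1, 5, 20, 60):
    print(d, round(delayed_recall_r2(d), 3))
```

Recall should be close to perfect for short delays and fall toward zero (or below) once the delay exceeds the reservoir's fading memory, which is the kind of finite short-term memory the timer task probes. Only the readout weights are fitted, mirroring the linear decoder in the abstract; the fixed recurrent network plays the role the BNN plays in the experiments.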