Steven Cheung

Interpreting Agent Behaviors in Reinforcement-Learning-Based Cyber-Battle Simulation Platforms

Jun 09, 2025

Abstract: We analyze two open-source deep reinforcement learning agents submitted to the CAGE Challenge 2 cyber defense challenge, where each competitor submitted an agent to defend a simulated network against each of several provided rules-based attack agents. We demonstrate that one can gain interpretability of agent successes and failures by simplifying the complex state and action spaces and by tracking important events, shedding light on the fine-grained behavior of both the defense and attack agents in each experimental scenario. By analyzing important events within an evaluation episode, we identify patterns in infiltration and clearing events that tell us how well the attacker and defender played their respective roles; for example, defenders were generally able to clear infiltrations within one or two timesteps of a host being exploited. By examining transitions in the environment's state caused by the various possible actions, we determine which actions tended to be effective and which did not, showing that certain important actions are between 40% and 99% ineffective. We examine how decoy services affect exploit success, concluding for instance that decoys block up to 94% of exploits that would directly grant privileged access to a host. Finally, we discuss the realism of the challenge and ways that CAGE Challenge 4 has addressed some of our concerns.

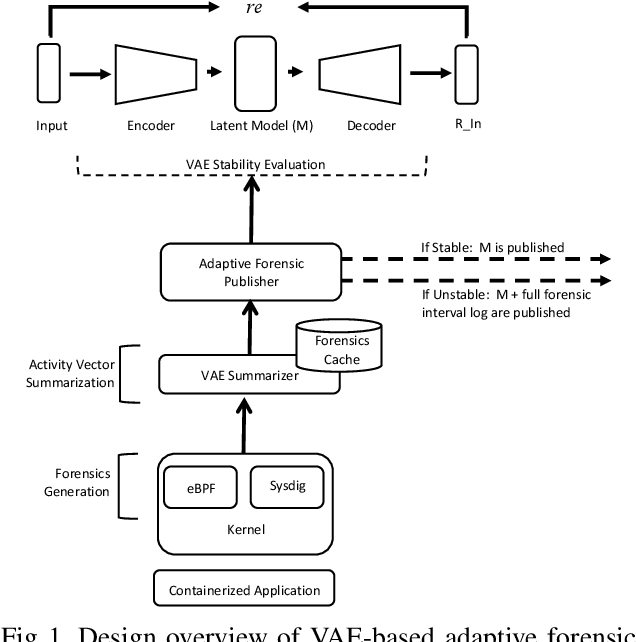

Scalable Microservice Forensics and Stability Assessment Using Variational Autoencoders

Apr 23, 2021

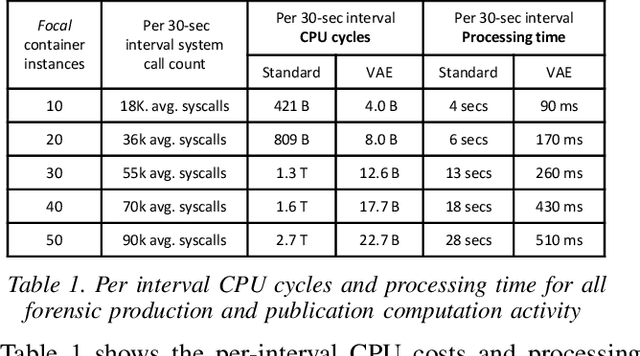

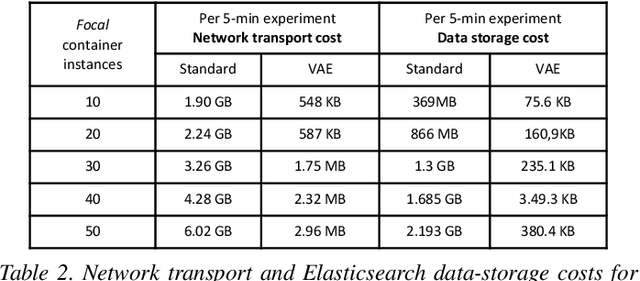

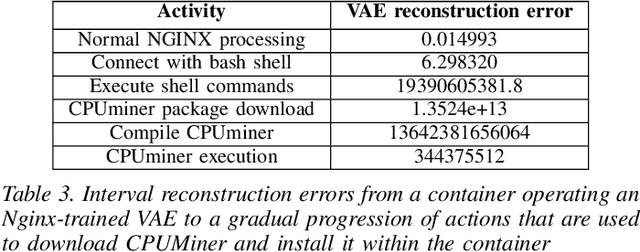

Abstract: We present a deep-learning-based approach to containerized application runtime stability analysis, and an intelligent publishing algorithm that can dynamically adjust the depth of process-level forensics published to a backend incident analysis repository. The approach applies variational autoencoders (VAEs) to learn the stable runtime patterns of container images, and then instantiates these container-specific VAEs to implement stability detection and adaptive forensics publishing. In performance comparisons using a 50-instance container workload, a VAE-optimized service versus a conventional eBPF-based forensic publisher demonstrates a 2 orders of magnitude (OM) CPU performance improvement, a 3 OM reduction in network transport volume, and a 4 OM reduction in Elasticsearch storage costs. We evaluate the VAE-based stability detection technique against two attacks, CPUMiner and an HTTP-flood attack, finding that it is effective in isolating both anomalies. We believe this technique provides a novel approach to integrating fine-grained process monitoring and digital-forensic services into large container ecosystems that today simply cannot be monitored by conventional techniques.
