Stefano Tracà

Reducing Exploration of Dying Arms in Mortal Bandits

Jul 04, 2019

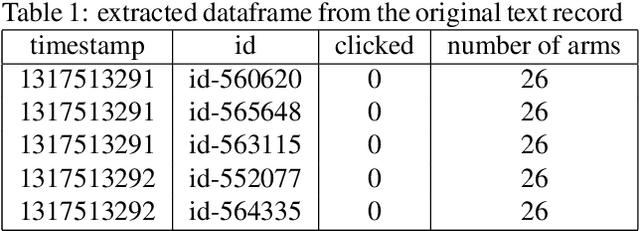

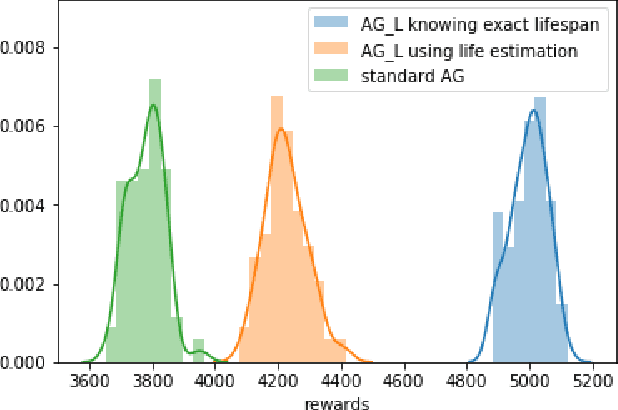

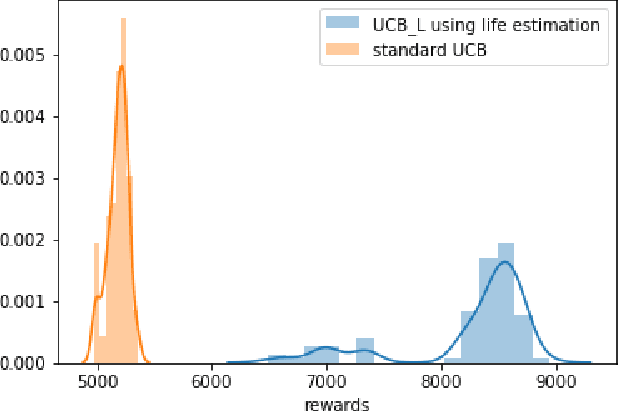

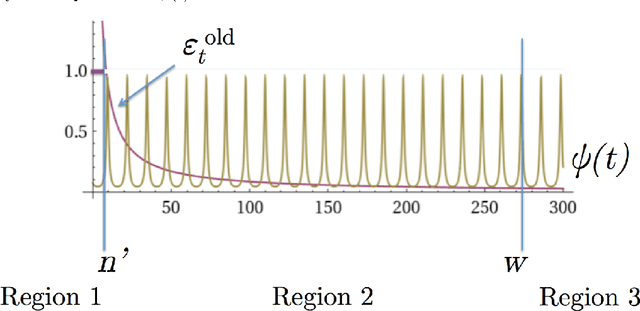

Abstract: Mortal bandits have proven to be extremely useful for providing news article recommendations, running automated online advertising campaigns, and for other applications where the set of available options changes over time. Previous work on this problem showed how to regulate exploration of new arms when they have recently appeared, but it does not adapt when the arms are about to disappear. Since in most applications we can determine either exactly or approximately when arms will disappear, we can leverage this information to improve performance: we should not be exploring arms that are about to disappear. We provide adaptations of algorithms, regret bounds, and experiments for this study, showing a clear benefit from regulating greed (exploration/exploitation) for arms that will soon disappear. We illustrate numerical performance on the Yahoo! Front Page Today Module User Click Log Dataset.
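The idea of not exploring arms that are about to disappear can be illustrated with a minimal sketch. This is not the paper's algorithm: it is a plain ε-greedy rule with an assumed lifetime cutoff, and the names `choose_arm`, `min_remaining`, and the `birth`/`death` fields are hypothetical choices made for illustration.

```python
import random


def choose_arm(arms, t, epsilon=0.1, min_remaining=5, rng=random):
    """Pick an arm index at round t, or None if no arm is available.

    arms: list of dicts with keys
        'mean'  - empirical mean reward observed so far (assumed given),
        'birth' - first round the arm is available,
        'death' - last round the arm is available (assumed known).
    min_remaining: hypothetical cutoff; arms with fewer remaining rounds
        than this are never chosen for exploration.
    """
    alive = [i for i, a in enumerate(arms) if a["birth"] <= t <= a["death"]]
    if not alive:
        return None

    # Only arms that will stay around long enough are worth exploring.
    explorable = [i for i in alive if arms[i]["death"] - t >= min_remaining]

    if explorable and rng.random() < epsilon:
        return rng.choice(explorable)  # explore among long-lived arms

    # Otherwise exploit: dying arms can still be played if they look best.
    return max(alive, key=lambda i: arms[i]["mean"])
```

Note that a dying arm is excluded from exploration only; it remains eligible for exploitation while it is still available.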

Regulating Greed Over Time

Dec 04, 2017

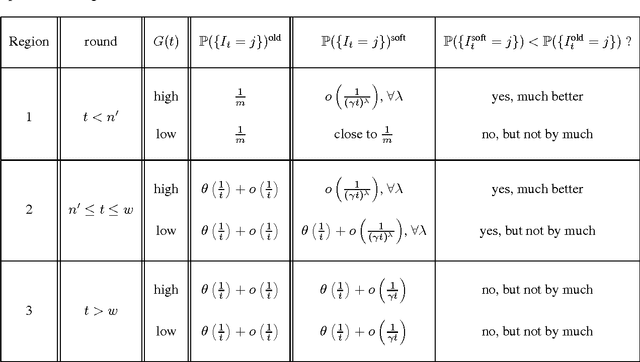

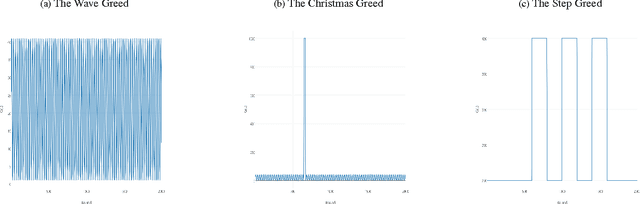

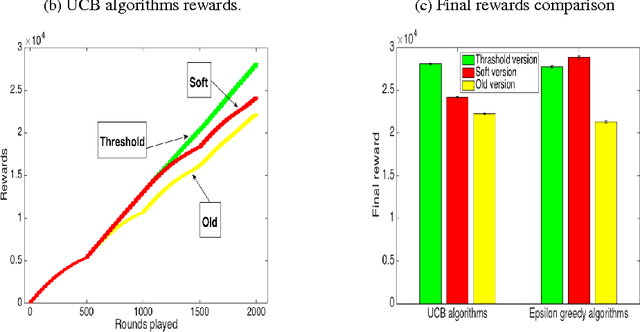

Abstract: In retail, there are predictable yet dramatic time-dependent patterns in customer behavior, such as periodic changes in the number of visitors, or increases in visitors just before major holidays. The current paradigm of multi-armed bandit analysis does not take these known patterns into account. This means that for applications in retail, where prices are fixed for periods of time, current bandit algorithms will not suffice. This work provides a remedy that takes the time-dependent patterns into account. We show how this remedy is implemented in the UCB and ε-greedy methods, and we introduce a new policy called the variable arm pool method. In the corrected methods, exploitation (greed) is regulated over time, so that more exploitation occurs during higher reward periods, and more exploration occurs in periods of low reward. In order to understand why regret is reduced with the corrected methods, we present a set of bounds that provide insight into why we would want to exploit during periods of high reward, and discuss the impact on regret. Our proposed methods perform well in experiments, and were inspired by a high-scoring entry in the Exploration and Exploitation 3 contest using data from Yahoo! Front Page. That entry heavily used time-series methods to regulate greed over time, which was substantially more effective than other contextual bandit methods.
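One simple way to picture regulating greed in an ε-greedy method is to shrink the exploration probability when a known reward multiplier is high and enlarge it when the multiplier is low. The sketch below is an assumption made for illustration, not the paper's exact schedule: the function name, the constant `c`, and the form `c / (t * multiplier)` are all hypothetical.

```python
def exploration_probability(t, multiplier, c=5.0):
    """Probability of exploring at round t (1-based).

    multiplier: known, positive reward multiplier for round t, for example
        a seasonal factor for visitor volume (assumed to be given).
    c: hypothetical tuning constant controlling how much to explore.

    A larger multiplier means a higher-reward period, so the returned
    probability is smaller and the policy exploits more.
    """
    if t < 1:
        raise ValueError("t must be at least 1")
    if multiplier <= 0:
        raise ValueError("multiplier must be positive")
    return min(1.0, c / (t * multiplier))
```

For example, at the same round a multiplier of 2.0 halves the exploration probability relative to a multiplier of 1.0, while a multiplier of 0.5 doubles it.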

Supersparse Linear Integer Models for Interpretable Classification

Apr 11, 2014

Abstract: Scoring systems are classification models that only require users to add, subtract and multiply a few meaningful numbers to make a prediction. These models are often used because they are practical and interpretable. In this paper, we introduce an off-the-shelf tool to create scoring systems that are both accurate and interpretable, known as a Supersparse Linear Integer Model (SLIM). SLIM is a discrete optimization problem that minimizes the 0-1 loss to encourage a high level of accuracy, regularizes the L0-norm to encourage a high level of sparsity, and constrains coefficients to a set of interpretable values. We illustrate the practical and interpretable nature of SLIM scoring systems through applications in medicine and criminology, and show that they are accurate and sparse in comparison to state-of-the-art classification models using numerical experiments.
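The three ingredients named in the abstract (0-1 loss, L0 regularization, and a restricted coefficient set) can be written as a single optimization problem. The form below is a sketch under assumed notation: $N$ training examples $(x_i, y_i)$ with $y_i \in \{-1, +1\}$, a coefficient vector $\lambda$, a trade-off parameter $C_0 > 0$, and a finite set $\mathcal{L}$ of allowed (for example, small integer) coefficient vectors. The paper's exact formulation may contain additional terms.

```latex
\min_{\lambda} \;
  \frac{1}{N} \sum_{i=1}^{N} \mathbb{1}\!\left[\, y_i \lambda^{\top} x_i \le 0 \,\right]
  \; + \; C_0 \, \lVert \lambda \rVert_0
\qquad \text{subject to} \qquad \lambda \in \mathcal{L}
```

The first term counts misclassified examples, the second penalizes the number of nonzero coefficients, and the constraint keeps every coefficient in the interpretable set.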
