Sparsh Gupta

Cognitive Weave: Synthesizing Abstracted Knowledge with a Spatio-Temporal Resonance Graph

Jun 09, 2025

Abstract: The emergence of capable large language model (LLM) based agents necessitates memory architectures that transcend mere data storage, enabling continuous learning, nuanced reasoning, and dynamic adaptation. Current memory systems often grapple with fundamental limitations in structural flexibility, temporal awareness, and the ability to synthesize higher-level insights from raw interaction data. This paper introduces Cognitive Weave, a novel memory framework centered around a multi-layered spatio-temporal resonance graph (STRG). This graph manages information as semantically rich insight particles (IPs), which are dynamically enriched with resonance keys, signifiers, and situational imprints via a dedicated semantic oracle interface (SOI). These IPs are interconnected through typed relational strands, forming an evolving knowledge tapestry. A key component of Cognitive Weave is the cognitive refinement process, an autonomous mechanism that includes the synthesis of insight aggregates (IAs): condensed, higher-level knowledge structures derived from identified clusters of related IPs. We present comprehensive experimental results demonstrating Cognitive Weave's marked enhancement over existing approaches in long-horizon planning tasks, evolving question-answering scenarios, and multi-session dialogue coherence. The system achieves a 34% average improvement in task completion rates and a 42% reduction in mean query latency compared to state-of-the-art baselines. Furthermore, this paper explores the ethical considerations inherent in such advanced memory systems, discusses the implications for long-term memory in LLMs, and outlines promising future research trajectories.

A Scalable Quantum Non-local Neural Network for Image Classification

Jul 26, 2024

Abstract: Non-local operations play a crucial role in computer vision, enabling the capture of long-range dependencies through weighted sums of features across the input and surpassing the constraints of traditional convolution operations that focus solely on local neighborhoods. Non-local operations typically require computing pairwise relationships between all elements in a set, leading to quadratic complexity in terms of time and memory. Due to these high computational and memory demands, scaling non-local neural networks to large-scale problems can be challenging. This article introduces a scalable hybrid quantum-classical non-local neural network, referred to as Quantum Non-Local Neural Network (QNL-Net), to enhance pattern recognition. The proposed QNL-Net relies on inherent quantum parallelism to process a large number of input features simultaneously, enabling more efficient computations in a quantum-enhanced feature space and capturing pairwise relationships through quantum entanglement. We benchmark the proposed QNL-Net against other quantum classifiers on binary classification tasks drawn from the MNIST and CIFAR-10 datasets. The simulation results show that QNL-Net achieves state-of-the-art accuracy in binary image classification among quantum classifiers while using fewer qubits.
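The classical non-local operation this abstract refers to can be illustrated with a minimal sketch. It is not the QNL-Net itself and nothing in it is quantum. It assumes dot-product similarity with softmax normalization, which is one common choice, and the function name `non_local` is hypothetical. Each output is a weighted sum over all input features, and the nested pass over every pair of positions is the source of the quadratic cost.

```python
import math


def non_local(features):
    """Classical non-local operation (illustrative sketch, not QNL-Net).

    features: list of N feature vectors (lists of floats of equal length).
    Assumes dot-product similarity and softmax weights.
    """
    n = len(features)
    outputs = []
    for i in range(n):
        # Similarity between position i and every position j: N scores per
        # position, so N * N scores overall (the quadratic cost).
        scores = [
            sum(a * b for a, b in zip(features[i], features[j]))
            for j in range(n)
        ]
        # Numerically stable softmax over the N scores.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Output at position i is the weighted sum of all feature vectors.
        dim = len(features[i])
        outputs.append(
            [sum(weights[j] * features[j][d] for j in range(n)) for d in range(dim)]
        )
    return outputs
```

Because the weights at each position are non-negative and sum to one, every output is a convex combination of the inputs. Doubling the number of positions quadruples the number of similarity scores, which is the scaling problem the abstract describes.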

Evaluating Nonlinear Decision Trees for Binary Classification Tasks with Other Existing Methods

Aug 25, 2020

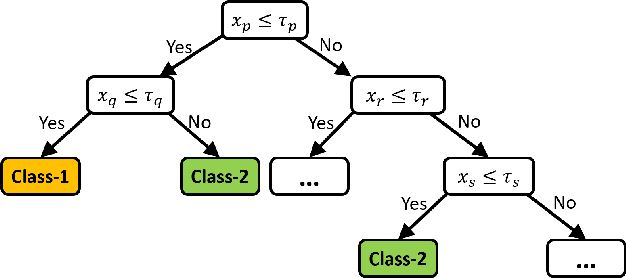

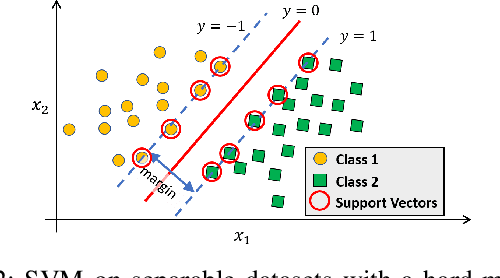

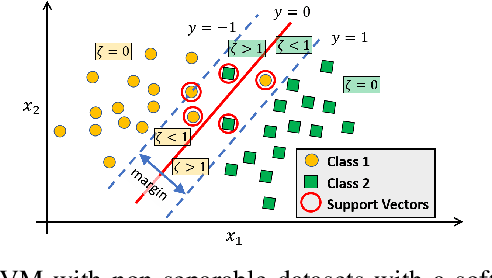

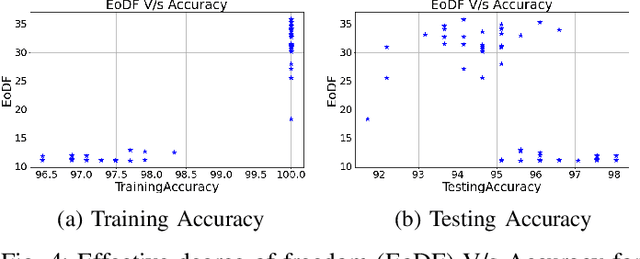

Abstract: Classification of datasets into two or more distinct classes is an important machine learning task. Many methods handle binary classification tasks with very high accuracy on test data, but cannot provide an easily interpretable explanation that gives users a deeper understanding of why the data is split into two classes. In this paper, we highlight a recently proposed nonlinear decision tree approach and evaluate it against a number of commonly used classification methods on datasets with anywhere from a few to a large number of features. The study reveals key issues such as the effect of classification on the method's parameter values, the complexity of the classifier versus achieved accuracy, and the interpretability of the resulting classifiers.
