Sophie Yacoub

Automatic retrieval of corresponding US views in longitudinal examinations

Jun 07, 2023

Abstract: Skeletal muscle atrophy is a common occurrence in critically ill patients in the intensive care unit (ICU) who spend long periods in bed. Muscle mass must be recovered through physiotherapy before patient discharge, and ultrasound imaging is frequently used to assess the recovery process by measuring muscle size over time. However, these manual measurements are subject to large variability, particularly since the scans are typically acquired on different days and potentially by different operators. In this paper, we propose a self-supervised contrastive learning approach to automatically retrieve similar ultrasound muscle views at different scan times. Three different models were compared using data from 67 patients acquired in the ICU. Results indicate that our contrastive model outperformed a supervised baseline model in the task of view retrieval, with an AUC of 73.52%, and, when combined with an automatic segmentation model, achieved 5.7% ± 0.24% error in cross-sectional area. Furthermore, a user study confirmed the efficacy of our model for muscle view retrieval.
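The retrieval step described in this abstract can be illustrated with a minimal sketch. It assumes each ultrasound view has already been mapped to an embedding vector by some encoder, and it ranks candidates by cosine similarity; the abstract does not state which similarity measure the authors use. The function name `retrieve_similar_views` and its parameters are hypothetical.

```python
import numpy as np

def retrieve_similar_views(query_embedding, candidate_embeddings, top_k=3):
    """Rank candidate views by cosine similarity to a query view.

    Assumption: embeddings come from an encoder that is not shown here;
    cosine similarity is an illustrative choice, not the paper's stated metric.
    """
    # L2-normalise so that the dot product equals cosine similarity.
    q = query_embedding / np.linalg.norm(query_embedding)
    c = candidate_embeddings / np.linalg.norm(
        candidate_embeddings, axis=1, keepdims=True
    )
    similarities = c @ q
    # Indices of the top_k most similar candidates, best first.
    order = np.argsort(-similarities)[:top_k]
    return order, similarities[order]
```

In this sketch the query would be a view from an earlier scan and the candidates would be frames from a later scan of the same patient.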

B-line Detection in Lung Ultrasound Videos: Cartesian vs Polar Representation

Jul 26, 2021

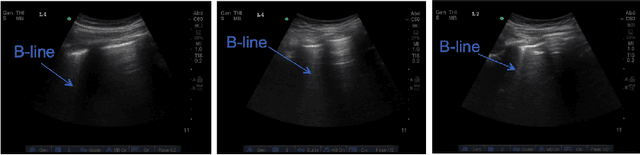

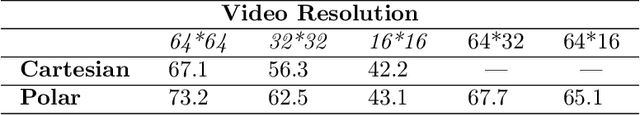

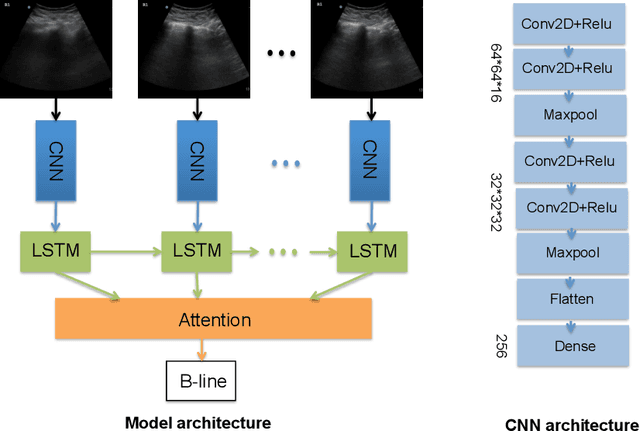

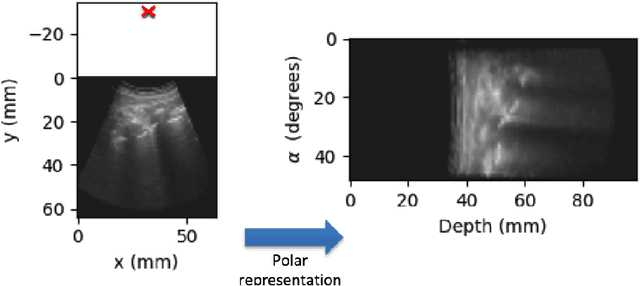

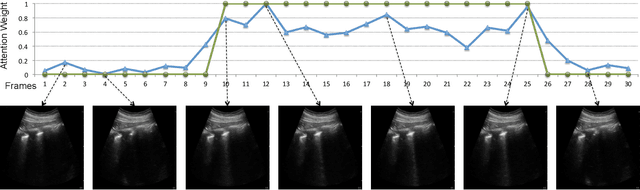

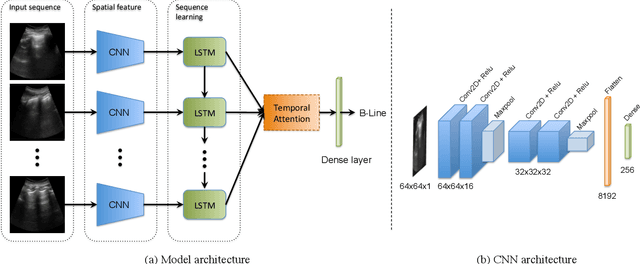

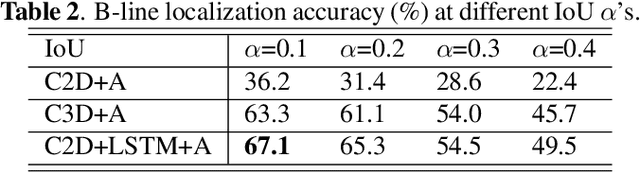

Abstract: Lung ultrasound (LUS) imaging is becoming popular in intensive care units (ICUs) for assessing lung abnormalities such as the appearance of B-line artefacts as a result of severe dengue. These artefacts appear in the LUS images and disappear quickly, making their manual detection very challenging. They also extend radially, following the propagation of the sound waves. As a result, we hypothesize that a polar representation may be more adequate for automatic analysis of these images. This paper presents an attention-based Convolutional+LSTM model to automatically detect B-lines in LUS videos, comparing performance when image data is taken in Cartesian and polar representations. Results indicate that the proposed framework with polar representation achieves competitive performance compared to the Cartesian representation for B-line classification, and that the attention mechanism can provide better localization.
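To make the polar representation concrete, the sketch below resamples a Cartesian image onto an (r, θ) grid so that structures extending radially from the probe apex become straight columns. It is an illustration under stated assumptions, not the authors' preprocessing: the apex position, angular range, grid size, and nearest-neighbour sampling are all hypothetical choices, as is the function name `cartesian_to_polar`.

```python
import numpy as np

def cartesian_to_polar(image, apex, r_max, theta_min, theta_max,
                       n_r=64, n_theta=64):
    """Resample a 2D image onto an (r, theta) grid by nearest-neighbour lookup.

    apex: (row, col) of the assumed sector apex.
    Angles are in radians, measured from the downward vertical axis.
    """
    radii = np.linspace(0.0, r_max, n_r)
    thetas = np.linspace(theta_min, theta_max, n_theta)
    rr, tt = np.meshgrid(radii, thetas, indexing="ij")

    # Cartesian pixel coordinates of every (r, theta) sample.
    rows = np.rint(apex[0] + rr * np.cos(tt)).astype(int)
    cols = np.rint(apex[1] + rr * np.sin(tt)).astype(int)

    # Samples that fall outside the image are left at zero.
    inside = (
        (rows >= 0) & (rows < image.shape[0])
        & (cols >= 0) & (cols < image.shape[1])
    )
    polar = np.zeros((n_r, n_theta), dtype=image.dtype)
    polar[inside] = image[rows[inside], cols[inside]]
    return polar
```

In the output, each column corresponds to one beam direction, so a line running radially from the apex occupies a single column.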

Automatic Detection of B-lines in Lung Ultrasound Videos From Severe Dengue Patients

Feb 01, 2021

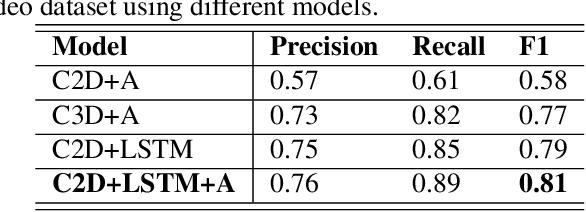

Abstract: Lung ultrasound (LUS) imaging is used to assess lung abnormalities, including the presence of B-line artefacts due to fluid leakage into the lungs caused by a variety of diseases. However, manual detection of these artefacts is challenging. In this paper, we propose a novel methodology to automatically detect and localize B-lines in LUS videos using deep neural networks trained with weak labels. To this end, we combine a convolutional neural network (CNN) with a long short-term memory (LSTM) network and a temporal attention mechanism. Four different models are compared using data from 60 patients. Results show that our best model can determine whether one-second clips contain B-lines with an F1 score of 0.81, and extracts a representative frame with B-lines with an accuracy of 87.5%.
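The role of temporal attention in picking a representative frame can be sketched as follows. The example assumes per-frame feature vectors are already available (for instance from a CNN+LSTM, which is not shown) and uses a simple linear scoring vector `w`; both the scoring form and the function name `temporal_attention_pool` are hypothetical and not taken from the paper.

```python
import numpy as np

def temporal_attention_pool(frame_features, w):
    """Pool per-frame features of shape (T, D) with softmax attention over time.

    Assumption: w is a scoring vector of shape (D,) that would be learned
    in practice; here it is supplied by the caller.
    """
    scores = frame_features @ w
    # Subtract the maximum for a numerically stable softmax.
    scores = scores - scores.max()
    weights = np.exp(scores) / np.exp(scores).sum()

    # Clip-level feature: attention-weighted sum of the frame features.
    pooled = weights @ frame_features
    # The frame with the largest weight serves as the representative frame.
    representative_frame = int(np.argmax(weights))
    return pooled, weights, representative_frame
```

Because the weights are defined per frame, a clip-level label is enough to train such a layer while still yielding a frame index at inference time, which is consistent with the weak-label setting the abstract describes.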
