Siniša Šegvić

Dynamic loss balancing and sequential enhancement for road-safety assessment and traffic scene classification

Nov 08, 2022

Abstract: Road-safety inspection is an indispensable instrument for reducing road-accident fatalities attributed to road infrastructure. Recent work formalizes road-safety assessment in terms of carefully selected risk factors that are also known as road-safety attributes. In current practice, these attributes are manually annotated in geo-referenced monocular video for each road segment. We propose to reduce dependency on tedious human labor by automating recognition with a two-stage neural architecture. The first stage predicts more than forty road-safety attributes by observing a local spatio-temporal context. Our design leverages an efficient convolutional pipeline, which benefits from pre-training on semantic segmentation of street scenes. The second stage enhances predictions through sequential integration across a larger temporal window. Our design leverages per-attribute instances of a lightweight bidirectional LSTM architecture. Both stages alleviate extreme class imbalance by incorporating a multi-task variant of recall-based dynamic loss weighting. We perform experiments on the iRAP-BH dataset, which involves fully labeled geo-referenced video along 2,300 km of public roads in Bosnia and Herzegovina. We also validate our approach by comparing it with the related work on two road-scene classification datasets from the literature: Honda Scenes and FM3m. Experimental evaluation confirms the value of our contributions on all three datasets.
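The abstract does not spell out the weighting formula, so the following is only a minimal sketch of one common form of recall-based dynamic loss weighting: each class is weighted by one minus its current recall, and the weights are recomputed separately for every attribute (task). The function names, the `1 - recall` rule, and the per-attribute summation are illustrative assumptions, not the authors' implementation.

```python
import math

def recall_weights(targets, predictions, num_classes):
    """Per-class weights 1 - recall (assumed rule, not taken from the paper)."""
    hits = [0] * num_classes
    counts = [0] * num_classes
    for t, p in zip(targets, predictions):
        counts[t] += 1
        if t == p:
            hits[t] += 1
    # Classes absent from the evaluated samples keep the full weight 1.0.
    return [1.0 - hits[c] / counts[c] if counts[c] else 1.0
            for c in range(num_classes)]

def weighted_nll(probs, targets, weights):
    """Mean negative log-likelihood with per-class weights."""
    total = sum(-weights[t] * math.log(p[t]) for p, t in zip(probs, targets))
    return total / len(targets)

def multi_task_loss(tasks):
    """Sum of weighted losses over attributes.

    `tasks` maps a (hypothetical) attribute name to a tuple
    (probs, targets, previous_predictions, num_classes); the weights of each
    attribute are derived from its own recall only.
    """
    loss = 0.0
    for probs, targets, prev_preds, num_classes in tasks.values():
        w = recall_weights(targets, prev_preds, num_classes)
        loss += weighted_nll(probs, targets, w)
    return loss
```

Under this rule a rare class that the model currently misses (low recall) dominates the loss, while a frequent class that is already recognized well contributes little, which is the intended counterweight to class imbalance.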

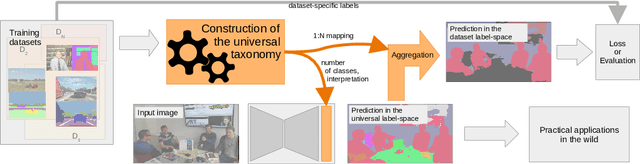

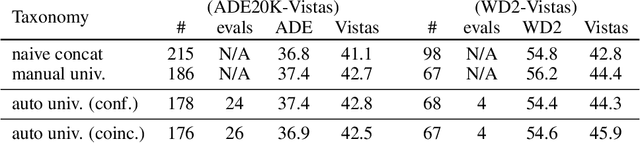

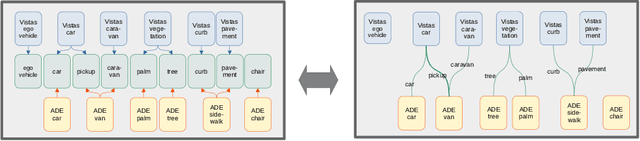

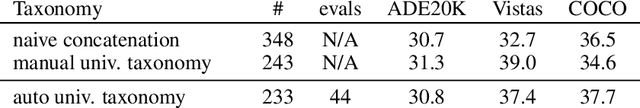

Automatic universal taxonomies for multi-domain semantic segmentation

Jul 18, 2022

Abstract: Training semantic segmentation models on multiple datasets has sparked a lot of recent interest in the computer vision community. This interest has been motivated by expensive annotations and a desire to achieve proficiency across multiple visual domains. However, established datasets have mutually incompatible labels, which disrupt principled inference in the wild. We address this issue by automatic construction of universal taxonomies through iterative dataset integration. Our method detects subset-superset relationships between dataset-specific labels, and supports learning of sub-class logits by treating super-classes as partial labels. We present experiments on collections of standard datasets and demonstrate competitive generalization performance with respect to previous work.

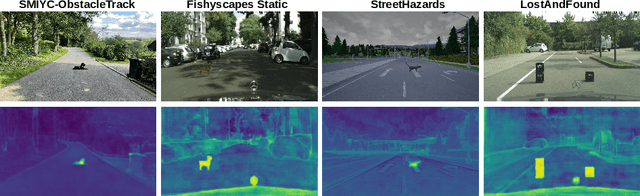

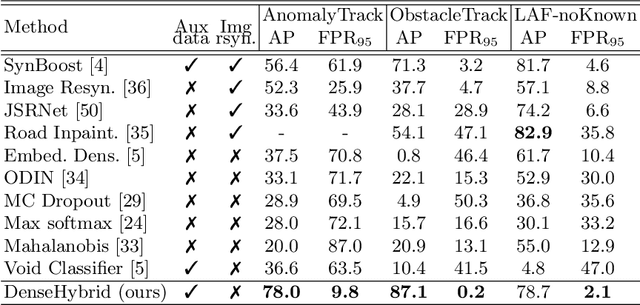

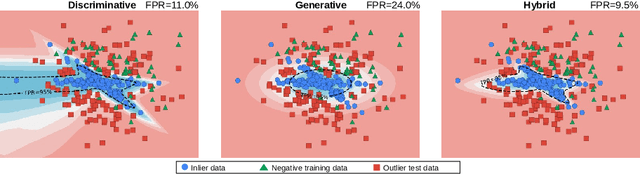

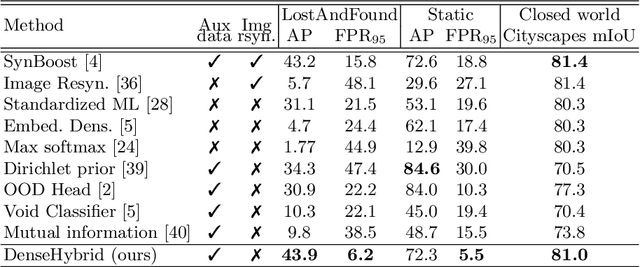

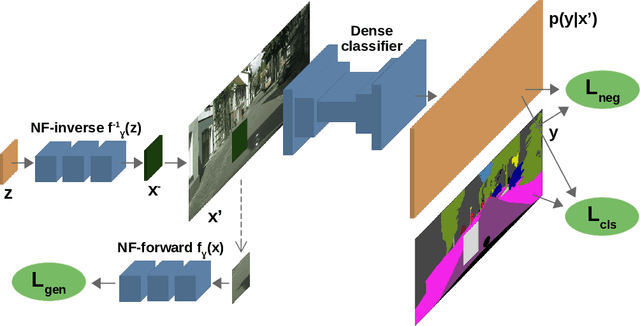

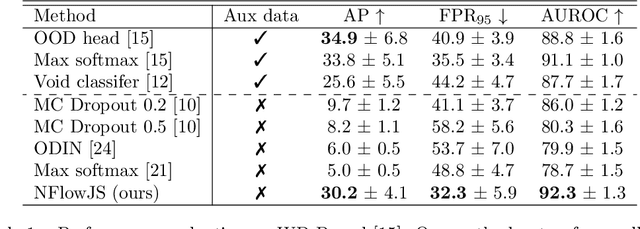

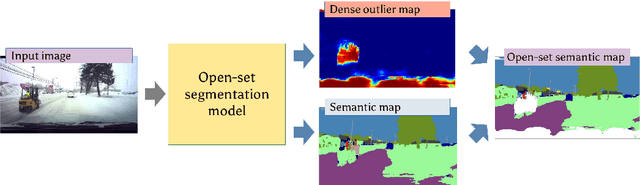

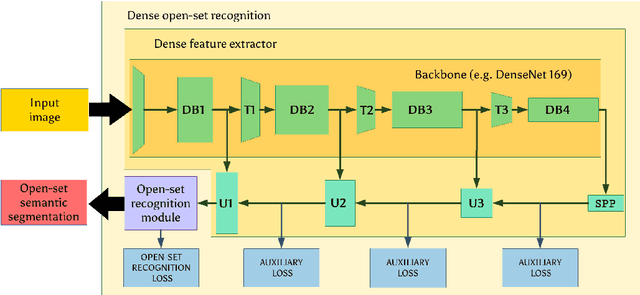

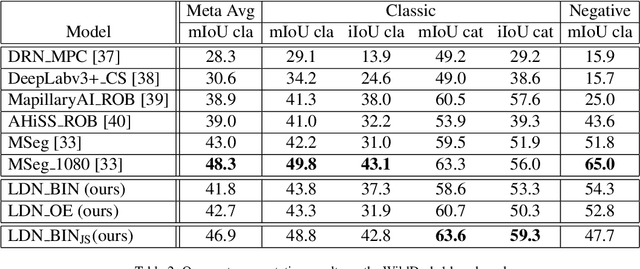

DenseHybrid: Hybrid Anomaly Detection for Dense Open-set Recognition

Jul 06, 2022

Abstract: Anomaly detection can be conceived either through generative modelling of regular training data or by discriminating with respect to negative training data. These two approaches exhibit different failure modes. Consequently, hybrid algorithms present an attractive research goal. Unfortunately, dense anomaly detection requires translational equivariance and very large input resolutions. To the best of our knowledge, these requirements disqualify all previous hybrid approaches. We therefore design a novel hybrid algorithm based on reinterpreting discriminative logits as a logarithm of the unnormalized joint distribution $\hat{p}(\mathbf{x}, \mathbf{y})$. Our model builds on a shared convolutional representation from which we recover three dense predictions: i) the closed-set class posterior $P(\mathbf{y}|\mathbf{x})$, ii) the dataset posterior $P(d_{in}|\mathbf{x})$, iii) the unnormalized data likelihood $\hat{p}(\mathbf{x})$. The latter two predictions are trained both on the standard training data and on a generic negative dataset. We blend these two predictions into a hybrid anomaly score which allows dense open-set recognition on large natural images. We carefully design a custom loss for the data likelihood in order to avoid backpropagation through the intractable normalizing constant $Z(\theta)$. Experiments evaluate our contributions on standard dense anomaly detection benchmarks as well as in terms of open-mIoU, a novel metric for dense open-set performance. Our submissions achieve state-of-the-art performance despite negligible computational overhead over the standard semantic segmentation baseline.
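A per-pixel sketch of the reinterpretation described above: if the logits are read as $\ln \hat{p}(\mathbf{x}, \mathbf{y})$, then summing their exponentials over classes gives the unnormalized likelihood $\hat{p}(\mathbf{x})$, and softmax recovers $P(\mathbf{y}|\mathbf{x})$. The abstract does not state how the two predictions are blended, so the score below, $\ln P(d_{out}|\mathbf{x}) - \ln \hat{p}(\mathbf{x})$, is an assumed illustrative choice, and the function names and the separate scalar `d_in_logit` head are hypothetical.

```python
import math

def log_sum_exp(logits):
    """ln sum_y exp(logit_y), i.e. ln p_hat(x) under the joint reading."""
    m = max(logits)
    return m + math.log(sum(math.exp(z - m) for z in logits))

def class_posterior(logits):
    """Closed-set posterior P(y|x): the usual softmax over the same logits."""
    lse = log_sum_exp(logits)
    return [math.exp(z - lse) for z in logits]

def hybrid_anomaly_score(logits, d_in_logit):
    """Assumed blend: ln P(d_out|x) - ln p_hat(x); larger means more anomalous.

    `d_in_logit` is the pre-sigmoid output of a (hypothetical) dataset-posterior
    head, so P(d_in|x) = sigmoid(d_in_logit).
    """
    # ln(1 - sigmoid(z)) = -softplus(z), computed in a numerically stable way.
    softplus = max(d_in_logit, 0.0) + math.log1p(math.exp(-abs(d_in_logit)))
    log_p_out = -softplus
    return log_p_out - log_sum_exp(logits)
```

With this blend, a pixel is flagged as anomalous when either the discriminative head considers it unlikely to be an inlier or the unnormalized likelihood is low; the normalizing constant $Z(\theta)$ shifts all scores by the same amount and therefore does not affect the ranking of pixels.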

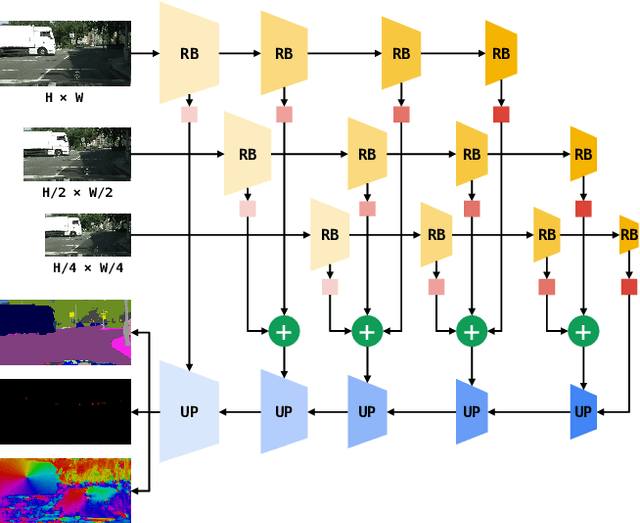

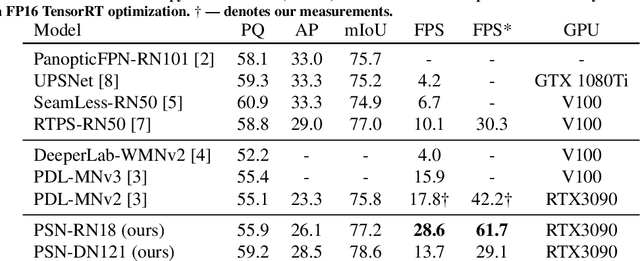

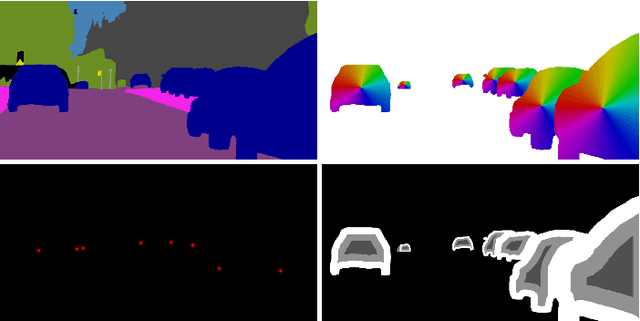

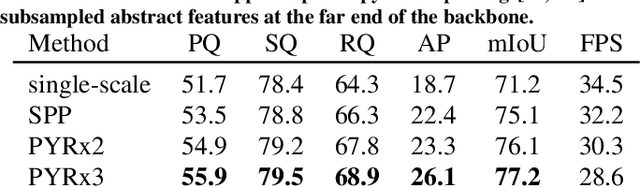

Panoptic SwiftNet: Pyramidal Fusion for Real-time Panoptic Segmentation

Mar 15, 2022

Abstract: Dense panoptic prediction is a key ingredient in many existing applications such as autonomous driving, automated warehouses or agri-robotics. However, most of these applications leverage the recovered dense semantics as an input to visual closed-loop control. Hence, practical deployments require real-time inference over large input resolutions on embedded hardware. These requirements call for computationally efficient approaches which deliver high accuracy with limited computational resources. We propose to achieve this goal by trading off backbone capacity for multi-scale feature extraction. In comparison with contemporaneous approaches to panoptic segmentation, the main novelties of our method are scale-equivariant feature extraction and cross-scale upsampling through pyramidal fusion. Our best model achieves 55.9% PQ on Cityscapes val at 60 FPS on full-resolution 2 MPx images on an RTX 3090 with FP16 TensorRT optimization.

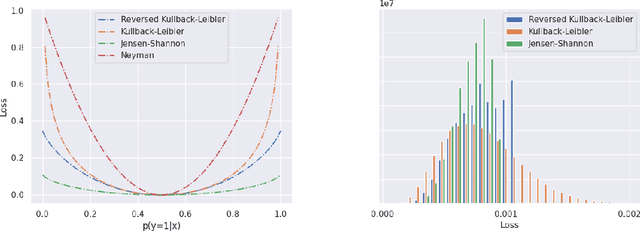

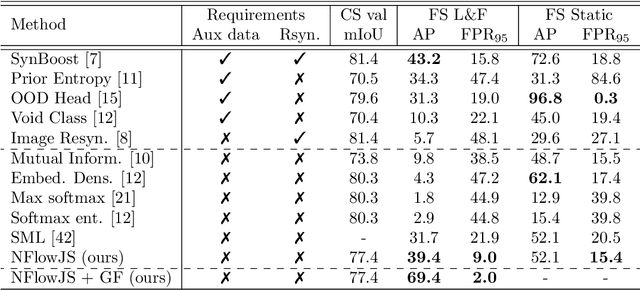

Dense anomaly detection by robust learning on synthetic negative data

Dec 31, 2021

Abstract: Standard machine learning is unable to accommodate inputs which do not belong to the training distribution. The resulting models often give rise to confident incorrect predictions which may lead to devastating consequences. This problem is especially demanding in the context of dense prediction since input images may be partially anomalous. Previous work has addressed dense anomaly detection by discriminative training on mixed-content images. We extend this approach with synthetic negative patches which simultaneously achieve high inlier likelihood and uniform discriminative prediction. We generate synthetic negatives with normalizing flows due to their outstanding distribution coverage and capability to generate samples at different resolutions. We also propose to detect anomalies according to a principled information-theoretic criterion which can be consistently applied through training and inference. The resulting models set the new state of the art on standard benchmarks and datasets while incurring minimal computational overhead and refraining from auxiliary negative data.

Multi-domain semantic segmentation with overlapping labels

Aug 25, 2021

Abstract: Deep supervised models have an unprecedented capacity to absorb large quantities of training data. Hence, training on many datasets becomes a method of choice towards graceful degradation in unusual scenes. Unfortunately, different datasets often use incompatible labels. For instance, the Cityscapes road class subsumes all driving surfaces, while Vistas defines separate classes for road markings, manholes, etc. We address this challenge by proposing a principled method for seamless learning on datasets with overlapping classes based on partial labels and probabilistic loss. Our method achieves competitive within-dataset and cross-dataset generalization, as well as the ability to learn visual concepts which are not separately labeled in any of the training datasets. Experiments reveal competitive or state-of-the-art performance on two multi-domain dataset collections and on the WildDash 2 benchmark.
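One way to read "partial labels and probabilistic loss" is the following minimal sketch: the model predicts over a fine-grained universal label set, and a coarse dataset label is scored by the summed probability of all universal classes it covers. The three-class universal taxonomy, the index mapping, and the function names are assumptions made for illustration; the abstract only names the Cityscapes road and Vistas road-marking/manhole example.

```python
import math

# Hypothetical universal classes: 0 = plain road, 1 = road marking, 2 = manhole.
CITYSCAPES_ROAD = {0, 1, 2}   # Cityscapes "road" subsumes all driving surfaces
VISTAS_MANHOLE = {2}          # Vistas labels manholes separately

def softmax(logits):
    """Numerically stable softmax over universal-class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def partial_label_nll(logits, allowed):
    """Negative log of the total probability assigned to the allowed classes.

    `allowed` is the set of universal classes consistent with the dataset
    label, so a coarse label does not penalize any of its sub-classes.
    """
    probs = softmax(logits)
    return -math.log(sum(probs[k] for k in allowed))
```

For a pixel labeled "road" in Cityscapes the loss is zero whenever all probability mass falls on any mix of the three sub-classes, whereas the same pixel labeled "manhole" in Vistas pushes the mass onto the single matching class.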

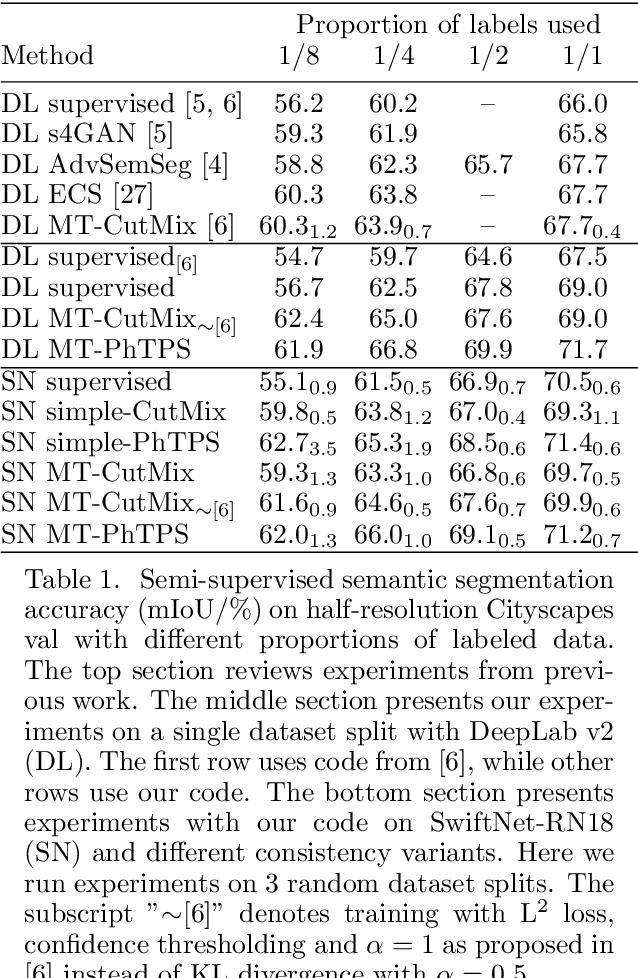

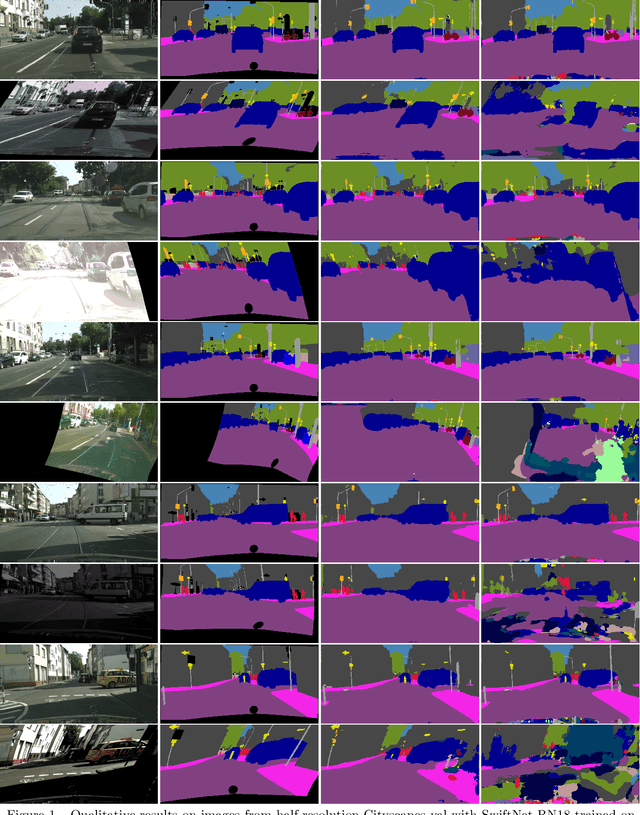

A baseline for semi-supervised learning of efficient semantic segmentation models

Jun 15, 2021

Abstract: Semi-supervised learning is especially interesting in the dense prediction context due to the high cost of pixel-level ground truth. Unfortunately, most such approaches are evaluated on outdated architectures which hamper research due to very slow training and high requirements on GPU RAM. We address this concern by presenting a simple and effective baseline which works very well both on standard and efficient architectures. Our baseline is based on one-way consistency and non-linear geometric and photometric perturbations. We show the advantage of perturbing only the student branch and present a plausible explanation of such behaviour. Experiments on Cityscapes and CIFAR-10 demonstrate competitive performance with respect to prior work.

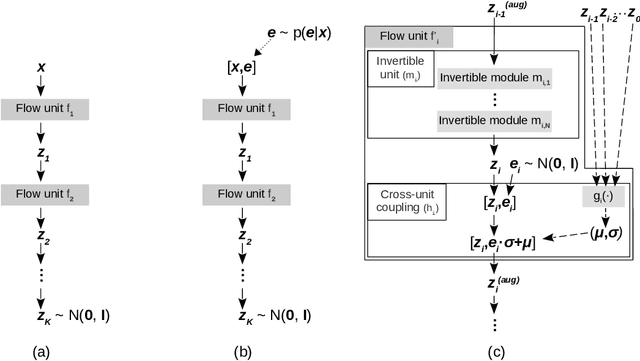

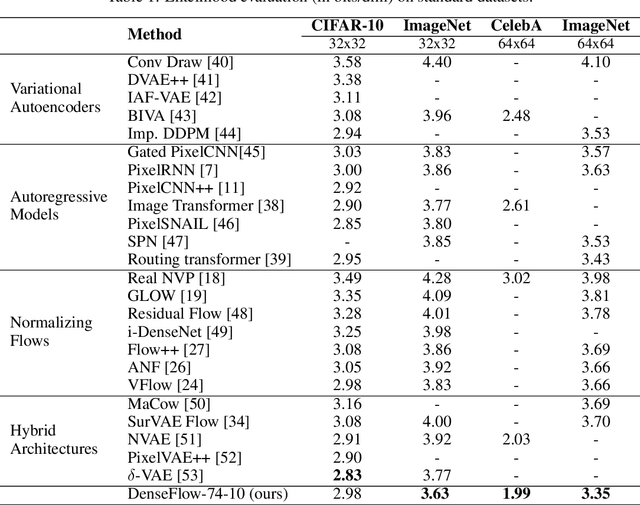

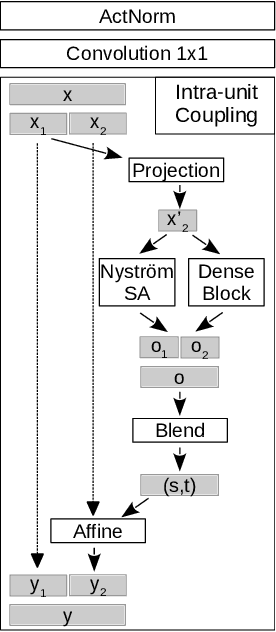

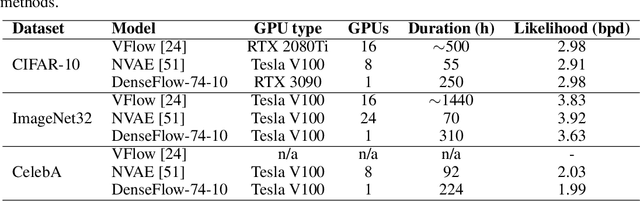

Densely connected normalizing flows

Jun 08, 2021

Abstract: Normalizing flows are bijective mappings between inputs and latent representations with a fully factorized distribution. They are very attractive due to exact likelihood evaluation and efficient sampling. However, their effective capacity is often insufficient since the bijectivity constraint limits the model width. We address this issue by incrementally padding intermediate representations with noise. We precondition the noise in accordance with previous invertible units, which we describe as cross-unit coupling. Our invertible glow-like modules express intra-unit affine coupling as a fusion of a densely connected block and Nyström self-attention. We refer to our architecture as DenseFlow since both cross-unit and intra-unit couplings rely on dense connectivity. Experiments show significant improvements due to the proposed contributions, and reveal state-of-the-art density estimation among all generative models under moderate computing budgets.

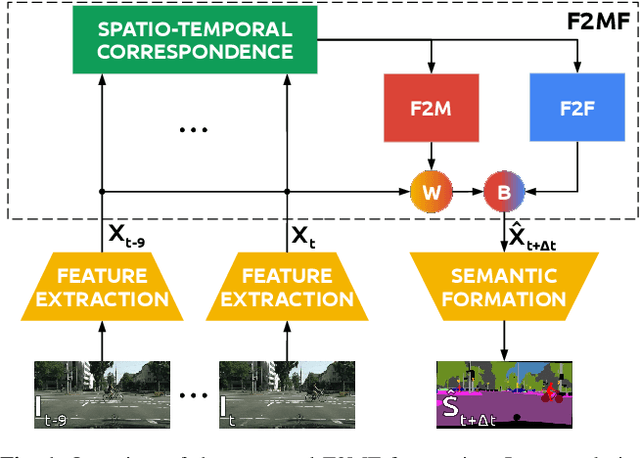

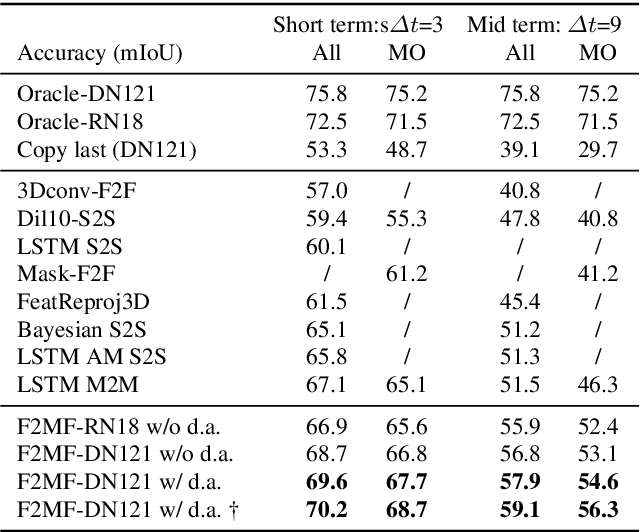

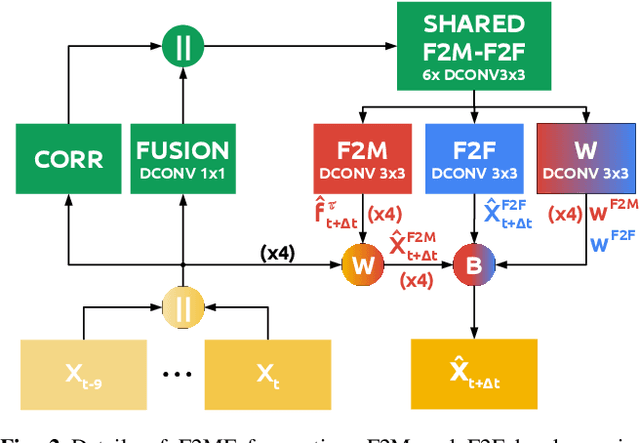

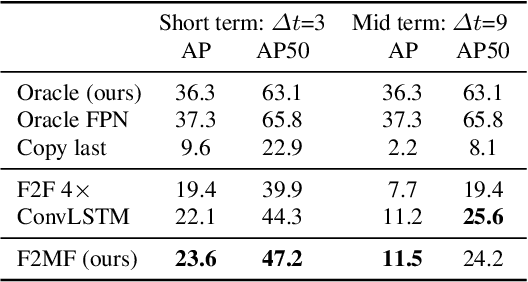

Joint Forecasting of Features and Feature Motion for Dense Semantic Future Prediction

Jan 26, 2021

Abstract: We present a novel dense semantic forecasting approach which is applicable to a variety of architectures and tasks. The approach consists of two modules. The feature-to-motion (F2M) module forecasts a dense deformation field which warps past features into their future positions. The feature-to-feature (F2F) module regresses the future features directly and is therefore able to account for emergent scenery. The compound F2MF approach decouples the effects of motion from the effects of novelty in a task-agnostic manner. We aim to apply F2MF forecasting to the most subsampled and the most abstract representation of a desired single-frame model. Our implementations take advantage of deformable convolutions and pairwise correlation coefficients across neighbouring time instants. We perform experiments on three dense prediction tasks: semantic segmentation, instance-level segmentation, and panoptic segmentation. The results reveal state-of-the-art forecasting accuracy across all three modalities on the Cityscapes dataset.

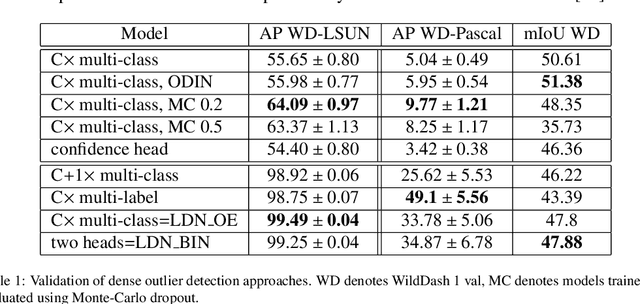

Dense outlier detection and open-set recognition based on training with noisy negative images

Jan 22, 2021

Abstract: Deep convolutional models often produce inadequate predictions for inputs foreign to the training distribution. Consequently, the problem of detecting outlier images has recently been receiving a lot of attention. Unlike most previous work, we address this problem in the dense prediction context in order to be able to locate outlier objects in front of in-distribution background. Our approach is based on two reasonable assumptions. First, we assume that the inlier dataset is related to some narrow application field (e.g. road driving). Second, we assume that there exists a general-purpose dataset which is much more diverse than the inlier dataset (e.g. ImageNet-1k). We consider pixels from the general-purpose dataset as noisy negative training samples since most (but not all) of them are outliers. We encourage the model to recognize borders between known and unknown by pasting jittered negative patches over inlier training images. Our experiments target two dense open-set recognition benchmarks (WildDash 1 and Fishyscapes) and one dense open-set recognition dataset (StreetHazard). Extensive performance evaluation indicates competitive potential of the proposed approach.
