Sina Khanagha

Bone-conduction Guided Multimodal Speech Enhancement with Conditional Diffusion Models

Jan 18, 2026

Abstract: Single-channel speech enhancement models face significant performance degradation in extremely noisy environments. While prior work has shown that complementary bone-conducted speech can guide enhancement, effective integration of this noise-immune modality remains a challenge. This paper introduces a novel multimodal speech enhancement framework that integrates bone-conduction sensors with air-conducted microphones using a conditional diffusion model. Our proposed model significantly outperforms previously established multimodal techniques and a powerful diffusion-based single-modal baseline across a wide range of acoustic conditions.

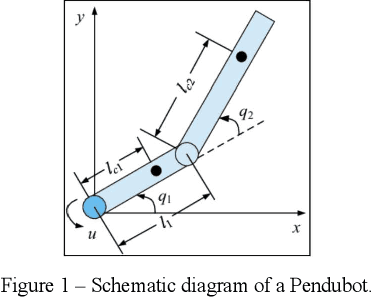

Transfer Learning for Estimation of Pendubot Angular Position Using Deep Neural Networks

Jan 29, 2022

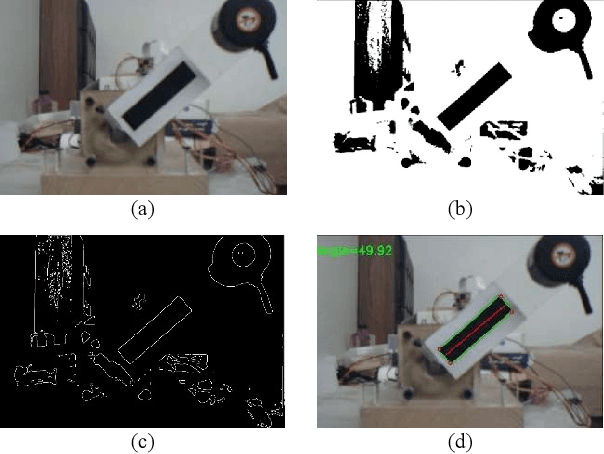

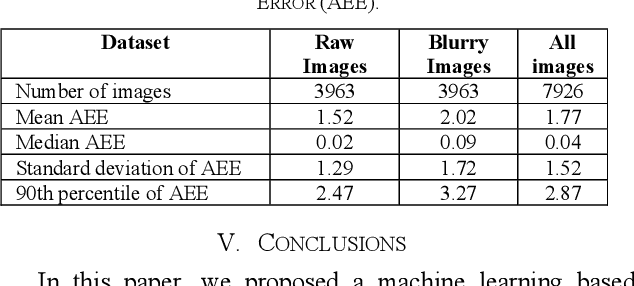

Abstract: In this paper, a machine-learning-based approach is introduced to estimate the Pendubot angular position from its captured images. Initially, a baseline algorithm is introduced to estimate the angle using a conventional image processing technique. The baseline algorithm performs well when the Pendubot is not moving fast. However, when the Pendubot moves quickly due to a free fall, it appears as a blurred object in the captured image, and the baseline algorithm fails to estimate the angle. Consequently, a Deep Neural Network (DNN) based algorithm is introduced to cope with this challenge. The approach relies on transfer learning to allow the DNN to be trained on a very small fine-tuning dataset. The baseline algorithm is used to create the ground-truth labels of the fine-tuning dataset. Experimental results on the held-out evaluation set show that the proposed approach achieves a median absolute error of 0.02 and 0.06 degrees for the sharp and blurry images, respectively.
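The reported metric, median absolute error in degrees, can be illustrated with a minimal sketch. This is not the authors' evaluation code: the function names `angular_error_deg` and `median_absolute_error_deg` are hypothetical, and wrapping the difference around 360 degrees is an assumption that the abstract does not state.

```python
from statistics import median


def angular_error_deg(predicted, reference):
    """Smallest absolute difference between two angles, in degrees.

    Assumption: angles wrap around at 360 degrees, so 359 and 1 differ by 2.
    """
    diff = (predicted - reference) % 360.0
    return min(diff, 360.0 - diff)


def median_absolute_error_deg(predictions, references):
    """Median of the per-image absolute angular errors, in degrees."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        raise ValueError("at least one prediction is required")
    return median(angular_error_deg(p, r) for p, r in zip(predictions, references))


if __name__ == "__main__":
    # Made-up values for illustration only; they are not data from the paper.
    predicted_angles = [10.0, 20.0, 30.0]
    reference_angles = [10.5, 20.0, 28.0]
    print(median_absolute_error_deg(predicted_angles, reference_angles))
```

The median is less sensitive than the mean to a few large outliers, which may be one reason to report it when some blurry frames are estimated poorly.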
