Simone Venturi

Adaptive Physics-Informed Neural Operator for Coarse-Grained Non-Equilibrium Flows

Oct 27, 2022

Abstract: This work proposes a new machine learning (ML)-based paradigm aiming to enhance the computational efficiency of non-equilibrium reacting flow simulations while ensuring compliance with the underlying physics. The framework combines dimensionality reduction and neural operators through a hierarchical and adaptive deep learning strategy to learn the solution of multi-scale coarse-grained governing equations for chemical kinetics. The proposed surrogate's architecture is structured as a tree, where the leaf nodes correspond to separate physics-informed deep operator networks (PI-DeepONets). The hierarchical structure has two advantages: (i) it simplifies the training phase via transfer learning, starting from the slowest temporal scales; (ii) it accelerates the prediction step by enabling adaptivity, as the surrogate's evaluation is limited to the leaf nodes required by the local degree of non-equilibrium of the gas. The model is applied to chemical kinetics relevant to hypersonic flight, and it is tested here on a pure oxygen gas mixture. The proposed ML framework can adaptively predict the dynamics of almost thirty species with a relative error smaller than 4% for a broad range of initial conditions. This work lays the foundation for constructing an efficient ML-based surrogate coupled with reactive Navier-Stokes solvers for accurately characterizing non-equilibrium phenomena.
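The adaptive evaluation idea can be illustrated with a toy tree in which whole subtrees are skipped when the local state does not need them. This is a minimal sketch under stated assumptions, not the authors' implementation: the `Node` class, the scalar `noneq` indicator, the per-node `threshold` rule, and the closed-form leaf functions (standing in for trained PI-DeepONets) are all hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    """Hypothetical tree node; a leaf's `model` stands in for one trained PI-DeepONet."""
    name: str
    # Assumed rule: this subtree is evaluated only if the local non-equilibrium degree >= threshold.
    threshold: float
    model: Optional[Callable[[float], float]] = None
    children: List["Node"] = field(default_factory=list)


def predict(node: Node, t: float, noneq: float, visited: List[str]) -> float:
    """Sum the contributions of active nodes, pruning subtrees the local state does not need."""
    if noneq < node.threshold:
        return 0.0  # skip: this subtree's (faster) scales are assumed to be already relaxed
    visited.append(node.name)
    total = node.model(t) if node.model is not None else 0.0
    for child in node.children:
        total += predict(child, t, noneq, visited)
    return total


# Toy leaves (hypothetical closed-form stand-ins, not trained operators):
# a slow-scale leaf that is always evaluated and a fast-scale correction
# that is only needed far from equilibrium.
slow = Node("slow", threshold=0.0, model=lambda t: 1.0 - math.exp(-t))
fast = Node("fast", threshold=0.5, model=lambda t: 0.1 * math.exp(-10.0 * t))
root = Node("root", threshold=0.0, children=[slow, fast])

visited_near: List[str] = []
y_near = predict(root, t=1.0, noneq=0.1, visited=visited_near)

visited_far: List[str] = []
y_far = predict(root, t=1.0, noneq=0.9, visited=visited_far)

print(visited_near, visited_far)  # ['root', 'slow'] ['root', 'slow', 'fast']
```

Near equilibrium only the slow-scale leaf is evaluated, so the cost of a query grows with the local degree of non-equilibrium rather than with the full size of the tree.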

SVD Perspectives for Augmenting DeepONet Flexibility and Interpretability

Apr 27, 2022

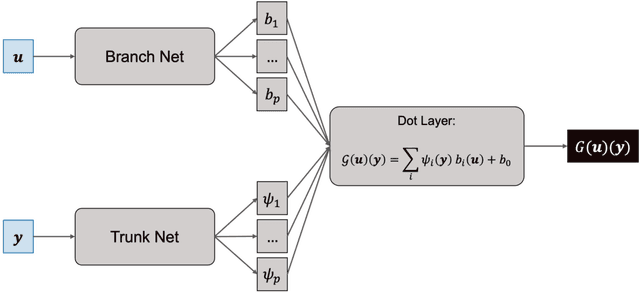

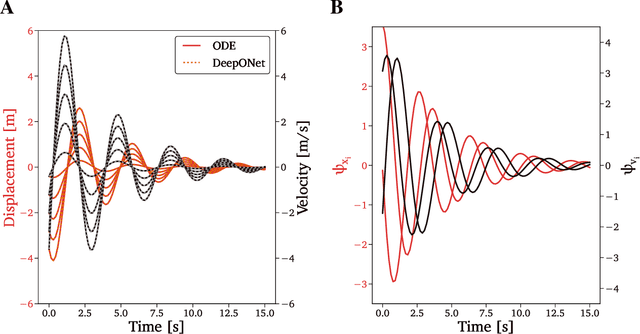

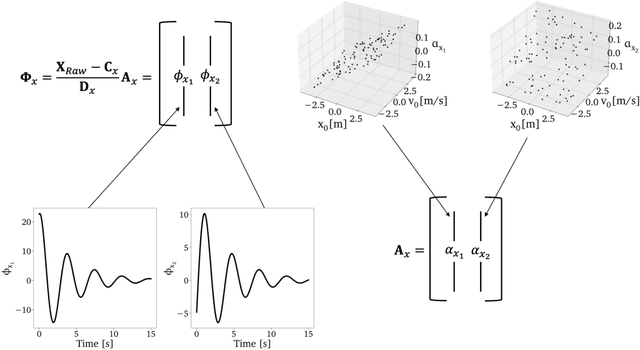

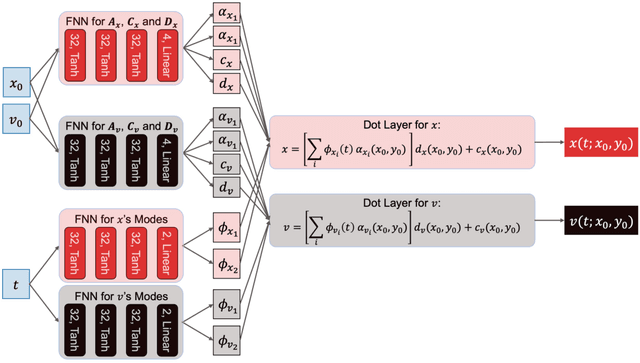

Abstract: Deep operator networks (DeepONets) are powerful architectures for fast and accurate emulation of complex dynamics. As their remarkable generalization capabilities are primarily enabled by their projection-based attribute, we investigate connections with low-rank techniques derived from the singular value decomposition (SVD). We demonstrate that some of the concepts behind proper orthogonal decomposition (POD)-neural networks can improve DeepONet's design and training phases. These ideas lead us to a methodology extension that we name SVD-DeepONet. Moreover, through multiple SVD analyses, we find that DeepONet's projection-based nature makes it strongly inefficient at representing dynamics characterized by symmetries. Inspired by the work on shifted-POD, we develop flexDeepONet, an architecture enhancement that relies on a pre-transformation network for generating a moving reference frame and isolating the rigid components of the dynamics. In this way, the physics can be represented on a latent space free from rotations, translations, and stretches, and an accurate projection can be performed onto a low-dimensional basis. In addition to flexibility and interpretability, the proposed perspectives increase DeepONet's generalization capabilities and computational efficiency. For instance, we show that flexDeepONet can accurately surrogate the dynamics of 19 variables in a combustion chemistry application while relying on 95% fewer trainable parameters than the vanilla architecture. We argue that DeepONet and SVD-based methods can reciprocally benefit from each other. In particular, the flexibility of the former in leveraging multiple data sources and multifidelity knowledge, in the form of both unstructured data and physics-informed constraints, has the potential to greatly extend the applicability of methodologies such as POD and PCA.
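The projection-based view and the moving-reference-frame idea can be sketched in a few lines. A DeepONet output is an inner product between branch coefficients (depending on the input function samples `u`) and trunk basis values (depending on the query coordinate `y`), plus a bias. The sketch below is a minimal illustration under stated assumptions: the branch, trunk, and shift functions are hand-chosen closed forms rather than trained networks, and the pre-transformation handles translation only, whereas the abstract also mentions rotations and stretches.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def deeponet(branch: Callable[[Sequence[float]], Vector],
             trunk: Callable[[float], Vector],
             u: Sequence[float], y: float, bias: float = 0.0) -> float:
    """Vanilla DeepONet output: inner product of branch coefficients and trunk basis values."""
    b = branch(u)  # coefficients that depend on the input function samples u
    t = trunk(y)   # basis functions evaluated at the query coordinate y
    if len(b) != len(t):
        raise ValueError("branch and trunk must have the same latent dimension")
    return sum(bk * tk for bk, tk in zip(b, t)) + bias


def flex_deeponet(branch: Callable[[Sequence[float]], Vector],
                  trunk: Callable[[float], Vector],
                  shift: Callable[[Sequence[float]], float],
                  u: Sequence[float], y: float, bias: float = 0.0) -> float:
    """Translation-only pre-transformation: query the trunk in a u-dependent moving frame."""
    return deeponet(branch, trunk, u, y - shift(u), bias)


# Toy target operator (hypothetical): G(u)(y) = exp(-(y - c)^2), a Gaussian pulse
# whose center c = u[0] moves with the input. In the frame y - c, one mode suffices.
def branch(u: Sequence[float]) -> Vector:
    return [1.0]


def trunk(y: float) -> Vector:
    return [math.exp(-y * y)]


def shift(u: Sequence[float]) -> float:
    return u[0]


u = [2.5]
for y in (0.0, 2.5, 4.0):
    exact = math.exp(-(y - u[0]) ** 2)
    approx = flex_deeponet(branch, trunk, shift, u, y)
    print(f"y={y}: exact={exact:.6f} flex={approx:.6f}")
```

With the same single trunk mode but no shift, `deeponet` returns a pulse fixed at the origin for every `u`; a fixed basis would need many modes to follow the moving center, which is the kind of inefficiency with symmetries that the pre-transformation is meant to remove.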