Shree K. Nayar

Hierarchical Material Recognition from Local Appearance

May 28, 2025

Abstract: We introduce a taxonomy of materials for hierarchical recognition from local appearance. Our taxonomy is motivated by vision applications and is arranged according to the physical traits of materials. We contribute a diverse, in-the-wild dataset with images and depth maps of the taxonomy classes. Utilizing the taxonomy and dataset, we present a method for hierarchical material recognition based on graph attention networks. Our model leverages the taxonomic proximity between classes and achieves state-of-the-art performance. We demonstrate the model's potential to generalize to adverse, real-world imaging conditions, and that novel views rendered using the depth maps can enhance this capability. Finally, we show the model's capacity to rapidly learn new materials in a few-shot learning setting.

Cricket: A Self-Powered Chirping Pixel

May 04, 2025

Abstract: We present a sensor that can measure light and wirelessly communicate the measurement, without the need for an external power source or a battery. Our sensor, called cricket, harvests energy from incident light. It is asleep for most of the time and transmits a short and strong radio frequency chirp when its harvested energy reaches a specific level. The carrier frequency of each cricket is fixed and reveals its identity, and the duration between consecutive chirps is a measure of the incident light level. We have characterized the radiometric response function, signal-to-noise ratio and dynamic range of cricket. We have experimentally verified that cricket can be miniaturized at the expense of increasing the duration between chirps. We show that a cube with a cricket on each of its sides can be used to estimate the centroid of any complex illumination, which has value in applications such as solar tracking. We also demonstrate the use of crickets for creating untethered sensor arrays that can produce video and control lighting for energy conservation. Finally, we modified cricket's circuit to develop battery-free electronic sunglasses that can instantly adapt to environmental illumination.

* 13 pages, 18 figures. Project page: https://cave.cs.columbia.edu/projects/categories/project?cid=Computational%20Imaging&pid=Cricket%20A%20Self-Powered%20Chirping%20Pixel
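
The abstract above states that the time between consecutive chirps measures the incident light level. A minimal sketch of that idea follows, assuming a simple linear integrate-and-fire model in which harvested energy grows in proportion to light and a chirp fires at a fixed threshold. The constants `THRESHOLD_J` and `GAIN_J_PER_S` and both function names are hypothetical, and the sensor's actual radiometric response is characterized in the paper, not here.

```python
# Toy integrate-and-fire model of a light-powered chirping sensor.
# Assumption: harvested power is linear in light level (not from the paper).
THRESHOLD_J = 1e-3    # hypothetical energy needed to emit one chirp
GAIN_J_PER_S = 1e-4   # hypothetical harvested power per unit light level


def simulate_chirps(light_level, duration_s, dt=1e-3):
    """Return chirp timestamps (seconds) for a constant light level."""
    energy = 0.0
    chirps = []
    for step in range(1, int(round(duration_s / dt)) + 1):
        energy += GAIN_J_PER_S * light_level * dt
        if energy >= THRESHOLD_J:
            chirps.append(step * dt)
            energy -= THRESHOLD_J  # keep the remainder so intervals stay unbiased
    return chirps


def estimate_light(chirps):
    """Invert the model: light level is inversely proportional to the mean interval."""
    if len(chirps) < 2:
        raise ValueError("need at least two chirps to measure an interval")
    intervals = [b - a for a, b in zip(chirps, chirps[1:])]
    mean_interval = sum(intervals) / len(intervals)
    return THRESHOLD_J / (GAIN_J_PER_S * mean_interval)


if __name__ == "__main__":
    times = simulate_chirps(light_level=2.0, duration_s=60.0)
    print(len(times), "chirps, estimated light level:", estimate_light(times))
```

Under this assumed model, doubling the light level halves the interval between chirps, so a receiver only needs the chirp timestamps to recover relative brightness.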

Minimal Sensing for Orienting a Solar Panel

Apr 14, 2025

Abstract: A solar panel harvests the most energy when pointing in the direction that maximizes the total illumination (irradiance) falling on it. Given an arbitrary orientation of a panel and an arbitrary environmental illumination, we address the problem of finding the direction of maximum total irradiance. We develop a minimal sensing approach where measurements from just four photodetectors are used to iteratively vary the tilt of the panel to maximize the irradiance. Many environments produce irradiance functions with multiple local maxima. As a result, simply measuring the gradient of the irradiance function and applying gradient ascent will not work. We show that a larger, optimized tilt between the detectors and the panel is equivalent to blurring the irradiance function. This has the effect of eliminating local maxima and turning the irradiance function into a unimodal one, whose maximum can be found using gradient ascent. We show that there is a close relationship between our approach and scale space theory. We have collected a large dataset of high-dynamic range lighting environments in New York City, called UrbanSky. We used this dataset to conduct simulations to verify the robustness of our approach. Finally, we have built a portable solar panel with four compact detectors and an actuator to conduct experiments in various real-world settings: direct sunlight, cloudy sky, urban settings with occlusions and shadows, and complex indoor lighting. In all cases, we show significant improvements in harvested energy compared to standard approaches for controlling the orientation of a solar panel.
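
The claim that blurring removes local maxima so that gradient ascent succeeds can be illustrated with a one-dimensional toy. The sketch below is not the paper's method: the irradiance curve, the Gaussian blur width, the learning rate, and all function names are assumptions chosen for illustration, and the blur is computed numerically here instead of arising from a physical tilt between detectors and panel.

```python
import math


def irradiance(theta):
    """Toy irradiance vs. tilt angle in degrees: a broad global lobe near +30
    plus a narrow local bump near -50 (both invented for this example)."""
    broad = math.exp(-((theta - 30.0) / 40.0) ** 2)
    bump = 0.3 * math.exp(-((theta + 50.0) / 8.0) ** 2)
    return broad + bump


def blurred(f, sigma=30.0, half_width=90):
    """Return a function that is the Gaussian-weighted average of f."""
    offsets = range(-half_width, half_width + 1)
    weights = [math.exp(-0.5 * (o / sigma) ** 2) for o in offsets]
    norm = sum(weights)

    def g(theta):
        return sum(w * f(theta + o) for w, o in zip(weights, offsets)) / norm

    return g


def gradient_ascent(f, theta0, lr=50.0, iters=1000, eps=0.5):
    """Plain gradient ascent using a central finite-difference gradient."""
    theta = theta0
    for _ in range(iters):
        grad = (f(theta + eps) - f(theta - eps)) / (2.0 * eps)
        theta += lr * grad
    return theta


if __name__ == "__main__":
    start = -55.0
    print("raw function:    ", gradient_ascent(irradiance, start))
    print("blurred function:", gradient_ascent(blurred(irradiance), start))
```

Starting at the same tilt, ascent on the raw curve stalls on the narrow bump, while ascent on the blurred curve climbs to the neighborhood of the global peak. In this toy the blur width trades off unimodality against how precisely the peak is localized, which is the scale-space flavor the abstract alludes to.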

Minimalist Vision with Freeform Pixels

Dec 30, 2024

Abstract: A minimalist vision system uses the smallest number of pixels needed to solve a vision task. While traditional cameras use a large grid of square pixels, a minimalist camera uses freeform pixels that can take on arbitrary shapes to increase their information content. We show that the hardware of a minimalist camera can be modeled as the first layer of a neural network, where the subsequent layers are used for inference. Training the network for any given task yields the shapes of the camera's freeform pixels, each of which is implemented using a photodetector and an optical mask. We have designed minimalist cameras for monitoring indoor spaces (with 8 pixels), measuring room lighting (with 8 pixels), and estimating traffic flow (with 8 pixels). The performance demonstrated by these systems is on par with a traditional camera with orders of magnitude more pixels. Minimalist vision has two major advantages. First, it naturally tends to preserve the privacy of individuals in the scene since the captured information is inadequate for extracting visual details. Second, since the number of measurements made by a minimalist camera is very small, we show that it can be fully self-powered, i.e., function without an external power supply or a battery.

* Project page: https://cave.cs.columbia.edu/projects/categories/project?cid=Computational+Imaging&pid=Minimalist+Vision+with+Freeform+Pixels, published at ECCV 2024
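
To make the "first layer of a neural network" description concrete, the sketch below treats each freeform pixel as one weighted sum of scene intensities under its mask. It is an illustration under stated assumptions only: the masks are hand-made here (in the paper they result from training), and `measure` and `brighter_region` are hypothetical names standing in for the optical layer and a trivial inference step.

```python
def measure(image, masks):
    """Simulate freeform pixels: each pixel outputs the sum of image
    intensities weighted by its mask (values between 0 and 1)."""
    readings = []
    for mask in masks:
        total = 0.0
        for mask_row, image_row in zip(mask, image):
            for m, v in zip(mask_row, image_row):
                total += m * v
        readings.append(total)
    return readings


def brighter_region(readings):
    """Toy inference step: index of the freeform pixel that saw the most light."""
    return max(range(len(readings)), key=lambda k: readings[k])


if __name__ == "__main__":
    # A 2x4 scene and two hand-made masks covering its left and right halves.
    scene = [[1, 2, 3, 4],
             [5, 6, 7, 8]]
    left = [[1, 1, 0, 0],
            [1, 1, 0, 0]]
    right = [[0, 0, 1, 1],
             [0, 0, 1, 1]]
    readings = measure(scene, [left, right])
    print(readings, "brighter region index:", brighter_region(readings))
```

Because the whole scene is reduced to one number per mask, fine visual detail is never recorded, which matches the privacy argument made in the abstract.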

AirCode: Unobtrusive Physical Tags for Digital Fabrication

Aug 07, 2017

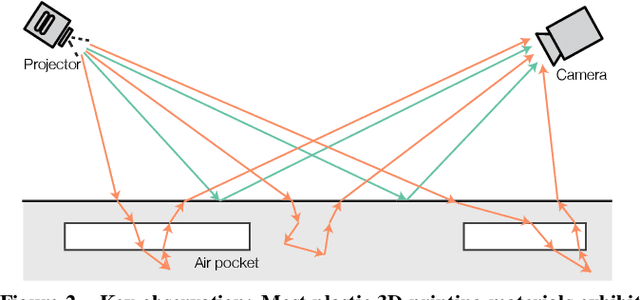

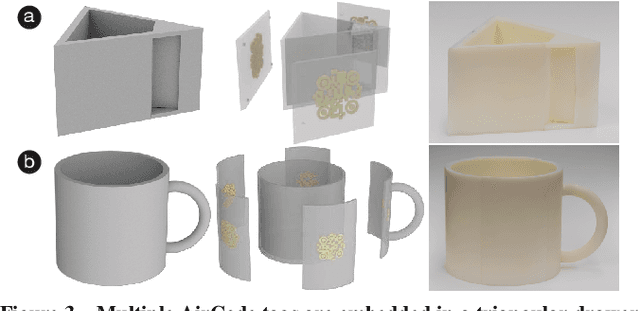

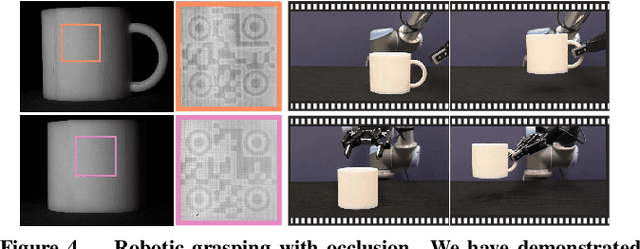

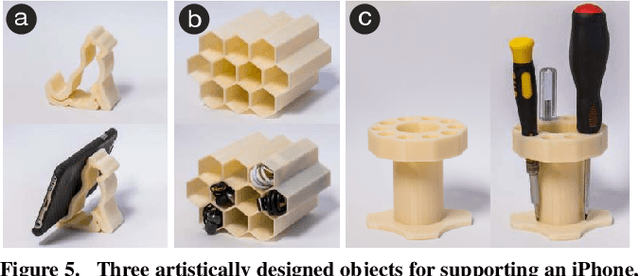

Abstract: We present AirCode, a technique that allows the user to tag physically fabricated objects with given information. An AirCode tag consists of a group of carefully designed air pockets placed beneath the object surface. These air pockets are easily produced during the fabrication process of the object, without any additional material or postprocessing. Meanwhile, the air pockets affect only the scattering light transport under the surface, and thus are hard to notice with the naked eye. However, by using a computational imaging method, the tags become detectable. We present a tool that automates the design of air pockets for the user to encode information. The AirCode system also allows the user to retrieve the information from captured images via a robust decoding algorithm. We demonstrate our tagging technique with applications for metadata embedding, robotic grasping, and conveying object affordances.
