Shailja Gupta

Upgrade or Switch: Do We Need a New Registry Architecture for the Internet of AI Agents?

Jun 13, 2025

Abstract:The emerging Internet of AI Agents challenges existing web infrastructure designed for human-scale, reactive interactions. Unlike traditional web resources, autonomous AI agents initiate actions, maintain persistent state, spawn sub-agents, and negotiate directly with peers: demanding millisecond-level discovery, instant credential revocation, and cryptographic behavioral proofs that exceed current DNS/PKI capabilities. This paper analyzes whether to upgrade existing infrastructure or implement purpose-built registry architectures for autonomous agents. We identify critical failure points: DNS propagation (24-48 hours vs. required milliseconds), certificate revocation unable to scale to trillions of entities, and IPv4/IPv6 addressing inadequate for agent-scale routing. We evaluate three approaches: (1) Upgrade paths, (2) Switch options, (3) Hybrid registries. Drawing parallels to dialup-to-broadband transitions, we find that agent requirements constitute qualitative, and not incremental, changes. While upgrades offer compatibility and faster deployment, clean-slate solutions provide better performance but require longer for adoption. Our analysis suggests hybrid approaches will emerge, with centralized registries for critical agents and federated meshes for specialized use cases.

LOKA Protocol: A Decentralized Framework for Trustworthy and Ethical AI Agent Ecosystems

Apr 15, 2025

Abstract:The rise of autonomous AI agents, capable of perceiving, reasoning, and acting independently, signals a profound shift in how digital ecosystems operate, govern, and evolve. As these agents proliferate beyond centralized infrastructures, they expose foundational gaps in identity, accountability, and ethical alignment. Three critical questions emerge: Identity: Who or what is the agent? Accountability: Can its actions be verified, audited, and trusted? Ethical Consensus: Can autonomous systems reliably align with human values and prevent harmful emergent behaviors? We present the novel LOKA Protocol (Layered Orchestration for Knowledgeful Agents), a unified, systems-level architecture for building ethically governed, interoperable AI agent ecosystems. LOKA introduces a proposed Universal Agent Identity Layer (UAIL) for decentralized, verifiable identity; intent-centric communication protocols for semantic coordination across diverse agents; and a Decentralized Ethical Consensus Protocol (DECP) that enables agents to make context-aware decisions grounded in shared ethical baselines. Anchored in emerging standards such as Decentralized Identifiers (DIDs), Verifiable Credentials (VCs), and post-quantum cryptography, LOKA offers a scalable, future-resilient blueprint for multi-agent AI governance. By embedding identity, trust, and ethics into the protocol layer itself, LOKA establishes the foundation for a new era of responsible, transparent, and autonomous AI ecosystems operating across digital and physical domains.

Fairness in Multi-Agent AI: A Unified Framework for Ethical and Equitable Autonomous Systems

Feb 11, 2025

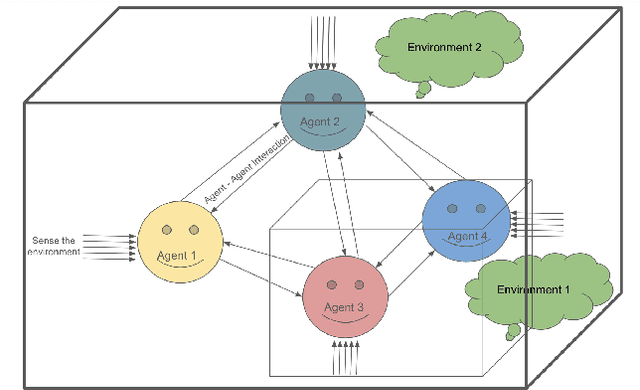

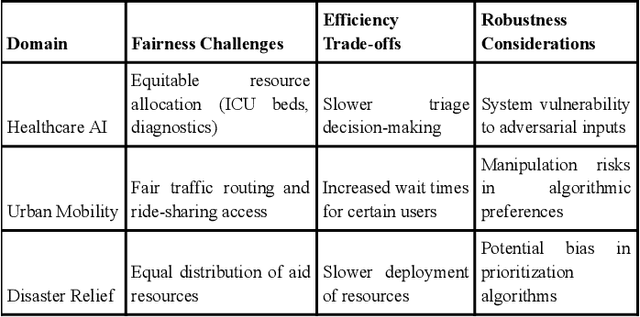

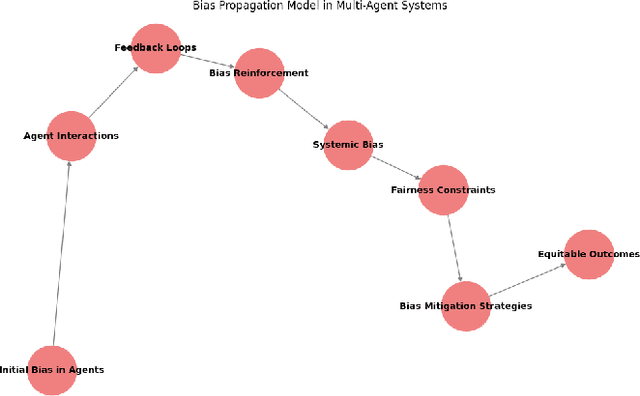

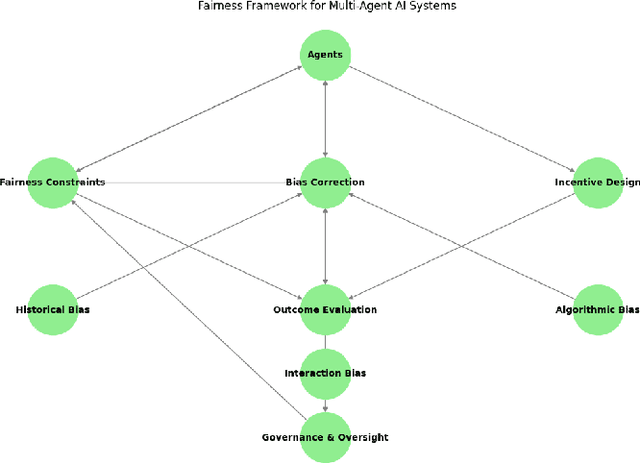

Abstract:Ensuring fairness in decentralized multi-agent systems presents significant challenges due to emergent biases, systemic inefficiencies, and conflicting agent incentives. This paper provides a comprehensive survey of fairness in multi-agent AI, introducing a novel framework where fairness is treated as a dynamic, emergent property of agent interactions. The framework integrates fairness constraints, bias mitigation strategies, and incentive mechanisms to align autonomous agent behaviors with societal values while balancing efficiency and robustness. Through empirical validation, we demonstrate that incorporating fairness constraints results in more equitable decision-making. This work bridges the gap between AI ethics and system design, offering a foundation for accountable, transparent, and socially responsible multi-agent AI systems.

Comprehensive Framework for Evaluating Conversational AI Chatbots

Feb 10, 2025

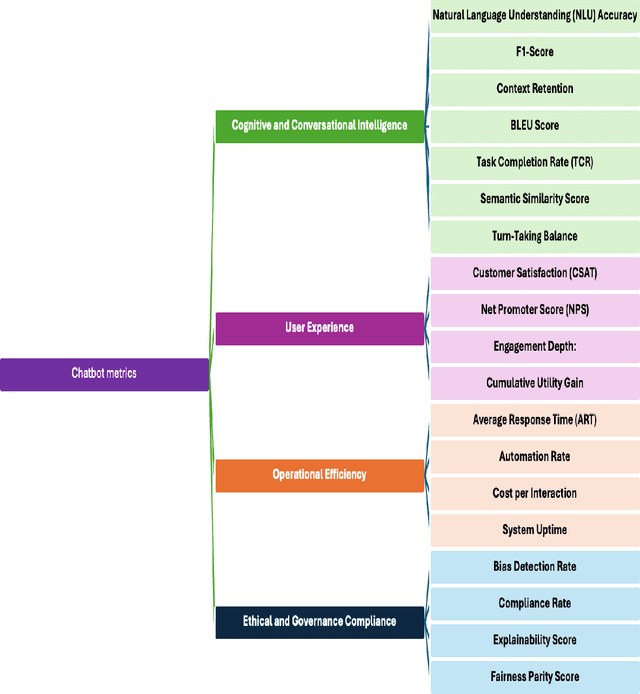

Abstract:Conversational AI chatbots are transforming industries by streamlining customer service, automating transactions, and enhancing user engagement. However, evaluating these systems remains a challenge, particularly in financial services, where compliance, user trust, and operational efficiency are critical. This paper introduces a novel evaluation framework that systematically assesses chatbots across four dimensions: cognitive and conversational intelligence, user experience, operational efficiency, and ethical and regulatory compliance. By integrating advanced AI methodologies with financial regulations, the framework bridges theoretical foundations and real-world deployment challenges. Additionally, we outline future research directions, emphasizing improvements in conversational coherence, real-time adaptability, and fairness.

A Comprehensive Survey of Retrieval-Augmented Generation (RAG): Evolution, Current Landscape and Future Directions

Oct 03, 2024

Abstract:This paper presents a comprehensive study of Retrieval-Augmented Generation (RAG), tracing its evolution from foundational concepts to the current state of the art. RAG combines retrieval mechanisms with generative language models to enhance the accuracy of outputs, addressing key limitations of LLMs. The study explores the basic architecture of RAG, focusing on how retrieval and generation are integrated to handle knowledge-intensive tasks. A detailed review of the significant technological advancements in RAG is provided, including key innovations in retrieval-augmented language models and applications across various domains such as question-answering, summarization, and knowledge-based tasks. Recent research breakthroughs are discussed, highlighting novel methods for improving retrieval efficiency. Furthermore, the paper examines ongoing challenges such as scalability, bias, and ethical concerns in deployment. Future research directions are proposed, focusing on improving the robustness of RAG models, expanding the scope of application of RAG models, and addressing societal implications. This survey aims to serve as a foundational resource for researchers and practitioners in understanding the potential of RAG and its trajectory in natural language processing.

A Comprehensive Survey of Bias in LLMs: Current Landscape and Future Directions

Sep 24, 2024

Abstract:Large Language Models (LLMs) have revolutionized various applications in natural language processing (NLP) by providing unprecedented text generation, translation, and comprehension capabilities. However, their widespread deployment has brought to light significant concerns regarding biases embedded within these models. This paper presents a comprehensive survey of biases in LLMs, aiming to provide an extensive review of the types, sources, impacts, and mitigation strategies related to these biases. We systematically categorize biases into several dimensions. Our survey synthesizes current research findings and discusses the implications of biases in real-world applications. Additionally, we critically assess existing bias mitigation techniques and propose future research directions to enhance fairness and equity in LLMs. This survey serves as a foundational resource for researchers, practitioners, and policymakers concerned with addressing and understanding biases in LLMs.

Comprehensive Study on Sentiment Analysis: From Rule-based to modern LLM based system

Sep 16, 2024

Abstract:This paper provides a comprehensive survey of sentiment analysis within the context of artificial intelligence (AI) and large language models (LLMs). Sentiment analysis, a critical aspect of natural language processing (NLP), has evolved significantly from traditional rule-based methods to advanced deep learning techniques. This study examines the historical development of sentiment analysis, highlighting the transition from lexicon-based and pattern-based approaches to more sophisticated machine learning and deep learning models. Key challenges are discussed, including handling bilingual texts, detecting sarcasm, and addressing biases. The paper reviews state-of-the-art approaches, identifies emerging trends, and outlines future research directions to advance the field. By synthesizing current methodologies and exploring future opportunities, this survey aims to provide a thorough understanding of sentiment analysis in the AI and LLM context.
