Sayeef Salahuddin

Efficient Optimization Accelerator Framework for Multistate Ising Problems

May 26, 2025

Abstract: Ising Machines are a prominent class of hardware architectures that aim to solve NP-hard combinatorial optimization problems. These machines consist of a network of interacting binary spins/neurons that evolve to represent the optimum ground state energy solution. Generally, combinatorial problems are transformed into quadratic unconstrained binary optimization (QUBO) form to harness the computational efficiency of these Ising machines. However, this transformation, especially for multi-state problems, often leads to a more complex exploration landscape than the original problem, thus severely impacting the solution quality. To address this challenge, we model the spin interactions as a generalized boolean logic function to significantly reduce the exploration space. We benchmark the graph coloring problem from the class of multi-state NP-hard optimization using probabilistic Ising solvers to illustrate the effectiveness of our framework. The proposed methodology achieves similar accuracy compared to state-of-the-art heuristics and machine learning algorithms, and demonstrates significant improvement over the existing Ising methods. Additionally, we demonstrate that combining parallel tempering with our existing framework further reduces the coloring error by up to 50% compared to the conventionally used Gibbs sampling algorithm. We also design a 1024-neuron all-to-all connected probabilistic Ising accelerator that shows up to 10000x performance acceleration compared to heuristics while reducing the number of required physical neurons by 1.5-4x compared to conventional Ising machines. Indeed, this accelerator solution demonstrates improvement across all metrics over the current methods, i.e., energy, performance, area, and solution quality. Thus, this work expands the potential of existing Ising hardware to solve a broad class of these multistate optimization problems.
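As a rough illustration of the baseline this abstract mentions, the following is a minimal sketch of Gibbs sampling applied to graph coloring, where each node is sampled directly as a multistate variable and the energy is the number of conflicting edges. This is not the paper's Boolean-logic formulation or its accelerator design; the function names, the inverse temperature `beta`, and the sweep count are all assumptions chosen for the example.

```python
import math
import random


def count_conflicts(edges, colors):
    """Energy of a coloring: number of edges whose endpoints share a color."""
    return sum(1 for u, v in edges if colors[u] == colors[v])


def gibbs_coloring(num_nodes, edges, num_colors, sweeps=200, beta=2.0, seed=0):
    """Hypothetical helper: Gibbs-sample node colors, return the best coloring seen."""
    rng = random.Random(seed)

    # Adjacency lists so each update only looks at a node's neighbors.
    neighbors = [[] for _ in range(num_nodes)]
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)

    colors = [rng.randrange(num_colors) for _ in range(num_nodes)]
    best, best_energy = list(colors), count_conflicts(edges, colors)

    for _ in range(sweeps):
        for node in range(num_nodes):
            # Local energy of each candidate color: neighbors already using it.
            local = [0] * num_colors
            for nb in neighbors[node]:
                local[colors[nb]] += 1
            # Sample the new color from the conditional Boltzmann distribution.
            weights = [math.exp(-beta * e) for e in local]
            colors[node] = rng.choices(range(num_colors), weights=weights)[0]

        energy = count_conflicts(edges, colors)
        if energy < best_energy:
            best, best_energy = list(colors), energy
        if best_energy == 0:
            break  # proper coloring found

    return best, best_energy


if __name__ == "__main__":
    # A 5-cycle, which needs 3 colors for a proper coloring.
    cycle = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
    coloring, conflicts = gibbs_coloring(5, cycle, num_colors=3)
    print(coloring, conflicts)
```

A one-hot QUBO encoding of the same problem would instead use one binary spin per (node, color) pair plus penalty terms to enforce exactly one color per node; the sketch above sidesteps that encoding only to keep the example short.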

Combining Learned Representations for Combinatorial Optimization

Sep 09, 2019

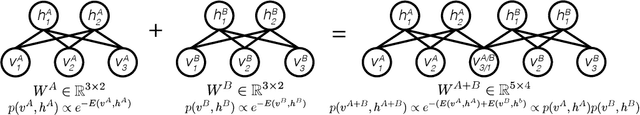

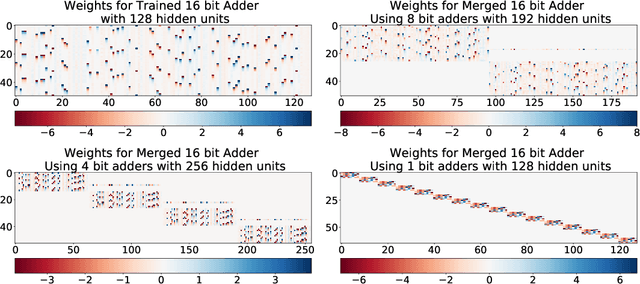

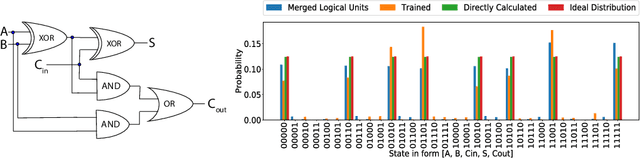

Abstract: We propose a new approach to combine Restricted Boltzmann Machines (RBMs) that can be used to solve combinatorial optimization problems. This allows synthesis of larger models from smaller RBMs that have been pretrained, thus effectively bypassing the problem of learning in large RBMs, and creating a system able to model a large, complex multi-modal space. We validate this approach by using learned representations to create "invertible boolean logic", where we can use Markov chain Monte Carlo (MCMC) approaches to find the solution to large-scale boolean satisfiability problems and show viability towards other combinatorial optimization problems. Using this method, we are able to solve 64-bit addition-based problems, as well as factorize 16-bit numbers. We find that these combined representations can provide a more accurate result for the same sample size as compared to a fully trained model.
