Sarah Alotaibi

A Smart Healthcare System for Monkeypox Skin Lesion Detection and Tracking

May 25, 2025

Abstract: Monkeypox is a viral disease characterized by distinctive skin lesions and has been reported in many countries. The recent global outbreak has emphasized the urgent need for scalable, accessible, and accurate diagnostic solutions to support public health responses. In this study, we developed ITMAINN, an intelligent, AI-driven healthcare system specifically designed to detect Monkeypox from skin lesion images using advanced deep learning techniques. Our system consists of three main components. First, we trained and evaluated several pretrained models using transfer learning on publicly available skin lesion datasets to identify the most effective models. For binary classification (Monkeypox vs. non-Monkeypox), the Vision Transformer, MobileViT, Transformer-in-Transformer, and VGG16 achieved the highest performance, each with an accuracy and F1-score of 97.8%. For multiclass classification, which contains images of patients with Monkeypox and five other classes (chickenpox, measles, hand-foot-mouth disease, cowpox, and healthy), ResNetViT and ViT Hybrid models achieved 92% accuracy, with F1 scores of 92.24% and 92.19%, respectively. The best-performing and most lightweight model, MobileViT, was deployed within the mobile application. The second component is a cross-platform smartphone application that enables users to detect Monkeypox through image analysis, track symptoms, and receive recommendations for nearby healthcare centers based on their location. The third component is a real-time monitoring dashboard designed for health authorities to support them in tracking cases, analyzing symptom trends, guiding public health interventions, and taking proactive measures. This system is fundamental in developing responsive healthcare infrastructure within smart cities. Our solution, ITMAINN, is part of revolutionizing public health management.
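The accuracy and F1-score reported for the binary task are both derived from confusion-matrix counts. The following is a minimal Python sketch of that arithmetic, assuming labels where 1 means Monkeypox and 0 means non-Monkeypox. The function name `binary_metrics` and the toy labels are illustrative assumptions and do not come from the paper, which also does not state how F1 was averaged for the multiclass task.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for a binary classifier.

    Hypothetical helper for illustration; not the paper's evaluation code.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted/present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy labels (made up): 1 = Monkeypox, 0 = non-Monkeypox.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
print(binary_metrics(y_true, y_pred))
```

Accuracy and F1 coincide, as in the reported 97.8% figures, whenever precision and recall both equal the accuracy, which is common on roughly balanced test sets.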

BioFaceNet: Deep Biophysical Face Image Interpretation

Sep 13, 2019

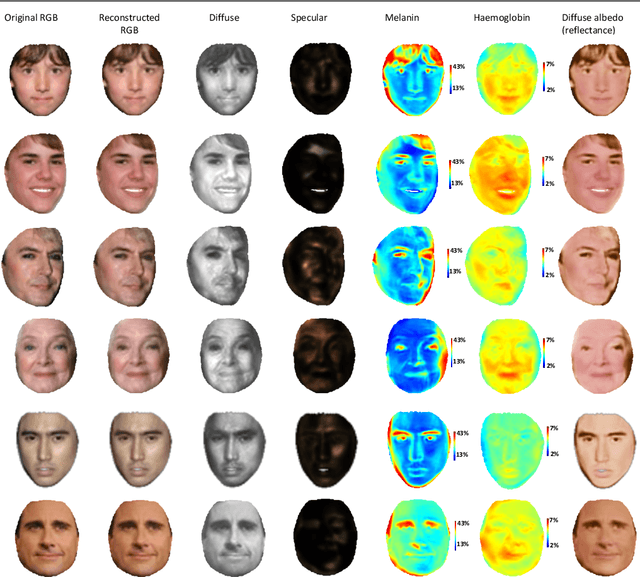

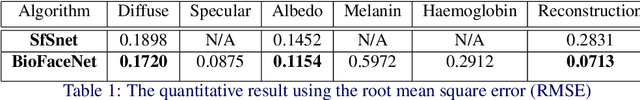

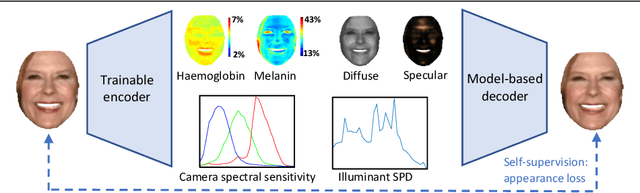

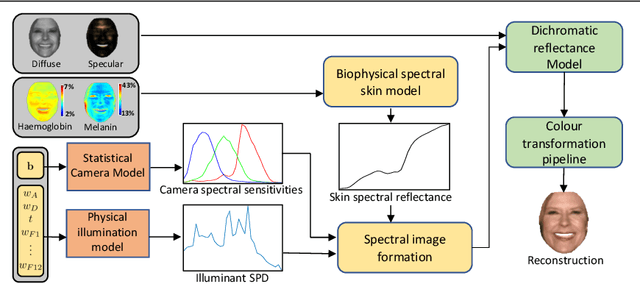

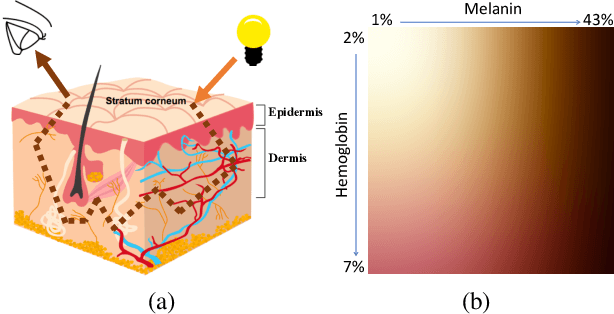

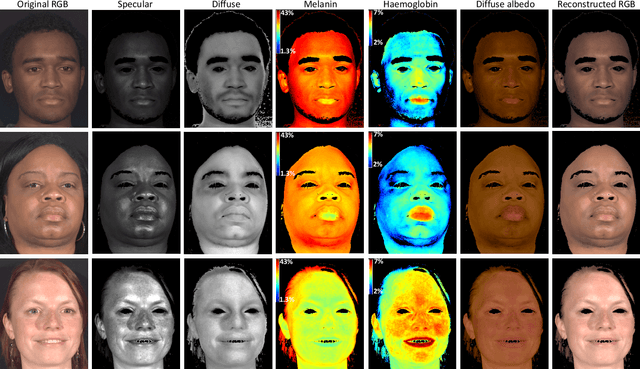

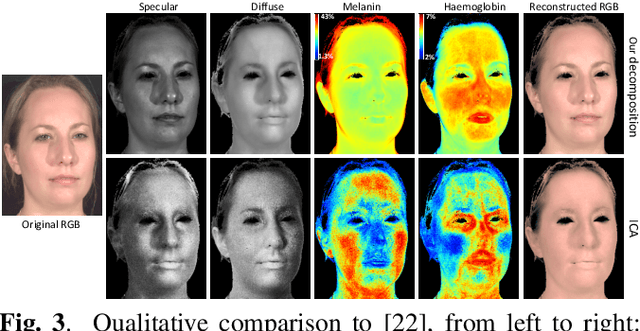

Abstract: In this paper we present BioFaceNet, a deep CNN that learns to decompose a single face image into biophysical parameter maps and diffuse and specular shading maps, while also estimating the spectral power distribution of the scene illuminant and the spectral sensitivity of the camera. The network comprises a fully convolutional encoder for estimating the spatial maps, with a fully connected branch for estimating the vector quantities. The network is trained using a self-supervised appearance loss computed via a model-based decoder. The task is highly underconstrained, so we impose a number of model-based priors: skin spectral reflectance is restricted to a biophysical model, and we impose a statistical prior on camera spectral sensitivities, a physical constraint on illumination spectra, a sparsity prior on specular reflections, and direct supervision on diffuse shading using a rough shape proxy. We show convincing qualitative results on in-the-wild data and introduce a benchmark for quantitative evaluation on this new task.
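To make the idea of a model-based decoder concrete, here is a minimal per-pixel sketch in Python. It uses the standard dichromatic assumption that the specular term reflects the illuminant spectrum unchanged, and it takes the skin reflectance spectrum as a given input rather than computing it from melanin and haemoglobin. The function names, the three-sample spectra, and the mean-squared-error form of the appearance loss are all assumptions for illustration, not the authors' implementation.

```python
def render_pixel(reflectance, illuminant, sensitivities, diffuse, specular):
    """Camera response for one pixel under a generic dichromatic model.

    reflectance, illuminant: spectra sampled at the same wavelengths.
    sensitivities: one spectral sensitivity curve per camera channel.
    diffuse, specular: scalar shading values for this pixel.
    """
    n = len(illuminant)
    if len(reflectance) != n or any(len(s) != n for s in sensitivities):
        raise ValueError("all spectra must share the same wavelength sampling")

    # Radiance per wavelength: illuminant * (diffuse * reflectance + specular).
    radiance = [e * (diffuse * r + specular)
                for e, r in zip(illuminant, reflectance)]
    # Each channel integrates the radiance against its sensitivity curve.
    return [sum(s_k * l for s_k, l in zip(s, radiance)) for s in sensitivities]


def appearance_loss(rendered, observed):
    """Mean squared error between rendered and observed channel values."""
    if len(rendered) != len(observed) or not rendered:
        raise ValueError("inputs must be non-empty and equal length")
    return sum((a - b) ** 2 for a, b in zip(rendered, observed)) / len(rendered)


# Toy values (made up): 3 wavelength samples and 3 idealised narrow-band channels.
illuminant = [1.0, 1.0, 1.0]
reflectance = [0.2, 0.4, 0.6]
sensitivities = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

rgb = render_pixel(reflectance, illuminant, sensitivities,
                   diffuse=0.5, specular=0.1)
print(rgb, appearance_loss(rgb, [0.2, 0.3, 0.4]))
```

Because the rendering step is a fixed, differentiable function of the estimated quantities, comparing its output with the input image gives a training signal without ground-truth decompositions, which is what makes the loss self-supervised.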

Decomposing multispectral face images into diffuse and specular shading and biophysical parameters

Feb 18, 2019

Abstract: We propose a novel biophysical and dichromatic reflectance model that efficiently characterises spectral skin reflectance. We show how to fit the model to multispectral face images, enabling high-quality estimation of diffuse and specular shading as well as biophysical parameter maps (melanin and haemoglobin). Our method works from a single image without requiring complex controlled lighting setups, yet provides quantitatively accurate reconstructions and qualitatively convincing decomposition and editing.
