Sanskar Singh

SARCH: Multimodal Search for Archaeological Archives

Nov 07, 2025

Abstract: In this paper, we describe a multimodal search system for old archaeological books and reports. The corpus is available digitally as scanned PDFs, but scan quality varies widely. Our pipeline, designed for multimodal archaeological documents, extracts and indexes text, images (classified into maps, photos, layouts, and others), and tables. We evaluated several retrieval strategies: keyword-based search, embedding-based models, and a hybrid approach that selects the best results from both retrieval methods. We report and analyze our preliminary results and discuss future work in this domain.
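
The abstract does not say how the hybrid approach picks results from the keyword and embedding rankings. One common way to merge two ranked lists is reciprocal rank fusion (RRF). The minimal Python sketch below uses RRF as a stand-in and should not be read as the authors' method. The document IDs and the constant k = 60 are illustrative assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs with reciprocal rank fusion (RRF).

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k = 60 is an assumed, commonly used default.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical result lists for one query over the scanned archive.
keyword_hits = ["report_12", "map_07", "photo_03"]
embedding_hits = ["map_07", "table_21", "report_12"]

fused = reciprocal_rank_fusion([keyword_hits, embedding_hits])
print(fused)  # ['map_07', 'report_12', 'table_21', 'photo_03']
```

RRF only needs ranks, not raw scores. This makes it convenient when keyword scores (for example, BM25) and embedding similarities live on incomparable scales. Documents found by both methods rise to the top.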

Video Vision Transformers for Violence Detection

Sep 08, 2022

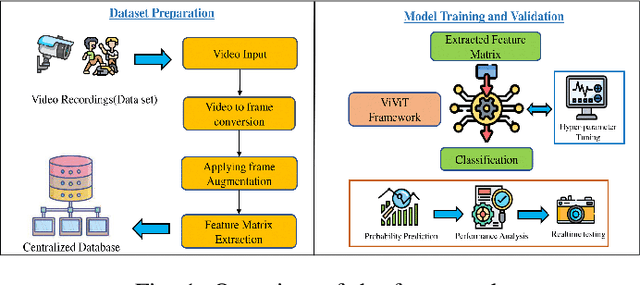

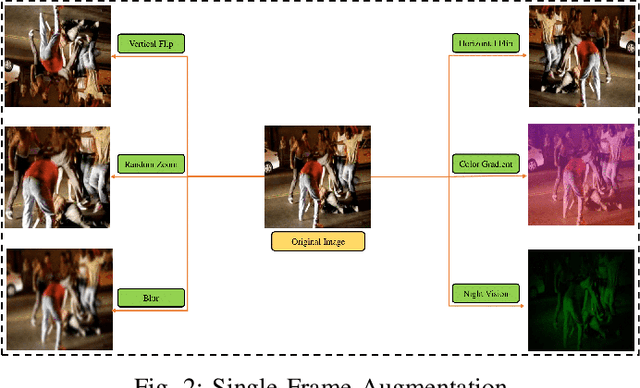

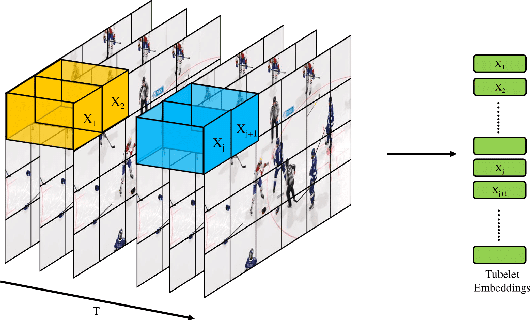

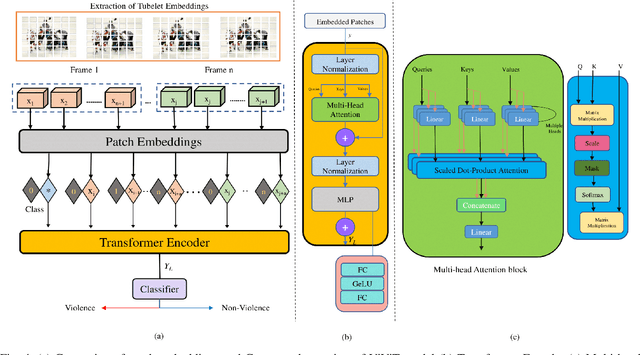

Abstract: Detecting violent incidents in surveillance footage has a significant impact on law enforcement and city safety. Although modern (smart) cameras are widely available and affordable, such technological solutions are ineffective in most instances. Furthermore, personnel monitoring CCTV recordings often react late, potentially causing harm to people and property. Automated violence detection that enables swift action is therefore crucial. The proposed solution uses a novel end-to-end deep learning-based video vision transformer (ViViT) that can effectively discern fights, hostile movements, and violent events in video sequences. The study uses a data augmentation strategy to offset the weak inductive bias of vision transformers when training on small datasets. The detection results can then be sent to the relevant local authorities, and the captured video can be analyzed. Compared with state-of-the-art (SOTA) approaches, the proposed method achieved promising performance on several challenging benchmark datasets.
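
The abstract does not describe how the video vision transformer turns a clip into tokens. The following minimal NumPy sketch shows a ViViT-style tubelet embedding: the clip is cut into non-overlapping spatio-temporal tubes, and each tube is flattened and linearly projected to one token. The clip size (32 frames at 224×224), the 2×16×16 tubelet size, and the embedding width of 768 are illustrative assumptions, not settings reported in this work. The random projection also stands in for learned weights.

```python
import numpy as np


def tubelet_embed(video, proj, t=2, p=16):
    """Sketch of a ViViT-style tubelet embedding.

    video: array of shape (T, H, W, C); proj: array of shape (t*p*p*C, D).
    Splits the video into non-overlapping t x p x p tubelets, flattens each one,
    and linearly projects it to a D-dimensional token.
    Returns tokens of shape ((T//t) * (H//p) * (W//p), D).
    """
    T, H, W, C = video.shape
    if T % t or H % p or W % p:
        raise ValueError("video dimensions must be divisible by the tubelet size")
    # Split each axis into (number of tubelets along the axis, size within a tubelet).
    x = video.reshape(T // t, t, H // p, p, W // p, p, C)
    # Bring the three tubelet-index axes to the front, then flatten each tubelet.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6).reshape(-1, t * p * p * C)
    return x @ proj


rng = np.random.default_rng(0)
video = rng.standard_normal((32, 224, 224, 3)).astype(np.float32)  # assumed clip size
proj = rng.standard_normal((2 * 16 * 16 * 3, 768)).astype(np.float32)  # assumed D = 768
tokens = tubelet_embed(video, proj)
print(tokens.shape)  # (3136, 768)
```

With these assumed sizes the clip yields (32/2)·(224/16)·(224/16) = 16·14·14 = 3136 tokens. Sequences of this length help explain why training such models from scratch on small violence datasets is data-hungry. They also explain why augmentation matters.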
