Salah A. Aly

Detecting Glioma, Meningioma, and Pituitary Tumors, and Normal Brain Tissues based on Yolov11 and Yolov8 Deep Learning Models

Mar 31, 2025

Abstract: Accurate and quick diagnosis of glioma, meningioma, and pituitary tumors, and their separation from normal brain tissue, is crucial for optimal treatment planning and improved medical results. Magnetic Resonance Imaging (MRI) is widely used as a non-invasive diagnostic tool for detecting brain abnormalities, including tumors. However, manual interpretation of MRI scans is often time-consuming, prone to human error, and dependent on highly specialized expertise. This paper proposes an advanced AI-driven technique for detecting glioma, meningioma, and pituitary brain tumors using YoloV11 and YoloV8 deep learning models. Methods: Using a transfer learning-based fine-tuning approach, we integrate cutting-edge deep learning techniques with medical imaging to classify brain MRI scans into four categories: No-Tumor, Glioma, Meningioma, and Pituitary Tumors. Results: The study utilizes the publicly accessible CE-MRI Figshare dataset and fine-tunes the pre-trained YoloV8 and YoloV11 models, which reach accuracies of 99.49% and 99.56%; a customized CNN reaches an accuracy of 96.98%. The results validate the potential of CNNs in achieving high precision in brain tumor detection and classification, highlighting their transformative role in medical imaging and diagnostics.

Revolutionizing Communication with Deep Learning and XAI for Enhanced Arabic Sign Language Recognition

Jan 14, 2025

Abstract: This study introduces an integrated approach to recognizing Arabic Sign Language (ArSL) using state-of-the-art deep learning models such as MobileNetV3, ResNet50, and EfficientNet-B2. These models are further enhanced by explainable AI (XAI) techniques to boost interpretability. The ArSL2018 and RGB Arabic Alphabets Sign Language (AASL) datasets are employed, with EfficientNet-B2 achieving peak accuracies of 99.48% and 98.99%, respectively. Key innovations include sophisticated data augmentation methods to mitigate class imbalance, implementation of stratified 5-fold cross-validation for better generalization, and the use of Grad-CAM for clear model decision transparency. The proposed system not only sets new benchmarks in recognition accuracy but also emphasizes interpretability, making it suitable for applications in healthcare, education, and inclusive communication technologies.
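
The stratified 5-fold cross-validation mentioned in this abstract can be sketched roughly as follows. This is a minimal illustration in plain Python, not the authors' pipeline: the function names, the round-robin assignment, and the toy labels are all assumptions made for the example.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k=5, seed=0):
    """Return k lists of sample indices, each with roughly the same class mix."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        # Deal each class's samples round-robin so every fold gets its share.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


def train_test_splits(folds):
    """Yield (train_indices, test_indices), using each fold once as the test set."""
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


# Hypothetical imbalanced toy labels (not drawn from ArSL2018 or AASL).
labels = ["alif"] * 50 + ["ba"] * 20 + ["ta"] * 10
folds = stratified_k_fold(labels, k=5)
for train, test in train_test_splits(folds):
    print(len(train), len(test))
```

Because each class is split separately, every test fold keeps the class proportions of the full label set, which is the property that makes the per-fold accuracy comparable on imbalanced data.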

Early Diagnoses of Acute Lymphoblastic Leukemia Using YOLOv8 and YOLOv11 Deep Learning Models

Oct 14, 2024

Abstract: Thousands of individuals succumb annually to leukemia alone. This study explores the application of image processing and deep learning techniques for detecting Acute Lymphoblastic Leukemia (ALL), a severe form of blood cancer responsible for numerous annual fatalities. As artificial intelligence technologies advance, the research investigates the reliability of these methods in real-world scenarios. The study focuses on recent developments in ALL detection, particularly using the latest YOLO series models, to distinguish between malignant and benign white blood cells and to identify different stages of ALL, including early stages. Additionally, the models are capable of detecting hematogones, which are often misclassified as ALL. By utilizing advanced deep learning models like YOLOv8 and YOLOv11, the study achieves accuracy rates of up to 98.8%, demonstrating the effectiveness of these algorithms across multiple datasets and various real-world situations.

Diagnosis of Malignant Lymphoma Cancer Using Hybrid Optimized Techniques Based on Dense Neural Networks

Oct 09, 2024

Abstract: Lymphoma diagnosis, particularly distinguishing between subtypes, is critical for effective treatment but remains challenging due to the subtle morphological differences in histopathological images. This study presents a novel hybrid deep learning framework that combines DenseNet201 for feature extraction with a Dense Neural Network (DNN) for classification, optimized using the Harris Hawks Optimization (HHO) algorithm. The model was trained on a dataset of 15,000 biopsy images, spanning three lymphoma subtypes: Chronic Lymphocytic Leukemia (CLL), Follicular Lymphoma (FL), and Mantle Cell Lymphoma (MCL). Our approach achieved a testing accuracy of 99.33%, demonstrating significant improvements in both accuracy and model interpretability. Comprehensive evaluation using precision, recall, F1-score, and ROC-AUC underscores the model's robustness and potential for clinical adoption. This framework offers a scalable solution for improving diagnostic accuracy and efficiency in oncology.
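
For readers unfamiliar with the evaluation metrics named above, the following sketch shows how per-class precision, recall, and F1-score are computed for a three-class problem. The function name and the toy predictions are hypothetical and are not results from the paper; ROC-AUC is omitted because it needs predicted scores rather than hard labels.

```python
def per_class_metrics(y_true, y_pred, classes):
    """Compute one-vs-rest precision, recall, and F1 for each class."""
    metrics = {}
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == c and p == c for t, p in pairs)  # correctly predicted c
        fp = sum(t != c and p == c for t, p in pairs)  # predicted c, was another class
        fn = sum(t == c and p != c for t, p in pairs)  # was c, predicted another class
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics[c] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


# Hypothetical toy predictions, for illustration only.
y_true = ["CLL", "CLL", "FL", "FL", "MCL", "MCL"]
y_pred = ["CLL", "FL", "FL", "FL", "MCL", "CLL"]
metrics = per_class_metrics(y_true, y_pred, ["CLL", "FL", "MCL"])
for name, values in metrics.items():
    print(name, values)
```

Reporting these per class matters when subtypes are easy to confuse, since a high overall accuracy can hide weak recall on one subtype.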

Advanced Arabic Alphabet Sign Language Recognition Using Transfer Learning and Transformer Models

Oct 01, 2024

Abstract: This paper presents an Arabic Alphabet Sign Language recognition approach that uses deep learning methods in conjunction with transfer learning and transformer-based models. We study the performance of the different variants on two publicly available datasets, namely ArSL2018 and AASL. The approach makes full use of state-of-the-art CNN architectures such as ResNet50, MobileNetV2, and EfficientNetB7, and of recent transformer models such as Google ViT and Microsoft Swin Transformer. These pre-trained models are fine-tuned on the above datasets in an attempt to capture unique features of Arabic sign language motions. Experimental results show that the suggested methodology achieves high recognition accuracy, up to 99.6% and 99.43% on ArSL2018 and AASL, respectively, which is well beyond previously reported state-of-the-art approaches. This performance opens up more avenues for accessible communication for Arabic-speaking deaf and hard-of-hearing people, and thus encourages an inclusive society.

* 6 pages, 8 figures

Hybridizing Base-Line 2D-CNN Model with Cat Swarm Optimization for Enhanced Advanced Persistent Threat Detection

Aug 30, 2024

Abstract: In the realm of cyber-security, detecting Advanced Persistent Threats (APTs) remains a formidable challenge due to their stealthy and sophisticated nature. This research paper presents an innovative approach that leverages Convolutional Neural Networks (CNNs) with a 2D baseline model, enhanced by the cutting-edge Cat Swarm Optimization (CSO) algorithm, to significantly improve APT detection accuracy. By seamlessly integrating the 2D-CNN baseline model with CSO, we unlock the potential for unprecedented accuracy and efficiency in APT detection. The results unveil an impressive accuracy score of 98.4%, marking a significant enhancement in APT detection across various attack stages, illuminating a path forward in combating these relentless and sophisticated threats.

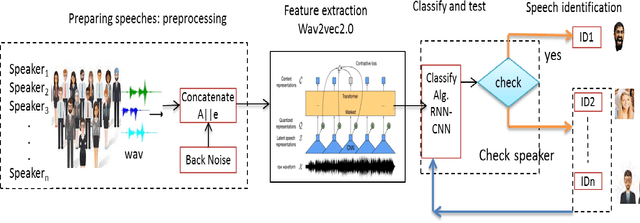

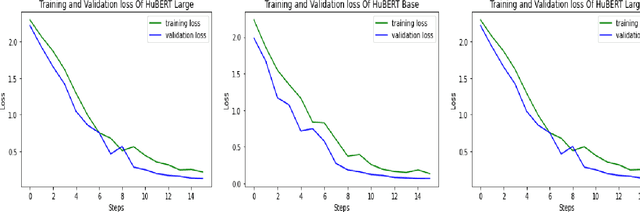

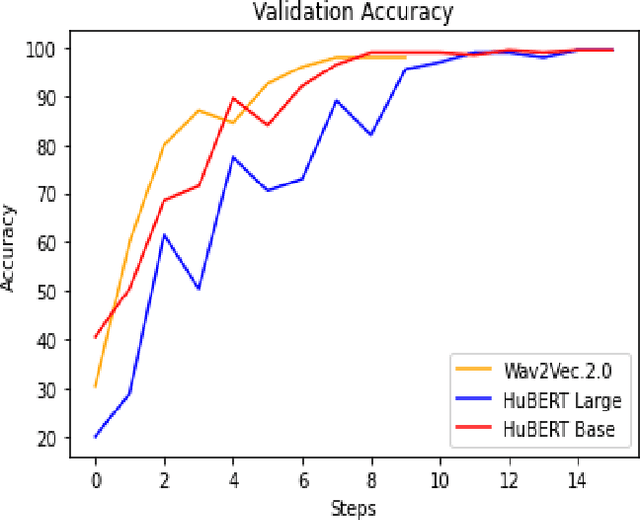

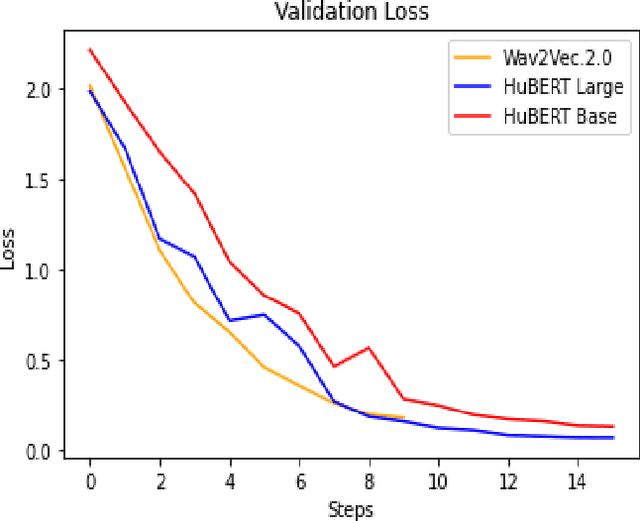

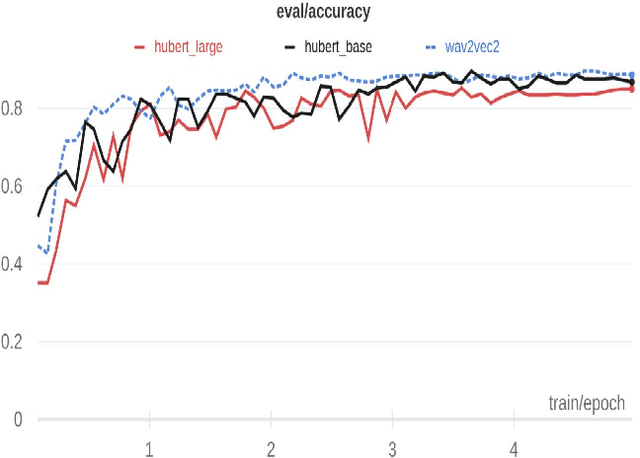

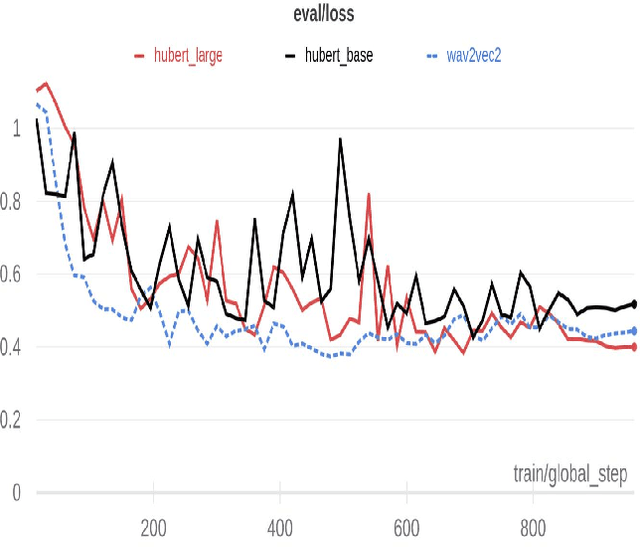

Towards an Efficient Voice Identification Using Wav2Vec2.0 and HuBERT Based on the Quran Reciters Dataset

Nov 11, 2021

Abstract: Current authentication and trusted systems depend on classical and biometric methods to recognize or authorize users. Such methods include speech recognition and eye and finger signatures. Recent tools utilize deep learning and transformers to achieve better results. In this paper, we develop a deep learning model for Arabic speaker identification using the Wav2Vec2.0 and HuBERT audio representation learning tools. The end-to-end Wav2Vec2.0 paradigm learns contextualized speech representations by randomly masking a set of feature vectors and then applying a transformer neural network. We employ an MLP classifier that is able to differentiate between invariant labeled classes. We report several experimental results that confirm the high accuracy of the proposed model. The experiments show that an arbitrary wave signal for a given speaker can be identified with 98% and 97.1% accuracy using Wav2Vec2.0 and HuBERT, respectively.
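
The random masking of feature vectors described above can be illustrated with a small sketch. This is a simplified assumption-laden version, not the Wav2Vec2.0 implementation: each frame independently starts a masked span with probability `start_prob`, the parameter values are illustrative defaults, and a fixed placeholder vector stands in for the learned mask embedding a real model would use.

```python
import random


def sample_mask(num_frames, start_prob=0.065, span=10, seed=0):
    """Return a boolean list marking which frames are masked.

    Each frame starts a masked span of `span` frames with probability
    `start_prob`; spans may overlap and are clipped at the sequence end.
    """
    rng = random.Random(seed)
    mask = [False] * num_frames
    for start in range(num_frames):
        if rng.random() < start_prob:
            for t in range(start, min(start + span, num_frames)):
                mask[t] = True
    return mask


def apply_mask(features, mask, mask_vector):
    """Replace every masked frame's feature vector with mask_vector."""
    return [mask_vector if masked else frame for frame, masked in zip(features, mask)]


# Hypothetical toy input: 50 frames of 4-dimensional features.
features = [[float(t)] * 4 for t in range(50)]
mask_vector = [-1.0] * 4  # placeholder for a learned mask embedding
mask = sample_mask(len(features))
masked_features = apply_mask(features, mask, mask_vector)
print(sum(mask), "of", len(mask), "frames masked")
```

During pre-training, the transformer sees `masked_features` and is trained to recover information about the hidden frames from their context, which is what makes the learned representations contextualized.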

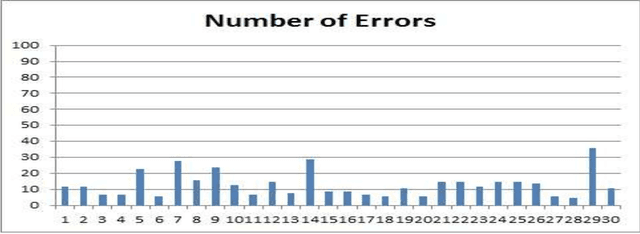

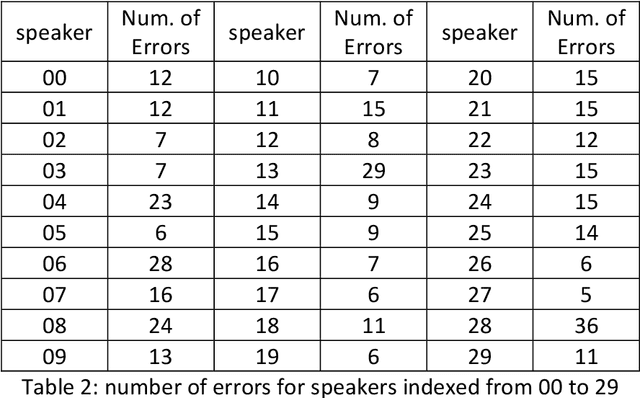

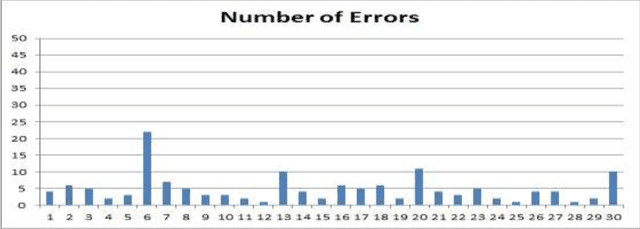

ASMDD: Arabic Speech Mispronunciation Detection Dataset

Nov 01, 2021

Abstract: The largest dataset for Arabic speech mispronunciation detection in Egyptian dialogues is introduced. The dataset is composed of annotated audio files representing the 100 most frequently used words in the Arabic language, pronounced by 100 Egyptian children (aged between 2 and 8 years old). The dataset is collected and annotated for segmental pronunciation errors by expert listeners.

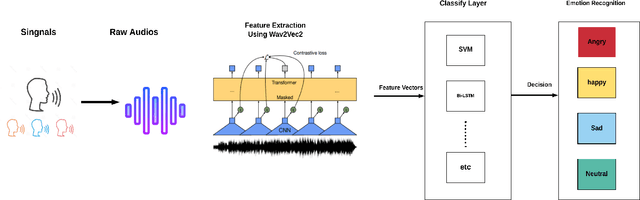

Arabic Speech Emotion Recognition Employing Wav2vec2.0 and HuBERT Based on BAVED Dataset

Oct 09, 2021

Abstract: Recently, there have been tremendous research outcomes in the fields of speech recognition and natural language processing. This is due to well-developed multi-layer deep learning paradigms such as wav2vec2.0, Wav2vecU, WavBERT, and HuBERT, which provide better representation learning and capture more information. Such paradigms are run on hundreds of unlabeled data and then fine-tuned on a small dataset for specific tasks. This paper introduces a deep learning emotion recognition model for Arabic speech dialogues. The developed model employs state-of-the-art audio representations, including wav2vec2.0 and HuBERT. The experimental and performance results of our model surpass previously known outcomes.

Pilgrims Face Recognition Dataset -- HUFRD

Dec 30, 2012

Abstract: In this work, we define a new pilgrims face recognition dataset, called the HUFRD dataset. The newly developed dataset presents various pilgrims' images taken outside the Holy Masjid El-Harram in Makkah during the 2011-2012 Hajj and Umrah seasons. The dataset will be used to test our developed facial recognition and detection algorithms, as well as to assist in the missing and found recognition system \cite{crowdsensing}.
