Ryuma Niiyama

Pneumatic bladder links with wide range of motion joints for articulated inflatable robots

Dec 23, 2025

Abstract: Exploration of various applications is the frontier of research on inflatable robots. We propose an articulated robot consisting of multiple pneumatic bladder links connected by rolling contact joints called Hillberry joints. The bladder link is made of a double-layered structure of tarpaulin sheet and polyurethane sheet, which is both airtight and flexible in shape. The integration of the Hillberry joint into an inflatable robot is also a new approach. The rolling contact joint allows a wide range of motion of $\pm 150^{\circ}$, the largest among conventional inflatable joints. Using the proposed mechanism for inflatable robots, we demonstrated moving a 500 g payload with a 3-DoF arm and lifting 3.4 kg and 5 kg payloads with 2-DoF and 1-DoF arms, respectively. We also experimented with a single 3-DoF inflatable leg attached to a dolly to show that the proposed structure works for legged locomotion.

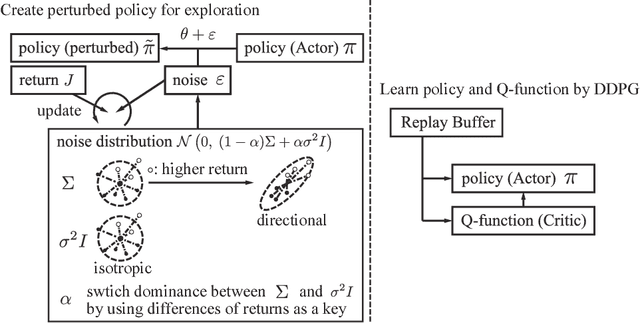

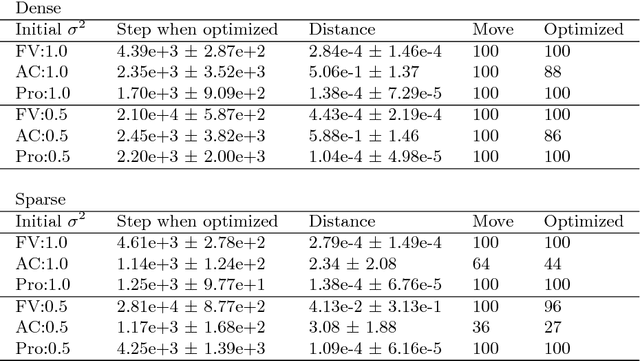

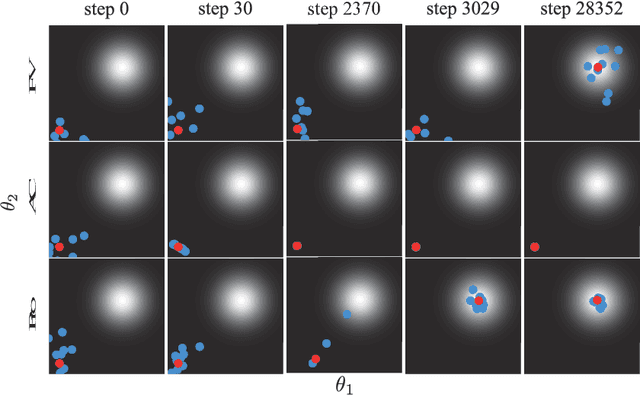

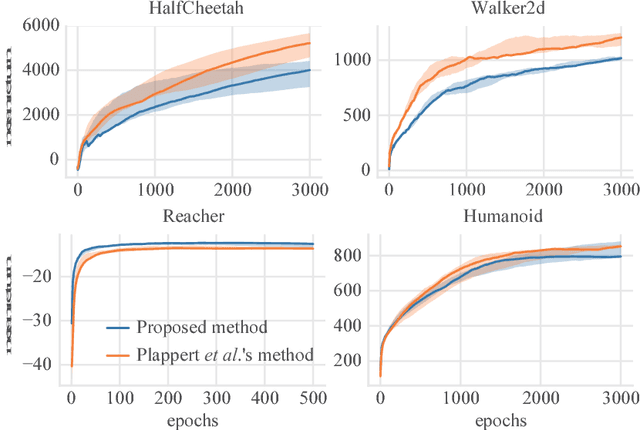

Switching Isotropic and Directional Exploration with Parameter Space Noise in Deep Reinforcement Learning

Sep 27, 2018

Abstract: This paper proposes an exploration method for deep reinforcement learning based on parameter space noise. Recent studies have experimentally shown that parameter space noise results in better exploration than the commonly used action space noise. Previous methods devised a way to update the diagonal covariance matrix of a noise distribution but did not consider the direction of the noise vector or its correlation. In addition, fast updates of the noise distribution are required to facilitate policy learning. We propose a method that deforms the noise distribution according to the accumulated returns and the noises that have led to those returns. Moreover, this method switches between isotropic exploration and directional exploration in parameter space depending on the obtained rewards. We validate our exploration strategy in the OpenAI Gym continuous environments and in modified environments with sparse rewards. The proposed method achieves results that are competitive with a previous method on baseline tasks. Moreover, our approach exhibits better performance in sparse reward environments thanks to exploration with the switching strategy.
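
The abstract does not give the update equations, so the following is only a rough sketch of what switching between isotropic and directional parameter space noise could look like, not the paper's algorithm. Every name here (`return_weighted_direction`, `sample_noise`, `choose_mode`, `stretch`, `threshold`) is hypothetical, and the switching rule (go directional only when the observed returns actually differ) is an assumption chosen for illustration.

```python
import numpy as np

def return_weighted_direction(noises, returns):
    """Unit vector obtained by averaging past noise vectors,
    weighted by their mean-centred returns (assumed heuristic)."""
    noises = np.asarray(noises, dtype=float)
    returns = np.asarray(returns, dtype=float)
    weights = returns - returns.mean()
    direction = weights @ noises
    norm = np.linalg.norm(direction)
    # No usable direction if all returns are equal (e.g. sparse rewards).
    return direction / norm if norm > 0 else None

def sample_noise(rng, dim, sigma, direction=None, stretch=4.0):
    """Sample a parameter perturbation.
    Isotropic: sigma * N(0, I).
    Directional: the same Gaussian, with its standard deviation along
    `direction` scaled by `stretch` (assumed deformation)."""
    z = rng.standard_normal(dim)
    if direction is None:
        return sigma * z
    along = z @ direction
    return sigma * (z + (stretch - 1.0) * along * direction)

def choose_mode(returns, threshold=0.0):
    """Hypothetical switching rule: explore directionally only when
    the spread of observed returns exceeds `threshold`."""
    returns = np.asarray(returns, dtype=float)
    spread = returns.max() - returns.min()
    return "directional" if spread > threshold else "isotropic"
```

In use, one would perturb the policy parameters with `sample_noise`, record the return of each perturbed rollout, and pass a `direction` only when `choose_mode` reports `"directional"`. When rewards are sparse and all returns are equal, the sketch falls back to isotropic sampling.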
