Ryan Tsai

A generative flow for conditional sampling via optimal transport

Jul 09, 2023

Abstract: Sampling conditional distributions is a fundamental task for Bayesian inference and density estimation. Generative models, such as normalizing flows and generative adversarial networks, characterize conditional distributions by learning a transport map that pushes forward a simple reference (e.g., a standard Gaussian) to a target distribution. While these approaches successfully describe many non-Gaussian problems, their performance is often limited by parametric bias and by how reliably gradient-based (adversarial) optimizers can learn these transformations. This work proposes a non-parametric generative model that iteratively maps reference samples to the target. The model uses block-triangular transport maps, whose components are shown to characterize conditionals of the target distribution. These maps arise from solving an optimal transport problem with a weighted $L^2$ cost function, thereby extending the data-driven approach of [Trigila and Tabak, 2016] to conditional sampling. The proposed approach is demonstrated on a two-dimensional example and on a parameter inference problem involving nonlinear ODEs.
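
The key property in this abstract is that components of a block-triangular map characterize conditionals of the target. The sketch below illustrates only that property, under loudly stated assumptions: the target is a hypothetical bivariate Gaussian over (x, y), the reference is a standard Gaussian, and in place of the paper's non-parametric, data-driven flow built from a weighted $L^2$ optimal transport cost it uses the closed-form lower-triangular (Knothe-Rosenblatt) map that exists for Gaussians. Fixing the first component at an observed x and pushing fresh reference samples through the second component should reproduce the conditional distribution of y given x.

```python
import math
import random
from statistics import mean, stdev

# Hypothetical target parameters (illustration only, not from the paper):
# (x, y) jointly Gaussian with means MX, MY, std devs SX, SY, correlation RHO.
MX, MY = 1.0, -2.0
SX, SY = 2.0, 0.5
RHO = 0.8


def triangular_map(z1: float, z2: float) -> tuple[float, float]:
    """Lower-triangular map pushing a standard Gaussian (z1, z2) to the target.

    The first component depends only on z1; the second depends on (z1, z2).
    """
    x = MX + SX * z1
    y = MY + SY * (RHO * z1 + math.sqrt(1.0 - RHO**2) * z2)
    return x, y


def sample_conditional(x_star: float, n: int, rng: random.Random) -> list[float]:
    """Sample y | x = x_star by fixing the first block and varying only z2."""
    z1_star = (x_star - MX) / SX  # invert the first component at x_star
    return [triangular_map(z1_star, rng.gauss(0.0, 1.0))[1] for _ in range(n)]


def exact_conditional(x_star: float) -> tuple[float, float]:
    """Closed-form mean and std dev of y | x = x_star for the Gaussian target."""
    mu = MY + RHO * SY / SX * (x_star - MX)
    sigma = SY * math.sqrt(1.0 - RHO**2)
    return mu, sigma


if __name__ == "__main__":
    rng = random.Random(0)
    x_star = 3.0
    ys = sample_conditional(x_star, 20000, rng)
    print("empirical:", round(mean(ys), 3), round(stdev(ys), 3))
    print("exact:    ", tuple(round(v, 3) for v in exact_conditional(x_star)))
```

With these made-up parameters, the exact conditional at x = 3 has mean -1.6 and standard deviation 0.3, so the empirical estimates should land close to those values. For non-Gaussian targets no such closed form exists, which is where a learned, data-driven map of the kind the abstract describes would come in.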

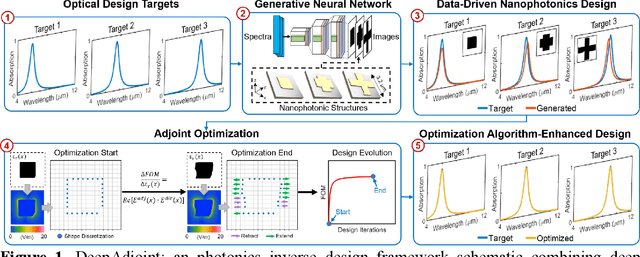

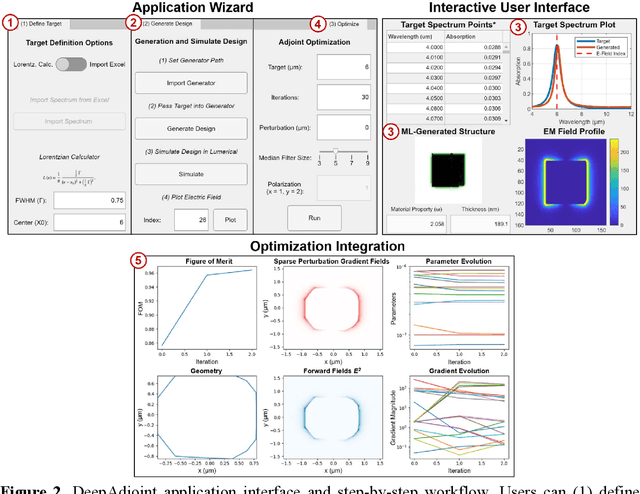

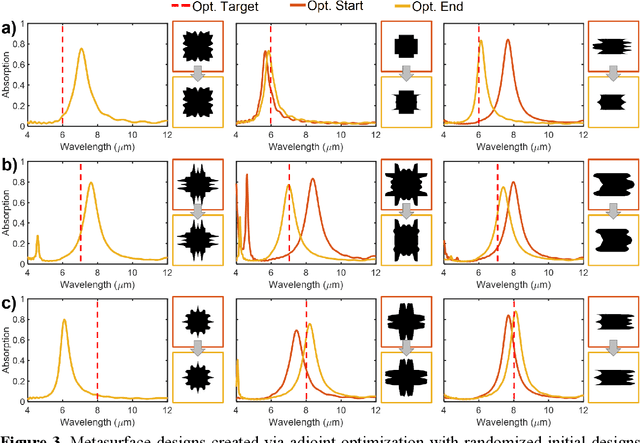

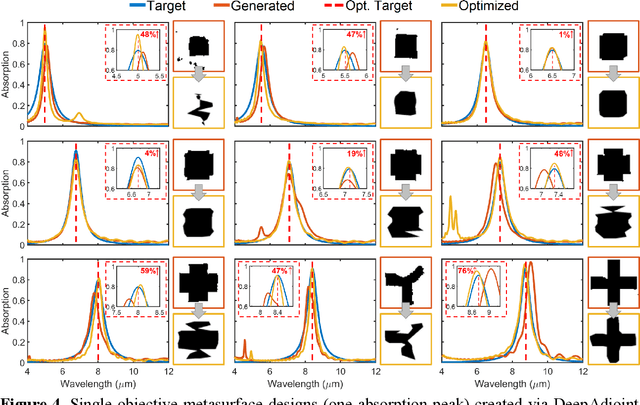

DeepAdjoint: An All-in-One Photonic Inverse Design Framework Integrating Data-Driven Machine Learning with Optimization Algorithms

Sep 28, 2022

Abstract: In recent years, hybrid design strategies that combine machine learning (ML) with electromagnetic optimization algorithms have emerged as a new paradigm for the inverse design of photonic structures and devices. While a trained, data-driven neural network can rapidly identify solutions near the global optimum within a given dataset's design space, an iterative optimization algorithm can further refine the solution and overcome dataset limitations. Furthermore, such hybrid ML-optimization methodologies can reduce computational costs and expedite the discovery of novel electromagnetic components. However, existing hybrid ML-optimization methods have yet to optimize across both materials and geometries in a single integrated and user-friendly environment. In addition, because large datasets for ML are difficult to acquire and the number of isolated models trained for photonics design is growing exponentially, there is a need to standardize the ML-optimization workflow while making pre-trained models easily accessible. Motivated by these challenges, we introduce DeepAdjoint, a general-purpose, open-source, multi-objective "all-in-one" global photonics inverse design application framework that integrates pre-trained deep generative networks with state-of-the-art electromagnetic optimization algorithms such as the adjoint variables method. DeepAdjoint allows a designer to specify an arbitrary optical design target and then obtain a photonic structure that is robust to fabrication tolerances and possesses the desired optical properties, all within a single user-guided application interface. Our framework thus paves a path toward the systematic unification of ML and optimization algorithms for photonic inverse design.
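
The abstract names the adjoint variables method as the optimizer that refines a network's initial design. The sketch below illustrates only that generic gradient technique, not DeepAdjoint's code or interface. Everything in it is hypothetical: a 2x2 system A(p)u = b stands in for a discretized electromagnetic solve, J = ½|u|² is a made-up figure of merit, and the starting value p0 stands in for a network's suggested design. One forward solve plus one adjoint solve $A^T \lambda = \partial J / \partial u$ give $dJ/dp = -\lambda^T (\partial A / \partial p)\, u$, which the example checks against a central finite difference.

```python
# Generic adjoint-gradient sketch; the operator, source, and objective are hypothetical.

def solve2(a, b):
    """Solve the 2x2 linear system a @ u = b by Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    return [(b[0] * a[1][1] - a[0][1] * b[1]) / det,
            (a[0][0] * b[1] - a[1][0] * b[0]) / det]


B = [1.0, 2.0]  # hypothetical source term


def system(p):
    """Hypothetical parameterized operator A(p) and its derivative dA/dp."""
    a = [[2.0 + p, 1.0], [1.0, 3.0 + p * p]]
    da = [[1.0, 0.0], [0.0, 2.0 * p]]
    return a, da


def objective(p):
    """Figure of merit J(p) = 0.5 * |u(p)|^2, where A(p) u = B."""
    a, _ = system(p)
    u = solve2(a, B)
    return 0.5 * (u[0] ** 2 + u[1] ** 2)


def adjoint_gradient(p):
    """dJ/dp from one forward solve and one adjoint solve."""
    a, da = system(p)
    u = solve2(a, B)                                # forward: A u = B
    a_t = [[a[0][0], a[1][0]], [a[0][1], a[1][1]]]  # A^T
    lam = solve2(a_t, u)                            # adjoint: A^T lam = dJ/du = u
    da_u = [da[0][0] * u[0] + da[0][1] * u[1],
            da[1][0] * u[0] + da[1][1] * u[1]]      # (dA/dp) u
    return -(lam[0] * da_u[0] + lam[1] * da_u[1])   # -lam^T (dA/dp) u


def refine(p0, lr=0.5, steps=10):
    """Plain gradient descent from an initial design p0 (e.g. a network's guess)."""
    p = p0
    for _ in range(steps):
        p -= lr * adjoint_gradient(p)
    return p


if __name__ == "__main__":
    p0, h = 0.7, 1e-6
    fd = (objective(p0 + h) - objective(p0 - h)) / (2 * h)
    print("adjoint:", adjoint_gradient(p0), "finite difference:", fd)
    print("J before:", objective(p0), "J after:", objective(refine(p0)))
```

The appeal of the adjoint route is that, for a single objective, the gradient costs one extra linear solve no matter how many design parameters there are, whereas finite differences need a solve per parameter.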