Ryan Brown

Global Maxwell Tomography Using the Volume-Surface Integral Equation for Improved Estimation of Electrical Properties

May 20, 2025

Abstract: Objective: Global Maxwell Tomography (GMT) is a noninvasive inverse optimization method for the estimation of electrical properties (EP) from magnetic resonance (MR) measurements. GMT uses the volume integral equation (VIE) in the forward problem and assumes that the sample has negligible effect on the coil currents. Consequently, GMT calculates the coil's incident fields with an initial EP distribution and keeps them constant for all optimization iterations. This can lead to erroneous reconstructions. This work introduces a novel version of GMT that replaces VIE with the volume-surface integral equation (VSIE), which recalculates the coil currents at every iteration based on updated EP estimates before computing the associated fields. Methods: We simulated an 8-channel transceiver coil array for 7 T brain imaging and reconstructed the EP of a realistic head model using VSIE-based GMT. We built the coil, collected experimental MR measurements, and reconstructed EP of a two-compartment phantom. Results: In simulations, VSIE-based GMT outperformed VIE-based GMT by at least 12% for both EP. In experiments, the relative difference with respect to probe-measured EP values in the inner (outer) compartment was 13% (26%) and 17% (33%) for the permittivity and conductivity, respectively. Conclusion: The use of VSIE over VIE enhances GMT's performance by accounting for the effect of the EP on the coil currents. Significance: VSIE-based GMT does not rely on an initial EP estimate, rendering it more suitable for experimental reconstructions compared to the VIE-based GMT.

A Framework for Variational Inference of Lightweight Bayesian Neural Networks with Heteroscedastic Uncertainties

Feb 22, 2024

Abstract: Obtaining heteroscedastic predictive uncertainties from a Bayesian Neural Network (BNN) is vital to many applications. Often, heteroscedastic aleatoric uncertainties are learned as outputs of the BNN in addition to the predictive means; however, doing so may necessitate adding more learnable parameters to the network. In this work, we demonstrate that both the heteroscedastic aleatoric and epistemic variances can be embedded into the variances of learned BNN parameters, improving predictive performance for lightweight networks. By complementing this approach with a moment propagation approach to inference, we introduce a relatively simple framework for sampling-free variational inference suitable for lightweight BNNs.
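As a rough illustration of what moment propagation means in general (this is not the authors' framework, which the abstract does not detail), the sketch below pushes a mean and variance through one linear layer whose weights and biases are independent Gaussians. The function name `linear_moments` and all inputs are hypothetical, and the sketch assumes that inputs, weights, and biases are mutually independent and ignores covariances between units. For a single product of independent $w$ and $x$, $\mathrm{Var}[wx] = \sigma_w^2(\mu_x^2 + \sigma_x^2) + \mu_w^2\sigma_x^2$, which is summed over inputs.

```python
def linear_moments(x_mean, x_var, w_mean, w_var, b_mean, b_var):
    """Propagate per-unit mean and variance through y = W x + b.

    Assumptions (illustrative only): every weight, bias, and input
    component is independent, so variances add and covariances between
    units are ignored. w_mean and w_var are lists of rows, one row per
    output unit.
    """
    out_mean, out_var = [], []
    for m_row, s2_row, mb, sb2 in zip(w_mean, w_var, b_mean, b_var):
        # E[y_j] = sum_i E[W_ji] E[x_i] + E[b_j]
        mean = mb + sum(m * mu for m, mu in zip(m_row, x_mean))
        # Var[y_j] = sum_i [ s2 * (mu^2 + v) + m^2 * v ] + Var[b_j]
        var = sb2 + sum(
            s2 * (mu * mu + v) + m * m * v
            for m, s2, mu, v in zip(m_row, s2_row, x_mean, x_var)
        )
        out_mean.append(mean)
        out_var.append(var)
    return out_mean, out_var


# Hypothetical one-output layer with a deterministic input (zero input variance).
mean, var = linear_moments(
    x_mean=[1.0, 2.0], x_var=[0.0, 0.0],
    w_mean=[[0.5, -1.0]], w_var=[[0.1, 0.2]],
    b_mean=[0.0], b_var=[0.05],
)
```

Here the output variance depends on the input through the weight variances, so a single deterministic pass yields an input-dependent predictive variance without drawing weight samples. Nonlinear activations would need their own moment approximations, which this sketch omits.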

Uncertainty-based Modulation for Lifelong Learning

Jan 27, 2020

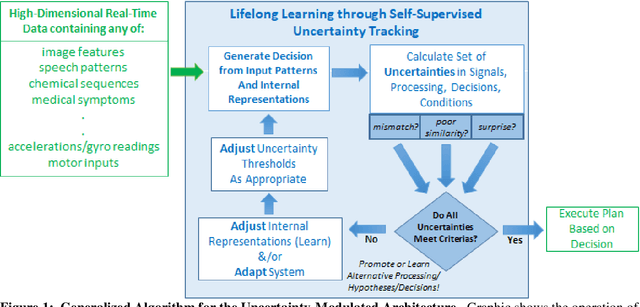

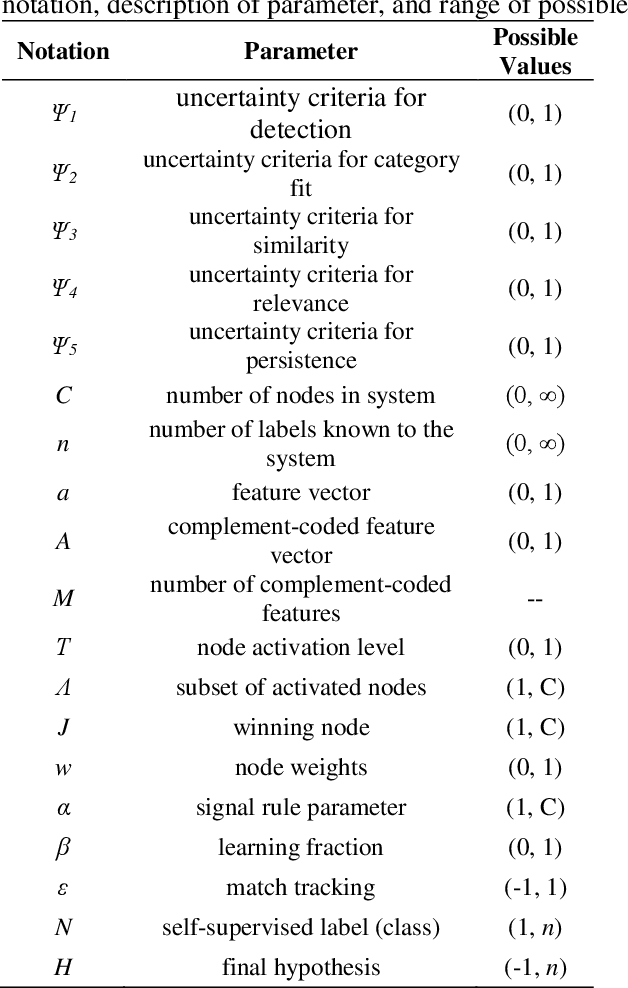

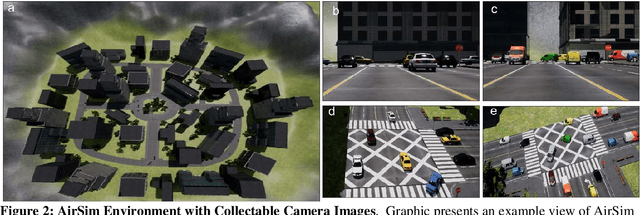

Abstract: The creation of machine learning algorithms for intelligent agents capable of continuous, lifelong learning is a critical objective for algorithms being deployed on real-life systems in dynamic environments. Here we present an algorithm inspired by neuromodulatory mechanisms in the human brain that integrates and expands upon Stephen Grossberg's ground-breaking Adaptive Resonance Theory proposals. Specifically, it builds on the concept of uncertainty, and employs a series of neuromodulatory mechanisms to enable continuous learning, including self-supervised and one-shot learning. Algorithm components were evaluated in a series of benchmark experiments that demonstrate stable learning without catastrophic forgetting. We also demonstrate the critical role of developing these systems in a closed-loop manner where the environment and the agent's behaviors constrain and guide the learning process. To this end, we integrated the algorithm into an embodied simulated drone agent. The experiments show that the algorithm is capable of continuous learning of new tasks and under changed conditions with high classification accuracy (greater than 94 percent) in a virtual environment, without catastrophic forgetting. The algorithm accepts high dimensional inputs from any state-of-the-art detection and feature extraction algorithms, making it a flexible addition to existing systems. We also describe future development efforts focused on imbuing the algorithm with mechanisms to seek out new knowledge as well as employ a broader range of neuromodulatory processes.
