Raul Fernandez Rojas

On the Validity of Head Motion Patterns as Generalisable Depression Biomarkers

May 29, 2025

Abstract: Depression is a debilitating mood disorder negatively impacting millions worldwide. While researchers have explored multiple verbal and non-verbal behavioural cues for automated depression assessment, head motion has received little attention thus far. Further, the common practice of validating machine learning models via a single dataset can limit model generalisability. This work examines the effectiveness and generalisability of models utilising elementary head motion units, termed kinemes, for depression severity estimation. Specifically, we consider three depression datasets from different Western cultures (German: AVEC2013, Australian: Blackdog and American: Pitt datasets) with varied contextual and recording settings to investigate the generalisability of the derived kineme patterns via two methods: (i) k-fold cross-validation over individual/multiple datasets, and (ii) model reuse on other datasets. Evaluating classification and regression performance with classical machine learning methods, our results show that: (1) head motion patterns are efficient biomarkers for estimating depression severity, achieving highly competitive performance for both classification and regression tasks on a variety of datasets, including the second-best Mean Absolute Error (MAE) on the AVEC2013 dataset, and (2) kineme-based features are more generalisable than (a) raw head motion descriptors for binary severity classification, and (b) other visual behavioural cues for severity estimation (regression).
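The two validation protocols named in this abstract, k-fold cross-validation within a dataset and model reuse on another dataset, can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the nearest-centroid classifier, the function names, and the toy two-dimensional features stand in for whatever classical models and kineme features the paper actually uses.

```python
from statistics import mean

def centroid_fit(X, y):
    """Fit a nearest-centroid classifier: one mean feature vector per class."""
    groups = {}
    for features, label in zip(X, y):
        groups.setdefault(label, []).append(features)
    return {label: [mean(col) for col in zip(*rows)] for label, rows in groups.items()}

def centroid_predict(model, X):
    """Assign each sample the label of the closest class centroid."""
    def sq_dist(a, b):
        return sum((p - q) ** 2 for p, q in zip(a, b))
    return [min(model, key=lambda label: sq_dist(model[label], x)) for x in X]

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def k_fold_accuracy(X, y, k):
    """Protocol (i): train and test on disjoint folds of the same dataset."""
    scores = []
    for fold in range(k):
        test_idx = set(range(fold, len(X), k))  # every k-th sample forms a fold
        X_tr = [x for i, x in enumerate(X) if i not in test_idx]
        y_tr = [t for i, t in enumerate(y) if i not in test_idx]
        X_te = [x for i, x in enumerate(X) if i in test_idx]
        y_te = [t for i, t in enumerate(y) if i in test_idx]
        model = centroid_fit(X_tr, y_tr)
        scores.append(accuracy(y_te, centroid_predict(model, X_te)))
    return mean(scores)

def reuse_accuracy(X_src, y_src, X_tgt, y_tgt):
    """Protocol (ii): fit on a source dataset, evaluate unchanged on a target dataset."""
    model = centroid_fit(X_src, y_src)
    return accuracy(y_tgt, centroid_predict(model, X_tgt))

# Hypothetical toy data: two datasets with the same labels but shifted features.
A_X = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2], [0.0, 0.0],
       [1.0, 0.9], [0.9, 1.1], [1.1, 1.0], [0.8, 1.0]]
A_y = [0, 0, 0, 0, 1, 1, 1, 1]
B_X = [[0.3, 0.2], [0.2, 0.4], [1.2, 1.3], [1.3, 1.1]]
B_y = [0, 0, 1, 1]

within_score = k_fold_accuracy(A_X, A_y, k=4)
cross_score = reuse_accuracy(A_X, A_y, B_X, B_y)
print(within_score, cross_score)
```

A gap between the within-dataset score and the cross-dataset score is the kind of signal that the generalisability comparison in the abstract is concerned with.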

PainFormer: a Vision Foundation Model for Automatic Pain Assessment

May 02, 2025

Abstract: Pain is a manifold condition that impacts a significant percentage of the population. Accurate and reliable pain evaluation for the people suffering is crucial to developing effective and advanced pain management protocols. Automatic pain assessment systems provide continuous monitoring and support decision-making processes, ultimately aiming to alleviate distress and prevent functional decline. This study introduces PainFormer, a vision foundation model based on multi-task learning principles trained simultaneously on 14 tasks/datasets with a total of 10.9 million samples. Functioning as an embedding extractor for various input modalities, the foundation model provides feature representations to the Embedding-Mixer, a transformer-based module that performs the final pain assessment. Extensive experiments employing behavioral modalities (including RGB, synthetic thermal, and estimated depth videos) and physiological modalities such as ECG, EMG, GSR, and fNIRS revealed that PainFormer effectively extracts high-quality embeddings from diverse input modalities. The proposed framework is evaluated on two pain datasets, BioVid and AI4Pain, and directly compared to 73 different methodologies documented in the literature. Experiments conducted in unimodal and multimodal settings demonstrate state-of-the-art performance across modalities and pave the way toward general-purpose models for automatic pain assessment.

Explainable Depression Detection via Head Motion Patterns

Jul 23, 2023

Abstract: While depression has been studied via multimodal non-verbal behavioural cues, head motion behaviour has not received much attention as a biomarker. This study demonstrates the utility of fundamental head-motion units, termed kinemes, for depression detection by adopting two distinct approaches, and employing distinctive features: (a) discovering kinemes from head motion data corresponding to both depressed patients and healthy controls, and (b) learning kineme patterns only from healthy controls, and computing statistics derived from reconstruction errors for both the patient and control classes. Employing machine learning methods, we evaluate depression classification performance on the BlackDog and AVEC2013 datasets. Our findings indicate that: (1) head motion patterns are effective biomarkers for detecting depressive symptoms, and (2) explanatory kineme patterns consistent with prior findings can be observed for the two classes. Overall, we achieve peak F1 scores of 0.79 and 0.82, respectively, over BlackDog and AVEC2013 for binary classification over episodic thin-slices, and a peak F1 of 0.72 over videos for AVEC2013.
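Approach (b) in this abstract, learning patterns from healthy controls only and then summarising reconstruction errors for both classes, can be sketched roughly as follows. This is an illustration under loud assumptions: the abstract does not say how kinemes are learned, so a simple k-means with deterministic initialisation is swapped in here, and the two-dimensional segments (think of them as hypothetical pitch/yaw summaries) and all function names are made up.

```python
from statistics import mean

def sq_dist(a, b):
    return sum((p - q) ** 2 for p, q in zip(a, b))

def learn_prototypes(segments, k, iterations=10):
    """Stand-in for kineme learning: k-means prototypes from control segments only.
    Initialised from the first k segments so the result is deterministic."""
    prototypes = [list(s) for s in segments[:k]]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for seg in segments:
            nearest = min(range(k), key=lambda j: sq_dist(prototypes[j], seg))
            clusters[nearest].append(seg)
        for j, members in enumerate(clusters):
            if members:  # keep the old prototype if a cluster ends up empty
                prototypes[j] = [mean(col) for col in zip(*members)]
    return prototypes

def reconstruction_errors(prototypes, segments):
    """Error of approximating each segment by its nearest prototype."""
    return [min(sq_dist(p, seg) for p in prototypes) for seg in segments]

def error_statistics(errors):
    """Per-subject summary features that a downstream classifier could consume."""
    return {"mean": mean(errors), "max": max(errors)}

# Hypothetical data: control segments define the prototypes; a second subject
# whose motion differs from the control patterns reconstructs poorly.
control_segments = [[0.0, 0.0], [1.0, 1.0], [0.1, 0.0], [0.9, 1.0]]
other_segments = [[3.0, 3.0], [2.5, 3.1]]

prototypes = learn_prototypes(control_segments, k=2)
control_stats = error_statistics(reconstruction_errors(prototypes, control_segments))
other_stats = error_statistics(reconstruction_errors(prototypes, other_segments))
print(control_stats, other_stats)
```

The design choice being illustrated is that the model never sees patient data during learning, so larger reconstruction-error statistics indicate departure from control-like motion rather than fit to a patient-specific pattern.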

Measuring Cognitive Workload Using Multimodal Sensors

May 05, 2022

Abstract: This study aims to identify a set of indicators to estimate cognitive workload using a multimodal sensing approach and machine learning. A set of three cognitive tests was conducted to induce cognitive workload in twelve participants at two levels of task difficulty (Easy and Hard). Four sensors were used to measure the participants' physiological changes: electrocardiogram (ECG), electrodermal activity (EDA), respiration (RESP), and blood oxygen saturation (SpO2). To understand the perceived cognitive workload, NASA-TLX was administered after each test and analysed using a chi-square test. Three well-known classifiers (LDA, SVM, and DT) were trained and tested independently using the physiological data. The statistical analysis showed that participants' perceived cognitive workload was significantly different (p<0.001) between the tests, which demonstrated the validity of the experimental conditions to induce different cognitive levels. Classification results showed that a fusion of ECG and EDA presented good discriminating power (acc=0.74) for cognitive workload detection. This study provides preliminary results in the identification of a possible set of indicators of cognitive workload. Future work needs to be carried out to validate the indicators using more realistic scenarios and with a larger population.

Pain Assessment based on fNIRS using Bidirectional LSTMs

Dec 27, 2020

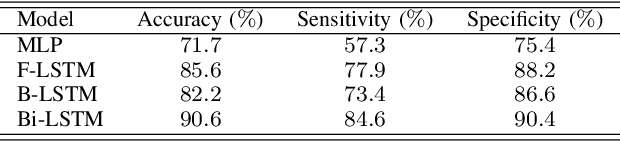

Abstract: Assessing pain in patients unable to speak (also called non-verbal patients) is extremely complicated and is often done by clinical judgement. However, this method is not reliable since patients' vital signs can fluctuate significantly due to other underlying medical conditions. No objective diagnostic test exists to date that can assist medical practitioners in the diagnosis of pain. In this study we propose the use of functional near-infrared spectroscopy (fNIRS) and deep learning for the assessment of human pain. The aim of this study is to explore the use of deep learning to automatically learn features from fNIRS raw data to reduce the level of subjectivity and domain knowledge required in the design of hand-crafted features. Four deep learning models were evaluated: multilayer perceptron (MLP), forward and backward long short-term memory networks (LSTM), and bidirectional LSTM (Bi-LSTM). The results showed that the Bi-LSTM model achieved the highest accuracy (90.6%) and was faster than the other three models. These results advance knowledge in pain assessment using neuroimaging as a method of diagnosis and represent a step closer to developing a physiologically based diagnosis of human pain that will benefit vulnerable populations who cannot self-report pain.
