Rajeev Gupta

Personalized Artificial General Intelligence (AGI) via Neuroscience-Inspired Continuous Learning Systems

Apr 27, 2025

Abstract: Artificial Intelligence has made remarkable advancements in recent years, primarily driven by increasingly large deep learning models. However, achieving true Artificial General Intelligence (AGI) demands fundamentally new architectures rather than merely scaling up existing models. Current approaches largely depend on expanding model parameters, which improves task-specific performance but falls short in enabling continuous, adaptable, and generalized learning. Achieving AGI capable of continuous learning and personalization on resource-constrained edge devices is an even bigger challenge. This paper reviews the state of continual learning and neuroscience-inspired AI, and proposes a novel architecture for Personalized AGI that integrates brain-like learning mechanisms for edge deployment. We review literature on continuous lifelong learning, catastrophic forgetting, and edge AI, and discuss key neuroscience principles of human learning, including synaptic pruning, Hebbian plasticity, sparse coding, and dual memory systems, as inspirations for AI systems. Building on these insights, we outline an AI architecture that features complementary fast-and-slow learning modules, synaptic self-optimization, and memory-efficient model updates to support on-device lifelong adaptation. Conceptual diagrams of the proposed architecture and learning processes are provided. We address challenges such as catastrophic forgetting, memory efficiency, and system scalability, and present application scenarios for mobile AI assistants and embodied AI systems like humanoid robots. We conclude with key takeaways and future research directions toward truly continual, personalized AGI on the edge. While the architecture is theoretical, it synthesizes diverse findings and offers a roadmap for future implementation.

Impersonation: Modeling Persona in Smart Responses to Email

Jun 12, 2018

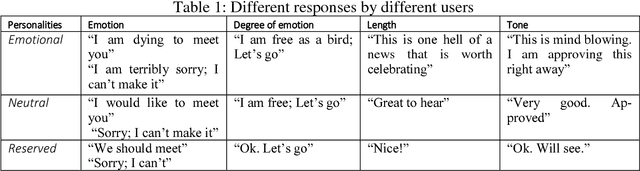

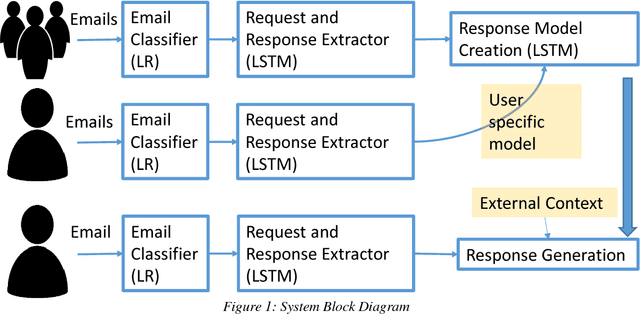

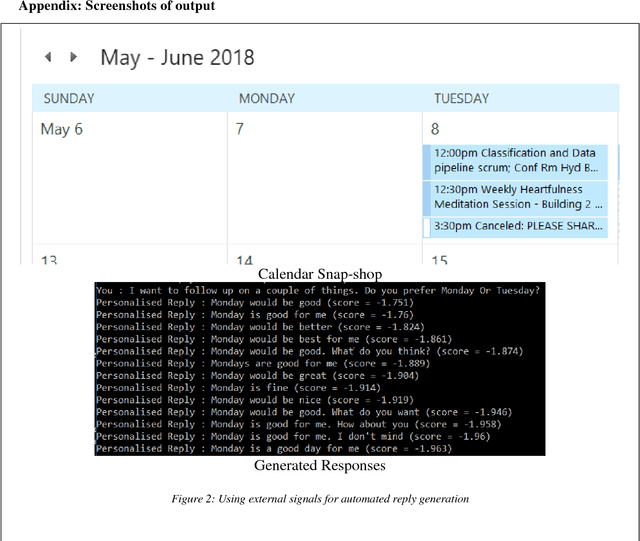

Abstract: In this paper, we present the design, implementation, and effectiveness of generating personalized suggestions for email replies. To personalize email responses based on the user's style and personality, we model the user's persona based on her past responses to emails. This model is added to the language-based model created across users using past responses from all users' emails. A user's model captures the typical responses of the user given a particular context. The context includes the email received, the recipient of the email, and other external signals such as calendar activities, preferences, etc. The context, along with the user's personality (e.g., extrovert, formal, reserved, etc.), is used to suggest responses. These responses can be a mixture of multiple modes: email replies (textual), audio clips, etc. This helps make the responses mimic the user as much as possible and helps the user be more productive while retaining her mark in the responses.
