R. Pito Salas

Lessons Learned: The Evolution of an Undergraduate Robotics Course in Computer Science

Apr 27, 2024

Abstract: Seven years ago (2016), we began integrating robotics into our Computer Science curriculum. This paper explores the mission, the initial goals and objectives, the specific choices we made along the way and why, and the outcomes. Of course, we were not the first to do so. Our contribution in this paper is to describe a seven-year experience in the hope that others going down this road will benefit, perhaps avoiding some missteps and dead ends. We offer our answers to many questions that anyone bootstrapping a new robotics program may have to deal with. At the end of the paper, we discuss a set of lessons learned. These include striking the right balance between depth and breadth in syllabus design and material organization, the significance of using physical robots and criteria for selecting a suitable robotics platform, insights into the scope and design of a robotics lab, the necessity of standardizing hardware and software configurations along with methods for implementing that standardization, and strategies for preparing students for the steep learning curve.

Teaching Continuity in Robotics Labs in the Age of Covid and Beyond

May 18, 2021

Abstract: This paper argues that training future roboticists and robotics engineers in Computer Science departments requires extensive direct work with real robots, and that this educational mission will be negatively impacted when access to robotics learning laboratories is curtailed. This is exactly the problem that robotics labs encountered in early 2020, at the start of the Covid pandemic. The paper then describes a remote/virtual robotics teaching laboratory and examines in detail what it would mean, what its benefits would be, and how it might be used. Part of this vision was implemented at our institution during 2020 and has been in constant use since then. The specific architecture and implementation, as far as it has been built, is described. The exciting insight in the conclusion is that the work encouraged and triggered by the pandemic appears to have strongly positive longer-term benefits: increasing access to robotics education, greatly increasing any one institution's ability to scale its robotics education, and potentially doing so while reducing costs.

Situated Multimodal Control of a Mobile Robot: Navigation through a Virtual Environment

Jul 13, 2020

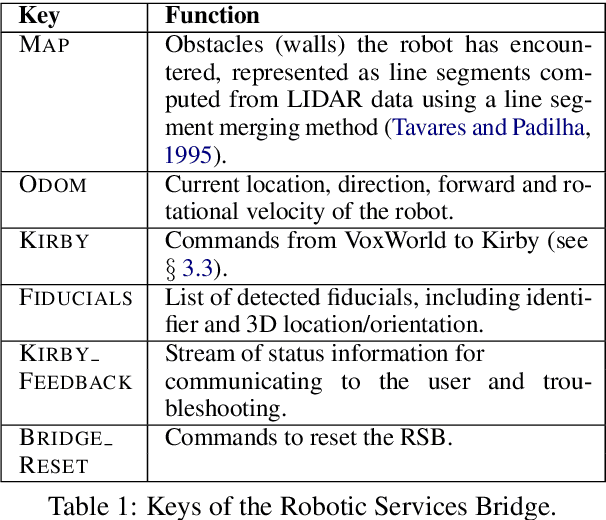

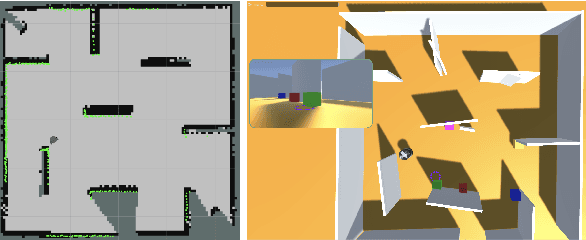

Abstract: We present a new interface for controlling a navigation robot in novel environments using coordinated gesture and language. We use a TurtleBot3 robot with a LIDAR and a camera, an embodied simulation of what the robot has encountered while exploring, and a cross-platform bridge facilitating generic communication. A human partner can deliver instructions to the robot using spoken English and gestures relative to the simulated environment to guide the robot through navigation tasks.