Pramudita Satria Palar

Data-Driven Global Sensitivity Analysis for Engineering Design Based on Individual Conditional Expectations

Dec 12, 2025

Abstract:Explainable machine learning techniques have gained increasing attention in engineering applications, especially in aerospace design and analysis, where understanding how input variables influence data-driven models is essential. Partial Dependence Plots (PDPs) are widely used for interpreting black-box models by showing the average effect of an input variable on the prediction. However, their global sensitivity metric can be misleading when strong interactions are present, as averaging tends to obscure interaction effects. To address this limitation, we propose a global sensitivity metric based on Individual Conditional Expectation (ICE) curves. The method computes the expected feature importance across ICE curves, along with their standard deviation, to more effectively capture the influence of interactions. We provide a mathematical proof demonstrating that the PDP-based sensitivity is a lower bound of the proposed ICE-based metric under truncated orthogonal polynomial expansion. In addition, we introduce an ICE-based correlation value to quantify how interactions modify the relationship between inputs and the output. Comparative evaluations were performed on three cases: a 5-variable analytical function, a 5-variable wind-turbine fatigue problem, and a 9-variable airfoil aerodynamics case, where ICE-based sensitivity was benchmarked against PDP, SHapley Additive exPlanations (SHAP), and Sobol' indices. The results show that ICE-based feature importance provides richer insights than the traditional PDP-based approach, while visual interpretations from PDP, ICE, and SHAP complement one another by offering multiple perspectives.
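
The contrast between PDP-based and ICE-based importance can be illustrated with a minimal sketch. It assumes that the importance of a single curve is its standard deviation over the grid; the abstract does not state the exact definition, so this is an illustrative choice rather than the paper's formula. The model `toy_model` and the helper names are hypothetical.

```python
import numpy as np

def ice_curves(predict, X, feature, grid):
    """Return an (n_samples, n_grid) array with one ICE curve per row of X."""
    curves = np.empty((X.shape[0], grid.size))
    for j, value in enumerate(grid):
        X_mod = X.copy()
        X_mod[:, feature] = value      # fix the feature, keep the others as sampled
        curves[:, j] = predict(X_mod)
    return curves

def pdp_and_ice_importance(predict, X, feature, grid):
    curves = ice_curves(predict, X, feature, grid)
    pdp = curves.mean(axis=0)          # the PDP is the average of the ICE curves
    pdp_importance = pdp.std()         # assumed metric: spread of the PDP curve
    per_curve = curves.std(axis=1)     # assumed metric applied to each ICE curve
    return pdp_importance, per_curve.mean(), per_curve.std()

def toy_model(X):
    # Hypothetical pure-interaction model: x0 matters only through x1.
    return X[:, 0] * X[:, 1]

rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(500, 2))
grid = np.linspace(-1.0, 1.0, 21)

pdp_imp, ice_mean, ice_std = pdp_and_ice_importance(toy_model, X, 0, grid)
print(f"PDP importance: {pdp_imp:.3f}")
print(f"ICE importance: mean {ice_mean:.3f}, std {ice_std:.3f}")
```

For this toy model the PDP of `x0` is nearly flat because the interaction averages out, while the individual ICE curves are clearly sloped, so the ICE-based value is much larger. With this particular metric, the PDP value can never exceed the ICE mean, since the standard deviation of an averaged curve is at most the average of the individual standard deviations.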

SMT-EX: An Explainable Surrogate Modeling Toolbox for Mixed-Variables Design Exploration

Mar 25, 2025

Abstract:Surrogate models are of high interest for many engineering applications, serving as cheap-to-evaluate, time-efficient approximations of black-box functions to help engineers and practitioners make decisions and understand complex systems. As such, the need for explainability methods is rising, and many studies have been performed to facilitate knowledge discovery from surrogate models. To meet this need, this paper introduces SMT-EX, an enhancement of the open-source Python Surrogate Modeling Toolbox (SMT) that integrates explainability techniques into a state-of-the-art surrogate modelling framework. More precisely, SMT-EX includes three key explainability methods: Shapley Additive Explanations, Partial Dependence Plot, and Individual Conditional Expectations. A dedicated explainability dependency of SMT has been developed for this purpose; it can be easily activated once the surrogate model is built, offering a user-friendly and efficient tool for swift insight extraction. The effectiveness of SMT-EX is showcased through two test cases. The first case is a 10-variable wing weight problem with purely continuous variables, and the second is a 3-variable mixed-categorical cantilever beam bending problem. Relying on SMT-EX analyses for these problems, we demonstrate its versatility in addressing a diverse range of problem characteristics. SMT-Explainability is freely available on GitHub: https://github.com/SMTorg/smt-explainability .

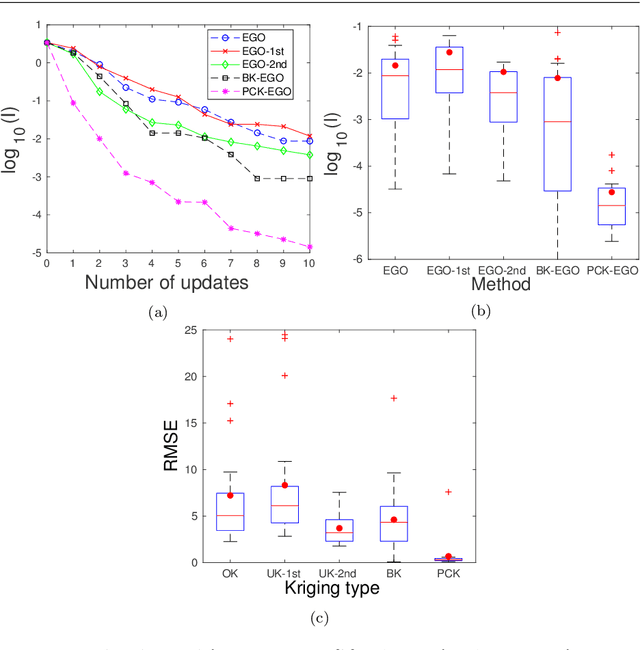

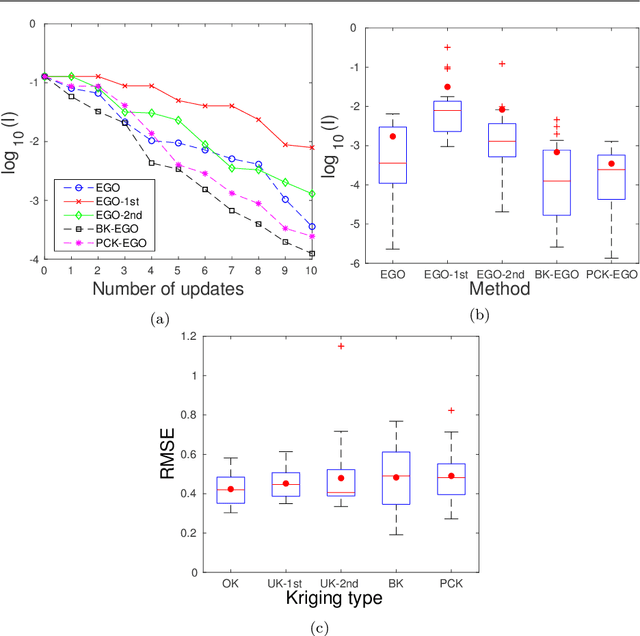

On efficient global optimization via universal Kriging surrogate models

Mar 23, 2018

Abstract:In this paper, we investigate the capability of the universal Kriging (UK) model for single-objective global optimization applied within an efficient global optimization (EGO) framework. We implemented this combined UK-EGO framework and studied four variants of the UK method: a UK with a first-order polynomial, a UK with a second-order polynomial, a blind Kriging (BK) implementation from the ooDACE toolbox, and a polynomial-chaos Kriging (PCK) implementation. The UK-EGO framework with automatic trend function selection derived from the BK and PCK models works by building a UK surrogate model and then performing optimization via the expected improvement criterion on the Kriging model with the lowest leave-one-out cross-validation error. Next, we studied and compared the UK-EGO variants and standard EGO using five synthetic test functions and one aerodynamic problem. Our results show that the proper choice of the trend function through automatic feature selection can improve the optimization performance of UK-EGO relative to EGO. From our results, we found that PCK-EGO was the best variant, as it performed more robustly than the rest of the UK-EGO schemes; however, total-order expansion should be used to generate the candidate trend function set for high-dimensional problems. Note that, for some test functions, the UK with predetermined polynomial trend functions performed better than BK and PCK, indicating that the use of automatic trend function selection does not always lead to the best-quality solutions. We also found that although some variants of UK are not as globally accurate as ordinary Kriging (OK), they can still identify better-optimized solutions due to the addition of the trend function, which helps the optimizer locate the global optimum.
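
The expected improvement criterion mentioned above has a standard closed form for a Gaussian predictor. The following is a minimal sketch for a minimization problem, assuming the surrogate supplies a predictive mean `mu` and standard deviation `sigma` at each candidate point; it does not reproduce the UK, BK, or PCK models themselves, and the function name is hypothetical.

```python
import math
import numpy as np

# Vectorized error function; otypes is set so that empty inputs are handled.
_erf = np.vectorize(math.erf, otypes=[float])

def expected_improvement(mu, sigma, f_best):
    """Closed-form expected improvement for minimization.

    mu, sigma : predictive mean and standard deviation at candidate points
    f_best    : best (lowest) objective value observed so far
    """
    mu = np.atleast_1d(np.asarray(mu, dtype=float))
    sigma = np.atleast_1d(np.asarray(sigma, dtype=float))
    ei = np.zeros_like(mu)

    positive = sigma > 0
    z = (f_best - mu[positive]) / sigma[positive]
    cdf = 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))           # standard normal CDF
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)   # standard normal PDF
    ei[positive] = (f_best - mu[positive]) * cdf + sigma[positive] * pdf

    # With zero predictive uncertainty, the improvement is deterministic.
    ei[~positive] = np.maximum(f_best - mu[~positive], 0.0)
    return ei

ei = expected_improvement(mu=[0.0, 1.0, 0.5], sigma=[1.0, 0.0, 0.0], f_best=0.5)
next_index = int(np.argmax(ei))  # candidate to evaluate next
```

In an EGO loop, the candidate with the largest expected improvement is evaluated next and the surrogate is then refitted with the new sample.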

Exploiting Active Subspaces in Global Optimization: How Complex is your Problem?

Jul 09, 2017

Abstract:When applying an optimization method to a real-world problem, prior knowledge and preliminary analysis of the landscape of a global optimization problem can give us insight into the complexity of the problem. This knowledge can better inform the decision of which optimization method should be used to tackle the problem. However, this analysis becomes problematic when the dimensionality of the problem is high. This paper presents a framework for taking a deeper look at the global optimization problem to be tackled by analyzing a low-dimensional representation of the problem, obtained by discovering its active subspaces. The virtue of this is that the problem's complexity can be visualized in a one- or two-dimensional plot, thus allowing one to get a better grip on the problem's difficulty. One could then have a better idea of the complexity of the problem when choosing a global optimizer or deciding which surrogate-model type to use. Furthermore, we also demonstrate how the active subspaces can be used to perform design exploration and analysis.
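
The discovery of an active subspace can be sketched with the standard gradient-based construction: estimate the matrix of averaged gradient outer products, take its eigendecomposition, and project the inputs onto the leading eigenvectors. This is a generic illustration under the assumption that gradients are available; the abstract does not say how the paper estimates them. The test function and the vector `w` are hypothetical.

```python
import numpy as np

def active_subspace(gradients, k):
    """Estimate a k-dimensional active subspace from sampled gradients.

    gradients : (n_samples, n_dims) array of gradient evaluations
    Returns the eigenvalues (descending) and the first k eigenvectors.
    """
    # Monte Carlo estimate of C = E[grad f(x) grad f(x)^T]
    C = gradients.T @ gradients / gradients.shape[0]
    eigvals, eigvecs = np.linalg.eigh(C)   # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]
    return eigvals[order], eigvecs[:, order[:k]]

# Hypothetical 5-variable function f(x) = sin(w . x), which varies along w only.
rng = np.random.default_rng(0)
w = np.array([3.0, 1.0, 0.0, 0.0, 0.5])
X = rng.uniform(-1.0, 1.0, size=(200, 5))
grads = np.cos(X @ w)[:, None] * w         # analytic gradient of sin(w . x)

eigvals, W1 = active_subspace(grads, k=1)
y_active = X @ W1                          # one-dimensional active variable
f_values = np.sin(X @ w)                   # plot f_values against y_active
```

A large gap after the first eigenvalue suggests that a one-dimensional plot of the function values against the active variable captures most of the variation in the function.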
