Piotr Garbat

Weaknesses of Facial Emotion Recognition Systems

Jan 18, 2026

Abstract: Emotion detection from faces is a machine learning problem central to human-computer interaction. The variety of methods used is enormous, which motivated an in-depth review of articles and scientific studies. Three of the most interesting and best-performing solutions are selected, along with three datasets that stand out for the diversity and number of their images. The selected neural networks are trained, and a series of experiments is then performed to compare their performance, including testing each model on datasets other than the one it was trained on. This reveals weaknesses in existing solutions, including differences between datasets, unequal levels of difficulty in recognizing certain emotions, and the challenge of differentiating between closely related emotions.
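The cross-dataset comparison described in the abstract can be illustrated with a minimal sketch. It is not the authors' evaluation code: the function names (`per_class_recall`, `accuracy`, `cross_dataset_accuracy`), the dictionary layout, and the toy labels are all assumptions made for illustration. Each model is represented only by a hypothetical `predict` callable, so the sketch stays independent of any particular network or dataset.

```python
from collections import Counter


def per_class_recall(y_true, y_pred):
    """Return {label: recall} for every label that occurs in y_true."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {label: hits[label] / totals[label] for label in totals}


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on an empty dataset")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_dataset_accuracy(models, datasets):
    """Evaluate every model on every dataset.

    models:   {train_name: predict_fn}, where predict_fn maps one sample
              to one label (hypothetical interface).
    datasets: {test_name: (samples, labels)}.
    Returns   {(train_name, test_name): accuracy}.
    """
    results = {}
    for train_name, predict in models.items():
        for test_name, (samples, labels) in datasets.items():
            preds = [predict(x) for x in samples]
            results[(train_name, test_name)] = accuracy(labels, preds)
    return results


if __name__ == "__main__":
    # Toy labels only; these are not results from the paper.
    y_true = ["happy", "happy", "fear", "surprise"]
    y_pred = ["happy", "happy", "surprise", "surprise"]
    print(per_class_recall(y_true, y_pred))
```

In the result of `cross_dataset_accuracy`, entries whose training and test names match give in-dataset accuracy, and the remaining entries show how well a model transfers to data it was not trained on. Per-class recall makes visible when one emotion is consistently mistaken for a closely related one, as `fear` is for `surprise` in the toy labels.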

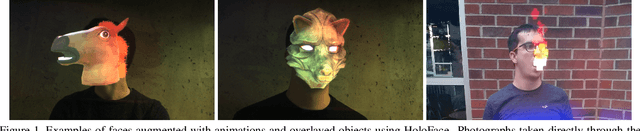

HoloFace: Augmenting Human-to-Human Interactions on HoloLens

Feb 01, 2018

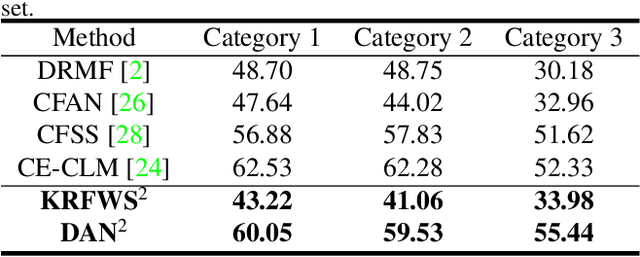

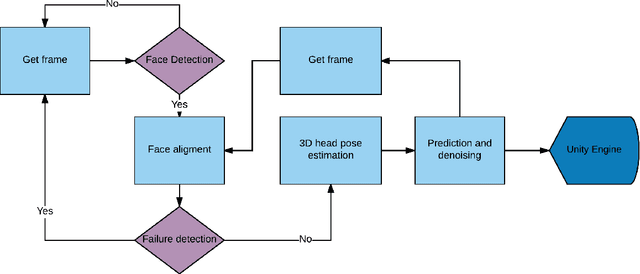

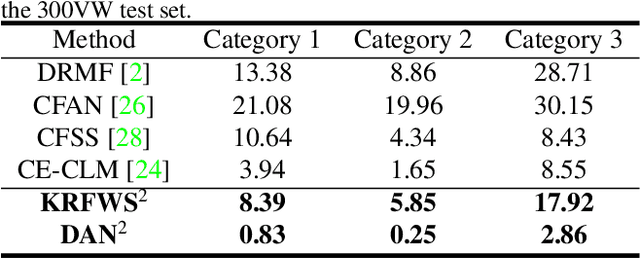

Abstract: We present HoloFace, an open-source framework for face alignment, head pose estimation and facial attribute retrieval for Microsoft HoloLens. HoloFace implements two state-of-the-art face alignment methods which can be used interchangeably: one running locally and one running on a remote backend. Head pose estimation is accomplished by fitting a deformable 3D model to the landmarks localized using face alignment. The head pose provides both the rotation of the head and a position in the world space. The parameters of the fitted 3D face model provide estimates of facial attributes such as mouth opening or smile. Together the above information can be used to augment the faces of people seen by the HoloLens user, and thus their interaction. Potential usage scenarios include facial recognition, emotion recognition, eye gaze tracking and many others. We demonstrate the capabilities of our framework by augmenting the faces of people seen through the HoloLens with various objects and animations.
