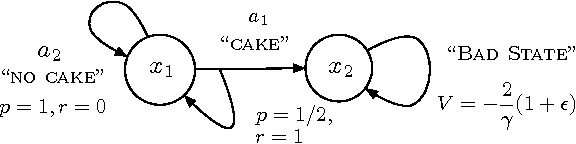

Using Options and Covariance Testing for Long Horizon Off-Policy Policy Evaluation

Dec 05, 2017 · Zhaohan Daniel Guo, Philip S. Thomas, Emma Brunskill

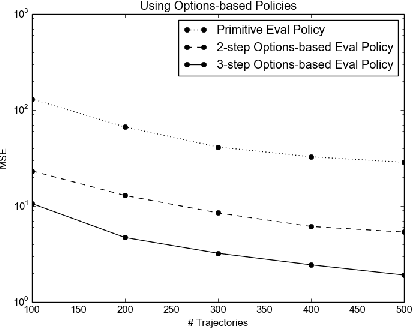

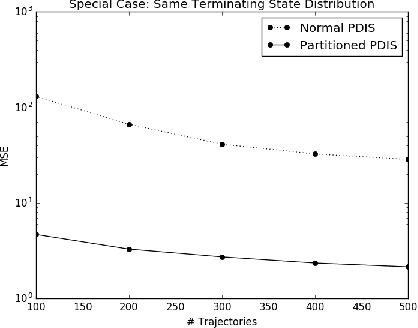

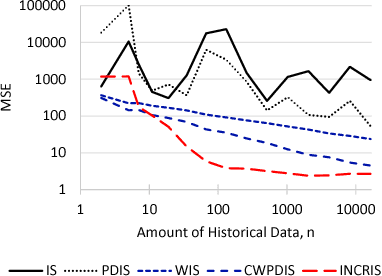

Evaluating a policy by deploying it in the real world can be risky and costly. Off-policy policy evaluation (OPE) algorithms use historical data collected from running a previous policy to evaluate a new policy, which provides a means for evaluating a policy without requiring it to ever be deployed. Importance sampling is a popular OPE method because it is robust to partial observability and works with continuous states and actions. However, the amount of historical data required by importance sampling can scale exponentially with the horizon of the problem: the number of sequential decisions that are made. We propose using policies over temporally extended actions, called options, and show that combining these policies with importance sampling can significantly improve performance for long-horizon problems. In addition, we can take advantage of special cases that arise due to options-based policies to further improve the performance of importance sampling. We further generalize these special cases to a general covariance testing rule that can be used to decide which weights to drop in an IS estimate, and derive a new IS algorithm called Incremental Importance Sampling that can provide significantly more accurate estimates for a broad class of domains.
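As a rough illustration of the baseline that this abstract builds on, here is a minimal sketch of ordinary trajectory-level importance sampling. It is not the paper's options-based or incremental estimator. The function name `is_estimate`, the trajectory format, and the policy callables are assumptions made for this example. Because the weight is a product of one likelihood ratio per step, its variance can grow quickly with the horizon.

```python
def is_estimate(trajectories, pi_e, pi_b, gamma=1.0):
    """Ordinary importance sampling estimate of the evaluation policy's return.

    trajectories: list of trajectories, each a list of (state, action, reward).
    pi_e, pi_b: hypothetical callables giving the probability of `action`
                in `state` under the evaluation and behavior policies.
    """
    estimates = []
    for traj in trajectories:
        weight = 1.0  # product of per-step likelihood ratios
        ret = 0.0     # discounted return of this trajectory
        for t, (s, a, r) in enumerate(traj):
            weight *= pi_e(s, a) / pi_b(s, a)
            ret += (gamma ** t) * r
        estimates.append(weight * ret)
    return sum(estimates) / len(estimates)


# Toy usage: one state, two actions, uniform behavior policy.
def pi_b(s, a):
    return 0.5

def pi_e(s, a):
    return 0.9 if a == 1 else 0.1

data = [[(0, 1, 1.0)], [(0, 0, 0.0)]]
estimate = is_estimate(data, pi_e, pi_b)
```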

On Ensuring that Intelligent Machines Are Well-Behaved

Aug 17, 2017 · Philip S. Thomas, Bruno Castro da Silva, Andrew G. Barto, Emma Brunskill

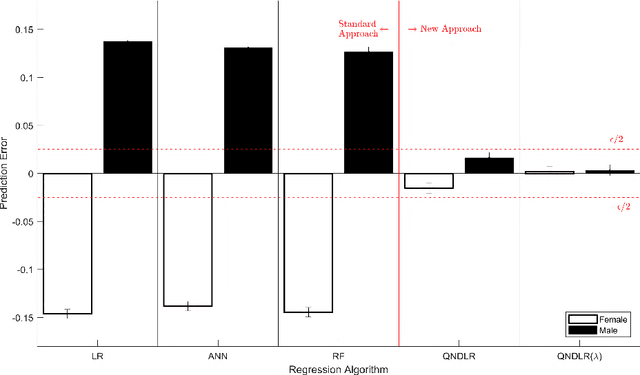

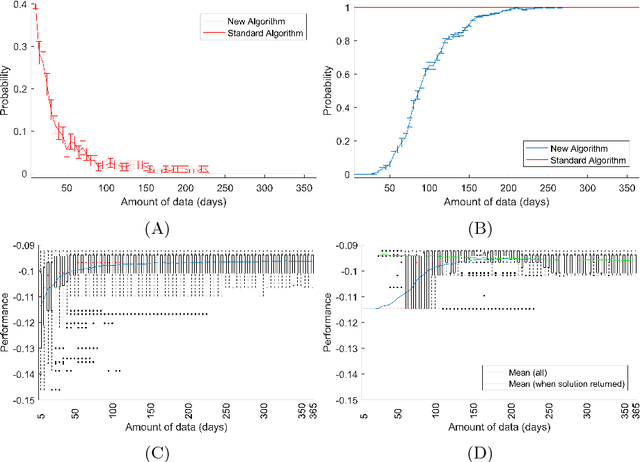

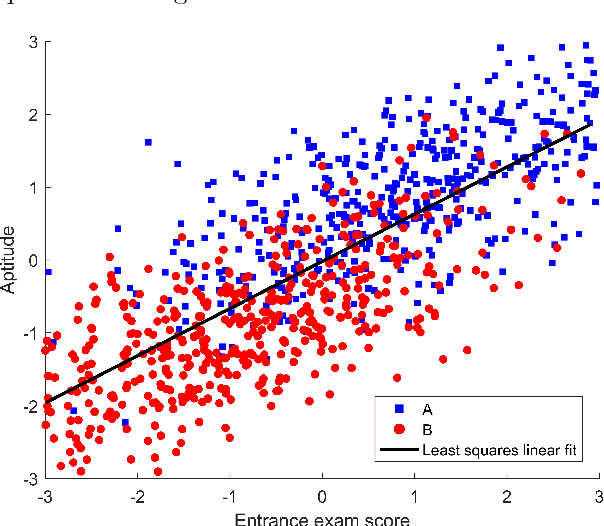

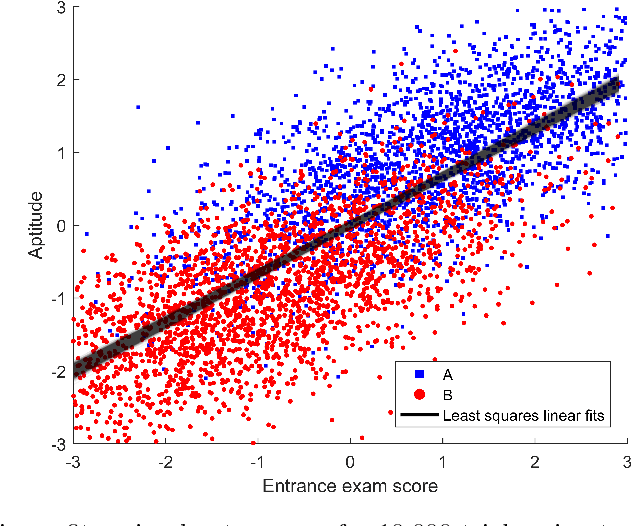

Machine learning algorithms are everywhere, ranging from simple data analysis and pattern recognition tools used across the sciences to complex systems that achieve super-human performance on various tasks. Ensuring that they are well-behaved (that they do not, for example, cause harm to humans or act in a racist or sexist way) is therefore not a hypothetical problem to be dealt with in the future, but a pressing one that we address here. We propose a new framework for designing machine learning algorithms that simplifies the problem of specifying and regulating undesirable behaviors. To show the viability of this new framework, we use it to create new machine learning algorithms that preclude the sexist and harmful behaviors exhibited by standard machine learning algorithms in our experiments. Our framework for designing machine learning algorithms simplifies the safe and responsible application of machine learning.

Policy Gradient Methods for Reinforcement Learning with Function Approximation and Action-Dependent Baselines

Jun 20, 2017 · Philip S. Thomas, Emma Brunskill

We show how an action-dependent baseline can be used in the policy gradient theorem with function approximation, which Sutton et al. (2000) originally presented with action-independent baselines.
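For context, the following standard identity (not taken from the paper itself) suggests why action-independent baselines are the simple case. The symbols $\pi_\theta$ for the parameterized policy and $b$ for the baseline are notation assumed here. For a baseline that depends only on the state,

$$
\sum_a \nabla_\theta \pi_\theta(a \mid s)\, b(s) \;=\; b(s)\, \nabla_\theta \sum_a \pi_\theta(a \mid s) \;=\; b(s)\, \nabla_\theta 1 \;=\; 0,
$$

so subtracting $b(s)$ leaves the gradient unchanged. For a baseline $b(s, a)$, the sum $\sum_a \nabla_\theta \pi_\theta(a \mid s)\, b(s, a)$ does not vanish in general, so it has to be accounted for explicitly.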

Data-Efficient Policy Evaluation Through Behavior Policy Search

Jun 12, 2017 · Josiah P. Hanna, Philip S. Thomas, Peter Stone, Scott Niekum

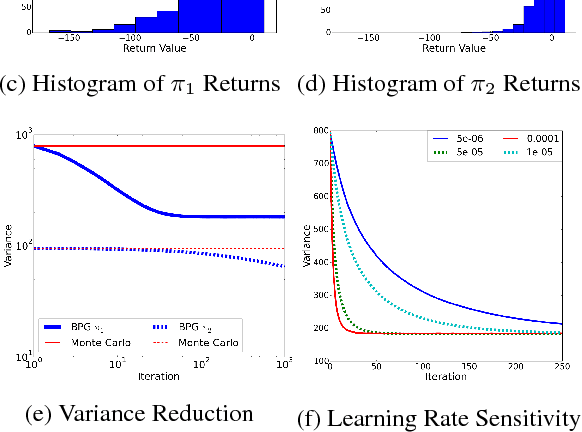

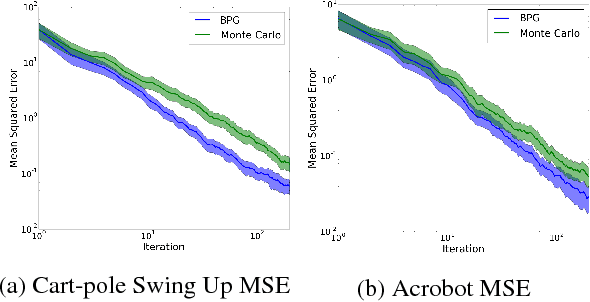

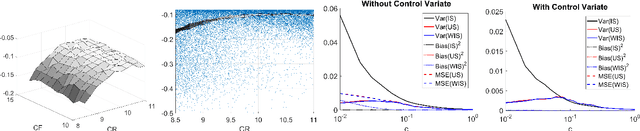

We consider the task of evaluating a policy for a Markov decision process (MDP). The standard unbiased technique for evaluating a policy is to deploy the policy and observe its performance. We show that the data collected from deploying a different policy, commonly called the behavior policy, can be used to produce unbiased estimates with lower mean squared error than this standard technique. We derive an analytic expression for the optimal behavior policy, that is, the behavior policy that minimizes the mean squared error of the resulting estimates. Because this expression depends on terms that are unknown in practice, we propose a novel policy evaluation sub-problem, behavior policy search: searching for a behavior policy that reduces mean squared error. We present a behavior policy search algorithm and empirically demonstrate its effectiveness in lowering the mean squared error of policy performance estimates.
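The claim that off-policy data can beat on-policy evaluation can be seen in a toy setting. The sketch below is not the paper's algorithm. It assumes a one-step problem (a two-armed bandit) with deterministic, positive rewards, and relies on the standard importance sampling fact that sampling actions in proportion to `pi_e[a] * rewards[a]` gives every sample the same weighted return. All names and numbers are made up for illustration.

```python
import random

def is_samples(pi_e, pi_b, rewards, n, seed=0):
    """Draw n actions from pi_b and return the importance-weighted rewards."""
    rng = random.Random(seed)
    actions = list(range(len(rewards)))
    samples = []
    for _ in range(n):
        a = rng.choices(actions, weights=pi_b)[0]
        samples.append(pi_e[a] / pi_b[a] * rewards[a])
    return samples

pi_e = [0.8, 0.2]        # evaluation policy (assumed)
rewards = [1.0, 5.0]     # deterministic, positive rewards (assumed)
value = sum(p * r for p, r in zip(pi_e, rewards))

# Behavior policy proportional to pi_e[a] * rewards[a].
pi_b_opt = [p * r / value for p, r in zip(pi_e, rewards)]

on_policy = is_samples(pi_e, pi_e, rewards, 1000)       # varies between samples
off_policy = is_samples(pi_e, pi_b_opt, rewards, 1000)  # constant, equal to value
```

In this toy case the variance cannot be reduced further, but computing `pi_b_opt` requires `value`, which is the quantity being estimated. This is consistent with the abstract's point that the optimal behavior policy depends on unknown terms and must be searched for.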

Decoupling Learning Rules from Representations

Jun 09, 2017 · Philip S. Thomas, Christoph Dann, Emma Brunskill

In the artificial intelligence field, learning often corresponds to changing the parameters of a parameterized function. A learning rule is an algorithm or mathematical expression that specifies precisely how the parameters should be changed. When creating an artificial intelligence system, we must make two decisions: what representation should be used (i.e., what parameterized function should be used) and what learning rule should be used to search through the resulting set of representable functions. Using most learning rules, these two decisions are coupled in a subtle (and often unintentional) way. That is, using the same learning rule with two different representations that can represent the same sets of functions can result in two different outcomes. After arguing that this coupling is undesirable, particularly when using artificial neural networks, we present a method for partially decoupling these two decisions for a broad class of learning rules that span unsupervised learning, reinforcement learning, and supervised learning.
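The coupling described above can be seen in a very small case. The sketch below is an illustration chosen for this listing, not an example from the paper. It applies one step of plain gradient descent on a squared error to two parameterizations of the same set of linear functions, `f(x) = w * x` and `f(x) = 2 * v * x`, starting from the same function.

```python
def gd_step_direct(w, x, y, lr):
    """One gradient step on 0.5 * (w * x - y) ** 2 with respect to w."""
    grad = (w * x - y) * x
    return w - lr * grad

def gd_step_scaled(v, x, y, lr):
    """One gradient step on 0.5 * (2 * v * x - y) ** 2 with respect to v."""
    grad = (2 * v * x - y) * 2 * x
    return v - lr * grad

x, y, lr = 1.0, 3.0, 0.1
w0, v0 = 1.0, 0.5            # both represent the function f(x) = 1.0 * x

w1 = gd_step_direct(w0, x, y, lr)
v1 = gd_step_scaled(v0, x, y, lr)

slope_direct = w1            # slope of the function after the update
slope_scaled = 2 * v1        # slope under the second parameterization
```

Both runs use the same learning rule, data point, and step size, yet the resulting functions differ: the slope moves from 1.0 to about 1.2 in the first case and to about 1.8 in the second.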

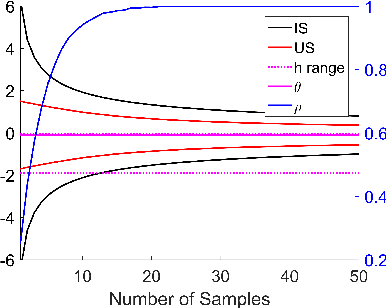

Importance Sampling with Unequal Support

Nov 10, 2016 · Philip S. Thomas, Emma Brunskill

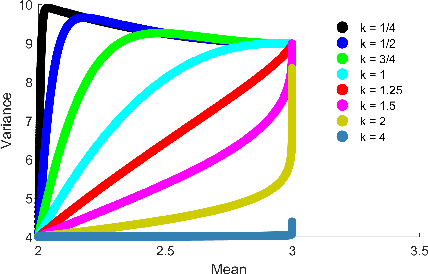

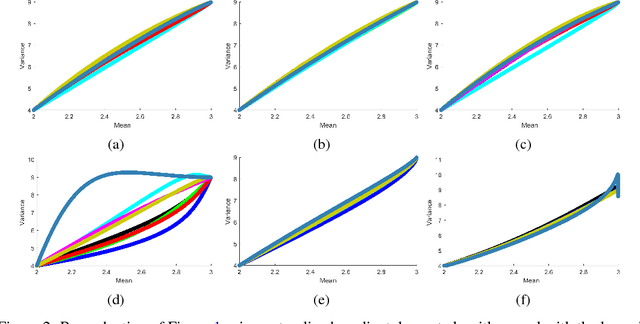

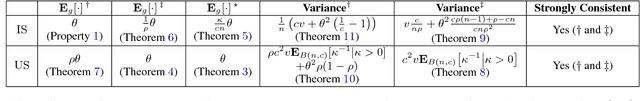

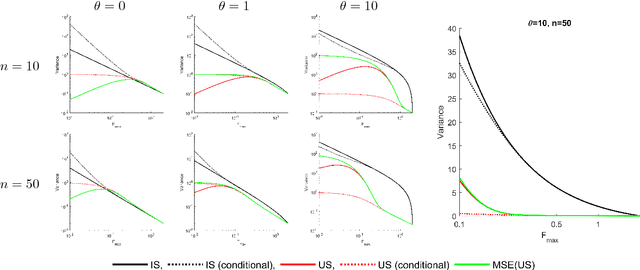

Importance sampling is often used in machine learning when training and testing data come from different distributions. In this paper we propose a new variant of importance sampling that can reduce the variance of importance sampling-based estimates by orders of magnitude when the supports of the training and testing distributions differ. After motivating and presenting our new importance sampling estimator, we provide a detailed theoretical analysis that characterizes both its bias and variance relative to the ordinary importance sampling estimator (in various settings, which include cases where ordinary importance sampling is biased, while our new estimator is not, and vice versa). We conclude with an example of how our new importance sampling estimator can be used to improve estimates of how well a new treatment policy for diabetes will work for an individual, using only data from when the individual used a previous treatment policy.

A Notation for Markov Decision Processes

Sep 08, 2016 · Philip S. Thomas, Billy Okal

This paper specifies a notation for Markov decision processes.

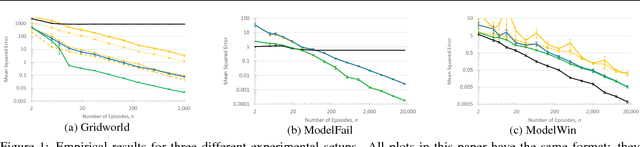

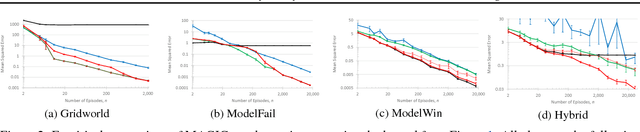

Data-Efficient Off-Policy Policy Evaluation for Reinforcement Learning

Apr 04, 2016 · Philip S. Thomas, Emma Brunskill

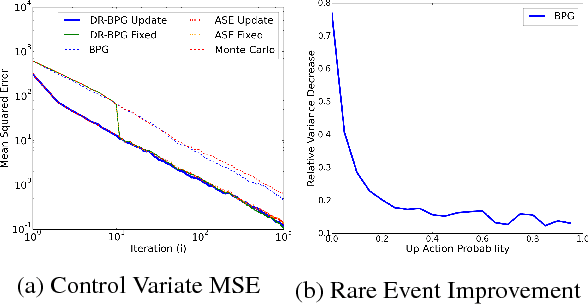

In this paper we present a new way of predicting the performance of a reinforcement learning policy given historical data that may have been generated by a different policy. The ability to evaluate a policy from historical data is important for applications where the deployment of a bad policy can be dangerous or costly. We show empirically that our algorithm produces estimates that often have orders of magnitude lower mean squared error than existing methods; that is, it makes more efficient use of the available data. Our new estimator is based on two advances: an extension of the doubly robust estimator (Jiang and Li, 2015), and a new way to mix between model-based estimates and importance-sampling-based estimates.
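To make the starting point concrete, here is a minimal sketch of the step-wise doubly robust recursion in the style of the cited Jiang and Li estimator, for a single trajectory. It does not implement the paper's extension or its mixing scheme. The function name, the trajectory format, and the model callables `q_hat` and `v_hat` are assumptions for this example.

```python
def doubly_robust(traj, pi_e, pi_b, q_hat, v_hat, gamma=1.0):
    """Step-wise doubly robust estimate for one trajectory.

    traj: list of (state, action, reward) tuples.
    pi_e, pi_b: hypothetical callables returning action probabilities.
    q_hat, v_hat: approximate action-value and state-value functions
                  of the evaluation policy (for example, from a model).
    """
    estimate = 0.0
    # Work backward: each step corrects the model's prediction with the
    # importance-weighted difference between what was observed and predicted.
    for s, a, r in reversed(traj):
        rho = pi_e(s, a) / pi_b(s, a)
        estimate = v_hat(s) + rho * (r + gamma * estimate - q_hat(s, a))
    return estimate


# With an all-zero model the recursion reduces to per-decision importance sampling.
def pi_b(s, a):
    return 0.5

def pi_e(s, a):
    return 0.75 if a == 1 else 0.25

traj = [(0, 1, 1.0), (1, 0, 2.0)]
dr_zero_model = doubly_robust(traj, pi_e, pi_b, lambda s, a: 0.0, lambda s: 0.0)
```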

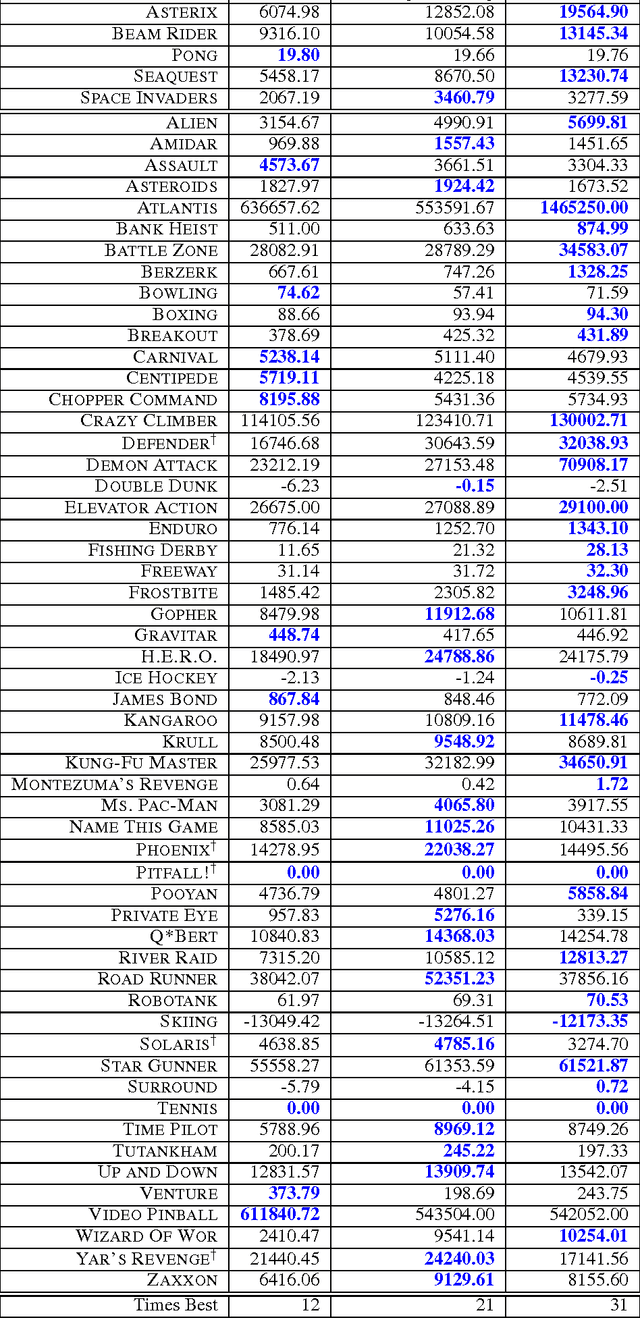

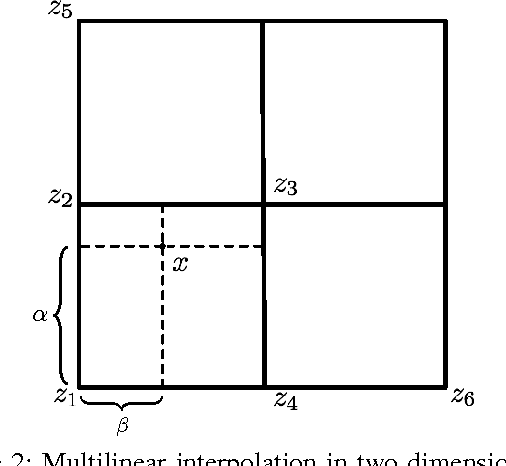

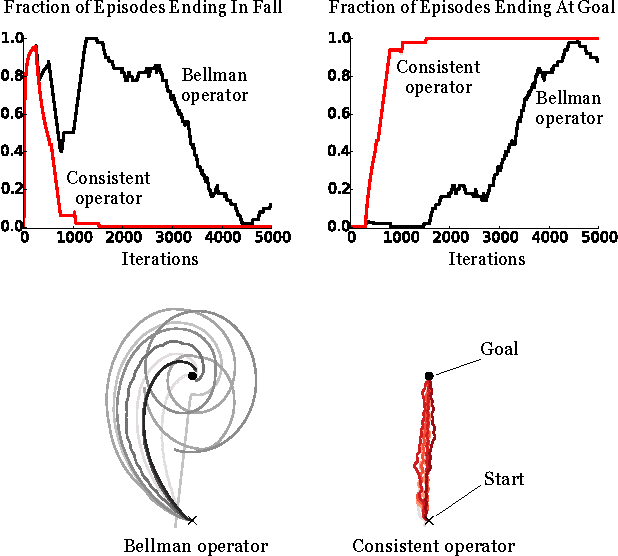

Increasing the Action Gap: New Operators for Reinforcement Learning

Dec 15, 2015 · Marc G. Bellemare, Georg Ostrovski, Arthur Guez, Philip S. Thomas, Rémi Munos

This paper introduces new optimality-preserving operators on Q-functions. We first describe an operator for tabular representations, the consistent Bellman operator, which incorporates a notion of local policy consistency. We show that this local consistency leads to an increase in the action gap at each state; increasing this gap, we argue, mitigates the undesirable effects of approximation and estimation errors on the induced greedy policies. This operator can also be applied to discretized continuous space and time problems, and we provide empirical results evidencing superior performance in this context. Extending the idea of a locally consistent operator, we then derive sufficient conditions for an operator to preserve optimality, leading to a family of operators which includes our consistent Bellman operator. As corollaries we provide a proof of optimality for Baird's advantage learning algorithm and derive other gap-increasing operators with interesting properties. We conclude with an empirical study on 60 Atari 2600 games illustrating the strong potential of these new operators.
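A tabular sketch may help make the idea of local policy consistency concrete. It assumes the consistent Bellman operator backs up `Q(x, a)` itself, rather than `max_b Q(x, b)`, on the part of the transition that returns to the same state. That form is our reading of the operator and should be checked against the paper. The array layout and function names are choices made for this example.

```python
import numpy as np

def bellman(Q, P, R, gamma):
    """Standard Bellman optimality backup.

    Q, R: arrays of shape (S, A). P: array of shape (S, A, S) with P[x, a, x'].
    """
    V = Q.max(axis=1)
    return R + gamma * (P @ V)

def consistent_bellman(Q, P, R, gamma):
    """Assumed consistent backup: on self-transitions, keep the same action."""
    V = Q.max(axis=1)
    self_prob = np.einsum("xax->xa", P)  # P[x, a, x] for every (x, a)
    # Replace max_b Q(x, b) with Q(x, a) on the self-transition mass.
    return R + gamma * (P @ V - self_prob * V[:, None] + self_prob * Q)

# Two states, two actions: action 0 always leads to state 0, action 1 to state 1.
P = np.zeros((2, 2, 2))
P[:, 0, 0] = 1.0
P[:, 1, 1] = 1.0
R = np.zeros((2, 2))
Q = np.array([[1.0, 3.0], [2.0, 0.0]])

standard = bellman(Q, P, R, gamma=0.5)
consistent = consistent_bellman(Q, P, R, gamma=0.5)
```

Under this assumed form the consistent backup never exceeds the standard one, and the two coincide wherever there is no self-transition. It lowers the values of actions that stay in place without currently being greedy, which is one way to picture the action-gap increase the abstract describes.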
