Pedro Santana

An Introduction to Deep Reinforcement and Imitation Learning

Dec 11, 2025

Abstract:Embodied agents, such as robots and virtual characters, must continuously select actions to execute tasks effectively, solving complex sequential decision-making problems. Given the difficulty of designing such controllers manually, learning-based approaches have emerged as promising alternatives, most notably Deep Reinforcement Learning (DRL) and Deep Imitation Learning (DIL). DRL leverages reward signals to optimize behavior, while DIL uses expert demonstrations to guide learning. This document introduces DRL and DIL in the context of embodied agents, adopting a concise, depth-first approach to the literature. It is self-contained, presenting all necessary mathematical and machine learning concepts as they are needed. It is not intended as a survey of the field; rather, it focuses on a small set of foundational algorithms and techniques, prioritizing in-depth understanding over broad coverage. The material ranges from Markov Decision Processes to REINFORCE and Proximal Policy Optimization (PPO) for DRL, and from Behavioral Cloning to Dataset Aggregation (DAgger) and Generative Adversarial Imitation Learning (GAIL) for DIL.

Monocular Trail Detection and Tracking Aided by Visual SLAM for Small Unmanned Aerial Vehicles

Jun 21, 2018

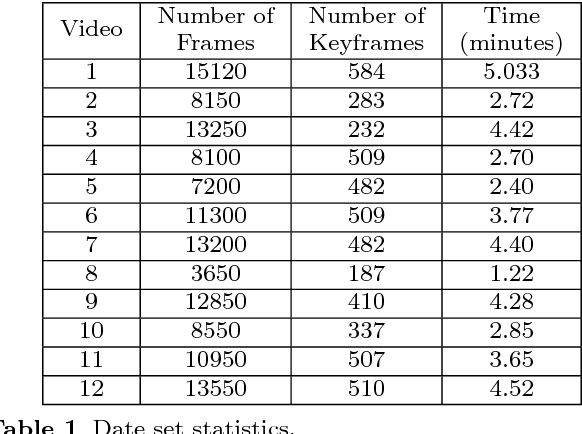

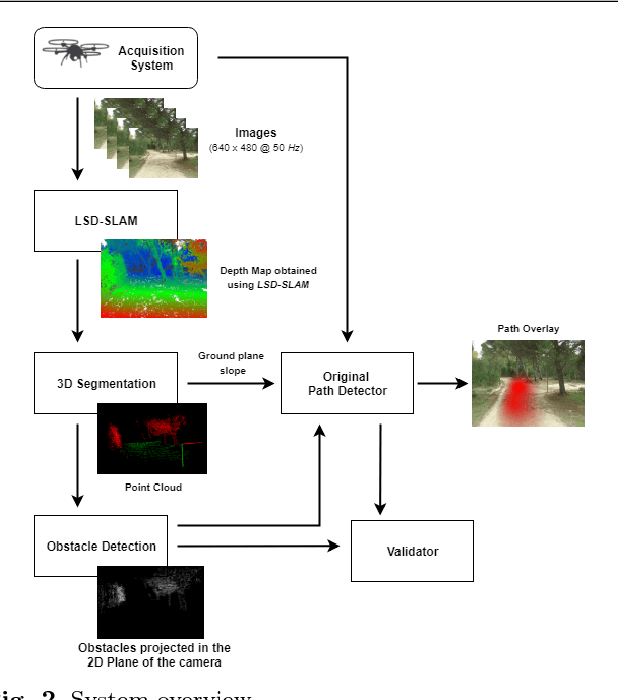

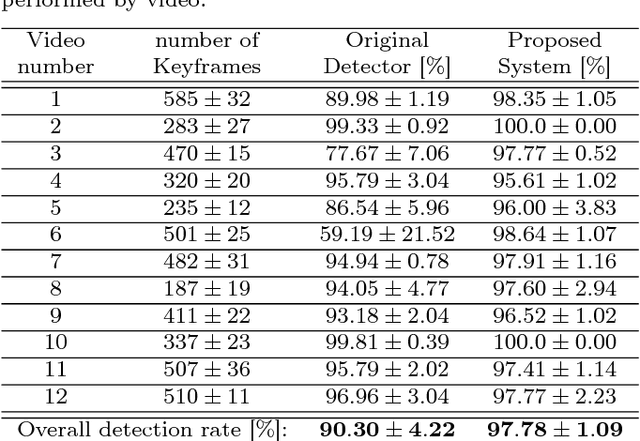

Abstract:This paper presents a monocular vision system that can be installed on small and medium-sized unmanned aerial vehicles built to perform missions in forest environments (e.g., search and rescue). The proposed system extends a previous monocular technique for trail detection and tracking to take into account volumetric data acquired from a Visual SLAM algorithm and, as a result, to increase its robustness on challenging trails. The experimental results, obtained from a set of 12 videos recorded with a camera installed on a teleoperated small unmanned aerial vehicle, show the ability of the proposed system to overcome some of the difficulties of the original detector, attaining a success rate of $97.8\,\%$.
