Paulo Eduardo Santos

Improving Underwater Acoustic Classification Through Learnable Gabor Filter Convolution and Attention Mechanisms

Dec 09, 2025

Abstract: Remotely detecting and classifying underwater acoustic targets is critical for environmental monitoring and defence. However, the complex nature of ship-radiated and environmental underwater noise poses significant challenges to accurate signal processing. While recent advancements in machine learning have improved classification accuracy, issues such as limited dataset availability and a lack of standardised experimentation hinder generalisation and robustness. This paper introduces GSE ResNeXt, a deep learning architecture integrating learnable Gabor convolutional layers with a ResNeXt backbone enhanced by squeeze-and-excitation attention mechanisms. The Gabor filters serve as two-dimensional adaptive band-pass filters, extending the feature channel representation. Their combination with channel attention improves training stability and convergence while enhancing the model's ability to extract discriminative features. The model is evaluated on three classification tasks of increasing complexity. In particular, the impact of temporal differences between the training and testing data is explored, revealing that the distance between the vessel and sensor significantly affects performance. Results show that GSE ResNeXt consistently outperforms baseline models such as Xception, ResNet, and MobileNetV2 in classification performance. Regarding stability and convergence, adding Gabor convolutions to the initial layers of the model yields a 28% reduction in training time. These results emphasise the importance of signal processing strategies in improving the reliability and generalisation of models under different environmental conditions, especially in data-limited underwater acoustic classification scenarios. Future developments should focus on mitigating the impact of environmental factors on input signals.
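To make the idea of a two-dimensional band-pass Gabor filter concrete, here is a minimal sketch of the standard real-valued Gabor kernel (a Gaussian envelope multiplied by a cosine carrier). It is not the paper's implementation: the function name `gabor_kernel`, the parameter names, and the example values are all assumptions chosen for illustration. In a learnable Gabor layer, parameters such as `sigma`, `theta`, and `wavelength` would presumably be trained rather than fixed as they are here.

```python
import math


def gabor_kernel(size, sigma, theta, wavelength, gamma=1.0, psi=0.0):
    """Return a real-valued 2D Gabor kernel as a size x size nested list.

    All parameter names are illustrative assumptions:
    sigma      - width of the Gaussian envelope
    theta      - orientation of the carrier, in radians
    wavelength - period of the cosine carrier, in samples
    gamma      - aspect ratio of the envelope
    psi        - phase offset of the carrier
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a centre")
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate the coordinates by theta.
            x_r = x * math.cos(theta) + y * math.sin(theta)
            y_r = -x * math.sin(theta) + y * math.cos(theta)
            # Gaussian envelope localises the filter in space.
            envelope = math.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2.0 * sigma ** 2))
            # Cosine carrier selects a frequency band along x_r.
            carrier = math.cos(2.0 * math.pi * x_r / wavelength + psi)
            row.append(envelope * carrier)
        kernel.append(row)
    return kernel


# A small bank of four orientations (hypothetical values).
bank = [gabor_kernel(7, 2.0, k * math.pi / 4, 4.0) for k in range(4)]
```

Each kernel in `bank` could be used as the weights of one convolution channel over a spectrogram, which is one way a filter bank of this kind extends the feature channel representation.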

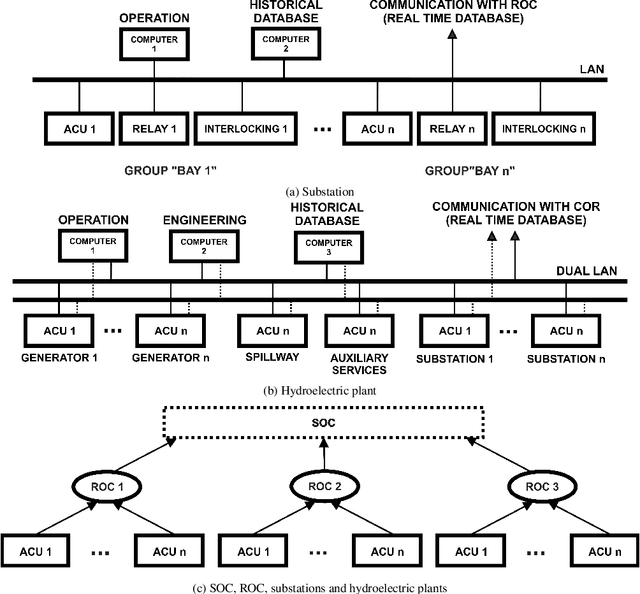

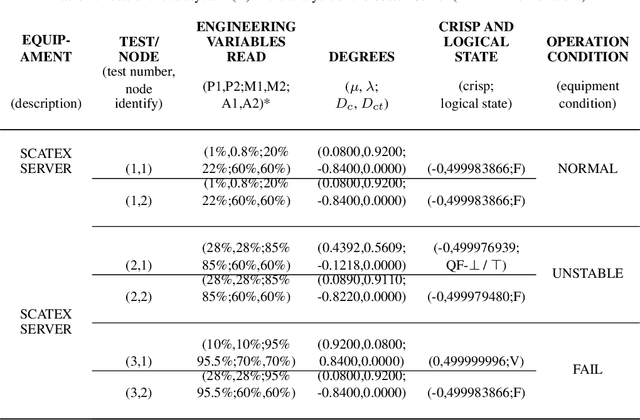

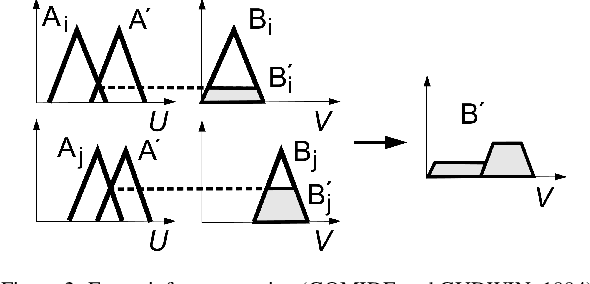

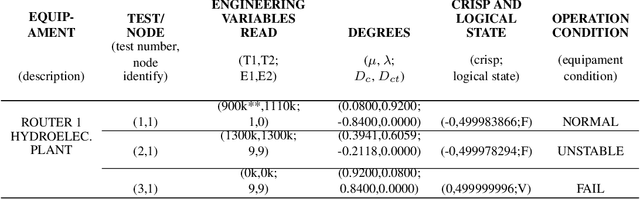

Monitoring electrical systems data-network equipment by means of Fuzzy and Paraconsistent Annotated Logic

May 24, 2021

Abstract: The constant increase in the amount and complexity of information obtained from IT data network elements, for its correct monitoring and management, is a reality. The same happens to data networks in electrical systems that provide effective supervision and control of substations and hydroelectric plants. Contributing to this fact is the growing number of installations and new environments monitored by such data networks and the constant evolution of the technologies involved. This situation potentially leads to incomplete and/or contradictory data, issues that must be addressed in order to maintain a good level of monitoring and, consequently, management of these systems. In this paper, a prototype of an expert system is developed to monitor the status of equipment of data networks in electrical systems, which deals with inconsistencies without trivialising the inferences. This is accomplished in the context of the remote control of hydroelectric plants and substations by a Regional Operation Centre (ROC). The expert system is developed with algorithms defined upon a combination of Fuzzy logic and Paraconsistent Annotated Logic with Annotation of Two Values (PAL2v) in order to analyse uncertain signals and generate the operating conditions (faulty, normal, unstable or inconsistent / indeterminate) of the equipment that are identified as important for the remote control of hydroelectric plants and substations. A prototype of this expert system was installed on a virtualised server with CLP500 software (from the EFACEC manufacturer) that was applied to investigate scenarios consisting of a Regional (Brazilian) Operation Centre, with a Generic Substation and a Generic Hydroelectric Plant, representing a remote control environment.
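As a rough illustration of how PAL2v handles contradictory evidence without trivialising inferences, the sketch below computes the two standard PAL2v quantities from a favourable evidence degree `mu` and an unfavourable evidence degree `lam`: the certainty degree (`mu - lam`) and the contradiction degree (`mu + lam - 1`). The function names, the single `threshold` value, and the state labels are assumptions for illustration. The mapping from these logical states to the paper's operating conditions (faulty, normal, unstable, inconsistent / indeterminate) is not given in the abstract and is not reproduced here.

```python
def pal2v_degrees(mu, lam):
    """Return (certainty, contradiction) degrees for evidence in [0, 1].

    mu  - favourable evidence degree
    lam - unfavourable evidence degree
    """
    certainty = mu - lam            # ranges over [-1, 1]
    contradiction = mu + lam - 1.0  # ranges over [-1, 1]
    return certainty, contradiction


def pal2v_state(mu, lam, threshold=0.5):
    """Classify an annotation into an extreme logical state.

    The threshold is a hypothetical control value, not taken from the paper.
    """
    certainty, contradiction = pal2v_degrees(mu, lam)
    if contradiction >= threshold:
        return "inconsistent"    # both evidences are high
    if contradiction <= -threshold:
        return "indeterminate"   # both evidences are low
    if certainty >= threshold:
        return "true"
    if certainty <= -threshold:
        return "false"
    return "undefined"           # no extreme state reached
```

For instance, two sensors that both report strong evidence for and against a fault give `pal2v_state(1.0, 1.0)`, which lands in the inconsistent region instead of forcing a true/false decision.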

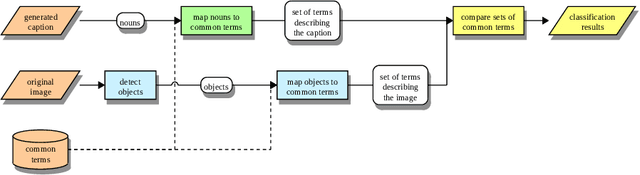

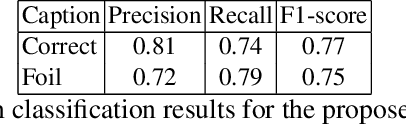

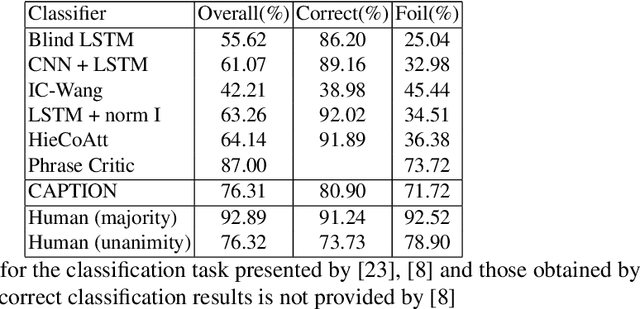

CAPTION: Correction by Analyses, POS-Tagging and Interpretation of Objects using only Nouns

Oct 02, 2020

Abstract: Recently, Deep Learning (DL) methods have shown excellent performance in image captioning and visual question answering. However, despite their performance, DL methods do not learn the semantics of the words that are being used to describe a scene, making it difficult to spot incorrect words used in captions or to interchange words that have similar meanings. This work proposes a combination of DL methods for object detection and natural language processing to validate image captions. We test our method on the FOIL-COCO data set, since it provides correct and incorrect captions for various images using only objects represented in the MS-COCO image data set. Results show that our method has a good overall performance, in some cases similar to human performance.
