Paul Checchin

When and Where Localization Fails: An Analysis of the Iterative Closest Point in Evolving Environment

Jul 23, 2025

Abstract: Robust relocalization in dynamic outdoor environments remains a key challenge for autonomous systems relying on 3D lidar. While long-term localization has been widely studied, short-term environmental changes, occurring over days or weeks, remain underexplored despite their practical significance. To address this gap, we present a high-resolution, short-term multi-temporal dataset collected weekly from February to April 2025 across natural and semi-urban settings. Each session includes high-density point cloud maps, 360° panoramic images, and trajectory data. Projected lidar scans, derived from the point cloud maps and modeled with sensor-accurate occlusions, are used to evaluate alignment accuracy against the ground truth using two Iterative Closest Point (ICP) variants: Point-to-Point and Point-to-Plane. Results show that Point-to-Plane offers significantly more stable and accurate registration, particularly in areas with sparse features or dense vegetation. This study provides a structured dataset for evaluating short-term localization robustness, a reproducible framework for analyzing scan-to-map alignment under noise, and a comparative evaluation of ICP performance in evolving outdoor environments. Our analysis underscores how local geometry and environmental variability affect localization success, offering insights for designing more resilient robotic systems.
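As a rough illustration of the two ICP variants named in this abstract, the sketch below computes the per-correspondence residuals each one minimizes: the Euclidean distance for Point-to-Point and the offset along the target surface normal for Point-to-Plane. The function names and toy data are hypothetical and not taken from the paper; correspondences and normals are assumed to be given already.

```python
import numpy as np

def point_to_point_residuals(src, dst):
    """Euclidean distance between each matched source/target pair."""
    return np.linalg.norm(src - dst, axis=1)

def point_to_plane_residuals(src, dst, normals):
    """Absolute offset of each source point from the tangent plane at its match."""
    return np.abs(np.einsum("ij,ij->i", src - dst, normals))

# Toy data (assumption): target points on the plane z = 0, unit normals along +z.
dst = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
normals = np.tile([0.0, 0.0, 1.0], (3, 1))

# Source = target slid 0.5 along x (in-plane) and lifted 0.1 along z.
src = dst + np.array([0.5, 0.0, 0.1])

p2p = point_to_point_residuals(src, dst)           # penalizes the in-plane slide too
p2l = point_to_plane_residuals(src, dst, normals)  # only the off-plane component
```

In this toy case the Point-to-Plane residual ignores the in-plane slide, whereas the Point-to-Point residual does not. A full ICP loop would additionally re-estimate correspondences and solve for a rigid transform at each iteration.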

R-AGNO-RPN: A LIDAR-Camera Region Deep Network for Resolution-Agnostic Detection

Dec 10, 2020

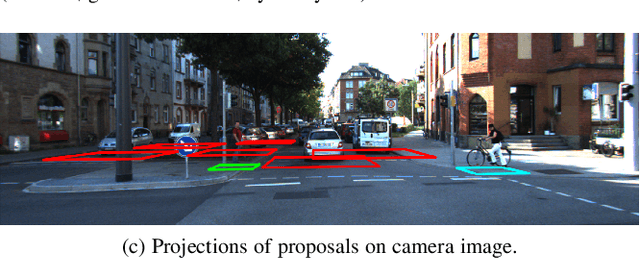

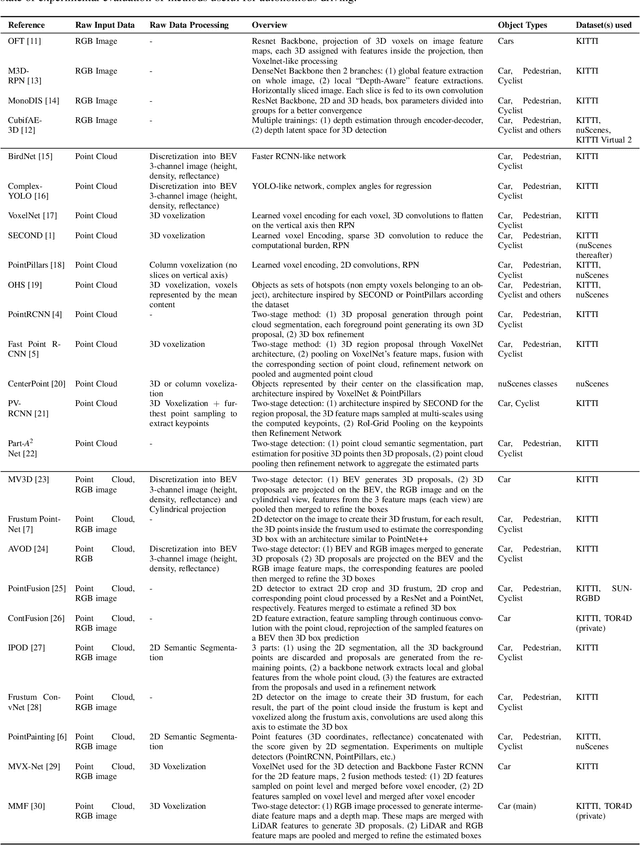

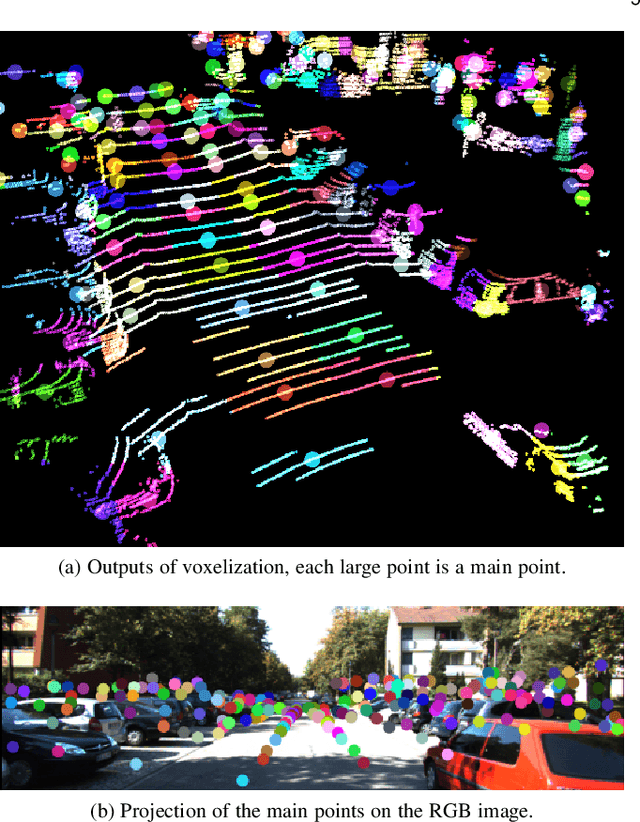

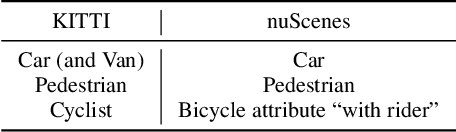

Abstract: Current neural network-based object detection approaches that process LiDAR point clouds are generally trained on data from a single kind of LiDAR sensor. However, their performance decreases when they are tested with data coming from a different LiDAR sensor than the one used for training, i.e., with a different point cloud resolution. In this paper, R-AGNO-RPN, a region proposal network built on the fusion of 3D point clouds and RGB images, is proposed for 3D object detection regardless of point cloud resolution. As our approach is also designed to be applied to low-resolution point clouds, the proposed method focuses on object localization instead of estimating refined boxes on reduced data. The resilience to low-resolution point clouds is obtained through image features accurately mapped to Bird's Eye View and a specific data augmentation procedure that improves the contribution of the RGB images. To show the proposed network's ability to deal with different point cloud resolutions, experiments are conducted on data from both the KITTI 3D Object Detection and the nuScenes datasets. In addition, to assess its performance, our method is compared to PointPillars, a well-known 3D detection network. Experimental results show that even when a point cloud is reduced by $80\%$ of its original points, our method is still able to deliver relevant proposal localization.
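To make the "reduced by 80% of its original points" setting concrete, here is a minimal sketch that keeps a uniformly random 20% of a point cloud. The uniform random scheme, the helper name `subsample_points`, and the synthetic cloud are assumptions for illustration only; the abstract does not say how the reduction is done, and the paper may use a different one (for example, dropping whole lidar beams).

```python
import numpy as np

def subsample_points(points, keep_ratio, seed=0):
    """Keep a uniformly random fraction of the rows of an (N, D) point array."""
    rng = np.random.default_rng(seed)
    n_keep = max(1, int(round(keep_ratio * len(points))))
    idx = rng.choice(len(points), size=n_keep, replace=False)
    # Sort indices so the surviving points keep their original order.
    return points[np.sort(idx)]

# Synthetic stand-in for a lidar sweep: 1000 points with (x, y, z, intensity).
cloud = np.random.default_rng(1).uniform(-50.0, 50.0, size=(1000, 4))

# Drop 80% of the points, i.e. keep 20%.
reduced = subsample_points(cloud, keep_ratio=0.2)
```

Under these assumptions `reduced` holds 200 of the original 1000 points, which could then be fed to a detector to probe how sensitive it is to resolution.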
