Patrick E. Leser

Bayesian Symbolic Regression via Posterior Sampling

Dec 11, 2025

Abstract: Symbolic regression is a powerful tool for discovering governing equations directly from data, but its sensitivity to noise hinders its broader application. This paper introduces a Sequential Monte Carlo (SMC) framework for Bayesian symbolic regression that approximates the posterior distribution over symbolic expressions, enhancing robustness and enabling uncertainty quantification for symbolic regression in the presence of noise. Unlike traditional genetic programming approaches, the SMC-based algorithm combines probabilistic selection, adaptive tempering, and the normalized marginal likelihood to efficiently explore the search space of symbolic expressions, yielding parsimonious expressions with improved generalization. Compared with standard genetic programming baselines, the proposed method handles challenging, noisy benchmark datasets more effectively. Its reduced tendency to overfit and enhanced ability to discover accurate, interpretable equations pave the way for more robust symbolic regression in scientific discovery and engineering design applications.
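The abstract lists adaptive tempering as one ingredient of the SMC sampler. As a generic illustration only (not the authors' implementation), a common way to adapt the tempering schedule is to pick the next exponent by bisection so that the effective sample size (ESS) of the incremental particle weights stays near a target fraction of the particle count. The function names and the `target_fraction` parameter below are hypothetical, and the per-particle log-likelihoods are assumed to be already computed for each candidate expression.

```python
import math

def ess(log_weights):
    """Effective sample size (sum w)^2 / sum(w^2) from unnormalized log-weights."""
    m = max(log_weights)
    w = [math.exp(lw - m) for lw in log_weights]  # shift by max for numerical stability
    s = sum(w)
    return s * s / sum(x * x for x in w)

def next_temperature(log_likelihoods, phi_prev, target_fraction=0.5, tol=1e-10):
    """Bisection for the next tempering exponent phi in (phi_prev, 1] such that the
    incremental weights exp((phi - phi_prev) * loglik) keep ESS near target_fraction * N.
    (Hypothetical helper; ESS is non-increasing in phi, so bisection is valid.)"""
    n = len(log_likelihoods)
    target = target_fraction * n

    def ess_at(phi):
        return ess([(phi - phi_prev) * ll for ll in log_likelihoods])

    # If jumping straight to the full posterior keeps enough diversity, stop tempering.
    if ess_at(1.0) >= target:
        return 1.0
    lo, hi = phi_prev, 1.0  # invariant: ess_at(lo) >= target > ess_at(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if ess_at(mid) >= target:
            lo = mid
        else:
            hi = mid
    return lo
```

For example, `next_temperature([0.0, -1.0, -2.0, -5.0, -10.0], 0.0)` returns an exponent strictly between 0 and 1, chosen so that the reweighted particles keep an ESS of about 2.5 out of 5. Smaller tempering steps are taken where the likelihood surface is sharp.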

Generative Modeling of Random Fields from Limited Data via Constrained Latent Flow Matching

May 19, 2025

Abstract: Deep generative models are promising tools for science and engineering, but their reliance on abundant, high-quality data limits their applicability. We present a novel framework for generative modeling of random fields (probability distributions over continuous functions) that incorporates domain knowledge to supplement limited, sparse, and indirect data. The foundation of the approach is latent flow matching, where generative modeling occurs on compressed function representations in the latent space of a pre-trained variational autoencoder (VAE). Innovations include the adoption of a function decoder within the VAE and the integration of physical/statistical constraints into the VAE training process. In this way, a latent function representation is learned that yields continuous random field samples satisfying domain-specific constraints when decoded, even in data-limited regimes. Efficacy is demonstrated on two challenging applications: wind velocity field reconstruction from sparse sensors and material property inference from a limited number of indirect measurements. Results show that the proposed framework achieves significant improvements in reconstruction accuracy compared with unconstrained methods and enables effective inference with relatively small training datasets, which is intractable without constraints.
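To make "latent flow matching" concrete, here is a minimal sketch of a standard conditional flow matching objective with a straight-line probability path, applied to latent vectors. The abstract does not specify the path or the network, so the linear interpolation, the plain-callable `velocity` model, and all function names are assumptions for illustration. In the described framework the latents would come from the constrained VAE encoder and the sampled latents would be passed to its function decoder; neither is shown here.

```python
import random

def interpolate(z0, z1, t):
    """Point at time t on the straight path from a noise sample z0 to a data latent z1."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]

def flow_matching_loss(velocity, pairs, rng):
    """Monte Carlo estimate of E_t ||v(z_t, t) - (z1 - z0)||^2 with t ~ Uniform(0, 1).
    `velocity` is a hypothetical stand-in for a learned network v(z, t)."""
    total = 0.0
    for z0, z1 in pairs:
        t = rng.random()
        zt = interpolate(z0, z1, t)
        target = [b - a for a, b in zip(z0, z1)]  # velocity of the straight path (constant in t)
        pred = velocity(zt, t)
        total += sum((p - u) ** 2 for p, u in zip(pred, target))
    return total / len(pairs)

def sample(velocity, z0, steps=100):
    """Generate a latent by integrating dz/dt = v(z, t) from t = 0 to t = 1 with forward Euler."""
    z = list(z0)
    dt = 1.0 / steps
    for k in range(steps):
        v = velocity(z, k * dt)
        z = [zi + dt * vi for zi, vi in zip(z, v)]
    return z
```

As a sanity check, if every data latent is its noise sample shifted by a fixed vector, a velocity field that returns that vector achieves zero loss, and `sample` carries any starting point along the same shift.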

Entropy-based adaptive design for contour finding and estimating reliability

May 24, 2021

Abstract: In reliability analysis, methods used to estimate failure probability are often limited by the cost of model evaluations. Many of these methods, including multifidelity importance sampling (MFIS), rely on a computationally efficient surrogate model, such as a Gaussian process (GP), to generate predictions quickly. The quality of the GP fit, particularly in the vicinity of the failure region(s), is critical for supplying accurate failure predictions to such strategies. We introduce an entropy-based GP adaptive design that, when paired with MFIS, provides more accurate failure probability estimates with higher confidence. We show that our greedy data acquisition strategy identifies multiple failure regions better than existing contour-finding schemes. We then extend the method to batch selection without sacrificing accuracy. Illustrative examples are provided on benchmark data, along with an application to an impact damage simulator for National Aeronautics and Space Administration (NASA) spacesuits.
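The core idea of entropy-based contour finding can be sketched as follows: under a GP's Gaussian predictive distribution, each candidate input has some probability of lying in the failure region, and the point whose fail/pass label is most uncertain (maximum binary entropy) is the most informative about the contour. This is a simplified illustration, not the paper's exact criterion or its batch extension. The `predict` callable is a hypothetical stand-in for a fitted GP returning a predictive mean and a positive standard deviation, and "failure" is assumed here to mean the response exceeds `threshold`.

```python
import math

def normal_cdf(x):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def failure_probability(mean, sd, threshold):
    """P(response > threshold) under a Gaussian predictive N(mean, sd^2), sd > 0."""
    return 1.0 - normal_cdf((threshold - mean) / sd)

def classification_entropy(p):
    """Binary entropy (in nats) of the fail/pass classification."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -p * math.log(p) - (1.0 - p) * math.log(1.0 - p)

def select_next(candidates, predict, threshold):
    """Greedy acquisition: return the candidate whose fail/pass label is most uncertain.
    `predict(x) -> (mean, sd)` is a hypothetical surrogate interface."""
    def score(x):
        mean, sd = predict(x)
        return classification_entropy(failure_probability(mean, sd, threshold))
    return max(candidates, key=score)
```

With a toy predictor `predict = lambda x: (x, 1.0)` and `threshold = 0.0`, `select_next([-3.0, -1.0, 0.2, 2.0], predict, 0.0)` picks `0.2`, the candidate whose predicted response sits closest to the contour. Entropy peaks at `ln 2` when the failure probability is one half.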

Inverse Estimation of Elastic Modulus Using Physics-Informed Generative Adversarial Networks

May 20, 2020

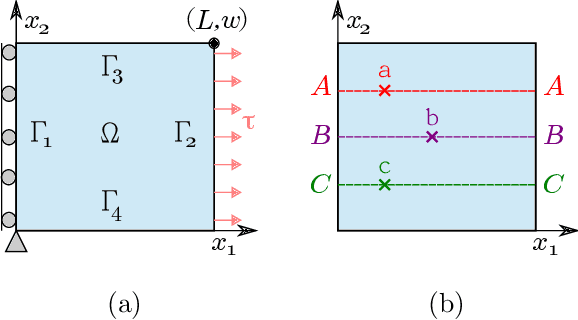

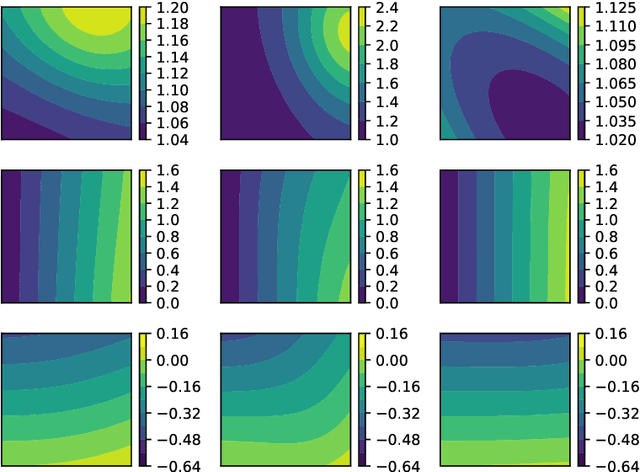

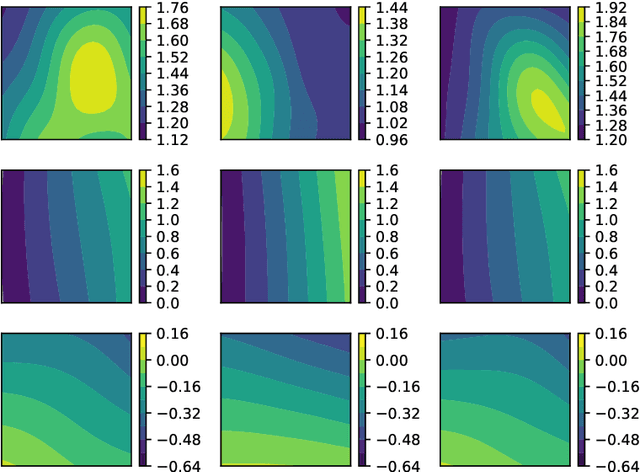

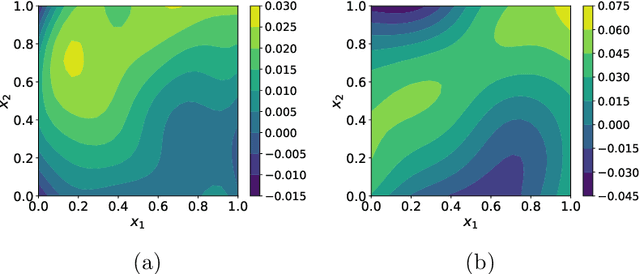

Abstract: While standard generative adversarial networks (GANs) rely solely on training data to learn unknown probability distributions, physics-informed GANs (PI-GANs) encode physical laws in the form of stochastic partial differential equations (PDEs) using automatic differentiation. By relating observed data to unobserved quantities of interest through PDEs, PI-GANs allow underlying probability distributions to be estimated without measuring them directly (i.e., inverse problems). The scalability of GANs allows high-dimensional, spatially dependent probability distributions (i.e., random fields) to be inferred, while incorporating prior information through PDEs keeps the required training datasets relatively small. In this work, PI-GANs are demonstrated for elastic modulus estimation in mechanical testing. Given measured deformation data, the underlying probability distribution of the spatially varying elastic modulus (stiffness) is learned. Two feed-forward deep neural network generators model the deformation and the material stiffness across a two-dimensional domain. Wasserstein GANs with gradient penalty are employed for enhanced training stability. In the absence of explicit stiffness training data, the PI-GAN is shown to generate realistic, physically admissible realizations of material stiffness by incorporating the PDE that relates stiffness to the measured deformation. The statistics (mean, standard deviation, point-wise distributions, correlation length) of the generated stiffness samples agree well with those of the true distribution.
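The abstract states that Wasserstein GANs with gradient penalty are used for stability. The sketch below shows the standard gradient-penalty term, `lam * (||grad D(x_hat)|| - 1)^2`, evaluated at a random interpolate between a real and a generated sample. It is a self-contained illustration, not the authors' code. In particular, a PI-GAN would obtain the critic gradient by automatic differentiation, whereas central finite differences are swapped in here so the example needs no ML framework. The names are hypothetical, and `lam = 10` follows the common WGAN-GP default.

```python
import math
import random

def numerical_gradient(f, x, h=1e-5):
    """Central-difference gradient of scalar function f at point x (list of floats).
    Stand-in for automatic differentiation."""
    grad = []
    for i in range(len(x)):
        xp = list(x)
        xp[i] += h
        xm = list(x)
        xm[i] -= h
        grad.append((f(xp) - f(xm)) / (2.0 * h))
    return grad

def gradient_penalty(critic, real, fake, rng, lam=10.0):
    """WGAN-GP term lam * (||grad critic(x_hat)|| - 1)^2 at the random interpolate
    x_hat = eps * real + (1 - eps) * fake, with eps ~ Uniform(0, 1)."""
    eps = rng.random()
    x_hat = [eps * r + (1.0 - eps) * fk for r, fk in zip(real, fake)]
    g = numerical_gradient(critic, x_hat)
    norm = math.sqrt(sum(gi * gi for gi in g))
    return lam * (norm - 1.0) ** 2
```

For a linear critic `D(x) = 3*x[0] + 4*x[1]` the gradient norm is 5 everywhere, so the penalty is `10 * (5 - 1)**2 = 160`. A critic whose gradient has unit norm incurs no penalty. In training, this term is averaged over a batch and added to the critic loss, which pushes the critic toward the 1-Lipschitz constraint of the Wasserstein formulation.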