Ngai-Man Cheung

Neural Correlates of Face Familiarity Perception

Jul 31, 2022

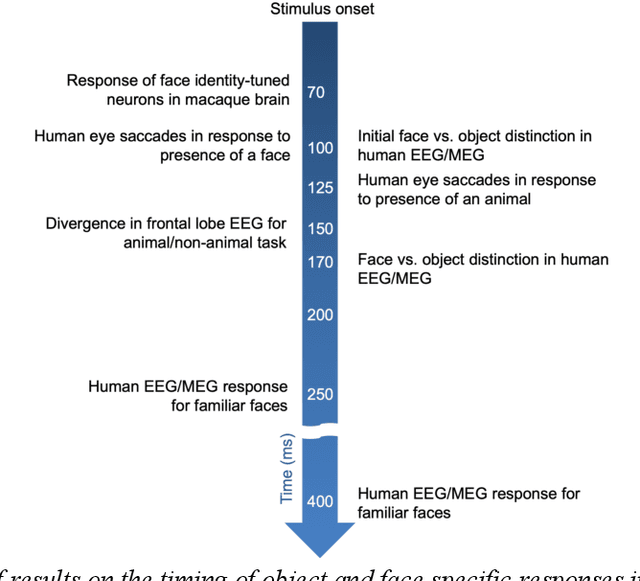

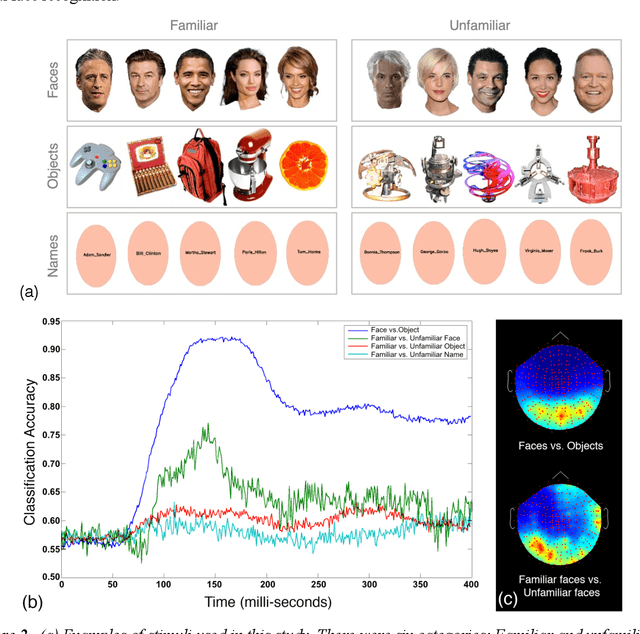

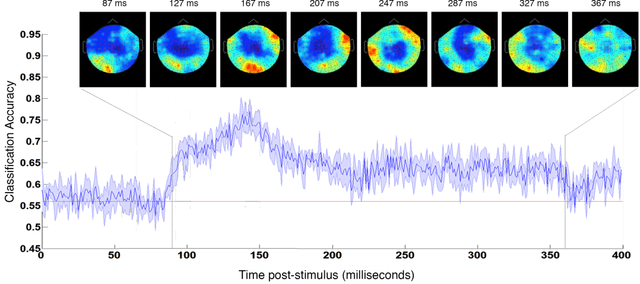

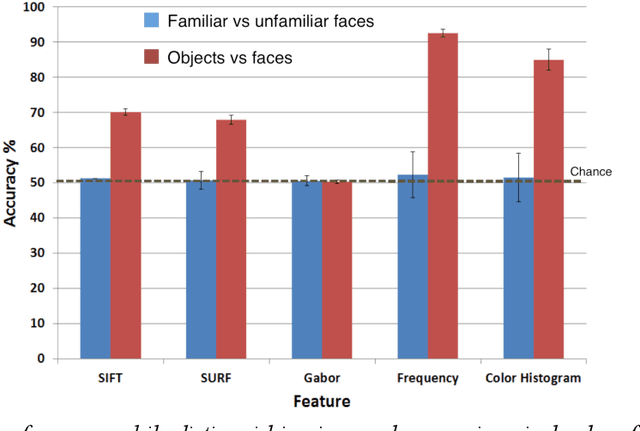

Abstract:In the domain of face recognition, there exists a puzzling timing discrepancy between results from macaque neurophysiology on the one hand and human electrophysiology on the other. Single unit recordings in macaques have demonstrated face identity specific responses in extra-striate visual cortex within 100 milliseconds of stimulus onset. In EEG and MEG experiments with humans, however, a consistent distinction between neural activity corresponding to unfamiliar and familiar faces has been reported to emerge around 250 ms. This points to the possibility that there may be a hitherto undiscovered early correlate of face familiarity perception in human electrophysiological traces. We report here a successful search for such a correlate in dense MEG recordings using pattern classification techniques. Our analyses reveal markers of face familiarity as early as 85 ms after stimulus onset. Low-level attributes of the images, such as luminance and color distributions, are unable to account for this early emerging response difference. These results help reconcile human and macaque data, and provide clues regarding neural mechanisms underlying familiar face perception.

Revisiting Label Smoothing and Knowledge Distillation Compatibility: What was Missing?

Jun 29, 2022

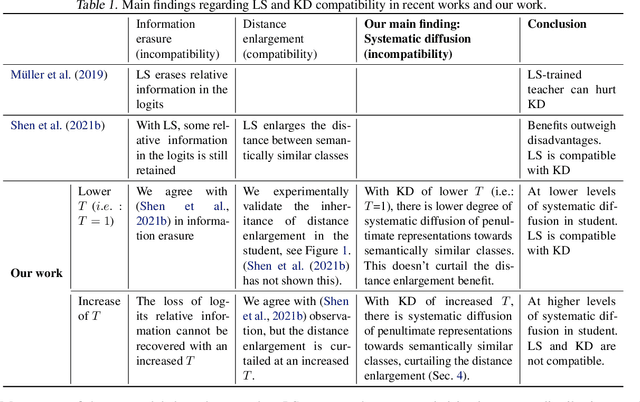

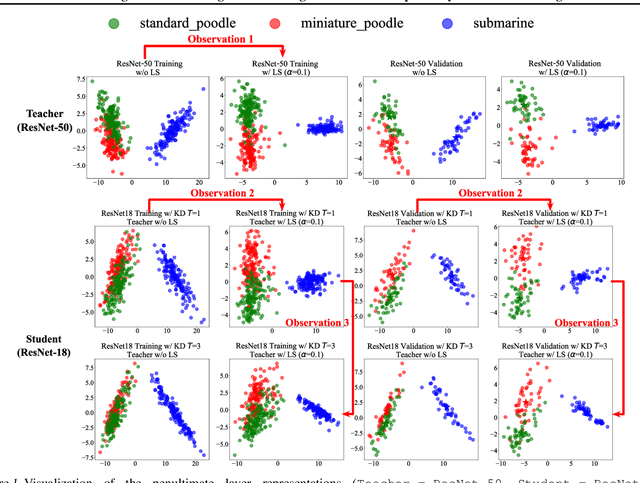

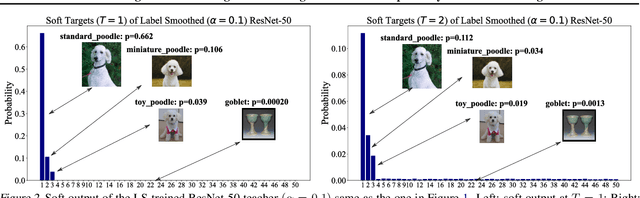

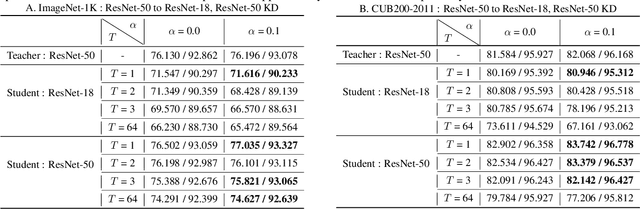

Abstract:This work investigates the compatibility between label smoothing (LS) and knowledge distillation (KD). Contemporary findings addressing this thesis statement take dichotomous standpoints: Muller et al. (2019) and Shen et al. (2021b). Critically, there is no effort to understand and resolve these contradictory findings, leaving the primal question -- to smooth or not to smooth a teacher network? -- unanswered. The main contributions of our work are the discovery, analysis and validation of systematic diffusion as the missing concept which is instrumental in understanding and resolving these contradictory findings. This systematic diffusion essentially curtails the benefits of distilling from an LS-trained teacher, thereby rendering KD at increased temperatures ineffective. Our discovery is comprehensively supported by large-scale experiments, analyses and case studies including image classification, neural machine translation and compact student distillation tasks spanning across multiple datasets and teacher-student architectures. Based on our analysis, we suggest practitioners to use an LS-trained teacher with a low-temperature transfer to achieve high performance students. Code and models are available at https://keshik6.github.io/revisiting-ls-kd-compatibility/

A Closer Look at Few-shot Image Generation

May 08, 2022

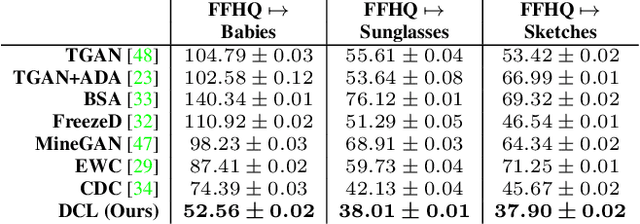

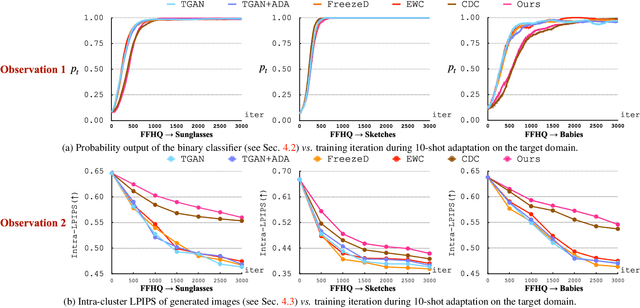

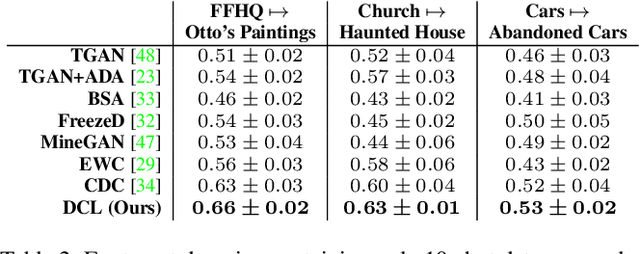

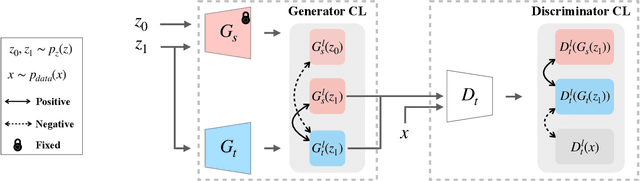

Abstract:Modern GANs excel at generating high quality and diverse images. However, when transferring the pretrained GANs on small target data (e.g., 10-shot), the generator tends to replicate the training samples. Several methods have been proposed to address this few-shot image generation task, but there is a lack of effort to analyze them under a unified framework. As our first contribution, we propose a framework to analyze existing methods during the adaptation. Our analysis discovers that while some methods have disproportionate focus on diversity preserving which impede quality improvement, all methods achieve similar quality after convergence. Therefore, the better methods are those that can slow down diversity degradation. Furthermore, our analysis reveals that there is still plenty of room to further slow down diversity degradation. Informed by our analysis and to slow down the diversity degradation of the target generator during adaptation, our second contribution proposes to apply mutual information (MI) maximization to retain the source domain's rich multi-level diversity information in the target domain generator. We propose to perform MI maximization by contrastive loss (CL), leverage the generator and discriminator as two feature encoders to extract different multi-level features for computing CL. We refer to our method as Dual Contrastive Learning (DCL). Extensive experiments on several public datasets show that, while leading to a slower diversity-degrading generator during adaptation, our proposed DCL brings visually pleasant quality and state-of-the-art quantitative performance.

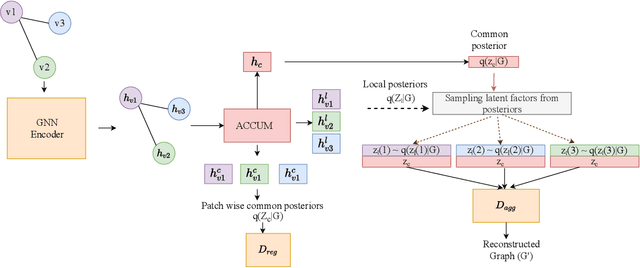

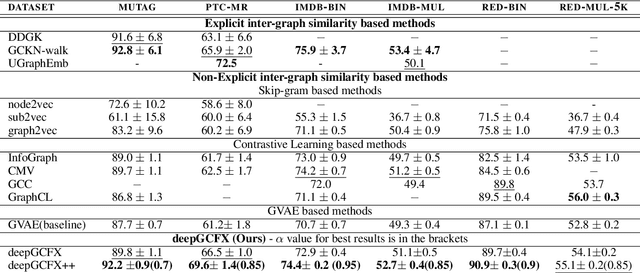

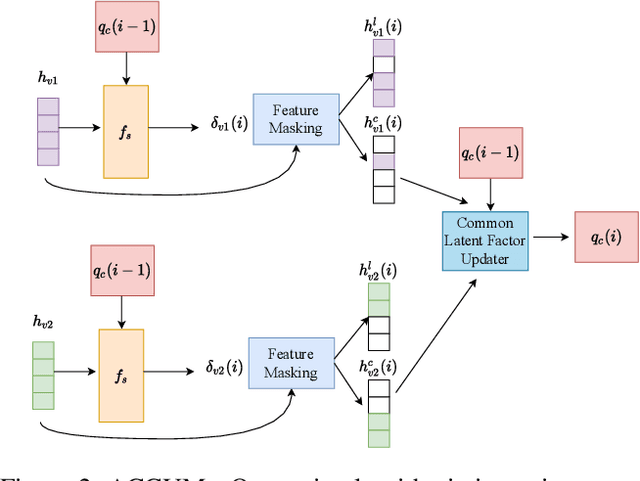

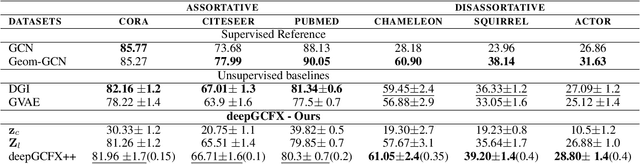

Graph-wise Common Latent Factor Extraction for Unsupervised Graph Representation Learning

Dec 16, 2021

Abstract:Unsupervised graph-level representation learning plays a crucial role in a variety of tasks such as molecular property prediction and community analysis, especially when data annotation is expensive. Currently, most of the best-performing graph embedding methods are based on Infomax principle. The performance of these methods highly depends on the selection of negative samples and hurt the performance, if the samples were not carefully selected. Inter-graph similarity-based methods also suffer if the selected set of graphs for similarity matching is low in quality. To address this, we focus only on utilizing the current input graph for embedding learning. We are motivated by an observation from real-world graph generation processes where the graphs are formed based on one or more global factors which are common to all elements of the graph (e.g., topic of a discussion thread, solubility level of a molecule). We hypothesize extracting these common factors could be highly beneficial. Hence, this work proposes a new principle for unsupervised graph representation learning: Graph-wise Common latent Factor EXtraction (GCFX). We further propose a deep model for GCFX, deepGCFX, based on the idea of reversing the above-mentioned graph generation process which could explicitly extract common latent factors from an input graph and achieve improved results on downstream tasks to the current state-of-the-art. Through extensive experiments and analysis, we demonstrate that, while extracting common latent factors is beneficial for graph-level tasks to alleviate distractions caused by local variations of individual nodes or local neighbourhoods, it also benefits node-level tasks by enabling long-range node dependencies, especially for disassortative graphs.

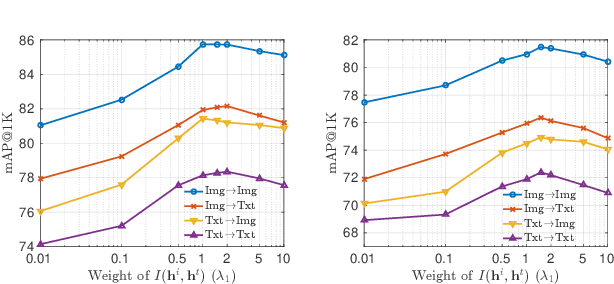

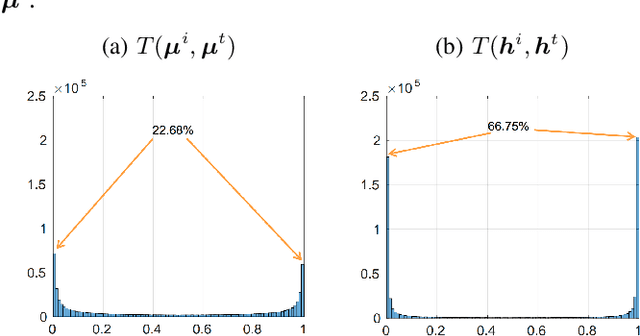

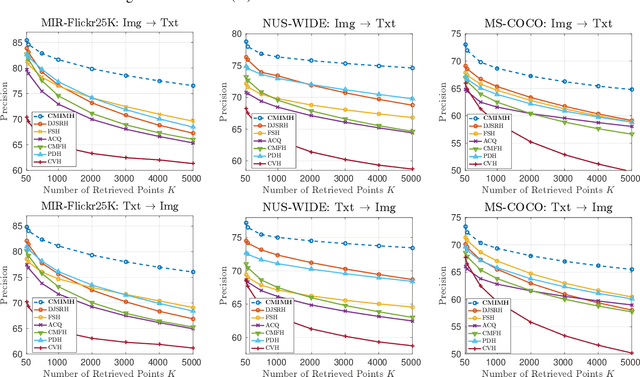

Multi-Modal Mutual Information Maximization: A Novel Approach for Unsupervised Deep Cross-Modal Hashing

Dec 13, 2021

Abstract:In this paper, we adopt the maximizing mutual information (MI) approach to tackle the problem of unsupervised learning of binary hash codes for efficient cross-modal retrieval. We proposed a novel method, dubbed Cross-Modal Info-Max Hashing (CMIMH). First, to learn informative representations that can preserve both intra- and inter-modal similarities, we leverage the recent advances in estimating variational lower-bound of MI to maximize the MI between the binary representations and input features and between binary representations of different modalities. By jointly maximizing these MIs under the assumption that the binary representations are modelled by multivariate Bernoulli distributions, we can learn binary representations, which can preserve both intra- and inter-modal similarities, effectively in a mini-batch manner with gradient descent. Furthermore, we find out that trying to minimize the modality gap by learning similar binary representations for the same instance from different modalities could result in less informative representations. Hence, balancing between reducing the modality gap and losing modality-private information is important for the cross-modal retrieval tasks. Quantitative evaluations on standard benchmark datasets demonstrate that the proposed method consistently outperforms other state-of-the-art cross-modal retrieval methods.

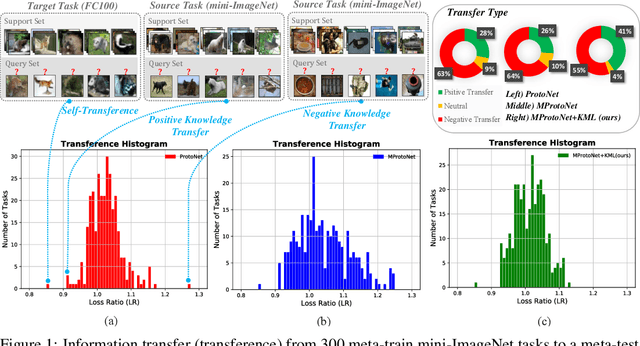

Revisit Multimodal Meta-Learning through the Lens of Multi-Task Learning

Oct 27, 2021

Abstract:Multimodal meta-learning is a recent problem that extends conventional few-shot meta-learning by generalizing its setup to diverse multimodal task distributions. This setup makes a step towards mimicking how humans make use of a diverse set of prior skills to learn new skills. Previous work has achieved encouraging performance. In particular, in spite of the diversity of the multimodal tasks, previous work claims that a single meta-learner trained on a multimodal distribution can sometimes outperform multiple specialized meta-learners trained on individual unimodal distributions. The improvement is attributed to knowledge transfer between different modes of task distributions. However, there is no deep investigation to verify and understand the knowledge transfer between multimodal tasks. Our work makes two contributions to multimodal meta-learning. First, we propose a method to quantify knowledge transfer between tasks of different modes at a micro-level. Our quantitative, task-level analysis is inspired by the recent transference idea from multi-task learning. Second, inspired by hard parameter sharing in multi-task learning and a new interpretation of related work, we propose a new multimodal meta-learner that outperforms existing work by considerable margins. While the major focus is on multimodal meta-learning, our work also attempts to shed light on task interaction in conventional meta-learning. The code for this project is available at https://miladabd.github.io/KML.

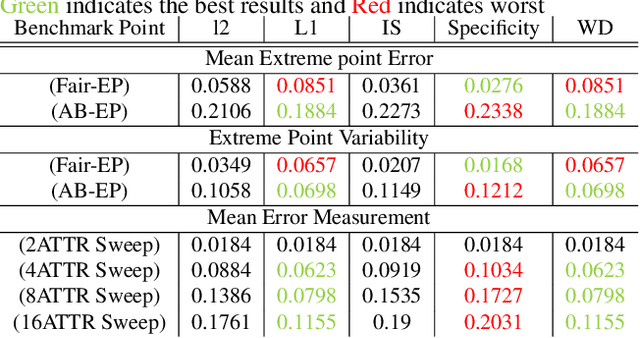

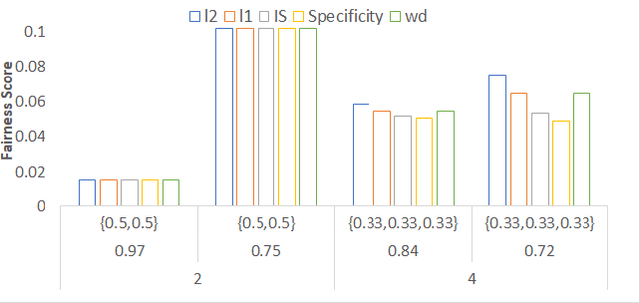

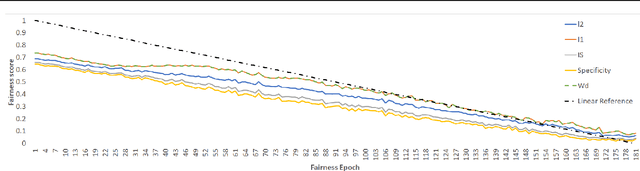

Measuring Fairness in Generative Models

Jul 16, 2021

Abstract:Deep generative models have made much progress in improving training stability and quality of generated data. Recently there has been increased interest in the fairness of deep-generated data. Fairness is important in many applications, e.g. law enforcement, as biases will affect efficacy. Central to fair data generation are the fairness metrics for the assessment and evaluation of different generative models. In this paper, we first review fairness metrics proposed in previous works and highlight potential weaknesses. We then discuss a performance benchmark framework along with the assessment of alternative metrics.

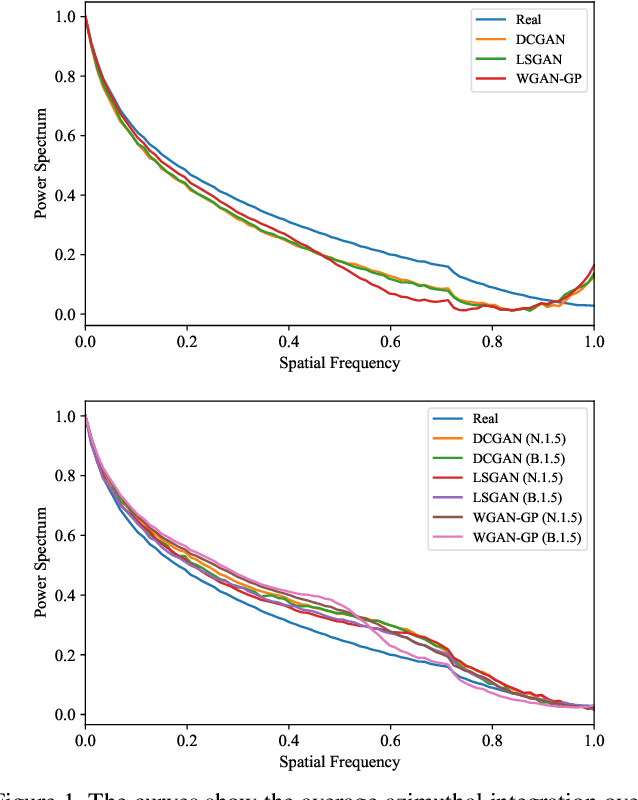

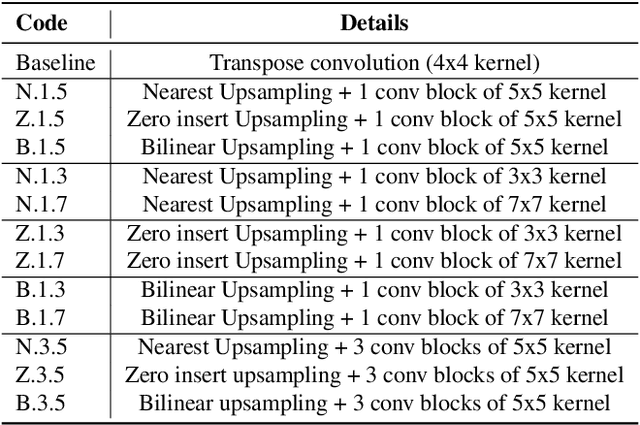

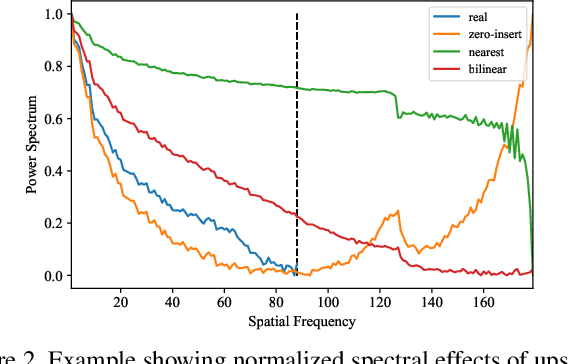

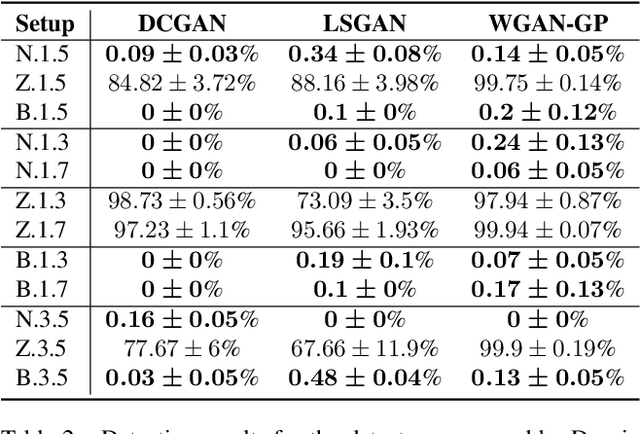

A Closer Look at Fourier Spectrum Discrepancies for CNN-generated Images Detection

Mar 31, 2021

Abstract:CNN-based generative modelling has evolved to produce synthetic images indistinguishable from real images in the RGB pixel space. Recent works have observed that CNN-generated images share a systematic shortcoming in replicating high frequency Fourier spectrum decay attributes. Furthermore, these works have successfully exploited this systematic shortcoming to detect CNN-generated images reporting up to 99% accuracy across multiple state-of-the-art GAN models. In this work, we investigate the validity of assertions claiming that CNN-generated images are unable to achieve high frequency spectral decay consistency. We meticulously construct a counterexample space of high frequency spectral decay consistent CNN-generated images emerging from our handcrafted experiments using DCGAN, LSGAN, WGAN-GP and StarGAN, where we empirically show that this frequency discrepancy can be avoided by a minor architecture change in the last upsampling operation. We subsequently use images from this counterexample space to successfully bypass the recently proposed forensics detector which leverages on high frequency Fourier spectrum decay attributes for CNN-generated image detection. Through this study, we show that high frequency Fourier spectrum decay discrepancies are not inherent characteristics for existing CNN-based generative models--contrary to the belief of some existing work--, and such features are not robust to perform synthetic image detection. Our results prompt re-thinking of using high frequency Fourier spectrum decay attributes for CNN-generated image detection. Code and models are available at https://keshik6.github.io/Fourier-Discrepancies-CNN-Detection/

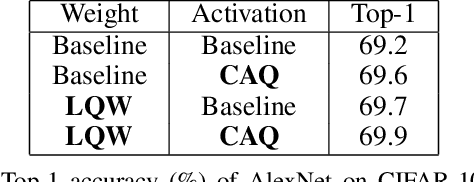

Direct Quantization for Training Highly Accurate Low Bit-width Deep Neural Networks

Dec 26, 2020

Abstract:This paper proposes two novel techniques to train deep convolutional neural networks with low bit-width weights and activations. First, to obtain low bit-width weights, most existing methods obtain the quantized weights by performing quantization on the full-precision network weights. However, this approach would result in some mismatch: the gradient descent updates full-precision weights, but it does not update the quantized weights. To address this issue, we propose a novel method that enables {direct} updating of quantized weights {with learnable quantization levels} to minimize the cost function using gradient descent. Second, to obtain low bit-width activations, existing works consider all channels equally. However, the activation quantizers could be biased toward a few channels with high-variance. To address this issue, we propose a method to take into account the quantization errors of individual channels. With this approach, we can learn activation quantizers that minimize the quantization errors in the majority of channels. Experimental results demonstrate that our proposed method achieves state-of-the-art performance on the image classification task, using AlexNet, ResNet and MobileNetV2 architectures on CIFAR-100 and ImageNet datasets.

Unsupervised Deep Cross-modality Spectral Hashing

Aug 18, 2020

Abstract:This paper presents a novel framework, namely Deep Cross-modality Spectral Hashing (DCSH), to tackle the unsupervised learning problem of binary hash codes for efficient cross-modal retrieval. The framework is a two-step hashing approach which decouples the optimization into (1) binary optimization and (2) hashing function learning. In the first step, we propose a novel spectral embedding-based algorithm to simultaneously learn single-modality and binary cross-modality representations. While the former is capable of well preserving the local structure of each modality, the latter reveals the hidden patterns from all modalities. In the second step, to learn mapping functions from informative data inputs (images and word embeddings) to binary codes obtained from the first step, we leverage the powerful CNN for images and propose a CNN-based deep architecture to learn text modality. Quantitative evaluations on three standard benchmark datasets demonstrate that the proposed DCSH method consistently outperforms other state-of-the-art methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge