Namid Stillman

TABL-ABM: A Hybrid Framework for Synthetic LOB Generation

Oct 26, 2025

Abstract:The recent application of deep learning models to financial trading has heightened the need for high-fidelity financial time series data. Such synthetic data can be used to supplement historical data when training large trading models. State-of-the-art generative models often rely on huge amounts of historical data and large, complicated architectures. These models range from autoregressive and diffusion-based models through to architecturally simpler models such as the temporal-attention bilinear layer (TABL). Agent-based approaches to modelling limit order book dynamics can also recreate trading activity through mechanistic models of trader behaviours. In this work, we demonstrate how a popular agent-based framework for simulating intraday trading activity, the Chiarella model, can be combined with one of the most performant deep learning models for forecasting multivariate time series, the TABL model. This forecasting model is coupled to a simulation of a matching engine with a novel method for simulating deleted order flow. Our simulator gives us the ability to test the generative abilities of the forecasting model using stylised facts. Our results show that this methodology generates realistic price dynamics; however, on closer analysis, parts of the market's microstructure are not accurately recreated, highlighting the need to include more sophisticated agent behaviours in the modelling framework to help account for tail events.

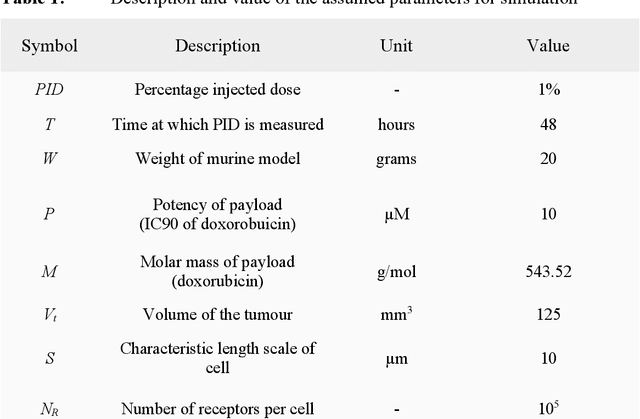

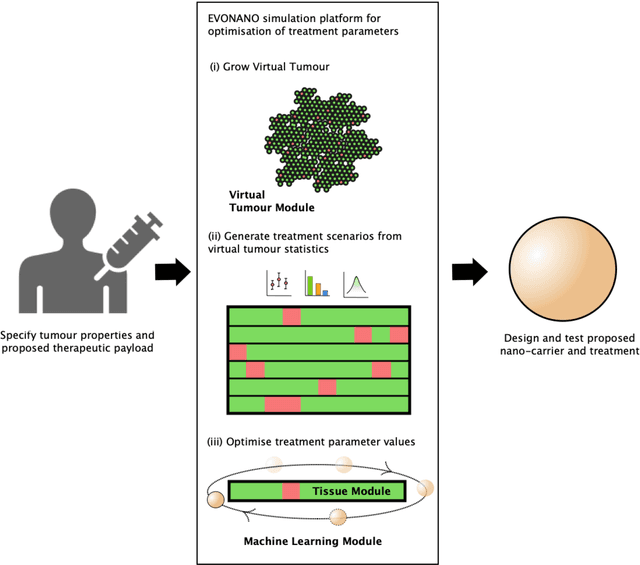

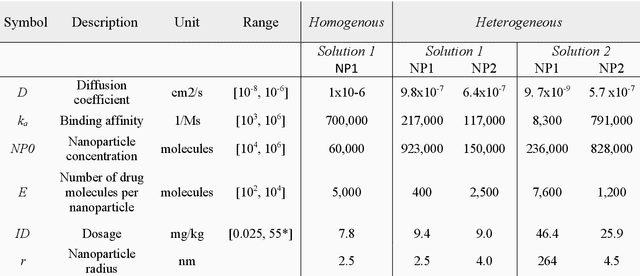

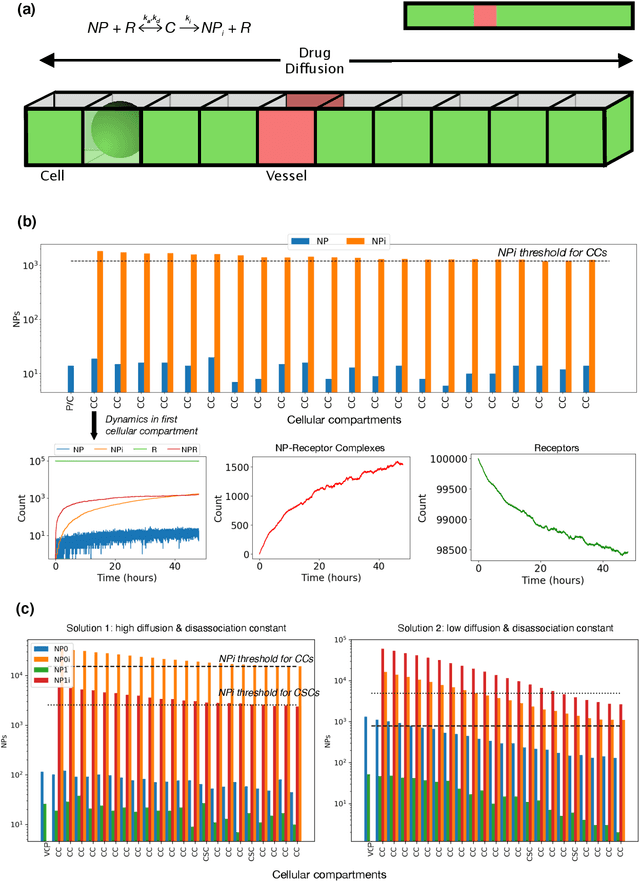

Evolutionary computational platform for the automatic discovery of nanocarriers for cancer treatment

Feb 01, 2021

Abstract:We present the EVONANO platform for the evolution of nanomedicines with application to anti-cancer treatments. EVONANO includes a simulator to grow tumours, extract representative scenarios, and then simulate nanoparticle transport through these scenarios to predict nanoparticle distribution. The nanoparticle designs are optimised using machine learning to efficiently find the most effective anti-cancer treatments. We demonstrate our platform with two examples optimising the properties of nanoparticles and treatment to selectively kill cancer cells over a range of tumour environments.

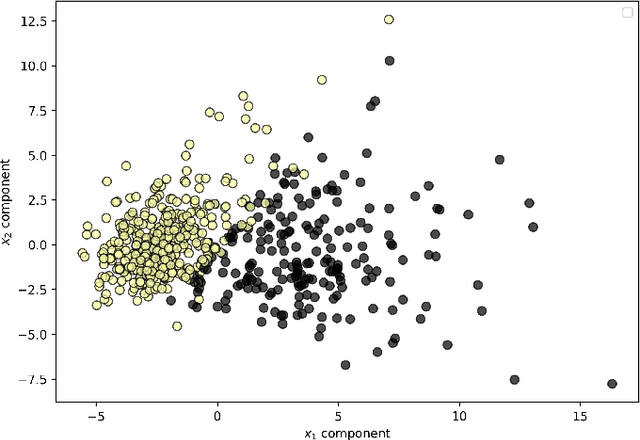

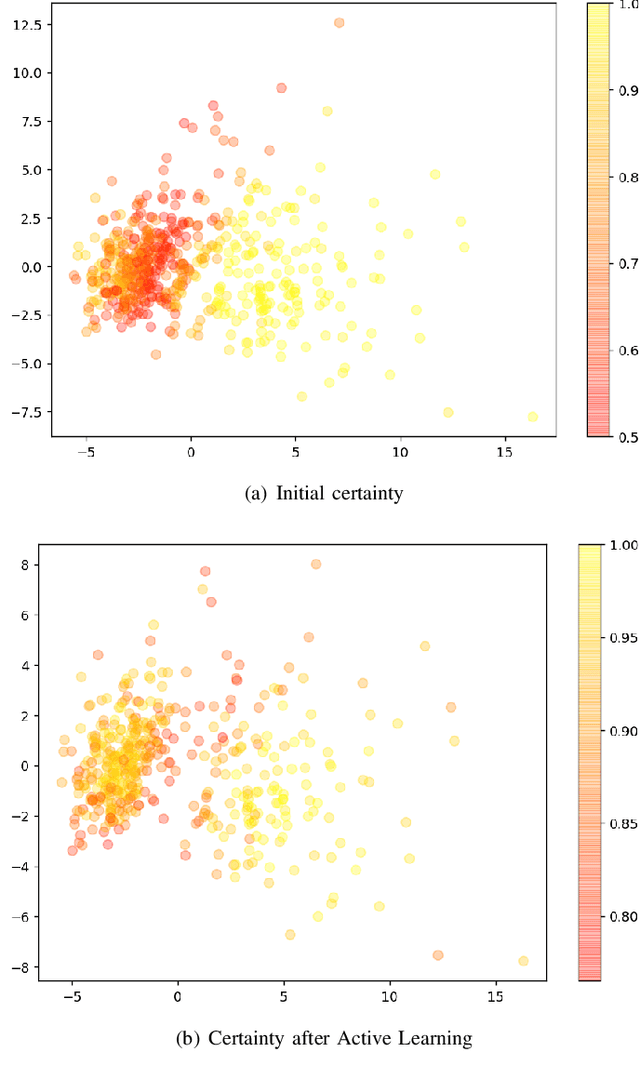

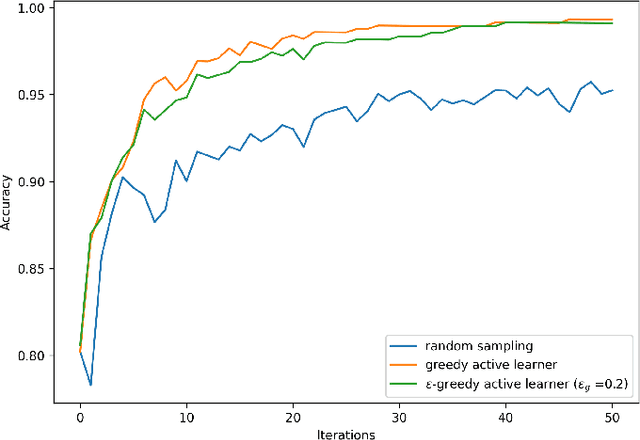

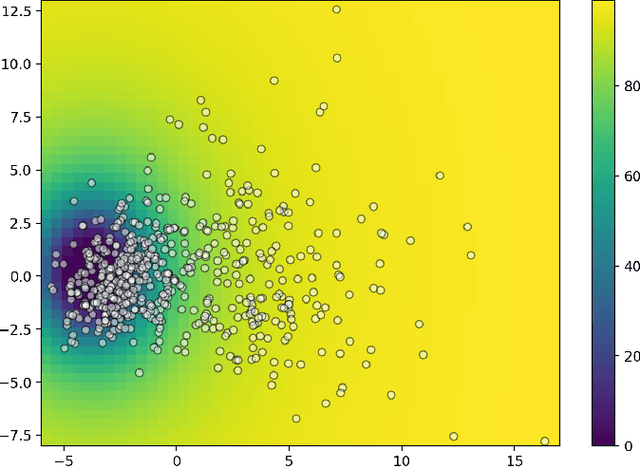

Model Exploration with Cost-Aware Learning

Oct 09, 2020

Abstract:We present an extension to active learning routines in which non-constant costs are explicitly considered. This work considers both known and unknown costs and introduces the term \epsilon-frugal for learners that not only consider minimizing total costs but are also able to explore high-cost regions of the sample space. We demonstrate our extension on a well-known machine learning dataset and find that our \epsilon-frugal learners outperform both learners with known costs and random sampling.
