Mohamed Boussaha

Supervized Segmentation with Graph-Structured Deep Metric Learning

May 10, 2019

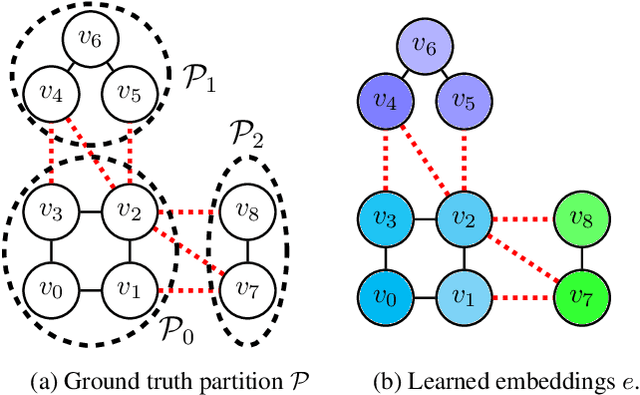

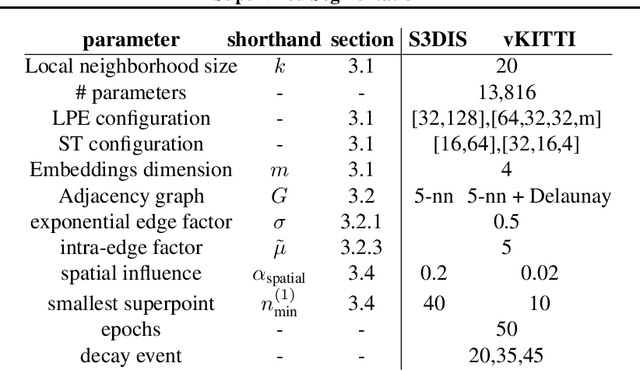

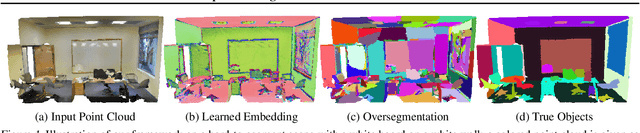

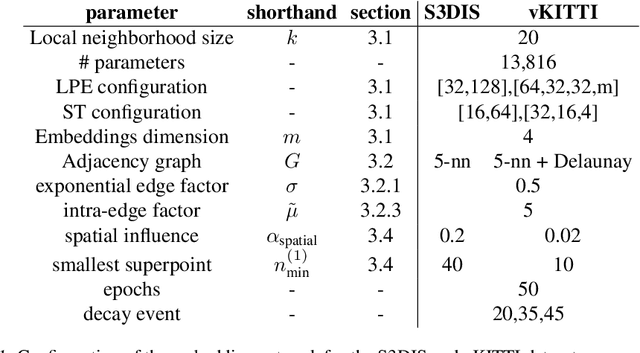

Abstract: We present a fully supervized method for learning to segment data structured by an adjacency graph. We introduce the graph-structured contrastive loss, a loss function structured by a ground-truth segmentation. It promotes learning vertex embeddings that are homogeneous within the desired segments and have high contrast at their interfaces. Thus, computing a piecewise-constant approximation of such embeddings produces a graph partition close to the objective segmentation. This loss is fully backpropagable, which allows us to learn vertex embeddings with deep learning algorithms. We evaluate our methods on a 3D point cloud oversegmentation task, setting a new state of the art by a large margin. These results are based on the published work of Landrieu and Boussaha (2019).
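
To make the idea of a loss "structured by a ground-truth segmentation" concrete, here is a minimal sketch of an edge-wise contrastive loss. It is a generic margin-based stand-in, not the formulation from the paper: the function name `edge_contrastive_loss`, the `margin` parameter, and the squared penalties are all assumptions chosen for illustration. Edges whose endpoints share a ground-truth segment are penalized for differing embeddings, while edges crossing a segment boundary are penalized for embeddings closer than the margin.

```python
import math


def edge_contrastive_loss(embeddings, edges, labels, margin=1.0):
    """Illustrative edge-wise contrastive loss (hypothetical, not the paper's exact loss).

    embeddings: list of per-vertex embedding vectors (lists of floats)
    edges:      list of (i, j) vertex index pairs from the adjacency graph
    labels:     ground-truth segment id for each vertex
    margin:     assumed minimum distance wanted across segment boundaries
    """
    total = 0.0
    for i, j in edges:
        dist = math.dist(embeddings[i], embeddings[j])
        if labels[i] == labels[j]:
            # Intra-segment edge: pull the two embeddings together.
            total += dist ** 2
        else:
            # Inter-segment edge: push embeddings at least `margin` apart.
            total += max(0.0, margin - dist) ** 2
    return total / len(edges) if edges else 0.0


# A 4-vertex chain graph with two ground-truth segments.
chain_edges = [(0, 1), (1, 2), (2, 3)]
chain_labels = [0, 0, 1, 1]

# Embeddings constant within segments and well separated across the boundary.
good = [[0.0, 0.0], [0.0, 0.0], [2.0, 0.0], [2.0, 0.0]]
# Embeddings that ignore the boundary entirely.
flat = [[0.0, 0.0]] * 4

good_loss = edge_contrastive_loss(good, chain_edges, chain_labels)
flat_loss = edge_contrastive_loss(flat, chain_edges, chain_labels)
```

In this toy case the well-separated embeddings incur no penalty, while the flat embeddings are penalized only on the single boundary edge. A loss of this shape is built from differentiable pieces almost everywhere, which is what makes training the embeddings by backpropagation possible.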

Point Cloud Oversegmentation with Graph-Structured Deep Metric Learning

Apr 03, 2019

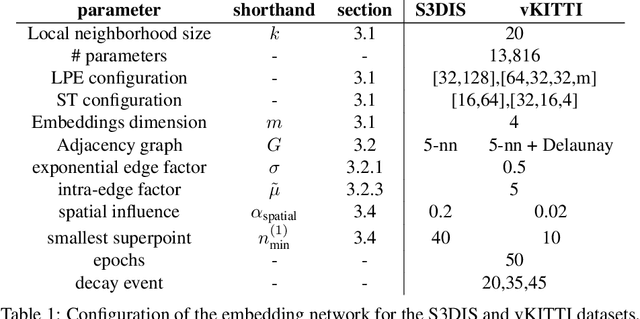

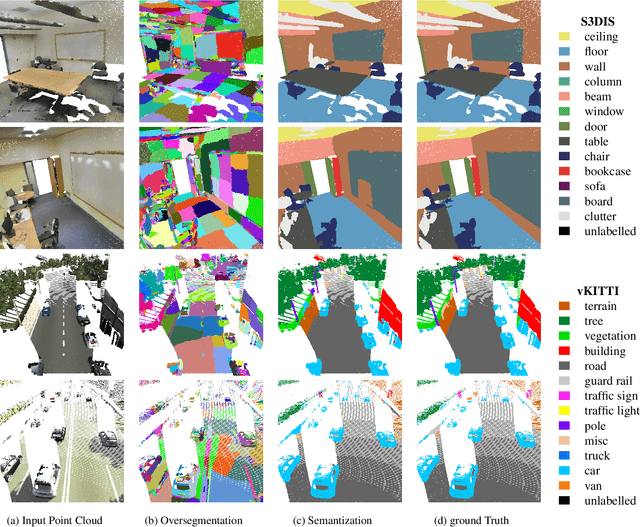

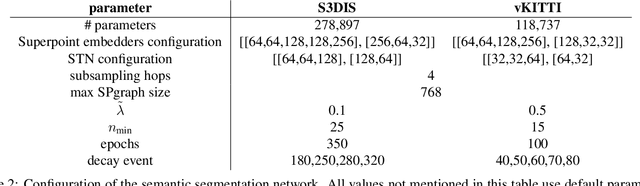

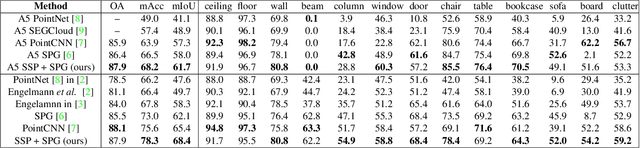

Abstract: We propose a new supervized learning framework for oversegmenting 3D point clouds into superpoints. We cast this problem as learning deep embeddings of the local geometry and radiometry of 3D points, such that the borders of objects present high contrast. The embeddings are computed using a lightweight neural network operating on the points' local neighborhood. Finally, we formulate point cloud oversegmentation as a graph partition problem with respect to the learned embeddings. This new approach allows us to set a new state of the art in point cloud oversegmentation by a significant margin, on a dense indoor dataset (S3DIS) and a sparse outdoor one (vKITTI). Our best solution requires over five times fewer superpoints to reach performance similar to that of previously published methods on S3DIS. Furthermore, we show that our framework can be used to improve superpoint-based semantic segmentation algorithms, setting a new state of the art for this task as well.
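
The final step, partitioning the adjacency graph with respect to the learned embeddings, can be pictured with a much simpler stand-in than the graph partition problem the abstract refers to. The sketch below assumes that the embeddings are already computed and simply merges neighboring points whose embeddings are closer than a threshold, using a union-find structure. The function name `partition_by_contrast` and the `threshold` parameter are hypothetical, and this thresholding heuristic is not the authors' solver.

```python
import math


def partition_by_contrast(embeddings, edges, threshold=0.5):
    """Group vertices into superpoint-like components (illustrative heuristic only).

    Two adjacent vertices are merged when their embedding distance is at most
    `threshold`; high-contrast edges are treated as segment borders.
    """
    parent = list(range(len(embeddings)))

    def find(v):
        # Find the component root, halving the path as we go.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    for i, j in edges:
        if math.dist(embeddings[i], embeddings[j]) <= threshold:
            root_i, root_j = find(i), find(j)
            if root_i != root_j:
                parent[root_j] = root_i

    # Relabel component roots as consecutive ids, in order of first appearance.
    ids = {}
    return [ids.setdefault(find(v), len(ids)) for v in range(len(embeddings))]


# Four points on a chain; the embedding jumps between points 1 and 2.
point_embeddings = [[0.0, 0.0], [0.1, 0.0], [2.0, 0.0], [2.1, 0.0]]
point_edges = [(0, 1), (1, 2), (2, 3)]
superpoints = partition_by_contrast(point_embeddings, point_edges)
```

Here the only high-contrast edge is the one between points 1 and 2, so the four points split into two components. The better the embeddings separate objects at their borders, the fewer components are needed to respect object boundaries, which is the trade-off the superpoint counts in the abstract measure.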
